Introduction

Clinical research professionals (CRPs), e.g., research coordinators, research assistants, research project managers, and research nurses, are integral to the conduct of safe, ethical, and high-quality clinical research [Reference Freel, Snyder and Bastarache1,Reference Davis, Hull, Grady, Wilfond and Henderson2]. CRPs perform a wide variety of roles that require a high level of competency and can influence research quality, efficiency, and participant safety. CRPs help ensure validity, reliability, and adherence to the Good Clinical Practice (GCP) principles in research [Reference Fedor and Cola3]. A highly proficient CRP workforce requires ongoing education and training due to the complex and ever-changing clinical research environment [Reference Mori, Mullen and Hill4–Reference Speicher, Fromell and Avery6].

Providing CRPs with relevant, competency-based continuing education and professional development opportunities is of paramount importance to the overall success of the clinical research enterprise within Academic Medical Centers (AMCs). Successful implementation of continuing education for CRPs positively impacts research quality, data reliability, and protection of the rights, safety, and welfare of research participants [Reference Davis, Hull, Grady, Wilfond and Henderson2–Reference Mori, Mullen and Hill4,Reference Speicher, Fromell and Avery6–Reference Isaacman and Reynolds9].

Strong continuing education programs are needed, but there is no standard pedagogy [Reference Speicher, Fromell and Avery6,Reference Rojewski, Choi and Hill10,Reference Rojewski, Choi and Hill11]. Recent Clinical and Translational Science Award (CTSA) Notices of Funding Opportunity (NOFOs) advise that continuing education programs for members of the research team be aligned with adult learning principles and be “tailored, practical, and interactive…designed around relevant, real-world scenarios to be solved individually or by teams,” emphasizing that “clinical research is a collaborative endeavor.” These notices encourage collaboration across CTSA hubs to “streamline resources” and “avoid redundancy,” and they require training and mentoring for CRPs as part of professional development [12,13]. The NOFOs thus promote training that moves beyond static instruction toward active, interactive, and collaborative real-world learning environments that support professional development.

Research Professionals Networks (RPNs) have played a key role within CTSAs, providing infrastructure for networking and education for the CRP workforce [Reference Brandt, Bosch and Bayless14,Reference Baedorf-Kassis, Winkler, Glanforti and Needler15]. In 2017, Boston University Medical Campus/Boston Medical Center (BUMC/BMC) launched the RPN Workshops as the primary educational initiative of its RPN, to specifically address the need for CRP continuing education. The workshops were designed to be peer-led, based on identified competencies from the Joint Task Force (JTF) Core Competency Framework [Reference Sonstein and Jones16], and interactive, using methods that support adult learning, enabling CRPs to engage with and learn from each other. The workshops were intentionally not based on a set curriculum but instead were intended to supplement and extend existing formal curriculum-based training on clinical research and GCP, with topics informed by the reported needs and interests of the RPN.

Methods that support adult learning are based on the adult learning theory known as “andragogy,” developed by Malcolm S. Knowles, which outlines basic assumptions about adult learners and principles for implementing adult learning [17]. These assumptions and principles include the ideas that adult learners benefit most from learning that is based on their experiences, relates to their goals and objectives, and is applicable to their jobs. Additionally, adult learners are self-motivated and benefit from interactive, problem-based training and from being involved in developing their own training [18].

In 2018, BUMC/BMC partnered with the University of Vermont Larner College of Medicine to offer the RPN Workshops inter-institutionally, increasing the presenter and learner pools and coordinating training offerings across institutions. Workshop participants and presenters embraced this collaboration, which catalyzed the further expansion of the RPN Workshop initiative to include the University of Florida in November 2019, and the Medical University of South Carolina in March 2021.

The RPN Workshops continued to evolve over the years and are now a collaborative, multi-institutional framework for ongoing continuing education and professional development for CRPs designed around five key components:

1. Interactive workshop format to support adult learning and enable participants to practice with new material while engaging with and learning from others;
2. Topics, skill leveling, and objective development based on the JTF Core Competency Framework for Clinical Research Professionals;
3. Peer-led delivery to provide content based on real-world “boots on the ground” experience;
4. Professional development and continuing education opportunities for both attendees and presenters;
5. Inter-institutional collaboration to expand reach and perspective, share resources, and promote CRP engagement and network development.

In this manuscript, we present our RPN Workshop framework and the quantitative and qualitative results of a four-year (Academic Year [AY] 2017–18 through AY 2020–21) utilization-focused evaluation, and we discuss the implications for CRP continuing education. We anticipate that other CTSA programs and AMCs may benefit from our experience and draw on the information presented to strengthen continuing education and professional development programs for CRPs at their institutions.

Methods

Implementation and management of RPN workshops: operations, format, and activities

RPN Workshops are held monthly from September through June. A workshop planning team, composed of one to two individuals from each collaborating institution, develops the workshop calendar and identifies topics and peer presenters. Planning team members have experience in workforce development, in clinical research operations, and as CRPs, and they hold central roles related to operations, research quality, and training and education across their respective institutions. Members of the planning team meet with peer presenters to guide session development and provide feedback on content, activities, and presentation. The planning team also works collaboratively to administer the program, communicate and promote workshop participation, and oversee allocation of continuing education credits.

Each workshop is 75 or 90 minutes long and includes both didactic and interactive learning. Presenters are strongly encouraged to devote 30%–50% of workshop time to activities. As mentioned previously, RPN Workshops are based on the principles and guidance of adult learning theory [18]. In practice, this means incorporating hands-on activities designed to promote peer-to-peer inter-institutional networking, sharing of best practices and experiences, and discussion of new approaches or affirmation of current ones. Activities support the presented content by enabling participants to engage with the material through case studies, real-world examples, and simulated scenarios. Tools and methods for workshop activities include Zoom (breakout rooms, chat), group brainstorming and problem-solving (Zoom whiteboard and Google Jamboard), polling (Zoom, PollEverywhere, Slido), and large-group discussions. Discussions and “report backs” from the breakout rooms create opportunities for CRPs to reflect on the content and its applicability to their roles.
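To make the 30%–50% guideline concrete, the short Python sketch below converts it into minutes for the two session lengths described above. The helper name `activity_minutes` is hypothetical and is not part of any RPN planning tool; it only illustrates the arithmetic a presenter might do when budgeting activity time.

```python
# Sketch: convert the recommended 30%-50% activity share into minutes
# for the two session lengths described in the text (75 and 90 minutes).
# activity_minutes is a hypothetical helper, not part of any RPN tooling.

def activity_minutes(session_minutes: int, low_share: float = 0.30,
                     high_share: float = 0.50) -> tuple[float, float]:
    """Return the (minimum, maximum) minutes to reserve for activities."""
    if not 0 <= low_share <= high_share <= 1:
        raise ValueError("shares must satisfy 0 <= low <= high <= 1")
    return session_minutes * low_share, session_minutes * high_share


for length in (75, 90):
    low, high = activity_minutes(length)
    print(f"{length}-minute workshop: {low:g}-{high:g} minutes of activities")
```

For a 75-minute workshop this works out to roughly 22.5 to 37.5 minutes of activities, and for a 90-minute workshop to 27 to 45 minutes.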

The planning team also works with volunteer peer facilitators who help guide the activities and discussion within the breakout rooms. Facilitators are key in helping to orient the breakout room group to the activity and encourage individuals to work together, interact, and contribute to the small group discussions. The planning team provides the facilitators with a guidance document that describes the facilitator’s role, specific workshop/activity materials, and teaching prompts in advance of the workshop.

The workshop topic selection process is dynamic and allows workshop topics to be responsive to the research needs at the collaborating institutions, as well as to the constantly changing environment in clinical and human research. Topics are derived from multiple sources, including participant evaluations and suggestions, institutional needs and priorities, core information on study conduct (e.g., GCP, informed consent), knowledge gaps identified by the collaborating institutions’ Quality Assurance programs, and changes to research regulations, guidance, policies, and best practices. See Appendix 1 for the workshop titles from AY 2017–18 through AY 2020–21. Topics are broadly relevant to all CRPs and do not focus on a single institution’s policies. However, institution-specific links to resources and policies for each collaborating institution are provided within the presentation slides as needed.

The workshops are peer-led by CRP presenters who are identified by two primary methods: “call for presenters” emails at the beginning of the academic year and as needed throughout the year, and individual invitations to CRPs who have known expertise in each content area. Peer presenters have practical experience with the topic and an interest in personal and professional development, developing their presentation skills, and enhancing their expertise on the topic. When possible, workshops are given by presenters from at least two of the collaborating institutions to elevate the experience for both the learners and the peer presenters by facilitating intra- and inter-institutional collaboration and broadening perspectives for all involved.

Individual presenters are introduced and connected to each other by the workshop planning team. During presenter team meetings, the workshop planning team and presenters brainstorm workshop aims and activities, which are informed by the JTF Core Competency Framework. Following these meetings, the presenter guide and an outline of timelines and deadlines are shared with the presenting team.

Participants are surveyed both immediately and at 6–8 weeks after the workshop. Survey data is analyzed to understand the impact on workforce development. Survey data for each workshop is also summarized and provided to presenters to use for their own professional development, to identify what went well, and to use constructive feedback to improve in the future. This data is utilized to implement ongoing programmatic quality improvements.

Continuing education credits are provided to attendees who hold certifications from the Association of Clinical Research Professionals or the Society of Clinical Research Associates. These credits help certified CRPs maintain their professional certifications (e.g., CCRP, CCRC) as part of their ongoing professional development.

Materials from each workshop, including video recordings, presentation materials, and workshop activities, are archived and publicly available on the BUMC/BMC Clinical Research Resources Office website [19]. This growing library of workshop videos and materials promotes continuing education for RPN members who are unable to attend a workshop.

Connecting the institutions

To achieve the highest impact from the inter-institutional workshop design, learners from multiple institutions must be connected with each other and with the presenters in real time. From 2017 to early 2020, Zoom was used to connect classrooms of in-person learners at the collaborating institutions. Attendees viewed the presentation as a group from their institution’s classroom, where the presenter was either physically present or shown on a screen when presenting from another institution. While this arrangement did promote discussion and learner engagement, engagement was mostly limited to connections within each classroom. The method of connecting the institutions changed significantly when work-from-home requirements during the COVID-19 pandemic forced participants to access Zoom individually rather than as a group assembled in a classroom. This shift enabled learners to engage more easily and directly with their peers at the collaborating institutions and with the presenters. The impact of this change was assessed in the evaluation.

Evaluation

Evaluations were conducted for RPN Workshops occurring between September 2017 and June 2021, inclusive of four academic years (AYs 2017–18, 2018–19, 2019–20 and 2020–21). The survey population included individuals who attended a workshop from within the collaborating institutions. Two anonymous Qualtrics surveys were sent to all registered participants via email after each workshop.

- Immediate Evaluation Survey: This survey was emailed to participants immediately after each workshop and contained closed- and open-ended questions assessing demographics, quality (overall, workshop activities, teaching strategies, teaching effectiveness), and relevance of the topic to respondents’ work setting/role. Beginning in January 2020, two questions were added to assess participants’ experience with the technology used to connect the collaborating institutions and the value they placed on the inter-institutional collaboration from a content perspective.

- Follow-up Survey: This survey was emailed to participants 6–8 weeks after each workshop and contained closed- and open-ended questions assessing application of learnings, motivation and incentives to implement them, whether the participant sought additional learning on the topic, and barriers and incentives to applying learnings in the work setting.

See Appendix 2 for both surveys.

This research was reviewed by the BUMC/BMC Institutional Review Board and determined to meet exemption category 2 under the federal regulations.

Quantitative analysis

Survey responses were exported to two Excel databases, one for the Immediate Evaluation Survey and one for the Follow-up Survey. The databases were cleaned, and some variables were recoded or truncated to facilitate additional analyses. Numeric values for categorical variables were also entered into the databases for subsequent analyses. For each database, descriptive analyses were conducted and stratified by key factors (e.g., AY, institution, pre- and post-March 2020). Continuous variables were summarized using the median, mean, SD, and range and compared using Student’s t test or ANOVA. Categorical variables were summarized in contingency tables and compared using Fisher’s exact or chi-square tests. Statistical significance was set at p < 0.05, and all quantitative calculations were performed using the NCSS statistical package [20].
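As an illustration of the categorical comparisons described above, the following minimal Python sketch computes a Pearson chi-square statistic and its degrees of freedom from an r × c contingency table. It is not the authors’ procedure (all calculations were performed in NCSS), and the function names are hypothetical. The sketch also reports the smallest expected count, because a common rule of thumb, assumed here rather than stated by the authors, is to prefer Fisher’s exact test when any expected count falls below 5.

```python
# Sketch of a Pearson chi-square test of independence on an r x c
# contingency table, plus the common "expected count < 5" check often used
# to prefer Fisher's exact test. Illustrative only: the evaluation used NCSS,
# and the 5-count threshold is an assumed rule of thumb.

def pearson_chi_square(table: list[list[int]]) -> tuple[float, int, float]:
    """Return (chi-square statistic, degrees of freedom, smallest expected count)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    if grand_total == 0:
        raise ValueError("table must contain at least one observation")

    statistic = 0.0
    min_expected = float("inf")
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total x column total / N
            expected = row_totals[i] * col_totals[j] / grand_total
            min_expected = min(min_expected, expected)
            if expected > 0:
                statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof, min_expected


def prefer_fisher(min_expected: float, threshold: float = 5.0) -> bool:
    """Assumed rule of thumb: use Fisher's exact test when any expected count is small."""
    return min_expected < threshold
```

For example, a toy 2 × 2 table `[[10, 20], [30, 40]]` (invented counts, not study data) yields a statistic of about 0.794 with 1 degree of freedom and a smallest expected count of 12, so the chi-square approximation would be considered adequate under this rule of thumb.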

Qualitative analysis

Responses to the open-ended survey questions were analyzed in Excel using a general inductive approach. This approach allowed the evaluators to identify high-level themes that emerged when the “totality” of responses to each question was examined [Reference Thomas21–Reference Coryn, Noakes, Westine and Schroeter23]. Two researchers trained in qualitative methods reviewed the qualitative data to identify relevant categories related to the quality of workshops, the inter-institutional collaboration (including technology and value), skill application to work/role, and facilitators of and barriers to skill application. Qualitative responses were first analyzed by training focus and then by training competency. Responses associated with specific competency categories were then analyzed for broad, emergent themes.

Results

Thirty-six RPN Workshops were held between September 2017 and June 2021. There were 1655 participants (per workshop: median 33.5; mean 41.5, SD 29.7), of whom 710 were unique individuals. Over this timeframe, three institutions and their affiliates joined BUMC/BMC as ongoing collaborators in developing and delivering the RPN Workshops, increasing the median and mean number of attendees over time (Fig. 1). Beginning in March 2021, all four collaborating institutions and their affiliates were involved in the workshops; from March through June 2021, there were 350 participants (per workshop: median 75; mean 87.5, SD 32.6), of whom 264 were unique individuals. The workshop level was considered “Fundamental” for 20 (55.6%) workshops and “Advanced” for 16 (44.4%) workshops (see Appendix 1). A total of 999 Immediate Evaluation Surveys (60.4% response rate) and 378 Follow-up Surveys (22.8% response rate) were analyzed.
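The reported percentages can be checked directly from the counts in the preceding paragraph. The Python sketch below does so, assuming (as the reported response rates imply) that both survey response rates use the 1655 total workshop participants as the denominator, and that the March–June 2021 mean reflects four monthly workshops. The helper `pct` is hypothetical.

```python
# Recompute percentages from the raw counts given in the Results text.
# Assumption: both survey response rates use the 1655 total participants
# as the denominator; March-June 2021 covers four monthly workshops.

def pct(part: int, whole: int, digits: int = 1) -> float:
    """Percentage of part in whole, rounded to the given number of decimals."""
    return round(100 * part / whole, digits)


participants = 1655
print("Immediate Evaluation Survey:", pct(999, participants))  # 60.4
print("Follow-up Survey:", pct(378, participants))             # 22.8
print("Fundamental workshops:", pct(20, 36))                   # 55.6
print("Advanced workshops:", pct(16, 36))                      # 44.4
print("Mean attendance, Mar-Jun 2021:", 350 / 4)               # 87.5
```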

Figure 1. Mean and Total Attendance at Research Professionals Network (RPN) Workshops by academic years 2017–18 to AY 2020–21 with the addition of collaborating institutions. BUMC/BMC = Boston University Medical Campus/Boston Medical Center; UVM = University of Vermont; UF = University of Florida; MUSC = Medical University of South Carolina.

Most Immediate Evaluation Survey respondents (82.3%) reported their role as research coordinator, research assistant, research project manager, or research nurse. Other reported roles were administrator (9%), investigator (2.8%), and “other” (14.9%), which included pharmacists, data analysts, and IRB staff. Because these percentages sum to more than 100%, some respondents may have reported more than one role.

Sample sizes by survey type, stratified by institution and AY, are presented in Table 1. The increasing number of survey responses each academic year reflects the addition of collaborating institutions over time (as detailed in Fig. 1) and suggests increased familiarity with and uptake of the workshops by CRPs at the collaborating institutions.

Table 1. Sample sizes of Research Professionals Network (RPN) surveys by type, institution, academic year (AY), and pre/post March 2020 change in how Zoom was used to connect institutions

CTSA = Clinical and Translational Science Award; BUMC/BMC = Boston University Medical Campus/Boston Medical Center; UVM = University of Vermont; UF = University of Florida; MUSC = Medical University of South Carolina.

Immediate evaluation survey results

The distribution of responses to the Immediate Evaluation Survey by academic year is provided in Table 2 (for additional details, see Appendix 3). The Immediate Evaluation Survey assessed demographics, quality, relevance of the topic to respondents’ work setting/role, and (beginning January 2020) experience with the technology used to connect the institutions and the value participants placed on the collaboration from a content perspective. Results were generally consistent across academic years. Overall, Immediate Evaluation Survey respondents gave high ratings on the workshop evaluation parameters:

Table 2. Percent distribution of responses from Research Professionals Network immediate evaluation closed-ended questions (see Appendix 3 for detailed Table 2)

- A total of 95.2% considered the overall quality of the workshops to be excellent or good.
- A total of 90.7% considered the quality of the hands-on activities to be excellent or good.
- A total of 92.7% considered the teaching strategies to be excellent or good.
- A total of 84.3% noted that they would definitely or probably apply the skills learned in the workshop to their work setting.

Analysis of open-ended responses on workshop quality revealed three themes: Content/Skills, Structure, and Presenter. Participants reported being highly satisfied with the content and skills presented, noting that the background information, templates, and guidance documents provided in the workshops were valuable for their work. One participant noted: “It was packed full of content and well-organized. The personal examples were helpful.” Participants also appreciated the skills presented: “They provided real solutions that I am excited to implement.” This comment also suggests that workshop content can lead to positive change in practice.

Participants greatly valued the workshop structure, a combination of didactic content and interactive activities that promoted practice with the material in small- and large-group activities and discussions, as illustrated by comments such as “The activities were very engaging and were generating excellent dialogue” and “The interactive approach is appreciated, more conducive to learning than lecture style.” When participants were dissatisfied with the workshop structure, it was typically due to time management; in a few cases, workshops were rushed and activities were cut short.

Feedback on peer presenters centered on teaching style, level of engagement, and knowledge of the topic. In general, participants’ assessments were very positive and highlighted their appreciation of the peer presenters’ experience and knowledge and their ability to engage learners. The value of the peer presenters’ “boots on the ground” experience was highlighted in many comments, such as “Very useful to hear the direct experience and advice from someone who went through the process…” and “Complex information was clearly presented with nice examples. It was helpful to hear tips on her experience as well.”

The Immediate Evaluation Survey also asked participants about the inter-institutional collaboration from both a technology perspective (experience with the technology used to connect the learners) and a content perspective (how much the participant valued the inter-institutional collaboration). Overall, 92.5% of respondents rated their experience with the technology used to enable the inter-institutional collaboration as “excellent” or “good,” and 99% reported valuing the inter-institutional collaboration “very much” or “somewhat,” compared with 1% who answered “not at all.”

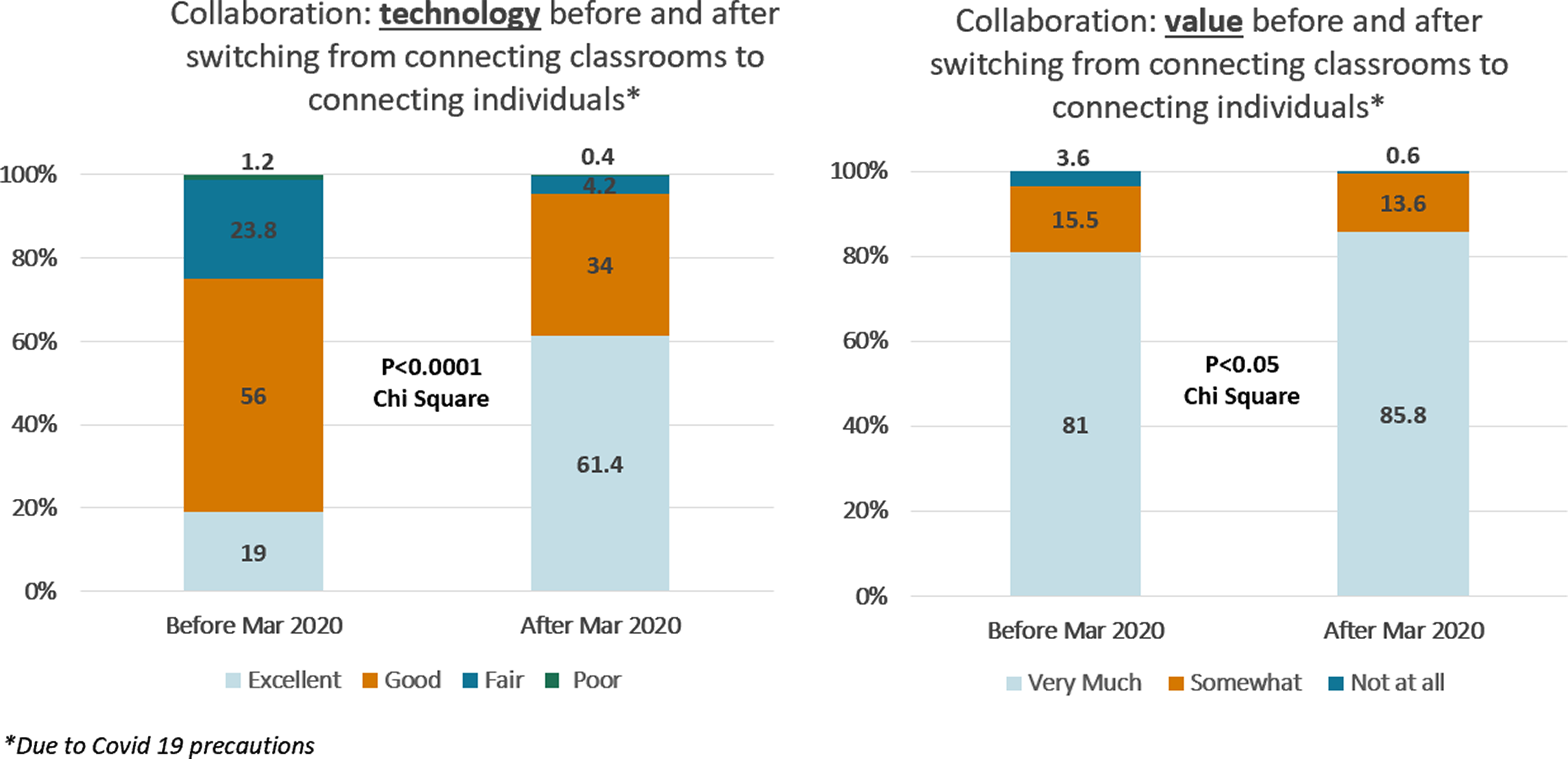

The large-scale mandatory work-from-home orders put in place in March 2020 due to the COVID-19 pandemic provided a natural experiment to assess whether this change in how Zoom was used to deliver the workshops (i.e., from connecting classrooms of assembled learners to connecting individuals directly with each other) affected how participants viewed the inter-institutional collaboration. We therefore compared participant ratings before and after this change.

Results (Fig. 2) show notable changes in the distribution of responses before and after March 2020 regarding (1) participants’ experience with the technology used to connect the institutions and (2) the perceived value of the inter-institutional collaboration from a content perspective. For experience with the technology, “excellent” responses increased from 19.0% to 61.4%; “excellent” or “good” responses increased from 75% to 95.4%, while “fair” or “poor” responses decreased from 25% to 4.6%. For the value of the inter-institutional collaboration, “very much” responses increased from 81.0% to 85.8%, while “not at all” responses decreased from 3.6% to 0.6%. Both changes were statistically significant (chi-square = 73.312, 3 df, p < 0.0001; and chi-square = 6.562, 2 df, p < 0.05, respectively).
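As a consistency check on the reported tests, the following Python sketch evaluates the upper-tail chi-square probability for the two reported statistics using closed-form expressions that hold for integer degrees of freedom. The 3 df for the technology item corresponds to a table of two periods by four rating levels (excellent, good, fair, poor), and the 2 df for the value item to two periods by three levels (very much, somewhat, not at all). The function name `chi2_sf` is our own; this is a sketch, not the NCSS computation used in the study.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) of a chi-square distribution with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) + sqrt(2/pi) * exp(-x/2)
    #         * sum_{j=1}^{(df-1)/2} x^((2j-1)/2) / (1 * 3 * ... * (2j-1))
    term = math.sqrt(x)
    series = 0.0
    for j in range(1, (df - 1) // 2 + 1):
        if j > 1:
            term *= x / (2 * j - 1)
        series += term
    return math.erfc(math.sqrt(half)) + math.sqrt(2.0 / math.pi) * math.exp(-half) * series


# Reported statistics from the pre/post March 2020 comparison
technology_p = chi2_sf(73.312, 3)
value_p = chi2_sf(6.562, 2)
print(technology_p < 0.0001)  # True
print(round(value_p, 4))      # 0.0376
```

Both reported thresholds hold: the technology statistic corresponds to a p value far below 0.0001, and the value statistic to p ≈ 0.038, which is below 0.05 but not below 0.01.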

Figure 2. Inter-institutional collaboration technology and value from immediate evaluation survey, pre/post March 2020 change in how Zoom was used to connect institutions.

The open-ended responses provided further context for these elements, underscoring high overall satisfaction with and appreciation for the inter-institutional collaboration, especially after the March 2020 change in how Zoom was used in the workshops. Participants noted the increased accessibility that the Zoom platform provided, including easier communication between participants: “I really enjoyed the Zoom as it felt more accessible to more people across institutions… the use of teaching technology and breakout rooms was interactive and engaging” and “Participants seem more at ease and asking questions and sharing opinions feels less stressful and more natural.”

Participants also commented extensively on the value of the inter-institutional nature of the workshops: “I gain valuable insight into how other academic institutions are operationalizing research, their problem-solving approaches, and their interpretation of regulations” and “I think there is always tremendous value in working with people from other institutions as we gain knowledge from different insights and methods used by those elsewhere.” Participants also expressed appreciation for the idea that “we are all in this together,” as one commented: “It’s helpful to hear how we’re all finding similar challenges and sharing strategies and stories is great; it’s nice to feel part of a larger group beyond our own organizations.”

Follow-up survey results

Results from the Follow-up Survey (conducted 6–8 weeks after the workshop) are presented in Table 3 (for additional details see Appendix 3). This survey assessed implementation of the workshop learnings by participants through evaluation of the following parameters: workshop content and topic as applicable to their job or role, motivation to practice skills presented in the workshop, incentives to apply skills to their work, and continued learning on the topic beyond the workshop. As shown in the table, most respondents strongly agreed or agreed regarding each of these parameters.

Table 3. Percent distributions of responses from follow-up survey closed-ended questions (see Appendix 3 for detailed Table 3)

- A total of 73.9% strongly agreed or agreed that they applied the workshop content to their current job.
- A total of 88.6% strongly agreed or agreed that they were motivated to practice the new skills.
- A total of 83% strongly agreed or agreed that they had incentives to apply the skills to their work.
- A total of 60.7% strongly agreed or agreed that they continued their learning on the topic beyond the workshop.

Analysis of the open-ended questions revealed three overarching themes reflecting workshop impact: Skill Application to Work/Role, Environment that Enables Skill Application, and Barriers to Skill Application (Table 4). Responses suggest that numerous variables affect the implementation and application of training skills, such as local infrastructure and organizational working conditions, support from leadership, and the degree to which other research team members were engaged in professional growth. Participants reported greater implementation of new tools or processes when management allowed their use. Certain environments better enabled skill building through support, flexibility, and autonomy. Reported barriers to skill application included insufficient time and/or resources and team or department dynamics.

Table 4. A sampling of qualitative results/inductive coding of the open-ended qualitative responses to follow-up survey questions

AE/SAE = Adverse Event/Serious Adverse Event; SOPs = Standard Operating Procedures; PI = Principal Investigator.

Participants described numerous ways in which they applied skills learned in the workshops to their jobs, including using new tools, changing workflows, and informing others of new processes.

Discussion

The monthly RPN Workshops support each collaborating institution’s continuing education offerings through peer-led, competency-based training on topics relevant to CRPs. These workshops offer a distinctive approach to continuing education because they incorporate key elements and best practices outlined in recent CTSA NOFOs [12,13], but they are more challenging to operationalize than other training methods, such as static “review and quiz” formats.

The workshops were developed to support competency-based CRP professional development through active engagement in collaborative exercises, in which attendees engage with the new material and with each other. Having workshops led by mentored peer presenters ensures a “boots on the ground” perspective, conveying the important (and sometimes elusive) knowledge of how something is done, in addition to what needs to be done. Leading a workshop is also an excellent professional development opportunity for the presenters. The JTF Core Competency Framework provides a structure for developing workshop content, objectives, and leveling.

Inter-institutional workshops require more effort and planning but offer the potential for significant benefits related to the participant experience. The inter-institutional collaboration promotes expanding reach, sharing of resources, engagement of CRPs beyond a single institution, and widening perspectives. From an operational perspective, it allows the opportunity to leverage the resources, ideas, and energy of multiple institutions to conduct the workshops rather than just one. This collaboration also reduces redundancy and duplication of effort at each of the organizations by creating CRP education and training materials that are used among the four partnering organizations and their affiliates. Importantly, it also significantly increases the pool of possible CRP peers who may want to co-lead a workshop as an opportunity for their own professional development.

Analysis of four years of RPN Immediate Evaluation and Follow-up Survey data provides further insight into how the workshops affect CRP practice and, in turn, the quality of clinical research. These findings may also inform others who want to offer similar initiatives based on the model described here. Results show high satisfaction across all outcomes: overall workshop quality (content, presenter, interactive activities), skill utilization and application, and the quality and value of the inter-institutional collaboration. Several findings highlight key strengths of the RPN Workshop initiative, specifically in relation to the inter-institutional collaboration and the interactive workshop activities. The authors believe a synergistic effect is at play: the cross-institutional exchange of ideas and best practices was substantially enhanced when the planning team pivoted to using Zoom to connect individuals directly, which resulted in higher participant satisfaction with the inter-institutional collaboration.

While participants valued the inter-institutional collaboration from the start, their scores related to “value” of the collaboration increased significantly after changing to connecting participants individually via Zoom (Fig. 2). Qualitative responses show that the change enhanced sharing by enabling easier cross-institutional exchange of ideas and best practices. Participants frequently comment that they are glad to know they are “not alone” in the complexities, challenges, barriers, and difficulties inherent to their roles. They also cite being stimulated by new perspectives and describe specific examples of incorporating these learnings into their own research settings. They report valuing the ability to connect with other CRPs at the collaborating institutions, saying they learn new ways of doing things and best practices: “The value of the collaboration between our institutions could never be overestimated. It is priceless. I garner from these interactions support, feedback on best practices, and new ideas. I always leave wanting more…” Further, participants provide examples of how they have integrated learnings from others into their own studies:

“During the group activity our group explored factors that make recruitment harder for studies. After this session, I explored with our PI how our trial could improve any issues with recruitment, by finding where we introduce constraints that are not scientifically impactful.”

Participants have noted that they like the interactive nature of the workshops, and survey results suggest that they value it even more now that they connect with and learn from people both inside and outside their institutions.

RPN Workshop presentations, paired with a chance to exchange ideas in breakout rooms and chats, facilitate participatory collaborative learning and are attuned to the needs of adult learners seeking to advance in their careers. This social learning experience appears to facilitate a “deeper learning” around what it means to be a competent clinical research professional [Reference Behar-Horenstein, Prikhidko and Kolb24,Reference Dede, Bellance and Brandt25]. Deeper learning as an instructional strategy requires activities that involve shared interactions with others in a community [26] and has been described as a means of instilling critical thinking, reasoning, and responsibility [Reference Dede, Bellance and Brandt25]. Deeper learning cultivates opportunities to develop competencies that are transmissible and structured around essential ethical values of practice [26]. In the context of the RPN Workshops, this approach allows individual CRPs to transfer experience and knowledge gained from the workshops into their work settings, and to bring their own experience back to the workshops by engaging with other attendees. CRP peer presenters are typically involved in developing and carrying out their own study processes and procedures, and they often provide key insights and anecdotes from their experiences, including what did and did not work and why.

Engaging with others to promote learning of a practice is not new. Although it was not intentional, what developed organically in these workshops is a “Community of Practice” (CoP). A CoP is a group of people who come together and share common concerns, challenges, and interests in a particular topic [Reference Lave and Wenger27,Reference Li, Grimshaw, Nielsen, Judd, Coyte and Graham28] with a focus on sharing best practices and creating new knowledge to advance professional practice. Because ongoing interaction is key to CoPs, we want to further develop the community by providing more opportunities for CRPs to connect with each other outside of the workshops. We can leverage the strength of this multi-institutional community of CRPs to increase engagement, e.g., through ongoing communication beyond the workshops on a web-based software platform that facilitates easy interaction, communication, and sharing among individuals within and between institutions. This type of initiative can “keep the conversation going” after a workshop and serve to reinforce learning and promote successful implementation of best practices.

Conclusion

The peer-led, interactive, inter-institutional RPN Workshops provide a successful model that can be implemented by CTSA hubs and others to address challenges in clinical research and continuing education. We have found that continuing education is significantly improved by implementing training in which learners engage with the material and with other CRPs in a collaborative space that cultivates the exchange of perspectives, learnings, and experiences. The workshops cover topics critical to CRP practice and are delivered in an engaging format that leverages adult learning principles. The RPN Workshop model creates a supportive environment that fosters the sharing of ideas while facilitating career development and growth. The inter-institutional format brings a diversity of insights that enrich the workshop learnings, while also reducing redundant training at each site. The concept of CoPs, identified as an important outcome of the RPN Workshops, should be further developed and evaluated.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/cts.2024.531.

Author contributions

Mary-Tara Roth: Manuscript Conception and Design, Collection or Contribution of Data, Data Analysis, Contribution of Data Expertise, Drafting & Editing Manuscript, and Table Creation. Diana Lee-Chavarria: Manuscript Conception and Design, Collection or Contribution of Data, Data Analysis, Contribution of Data Expertise, Drafting & Editing Manuscript, and Table Creation. H. Robert Kolb: Manuscript Conception and Design, Collection or Contribution of Data, Contribution of Data Expertise, and Drafting Manuscript. Karla Damus, Jennifer Sikov & Rechelle Paranal: Data Analysis, Performance of Data Analysis, and Contribution of Data Expertise. Kimberly Luebbers: Manuscript Conception and Design, Collection or Contribution of Data, Data Analysis, Contribution of Data Expertise, Drafting & Editing Manuscript, and Table Creation.

Funding statement

This publication was supported, in part, by the National Center for Advancing Translational Sciences of the National Institutes of Health under grant numbers UL1 TR001450 (Brady KT, Flume PA), UL1 TR001430 (Center DM, Bair-Merritt MH), and UL1 TR001427 (Mitchell DA); and the National Institute of General Medical Sciences of the National Institutes of Health under grant number U54 GM115516 (Rosen CJ, Stein GS). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Competing interests

This work was presented in part during a session of the Association of Clinical and Translational Science Annual Meeting on April 19, 2022.