A Markov jump process associated with the matrix-exponential distribution

Published online by Cambridge University Press: 20 September 2022

Abstract

Let $f$ be the density function associated with a matrix-exponential distribution with parameters $(\boldsymbol{\alpha}, T, \boldsymbol{s})$. By exponentially tilting $f$, we find a probabilistic interpretation that generalizes the one associated with phase-type distributions. More specifically, we show that for any sufficiently large $\lambda\ge 0$, the function

$\lambda\ge 0$

, the function

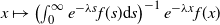

$x\mapsto \left(\int_0^\infty e^{-\lambda s}f(s)\,\textrm{d} s\right)^{-1}e^{-\lambda x}f(x)$

can be described in terms of a finite-state Markov jump process whose generator is tied to $T$. Finally, we show how to reverse the exponential tilting in order to assign a probabilistic interpretation to $f$ itself.
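The tilted function above is itself matrix-exponential. If $f(x) = \boldsymbol{\alpha} e^{Tx} \boldsymbol{s}$ and every eigenvalue of $T$ has negative real part (as in the example below), then for $\lambda \ge 0$ the normalizing constant is $\int_0^\infty e^{-\lambda s} f(s)\,\textrm{d}s = \boldsymbol{\alpha}(\lambda I - T)^{-1}\boldsymbol{s}$, and the tilted density is $\boldsymbol{\alpha} e^{(T - \lambda I)x}\boldsymbol{s}$ divided by that constant. The Python sketch below only checks this identity numerically; it does not reproduce the article's Markov jump process construction. The parameters `ALPHA`, `T`, `S` are a hypothetical example chosen for illustration, encoding $f(x) = 2e^{-x}(1 - \cos x)$, a matrix-exponential density that vanishes at $x = 2\pi$ and therefore is not phase-type. The helpers (`expm`, `solve`, `simpson`, `tilted_density`, and so on) are plain-Python stand-ins written for this sketch, not a library API.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]
Vector = List[float]


def identity(n: int) -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def mat_mul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def expm(A: Matrix, terms: int = 20) -> Matrix:
    """exp(A) by scaling and squaring with a truncated Taylor series (small matrices only)."""
    norm = max(sum(abs(v) for v in row) for row in A)  # infinity norm
    squarings = math.ceil(math.log2(norm / 0.5)) if norm > 0.5 else 0
    scaled = [[v / 2.0 ** squarings for v in row] for row in A]  # norm now <= 0.5
    result, term = identity(len(A)), identity(len(A))
    for k in range(1, terms + 1):
        term = [[v / k for v in row] for row in mat_mul(term, scaled)]  # scaled^k / k!
        result = [[r + t for r, t in zip(rr, tt)] for rr, tt in zip(result, term)]
    for _ in range(squarings):
        result = mat_mul(result, result)  # exp(A) = exp(A / 2^m)^(2^m)
    return result


def solve(A: Matrix, b: Vector) -> Vector:
    """Solve A y = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    y = [0.0] * n
    for i in reversed(range(n)):
        y[i] = (M[i][n] - sum(M[i][j] * y[j] for j in range(i + 1, n))) / M[i][i]
    return y


def shift(T: Matrix, lam: float) -> Matrix:
    """Return T - lam * I."""
    return [[T[i][j] - (lam if i == j else 0.0) for j in range(len(T))] for i in range(len(T))]


def me_density(alpha: Vector, T: Matrix, s: Vector, x: float) -> float:
    """f(x) = alpha exp(T x) s."""
    E = expm([[v * x for v in row] for row in T])
    return sum(a * sum(e * sj for e, sj in zip(row, s)) for a, row in zip(alpha, E))


def laplace(alpha: Vector, T: Matrix, s: Vector, lam: float) -> float:
    """Normalizing constant int_0^inf e^{-lam x} f(x) dx = alpha (lam I - T)^{-1} s."""
    y = solve([[-v for v in row] for row in shift(T, lam)], s)  # (lam I - T) y = s
    return sum(a * v for a, v in zip(alpha, y))


def tilted_density(alpha: Vector, T: Matrix, s: Vector, lam: float, x: float) -> float:
    """e^{-lam x} f(x) / L(lam), computed as alpha exp((T - lam I) x) s / L(lam)."""
    return me_density(alpha, shift(T, lam), s, x) / laplace(alpha, T, s, lam)


def simpson(g: Callable[[float], float], a: float, b: float, n: int = 400) -> float:
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = g(a) + g(b) + sum((4 if k % 2 else 2) * g(a + k * h) for k in range(1, n))
    return total * h / 3


# Hypothetical example: f(x) = 2 e^{-x} (1 - cos x) written as (alpha, T, s).
ALPHA = [1.0, 1.0, 0.0]
T = [[-1.0, 0.0, 0.0],
     [0.0, -1.0, 1.0],
     [0.0, -1.0, -1.0]]
S = [2.0, -2.0, 0.0]

if __name__ == "__main__":
    print(laplace(ALPHA, T, S, 0.0))  # total mass of f
    print(simpson(lambda x: tilted_density(ALPHA, T, S, 1.0, x), 0.0, 25.0))  # mass of tilted f
```

In practice one would replace `expm` and `solve` with `scipy.linalg.expm` and `numpy.linalg.solve`; the plain-Python versions only keep the sketch dependency-free.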

- Type: Original Article
- Copyright: © The Author(s), 2022. Published by Cambridge University Press on behalf of Applied Probability Trust
