1. Introduction and main results

1.1. Introduction

Complex networks are everywhere. Prominent examples include social networks, the Internet, the World Wide Web, transportation networks, etc. In any network, it is of great interest to be able to quantify who is ‘important’ and who is less so. This is what centrality measures aim to do.

There are several well-known centrality measures in networks [Reference Newman38, Chapter 7], such as degree centrality, PageRank centrality, and betweenness centrality. PageRank, first proposed in [Reference Page, Brin, Motwani and Winograd39], can be visualised as the stationary distribution of a random walk with uniform restarts. Betweenness centrality was first defined by Anthonisse [Reference Anthonisse2]. For a survey of centrality measures, see Boldi and Vigna [Reference Boldi and Vigna8] and Borgatti [Reference Borgatti12].

There are many ways in which we can compare the effectiveness of centrality measures. Here, one can think of importance for spreading diseases or information [Reference Wei, Zhao, Liu and Wang42], for being an information bridge between different communities, or for being an important source of information in a network of scientific papers [Reference Senanayake, Piraveenan and Zomaya41].

In this paper, we compare centrality measures by investigating the rate of disintegration of the network by the removal of central vertices. This approach quantifies the notion that a centrality measure is more effective when the network disintegrates more upon the removal of the most central vertices. This work is motivated by the analysis and simulations performed in [Reference Mocanu, Exarchakos and Liotta35], where the number of connected components and the size of the giant were compared after the removal of the most central vertices based on several centrality measures from a simulation perspective.

Central questions. Our key questions are as follows.

- How will the number of connected components grow with the removal of the most/least central vertices?

- Does vertex removal with respect to centrality measures preserve the existence of local limits?

- What are the subcritical and supercritical regimes of the giant component for vertex removals with respect to centrality measure?

- What is the proportion of vertices in the giant component?

Main innovation of this paper. To answer the above questions, we rely on the theory of local convergence [Reference Aldous and Steele1, Reference Benjamini and Schramm6]; see also [Reference Hofstad23, Chapter 2] for an extensive overview. We show that when considering strictly local centrality measures, i.e. measures that depend on a fixed-radius neighbourhood of a vertex, the number of connected components after the vertex removal procedure converges, and the local limit of the removed graph can be determined in terms of the original local limit. Thus, this answers the first two questions rather generally, assuming local convergence.

It is well known that the giant in a random graph is not determined by its local limit (even though it frequently ‘almost’ is: see [Reference Hofstad22]). While the survival probability of the local limit is always an upper bound on the proportion of vertices in the giant, a matching lower bound need not hold. For instance, take the disjoint union of two graphs with the same size and local limit. Then the survival probability of the local limit remains the same, but the proportion of vertices in the largest connected component is halved, which is strictly less than the survival probability whenever the latter is positive. To give an example where we can prove that the giant of the vertex-removed graph equals the survival probability of its local limit, we restrict our attention to the configuration model with a given degree distribution, and degree centrality. There, we identify the giant of the vertex-removed graph, and also show that the giant is smaller when removing more degree-central vertices.

1.2. Preliminaries

In this section, we define centrality measures and then give an informal introduction to local convergence, as these play a central role in this paper. We will assume that $|V(G)|=n$, and for convenience assume that $V(G)=\{1, \ldots, n\}\equiv[n]$.

1.2.1. Centrality measures

In this section, we define centrality measures.

Definition 1.1. (Centrality measures in undirected graphs.) For a finite (undirected) graph $G=(V(G),E(G))$, a centrality measure is a function $R\colon V(G) \to \mathbb{R}_{\geq0}$, where we consider $v$ to be central if $R(v)$ is large.

Centrality measures can be extended to directed graphs, but in this paper we restrict to undirected graphs as in [Reference Mocanu, Exarchakos and Liotta35]. Commonly used examples of centrality measures are as follows.

Degree centrality. In degree centrality, we rank the vertices according to their degrees and then divide the rank by the total number of vertices. We randomly assign ranks to vertices with the same degree, to remove ties.
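The ranking described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the graph is given as a hypothetical adjacency dictionary `adj`, the function name `degree_centrality_ranks` is ours, and we assume that rank $n$ is assigned to the highest degree, so that a large value of $R(v)$ means that $v$ is central.

```python
import random


def degree_centrality_ranks(adj, rng=None):
    """Map each vertex to rank/n, where rank n goes to the highest degree.

    adj: dict mapping each vertex to a list of its neighbours
         (repeated entries model multi-edges). Hypothetical input format.
    """
    rng = rng or random.Random()
    # Sort by degree; the independent uniform second key orders vertices
    # of equal degree uniformly at random, which removes ties.
    order = sorted(adj, key=lambda v: (len(adj[v]), rng.random()))
    n = len(order)
    return {v: (rank + 1) / n for rank, v in enumerate(order)}


# A star on four vertices: the hub 0 has degree 3, the leaves have degree 1.
star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
ranks = degree_centrality_ranks(star, random.Random(0))
```

In the star, the hub always receives the value 1, while the three leaves share the values 1/4, 1/2, and 3/4 in a random order.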

PageRank centrality. PageRank is a popular algorithm for measuring centrality in the World Wide Web [Reference Brin and Page13].

Definition 1.2. (PageRank centrality.) Consider a finite (undirected) graph G. Let $e_{j,i}$ be the number of edges between j and i. Denote the degree of vertex $i \in [n]$ by $d_{i}$. Fix a damping factor or teleportation parameter $c\in(0, 1)$. Then PageRank is the unique probability vector $\boldsymbol{\pi}_n = (\pi_n(i))_{i\in [n]}$ that satisfies, for every $i \in [n]$,

Suppose $\textbf{1} = (1, 1, \ldots, 1)$ is the all-one vector. Then, with $P = (p_{i,j})_{i, j \in V(G)}$ and $p_{i,j}= e_{i, j}/d_i$ the transition matrix for the random walk on G,

In order to use local convergence techniques [Reference Garavaglia, Hofstad and Litvak19], it is useful to work with the graph-normalised PageRank, given by

Since $c\in(0,1)$, (1.1) implies

Equation (1.2) is sometimes called power-iteration for PageRank [Reference Avrachenkov and Lebedev3, Reference Bianchini, Gori and Scarselli7, Reference Boldi, Santini and Vigna9]. It will also be useful to consider the finite-radius PageRank, $\boldsymbol{R}_n^{\scriptscriptstyle(N)}$, for $N\in \mathbb{N}$, defined by

\begin{equation} \boldsymbol{R}_n^{\scriptscriptstyle(N)} \;:\!=\; \biggl( \dfrac{1-c}{n}\biggr) \textbf{1}\sum_{k= 0}^{N} c^k P^k, \tag{1.3} \end{equation}

which approximates PageRank by a finite number of powers in (1.2). PageRank has been intensely studied on various random graph models [Reference Banerjee and Olvera-Cravioto5, Reference Chen, Litvak and Olvera-Cravioto15, Reference Chen, Litvak and Olvera-Cravioto16, Reference Garavaglia, Hofstad and Litvak19, Reference Jelenković and Olvera-Cravioto31, Reference Lee and Olvera-Cravioto32, Reference Litvak, Scheinhardt and Volkovich34], with the main focus being to prove or disprove that PageRank has the same power-law exponent as the in-degree distribution.
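As an illustration, the truncated sum in (1.3) can be evaluated by repeatedly multiplying the row vector $\textbf{1}$ by $P$. The sketch below follows the formula exactly as displayed, including the prefactor $(1-c)/n$. The adjacency-dictionary input and the name `finite_radius_pagerank` are our own assumptions, and we assume that no vertex is isolated, so that $P$ is well defined.

```python
def finite_radius_pagerank(adj, c, N):
    """Evaluate ((1 - c) / n) * 1 * sum_{k=0}^{N} c^k P^k as a dict.

    adj: dict vertex -> list of neighbours (repeats model multi-edges);
         every vertex is assumed to have degree at least 1.
    """
    n = len(adj)
    x = {v: 1.0 for v in adj}        # x holds the row vector 1 P^k, here k = 0
    total = dict(x)                  # running value of sum_k c^k 1 P^k
    weight = 1.0                     # current power c^k
    for _ in range(N):
        nxt = {v: 0.0 for v in adj}
        for i, nbrs in adj.items():
            share = x[i] / len(nbrs)  # x(i) * p_{i,j} per edge from i to j
            for j in nbrs:
                nxt[j] += share
        x = nxt
        weight *= c
        for v in adj:
            total[v] += weight * x[v]
    return {v: (1 - c) / n * total[v] for v in adj}


# On a regular graph 1 P = 1, so every entry equals (1 - c^(N+1)) / n.
cycle = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
scores = finite_radius_pagerank(cycle, 0.5, 3)
```

Since only $N$ steps of the walk are used, the value at a vertex depends on the graph only through its neighbourhood of radius $N$.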

Other popular centrality measures. Closeness centrality measures the average distance of a vertex from a randomly chosen vertex. The higher the average, the lower the centrality index, and vice versa. Interestingly, Evans and Chen [Reference Evans and Chen18] predict that closeness centrality is closely related to degree centrality, at least for locally tree-like graphs. Betweenness centrality measures the extent to which vertices are important for interconnecting different vertices, and is given by

where $\sigma_{u, v}$ is the number of shortest paths from vertex u to vertex v, and $\sigma_{u, v}(w)$ is the number of shortest paths between u and v containing w.

In this paper we mainly work with strictly local centrality measures.

Definition 1.3. (Strictly local centrality measures.) For a finite (undirected) graph $G=(V(G),E(G))$, a strictly local centrality measure is a centrality measure $R \colon V(G) \to \mathbb{R}_{\geq0}$, such that there exists an $r\in \mathbb{N}$, and for each vertex $v\in V(G)$, $R(v)$ depends only on the graph through the neighbourhood

where $\textrm{dist}_{\scriptscriptstyle G}(u,v)$ denotes the graph distance between u and v in G.
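To make the notion of a fixed-radius neighbourhood concrete, the following sketch collects all vertices within graph distance $r$ of a vertex by breadth-first search. We assume here that the neighbourhood consists of the vertices $u$ with $\textrm{dist}_{\scriptscriptstyle G}(u,v)\leq r$; the adjacency-dictionary input and the name `ball` are hypothetical.

```python
from collections import deque


def ball(adj, v, r):
    """Return {u: dist(u, v)} for all vertices u within distance r of v."""
    dist = {v: 0}
    queue = deque([v])
    while queue:
        u = queue.popleft()
        if dist[u] == r:
            continue                 # do not explore beyond radius r
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist


# A path 0 - 1 - 2 - 3 - 4.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
near_zero = ball(path, 0, 2)
```

For example, the degree of $v$ can be read off from the ball of radius 1 around $v$, so a centrality measure that depends only on degrees is strictly local.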

PageRank is very well approximated by its strictly local version as in (1.3) [Reference Avrachenkov, Litvak, Nemirovsky and Osipova4, Reference Boldi and Vigna8, Reference Garavaglia, Hofstad and Litvak19].

1.2.2. Local convergence of undirected random graphs

In this section, we informally introduce local convergence of random graphs, which describes what a graph locally looks like from the perspective of a uniformly chosen vertex, as the number of vertices in a graph goes to infinity. For example, the sparse Erdős–Rényi random graph, which is formed by bond-percolation on the complete graph, locally looks like a Poisson branching process, as n tends to infinity [Reference Hofstad23, Chapter 2]. Before moving further, we discuss the following notation.

Notation 1.1. (Probability convergence.) Suppose $(X_n)_{n\geq 1}$, $(Y_n)_{n\geq 1}$ are two sequences of random variables and X is a random variable.

(1) We write $X_n \overset{\scriptscriptstyle{\mathbb{P}}/d}{\to} X$ when $X_n$ converges in probability/distribution to X.

(2) We write $X_n = o_{\scriptscriptstyle\mathbb{P}}(Y_n)$ when $X_n/Y_n \overset{\scriptscriptstyle\mathbb{P}}{\to} 0$.

Now let us give the informal definition of local convergence. We will rely on two types of local convergence, namely, local weak convergence and local convergence in probability. Let $o_n$ denote a uniformly chosen vertex from $V(G_n)$. Local weak convergence means that

for all rooted graphs $(H, o')$, where a rooted graph is a graph with a distinguished vertex in the vertex set $V(H)$ of H. In (1.4), $(\bar{G}, o) \sim \bar{\mu}$ is a random rooted graph, which is called the local weak limit. For local convergence in probability, instead, we require that

holds for all rooted graphs $(H, o')$. In (1.5), $(G, o) \sim \mu$ is a random rooted graph which is called the local limit in probability (and bear in mind that $\mu$ can possibly be a random measure on rooted graphs). Both (1.4) and (1.5) describe the convergence of the proportions of vertices around which the graph locally looks like a certain specific graph. We discuss this definition more formally in Section 2.1. We now turn to our main results.

1.3. Main results

In this section, we state our main results. In Section 1.3.1 we discuss our results that hold for general strictly local centrality measures on locally converging random graph sequences. In Section 1.3.2 we investigate the size of the giant after vertex removal. Due to the non-local nature of the giant, there we restrict to degree centrality on the configuration model.

1.3.1. Strictly local centrality measures

We first define our vertex removal procedure based on centrality.

Definition 1.4. (Vertex removal based on centrality threshold.) Let G be an arbitrary graph. Define $G(R, r)$ to be the graph obtained after removing all the vertices v for which $R(v)> r$. We call this the $r$-killed graph of G.
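The removal procedure of Definition 1.4 can be sketched as follows. The centrality values are assumed to be precomputed and passed in as a hypothetical dictionary `R`; the function name `killed_graph` and the adjacency-dictionary representation are likewise our own choices.

```python
def killed_graph(adj, R, r):
    """Remove every vertex v with R[v] > r, together with its incident edges.

    adj: dict vertex -> list of neighbours; R: dict vertex -> centrality.
    """
    keep = {v for v in adj if R[v] <= r}
    # Restrict each surviving adjacency list to the surviving vertices.
    return {v: [w for w in adj[v] if w in keep] for v in keep}


# Star on four vertices with R given by the degree: the hub has R = 3.
star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
R = {v: len(nbrs) for v, nbrs in star.items()}
killed = killed_graph(star, R, 2)
```

In this example the threshold $r=2$ removes only the hub, and the 2-killed graph consists of three isolated vertices.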

Theorem 1.1. (Continuity of vertex removal.) Let R be a strictly local centrality measure and ${(G_n)}_{n\geq 1}$ a sequence of random graphs that converges locally weakly/locally in probability to $(G, o) \sim \mu$. Then $(G_n(R, r))_{n\geq 1}$ converges locally weakly/locally in probability to $(G(R, r), o)$, respectively.

Remark 1.1. (Extension to ‘almost’ local centrality measures.) We believe that the proof for Theorem 1.1 can be extended to ‘almost’ local centrality measures like PageRank, but the proof is much more involved.

Theorem 1.1 means that vertex removal with respect to a strictly local centrality threshold is continuous with respect to the local convergence.

Let $C_r(o)$ denote the connected component containing the root in the r-killed graph, $(G(R,r), o)\sim \mu$. As a corollary to Theorem 1.1, we bound the limiting value of the proportion of vertices in the giant component in $G_n(R, r)$ in probability by the survival probability of $(G(R, r), o)\sim \mu$, which is $\mu(|C_r(o)| = \infty)$.

Corollary 1.1. (Upper bound on giant.) Let $v(C_1(G_n(R, r)))$ denote the number of vertices in the giant component of $G_n(R, r)$. Under the conditions of Theorem 1.1,

for all $\varepsilon>0$, where $\zeta = \mu(|C_r(o)| = \infty).$

The intuition for Corollary 1.1 is as follows. For any integer k, the set of vertices with component sizes larger than k, if non-empty, contains the vertices in the largest connected component. Thus the proportion of vertices with component size larger than k is an upper bound for the proportion of vertices in the giant component. The former, when divided by n, is the probability that a uniformly chosen vertex has a component size greater than k, which converges due to local convergence properties of the graph sequence. Sending k to infinity gives us the required upper bound.

Let $K_n^r(R)$ denote the number of connected components in the killed graph $G_n(R, r)$. As another corollary to Theorem 1.1, we give the convergence properties for $K_n^r(R)$.

Corollary 1.2. (Number of connected components.) Under the conditions of Theorem 1.1, we have the following.

(a) If ${(G_n)}_{n\geq 1}$ converges locally in probability to $(G, o) \sim \mu$, then

\begin{equation*} \dfrac{K_n^r(R)}{n} \overset{\scriptscriptstyle\mathbb{P}}{\to} \mathbb{E}_{\mu}\biggl[\dfrac{1}{|C_r(o)|}\biggr]. \end{equation*}

(b) If ${(G_n)}_{n\geq 1}$ converges locally weakly to $(\bar{G}, \bar{o}) \sim \bar{\mu}$, then

\begin{equation*} \dfrac{\mathbb{E}[K_n^r(R)]}{n} \to \mathbb{E}_{\bar{\mu}}\biggl[\dfrac{1}{|C_r(o)|}\biggr]. \end{equation*}

The limit in Corollary 1.2 follows from the identity

where $o_n$ is a uniformly chosen vertex and $|C(v)|$ is the size of the connected component containing v. The function $f(G, v) = 1/|C(v)|$ is continuous and bounded on the metric space of rooted graphs. Thus we can apply local convergence results on this function.
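The counting fact behind this argument is that each connected component of size $s$ contributes $s$ terms equal to $1/s$, so summing $1/|C(v)|$ over all vertices $v$ gives the number of components. We assume that this is the identity intended above. A small Python check, with hypothetical names `component_sizes` and `count_components` and exact rational arithmetic, is:

```python
from fractions import Fraction


def component_sizes(adj):
    """Return {v: |C(v)|}, the size of the component containing each vertex."""
    size = {}
    for s in adj:
        if s in size:
            continue
        comp, stack = {s}, [s]
        while stack:                 # depth-first search from s
            u = stack.pop()
            for w in adj[u]:
                if w not in comp:
                    comp.add(w)
                    stack.append(w)
        for v in comp:
            size[v] = len(comp)
    return size


def count_components(adj):
    """Number of components, computed as the sum over v of 1/|C(v)|."""
    return sum(Fraction(1, s) for s in component_sizes(adj).values())


# Components {0, 1, 2}, {3}, and {4, 5}.
g = {0: [1], 1: [0, 2], 2: [1], 3: [], 4: [5], 5: [4]}
k = count_components(g)
```

Dividing the sum by the number of vertices gives the average of $1/|C(v)|$ over a uniformly chosen vertex, which is the quantity to which local convergence applies.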

1.3.2. Degree centrality and configuration model

In this section, we restrict to degree centrality in the configuration model. Before starting with our main results, we introduce the configuration model.

Configuration model. The configuration model was introduced by Bollobás [Reference Bollobás10]; see also [Reference Hofstad21, Chapter 7] and the references therein for an extensive introduction. It is one of the simplest possible models for generating a random graph with a given degree distribution. Written as $\textrm{CM}_n(\boldsymbol{d})$, it is a random graph on n vertices having a given degree sequence $\boldsymbol{d}$, where $\boldsymbol{d} = (d_1, d_2,\ldots, d_n) \in\mathbb{N}^{n}.$ The giant in the configuration model has attracted considerable attention in, for example, [Reference Bollobás and Riordan11], [Reference Hofstad22], [Reference Janson and Luczak30], [Reference Molloy and Reed36], and [Reference Molloy and Reed37]. It is also known how the tail of the limiting degree distribution influences the size of giant [Reference Deijfen, Rosengren and Trapman17]. Further, the diameter and distances in the supercritical regime have been studied in [Reference Hofstad, Hooghiemstra and Van Mieghem24], [Reference Hofstad, Hooghiemstra and Znamenski25], and [Reference Hofstad, Hooghiemstra and Znamenski26], while criteria for the graph to be simple appear in [Reference Britton, Deijfen and Martin-Löf14], [Reference Janson28], and [Reference Janson29]. In this paper we assume that the degrees satisfy the following usual conditions.
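For readers who wish to experiment, the configuration model is commonly sampled by attaching $d_v$ half-edges to each vertex $v$ and pairing all half-edges uniformly at random. The sketch below follows this standard construction; the function name `configuration_model` and the labelling of vertices by $0,\ldots,n-1$ rather than $1,\ldots,n$ are our own conventions. Self-loops and multi-edges can occur.

```python
import random


def configuration_model(degrees, rng=None):
    """Sample a multigraph with the given degrees by uniform half-edge pairing.

    Returns dict vertex -> list of neighbours. A self-loop at v puts v
    twice into its own list, so len(adj[v]) always equals degrees[v].
    """
    rng = rng or random.Random()
    half_edges = [v for v, d in enumerate(degrees) for _ in range(d)]
    if len(half_edges) % 2:
        raise ValueError("the total degree must be even")
    # Shuffling and pairing consecutive entries gives a uniform matching.
    rng.shuffle(half_edges)
    adj = {v: [] for v in range(len(degrees))}
    for a, b in zip(half_edges[::2], half_edges[1::2]):
        adj[a].append(b)
        adj[b].append(a)
    return adj


degrees = [3, 3, 2, 2, 1, 1]
sample = configuration_model(degrees, random.Random(1))
```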

Condition 1.1. (Degree conditions.) Let $\boldsymbol{d} = (d_1, d_2, \ldots , d_n)$ denote a degree sequence. Let $n_j=|\{v\colon d_v=j\}|$ denote the number of vertices with degree j. We assume that there exists a probability distribution $(p_j)_{j\geq 1}$ such that the following hold:

(a) $\lim_{n\rightarrow \infty}n_j/n= p_j$,

(b) $\lim_{n\rightarrow \infty}\sum_{j\geq 1} j n_j/n= \sum_{j\geq 1} jp_j<\infty$.

Let D be a non-negative integer-valued random variable with probability mass function $(p_j)_{j\geq 1}$. These regularity conditions ensure that the sequence of graphs converges locally in probability to a unimodular branching process, with degree distribution D. See [Reference Hofstad23, Chapter 4] for more details.

Generalised vertex removal based on degrees. We wish to study the effect of the removal of the $\alpha$ proportion of vertices with the highest or lowest degrees on the giant of the configuration model. For this, we define $\alpha$-sequences with respect to a probability mass function as follows.

Definition 1.5. ($\alpha$-sequence.) Fix $\alpha \in (0,1)$. Let $\boldsymbol{r}\;:\!=\; (r_i)_{i\geq1}$ be a sequence of elements of [0, 1] satisfying

Then $\boldsymbol{r}$ is called an $\alpha$-sequence with respect to $\boldsymbol{p} = (p_j)_{j\geq 1}$.

Suppose $(G_n)_{n\geq 1}$ is a sequence of random graphs converging locally in probability to $(G,o)\sim\mu$, and let the limiting degree distribution be D, with probability mass function $\boldsymbol{p} = (p_j)_{j\geq 1}$. Suppose $\boldsymbol{r}=(r_j)_{j\geq 1}$ is an $\alpha$-sequence with respect to $\boldsymbol{p}$; then we define vertex removal based on $\alpha$-sequences as follows.

Definition 1.6. (Vertex removal based on $\alpha$-sequences.) Remove $\lfloor n r_i p_i\rfloor$ vertices of degree i from $G_n$ uniformly at random, for each $n\geq 1$. This gives us the vertex-removed graph according to the $\alpha$-sequence $\boldsymbol{r}=(r_j)_{j\geq 1}$, denoted by $(G_{n,\boldsymbol{r}})_{n\geq 1}$.
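The removal rule of Definition 1.6 can be sketched as follows. The sequences $\boldsymbol{r}$ and $\boldsymbol{p}$ are passed as hypothetical dictionaries indexed by degree, and the function name `remove_by_alpha_sequence` is ours. For a finite graph the number of vertices of degree $i$ may be smaller than $\lfloor n r_i p_i\rfloor$, so the sketch caps the number removed at the number available; this cap is our own assumption and is not part of the definition.

```python
import math
import random


def remove_by_alpha_sequence(adj, r, p, rng=None):
    """Remove floor(n * r_i * p_i) uniformly chosen vertices of each degree i.

    adj: dict vertex -> list of neighbours; r, p: dicts degree -> value.
    """
    rng = rng or random.Random()
    n = len(adj)
    by_degree = {}
    for v, nbrs in adj.items():
        by_degree.setdefault(len(nbrs), []).append(v)
    removed = set()
    for i, vs in by_degree.items():
        target = math.floor(n * r.get(i, 0.0) * p.get(i, 0.0))
        k = min(len(vs), target)     # assumption: cap at what is available
        removed.update(rng.sample(vs, k))
    return {v: [w for w in adj[v] if w not in removed]
            for v in adj if v not in removed}


# Star on eight vertices: removing all of the degree-7 mass removes the hub.
star = {0: list(range(1, 8)), **{v: [0] for v in range(1, 8)}}
p = {1: 7 / 8, 7: 1 / 8}
r = {1: 0.0, 7: 1.0}
thinned = remove_by_alpha_sequence(star, r, p, random.Random(0))
```

Degrees are read from the original graph before any vertex is removed, which matches the wording of the definition.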

Remark 1.2. In $G_{n, \boldsymbol{r}}$, we asymptotically remove an $\alpha$ proportion of vertices because, due to Condition 1.1 and the dominated convergence theorem,

Results for the configuration model. Let $(G_n)_{n\geq1}$ be a sequence of random graphs satisfying $G_n \sim \textrm{CM}_n(\boldsymbol{d})$. Throughout the paper, we shall assume that

Indeed, for $\nu < 1$, we already know that there is no giant to start with, and it cannot appear by removing vertices.

Definition 1.7. ($\boldsymbol{r}$-set.) Suppose $\boldsymbol{r} = (r_j)_{j\geq 1}$ is an $\alpha$-sequence with respect to $\boldsymbol{p} = (p_j)_{j\geq 1}$. Let $X = [0, 1]^{\mathbb{N}}$ be the set of all sequences in [0, 1]. Define the set

An $\boldsymbol{r}$-set $S(\boldsymbol{r})$ can be thought of as the set of those sequences in $[0, 1]^{\mathbb{N}}$ which converge to $\boldsymbol{r}$ component-wise. Let $v(C_1({\boldsymbol{r}}))$ and $e(C_1({\boldsymbol{r}}))$ denote the number of vertices and edges in the giant component of $G_{n,{\boldsymbol{r}}}$. The following theorem describes the giant in $G_{n,{\boldsymbol{r}}^{\scriptscriptstyle(n)}}$.

Theorem 1.2. (Existence of giant after vertex removal.) Let $\boldsymbol{r}$ be an $\alpha$-sequence. Let $(\boldsymbol{r}^{\scriptscriptstyle(n)})_{n\geq 1}$ be a sequence from the $\boldsymbol{r}$-set $S(\boldsymbol{r})$. Then the graph $G_{n,\boldsymbol{r}^{\scriptscriptstyle(n)}}$ has a giant component if and only if $\nu_{\boldsymbol{r}}>1$, where

For $\nu_{\boldsymbol{r}}>1$,

In the above equations, ${\eta_{\boldsymbol{r}}} \in (0, 1]$ is the smallest solution of

where $\beta_{\boldsymbol{r}} = \mathbb{E}[Dr_D]+1-\alpha$ and $g_{\boldsymbol{r}}(\!\cdot\!)$ is the generating function for a random variable dependent on $\boldsymbol{r}$ given by

Remark 1.3. (Class of sequences with the same limiting proportion.) For the class of sequences which converge to a given $\alpha$-sequence component-wise, we always have the same limiting proportion of vertices/edges in the giant component. Thus, choosing our sequence $(\boldsymbol{r}^{\scriptscriptstyle(n)})_{n\geq 1}$ appropriately, it is always possible to remove an exact $\alpha$ proportion of the vertices from each $G_n$ having the same limiting graph.

We next investigate the effect of removing the $\alpha$ proportion of vertices with the highest/lowest degrees. For that we first define quantiles.

Definition 1.8. (Degree centrality quantiles.) For each $\alpha \in (0, 1)$, let $k_\alpha$, the top $\alpha$-quantile, satisfy

Similarly, for each $\alpha \in (0, 1)$, let $l_\alpha$, the bottom $\alpha$-quantile, satisfy

Definition 1.9. ($\alpha$-sequences corresponding to top and bottom removal.) Let k be the top $\alpha$-quantile for the degree distribution D. Define $\bar{\boldsymbol{r}}(\alpha)$ to have coordinates equal to zero until the kth coordinate, which is $(\alpha-\mathbb{P}(D>k))/p_k$, and ones thereafter. Then $\bar{\boldsymbol{r}}(\alpha)$ is the $\alpha$-sequence corresponding to the top $\alpha$-removal.

Similarly, let l be the lower

![]() $\alpha$

-quantile for the degree distribution D. Define

$\alpha$

-quantile for the degree distribution D. Define

![]() $\underline{\boldsymbol{r}}(\alpha)$

to have coordinates equal to one until the lth coordinate, which is

$\underline{\boldsymbol{r}}(\alpha)$

to have coordinates equal to one until the lth coordinate, which is

![]() $(\alpha-\mathbb{P}(D<l))/p_l$

, and zeros thereafter. Then

$(\alpha-\mathbb{P}(D<l))/p_l$

, and zeros thereafter. Then

![]() $\underline{\boldsymbol{r}}(\alpha)$

is the

$\underline{\boldsymbol{r}}(\alpha)$

is the

![]() $\alpha$

-sequence corresponding to the bottom

$\alpha$

-sequence corresponding to the bottom

![]() $\alpha$

-removal.

$\alpha$

-removal.
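As a concrete illustration of Definition 1.9, the following minimal sketch computes the top and bottom $\alpha$-sequences for a finitely supported degree distribution. The function names, the dictionary representation of $(p_j)_{j\geq 1}$, and the greedy formulation are assumptions made for this illustration only; the sketch also assumes that an $\alpha$-sequence removes total mass $\sum_j p_j r_j = \alpha$.

```python
from fractions import Fraction


def _greedy_alpha_sequence(p, alpha, degrees):
    """Remove mass alpha greedily, visiting degrees in the given order.

    p maps each degree j to p_j > 0. The result maps j to r_j in [0, 1],
    the fraction of degree-j vertices that is removed.
    (Hypothetical helper, not taken from the paper.)
    """
    r = {}
    remaining = alpha
    for j in degrees:
        removed = min(p[j], remaining)  # mass removed at degree j
        r[j] = removed / p[j]
        remaining -= removed
    return r


def top_alpha_sequence(p, alpha):
    # Highest degrees first: ones above the quantile, zeros below it.
    return _greedy_alpha_sequence(p, alpha, sorted(p, reverse=True))


def bottom_alpha_sequence(p, alpha):
    # Lowest degrees first: ones below the quantile, zeros above it.
    return _greedy_alpha_sequence(p, alpha, sorted(p))


# Toy degree distribution (an assumption for the example).
p = {1: Fraction(1, 2), 2: Fraction(3, 10), 3: Fraction(1, 5)}
alpha = Fraction(3, 10)
r_top = top_alpha_sequence(p, alpha)
r_bottom = bottom_alpha_sequence(p, alpha)
```

In this toy example the fractional coordinate of the top sequence sits at degree 2 and equals $(\alpha-\mathbb{P}(D>2))/p_2 = 1/3$, in agreement with the formula in Definition 1.9.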

Corollary 1.3. (Highest/lowest $\alpha$-proportion removal.) Let $\bar{\alpha}_c$ and $\underline{\alpha}_c$ be defined as

-

(1) Let $v(\bar{C}_1^\alpha)$ and $e(\bar{C}_1^\alpha)$ be the number of vertices and edges in the largest connected component of the top $\alpha$-proportion degree vertex-removed graph, respectively. If $\alpha \geq \bar{\alpha}_c$, then there is no giant component, i.e. $v(\bar{C}_1^{\alpha})/n\stackrel{\scriptscriptstyle {\mathbb P}}{\longrightarrow} 0$. If $\alpha < \bar{\alpha}_c$, then the giant exists and

\begin{equation*} \dfrac{v(\bar{C}_1^{\alpha})}{n}\overset{\scriptscriptstyle\mathbb{P}}{\to} \rho(\bar{\boldsymbol{r}}(\alpha))>0\quad \textit{and} \quad\dfrac{e(\bar{C}_1^{\alpha})}{n}\overset{\scriptscriptstyle\mathbb{P}}{\to} e(\bar{\boldsymbol{r}}(\alpha))>0. \end{equation*}

-

(2) Let $v(\underline{C}_1^\alpha)$ and $e(\underline{C}_1^\alpha)$ be the number of vertices and edges in the largest connected component of the lowest $\alpha$-proportion degree vertex-removed graph, respectively. If $\alpha \geq \underline{\alpha}_c$, then there is no giant, i.e. $v(\underline{C}_1^{\alpha})/n\stackrel{\scriptscriptstyle {\mathbb P}}{\longrightarrow} 0$. If $\alpha < \underline{\alpha}_c$, then the giant exists and

\begin{equation*} \dfrac{v(\underline{C}_1^{\alpha})}{n}\overset{\scriptscriptstyle\mathbb{P}}{\to} \rho(\underline{\boldsymbol{r}}(\alpha))>0\quad \textit{and} \quad\dfrac{e(\underline{C}_1^{\alpha})}{n}\overset{\scriptscriptstyle\mathbb{P}}{\to} e(\underline{\boldsymbol{r}}(\alpha))>0.\end{equation*}

We next give bounds on the size of the giant in the vertex-removed graph.

Theorem 1.3. (Bounds for proportion of giant.) Let ${\boldsymbol{r}}$ be an $\alpha$-sequence. Then

In the above equation, $\eta_{\boldsymbol{r}} \in (0, 1]$ satisfies (1.9). Furthermore,

and

Additionally, for $\alpha$-sequences which are positively correlated with degree, we have

Remark 1.4. $\bar{\boldsymbol{r}}(\alpha)$ is positively correlated with degree. Thus, for top $\alpha$-proportion removal (1.13), we have

The next logical question is which $\alpha$-sequence destroys the graph the most. Intuitively, the size of the giant should be the smallest if we remove the top $\alpha$-proportion degree vertices and the largest when we remove the bottom $\alpha$-proportion degree vertices, i.e. $\rho(\bar{\boldsymbol{r}}(\alpha)) \leq \rho(\boldsymbol{r}) \leq \rho(\underline{\boldsymbol{r}}(\alpha))$ and $e(\bar{\boldsymbol{r}}(\alpha)) \leq e(\boldsymbol{r}) \leq e(\underline{\boldsymbol{r}}(\alpha))$. To prove this, we first define a partial order over the set of all $\alpha$-sequences that is able to capture which vertex removal is more effective for the destruction of the giant in the configuration model. For this, we recall that, for two non-negative measures $\mu_1$ and $\mu_2$ on the real line, we say that $\mu_1$ is stochastically dominated by $\mu_2$, and write $\mu_1\preccurlyeq_{\textrm{st}} \mu_2$, if $\mu_1([K, \infty)) \leq \mu_2([K, \infty))$ for all $K \in \mathbb{R}$. We next extend this to a partial order over the set of all $\alpha$-sequences.

Definition 1.10. (Stochastically dominant sequences.) Let ${\boldsymbol{r}}=(r_j)_{j\geq 1}$ and ${\boldsymbol{r}'}=(r'_{\!\!j})_{j\geq 1}$ be $\alpha_1$- and $\alpha_2$-sequences, respectively. Then the sequences ${\boldsymbol{q}} = (p_j r_j)_{j\geq 1}$ and ${\boldsymbol{q}'} = (p_j r'_{\!\!j})_{j\geq 1}$ form finite non-negative measures. We say that $\boldsymbol{r}'$ stochastically dominates $\boldsymbol{r}$, or $\boldsymbol{r} \preccurlyeq_{\boldsymbol{p}} \boldsymbol{r}'$, if $\boldsymbol{q} \preccurlyeq_{\textrm{st}} \boldsymbol{q}'$.
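The order in Definition 1.10 can be checked numerically by comparing the upper tails of the measures $\boldsymbol{q}$ and $\boldsymbol{q}'$. The sketch below does this for a finitely supported degree distribution; the function name `is_dominated` and the toy numbers are assumptions for illustration. Since the tails only change at support points, it suffices to compare them at each degree in the support.

```python
from fractions import Fraction


def is_dominated(p, r, r_prime):
    """Return True when r is dominated by r_prime in the sense of
    Definition 1.10, i.e. sum_{j >= K} p_j r_j <= sum_{j >= K} p_j r'_j
    for every K. (Hypothetical helper for a finite support.)
    """
    tail = tail_prime = 0
    for j in sorted(p, reverse=True):  # accumulate the tails from the top
        tail += p[j] * r.get(j, 0)
        tail_prime += p[j] * r_prime.get(j, 0)
        if tail > tail_prime:
            return False
    return True


# Toy example: both sequences remove total mass alpha = 3/10.
p = {1: Fraction(1, 2), 2: Fraction(3, 10), 3: Fraction(1, 5)}
r_top = {1: Fraction(0), 2: Fraction(1, 3), 3: Fraction(1)}
r_bottom = {1: Fraction(3, 5), 2: Fraction(0), 3: Fraction(0)}

bottom_below_top = is_dominated(p, r_bottom, r_top)
top_below_bottom = is_dominated(p, r_top, r_bottom)
```

In this example the bottom-removal sequence is dominated by the top-removal sequence, but not the other way around.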

The following theorem identifies the best and worst ways to remove an $\alpha$-proportion of vertices from a configuration model, and compares other $\alpha$-sequences.

Theorem 1.4. (Comparison between $\alpha$-sequences.) Let ${\boldsymbol{r}}=(r_j)_{j\geq 1}$ and ${\boldsymbol{r}}'=(r'_{\!\!j})_{j\geq 1}$ be two $\alpha$-sequences such that ${\boldsymbol{r}}' \preccurlyeq_{\boldsymbol{p}} {\boldsymbol{r}}$. Then

As a result, the critical $\alpha$ for the bottom and top vertex removal satisfy $\underline{\alpha}_c > \bar{\alpha}_c.$

The following corollary is stronger than the theorem (as the corollary immediately implies the theorem), but it follows from the proof of Theorem 1.4.

Corollary 1.4. (General comparison.) Suppose $\boldsymbol{r},\boldsymbol{r}' \in [0, 1]^\mathbb{N}$ satisfy $\boldsymbol{r} \preccurlyeq_{\boldsymbol{p}} \boldsymbol{r}'$. Then $\rho(\boldsymbol{r}')\leq \rho(\boldsymbol{r})$ and $e(\boldsymbol{r}') \leq e(\boldsymbol{r})$.

1.4. Overview of the proof

In this section, we give an overview of the proofs. The theorems in this paper are of two types: the first concerns arbitrary strictly local centrality measures on a sequence of locally converging random graphs, whereas the second concerns degree centrality on the configuration model. We discuss these separately in the next two sections.

1.4.1. Strictly local centrality measures

In this section, we discuss the proof structure for Theorem 1.1, which involves the following steps.

-

(1) Proof that the vertex removal function with respect to a strictly local centrality measure is continuous with respect to the rooted graph topology. As a result, if a function on the set of rooted graphs is continuous, then this function composed with the function of vertex removal with respect to the strictly local centrality R is also continuous.

-

(2) Next we use the above observations and the local convergence of the sequence $(G_n)_{n\geq 1}$ to complete the proof of Theorem 1.1; Corollaries 1.1 and 1.2 are immediate consequences.

1.4.2. Configuration model and degree centrality

In this section, we discuss a construction, motivated by Janson [Reference Janson27], and how it helps in the study of vertex removal procedure on the configuration model.

Let $G = (V,E)$ be a graph. Suppose $V' \subset V$ is the set of vertices that we wish to remove. We will start by constructing an intermediate graph, which we call the vertex-exploded graph, and denote it by $\tilde{G}(V')$. To obtain $\tilde{G}(V')$ from G, we use the following steps.

-

(1) Index the vertices of the set $V'= \{v_1, v_2, \ldots, v_m\}$, where $m=|V'|$.

-

(2) Explode the first vertex from the set V′ into $d_{v_1}$ vertices of degree 1.

-

(3) Label these new degree-1 vertices red.

-

(4) Do this for each vertex of V′.

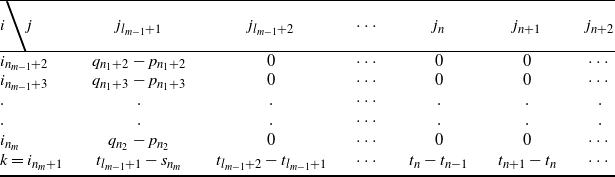

The half-edges incident to the exploded vertices are redistributed to form the half-edges incident to the red vertices. Thus, after explosion, a degree-k vertex falls apart into k degree-1 vertices. This is illustrated in Figure 1, where a degree-k vertex is exploded.
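The explosion steps above can be sketched in code as follows. This is a minimal illustration on a multigraph stored as a list of edges, not the construction used in the proofs; the function names `explode` and `remove_red` and the tuple labels for red vertices are assumptions. Because only edges are stored, vertices that become isolated are not tracked.

```python
def explode(edges, to_remove):
    """Replace every half-edge at a vertex of to_remove by a fresh red leaf.

    edges is a list of pairs (u, v); self-loops and multiple edges are
    allowed. Returns the edges of the exploded graph and the red vertices.
    """
    to_remove = set(to_remove)
    red = []

    def endpoint(x):
        # Each half-edge at an exploded vertex gets its own degree-1 vertex.
        if x in to_remove:
            leaf = ("red", len(red))
            red.append(leaf)
            return leaf
        return x

    new_edges = [(endpoint(u), endpoint(v)) for u, v in edges]
    return new_edges, red


def remove_red(edges, red):
    """Delete the red vertices together with their (single) incident edge."""
    red = set(red)
    return [(u, v) for u, v in edges if u not in red and v not in red]


# Toy graph (an assumption for the example): vertex 1 has degree 3.
edges = [(0, 1), (1, 2), (1, 3), (2, 3)]
exploded_edges, red = explode(edges, to_remove=[1])
removed_edges = remove_red(exploded_edges, red)
```

In the toy graph, vertex 1 falls apart into three red vertices of degree 1, and deleting them leaves exactly the edges of the graph with vertex 1 removed.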

Remark 1.5. (Significance of vertex explosion.) The vertex explosion ensures that the exploded graph is again a configuration model. Removing the degree-1 vertices of the exploded graph changes the structure of the components of linear size only trivially. The removal of the red vertices, which are degree-1 vertices, from the exploded graph gives the vertex-removed graph.

We denote the number of vertices of $\tilde{G}(V')$ by $\tilde{n}$ and its degree sequence by $\tilde{\boldsymbol{d}}$. The removal of red vertices, together with the (single) edge adjacent to them, from $\tilde{G}(V')$ gives the V′-removed graph. Next, we show that the vertex-exploded graph remains a configuration model, if vertices are removed with respect to their degree sequence. This means that we only look at the degree sequence to decide which vertices to remove, independently of the graph. It is this property that makes the configuration model amenable for vertex removal based on degree centrality.

Figure 1. Explosion of a degree-k vertex.

Lemma 1.1. (Exploded configuration model is still a configuration model.) Let $G_n = \textrm{CM}_n(\boldsymbol{d})$ be a configuration model with degree sequence $\boldsymbol{d} = (d_i)_{i\in [n]}$. Let $\tilde{G}_n$ be the vertex-exploded graph formed from $G_n$, where vertices are exploded with respect to the degree sequence. Then $\tilde{G}_n$ has the same distribution as $\textrm{CM}_{\tilde{n}}({\tilde{\boldsymbol{d}}}).$

Proof. A degree sequence in a configuration model can be viewed as half-edges incident to vertices. These half-edges are matched via a uniform perfect matching. Suppose one explodes a vertex of degree i in $\textrm{CM}_n(\boldsymbol{d})$. Then we still have its i half-edges, but these half-edges are now incident to i newly formed red vertices of degree 1. Since we are just changing the vertex to which these half-edges are incident, the half-edges in the vertex-exploded graph are still matched via a uniform perfect matching, so that the newly formed graph is also a configuration model, but now with $\tilde{n}$ vertices and degree sequence $\tilde{\boldsymbol{d}}$.
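To make the objects in Lemma 1.1 concrete, the sketch below builds the exploded degree sequence $\tilde{\boldsymbol{d}}$ and samples a configuration model by pairing half-edges through a uniform shuffle. The function names and the toy degree sequence are assumptions for illustration; the sampler is the standard half-edge pairing and is not code from the paper.

```python
import random


def exploded_degree_sequence(degrees, to_explode):
    """Degree sequence after exploding the listed vertices.

    Kept vertices retain their degree; an exploded vertex of degree d
    contributes d red vertices of degree 1 (listed last).
    """
    to_explode = set(to_explode)
    kept = [d for v, d in enumerate(degrees) if v not in to_explode]
    red = [1] * sum(degrees[v] for v in to_explode)
    return kept + red


def sample_configuration_model(degrees, rng):
    """Pair the half-edges via a uniformly random perfect matching."""
    half_edges = [v for v, d in enumerate(degrees) for _ in range(d)]
    if len(half_edges) % 2:
        raise ValueError("the total degree must be even")
    rng.shuffle(half_edges)
    # Consecutive half-edges in the shuffled list form the edges.
    return list(zip(half_edges[0::2], half_edges[1::2]))


degrees = [3, 2, 2, 1]  # toy degree sequence (assumption)
tilde_d = exploded_degree_sequence(degrees, to_explode=[0])
edges = sample_configuration_model(tilde_d, random.Random(0))
```

The total number of half-edges is unchanged by the explosion, while the number of vertices changes from $n$ to $\tilde{n} = n - m + \sum_{v\in V'} d_v$.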

This lemma is useful because it allows us to apply the following result of Janson and Luczak [Reference Janson and Luczak30] on the giant component of a configuration model.

Theorem 1.5. (Janson and Luczak [Reference Janson and Luczak30].) Consider $\textrm{CM}_n(\boldsymbol{d})$ satisfying regularity Condition 1.1 with limiting degree distribution D. Let $g_{D}(x) \;:\!=\; \sum_{j} p_j x^j$ be the probability generating function of D. Assume $p_1>0$.

-

(i) If $\mathbb{E}[D(D-2)]>0$, then there exists a unique $\eta \in (0, 1)$ such that $g'_{\!\!D}(\eta) = \mathbb{E}[D] \eta$ and

-

(a) ${{v(C_1)}/{n}} \overset{\scriptscriptstyle\mathbb{P}}{\to} 1 - g_D(\eta)$,

-

(b) ${{v_j(C_1)}/{n}} \overset{\scriptscriptstyle\mathbb{P}}{\to} p_j(1 - \eta ^{j})$ for all $j\geq 0 $,

-

(c) ${{e(C_1)}/{n}} \overset{\scriptscriptstyle\mathbb{P}}{\to} ({{\mathbb{E}[D]}/{2}})(1-\eta^2)$.

-

(ii) If $\mathbb{E}[D(D-2)] \leq 0$, then ${{v(C_1)}/{n}}\overset{\scriptscriptstyle\mathbb{P}}{\to}0$ and ${{e(C_1)}/{n}} \overset{\scriptscriptstyle\mathbb{P}}{\to} 0 $.

Here $C_1$ denotes the largest component of $\textrm{CM}_n(\boldsymbol{d})$.
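The limits in Theorem 1.5 are easy to evaluate numerically for a finitely supported degree distribution. The sketch below solves $g'_{\!\!D}(\eta) = \mathbb{E}[D]\eta$ by fixed-point iteration started at 0, which increases to the smallest solution in $[0, 1]$. The function name `giant_limits`, the tolerance, and the toy distribution are assumptions for illustration, and convergence can be slow close to criticality.

```python
def giant_limits(p, tol=1e-12, max_iter=100_000):
    """Return (eta, rho, e) for a degree distribution p = {j: p_j}.

    rho = 1 - g_D(eta) and e = (E[D] / 2) * (1 - eta^2), as in
    Theorem 1.5(i); in the case E[D(D-2)] <= 0 there is no giant.
    (Hypothetical helper, assuming finite support and p_1 > 0.)
    """
    mean = sum(j * pj for j, pj in p.items())
    if sum(j * (j - 2) * pj for j, pj in p.items()) <= 0:
        return 1.0, 0.0, 0.0

    eta = 0.0
    for _ in range(max_iter):
        # One step of eta <- g_D'(eta) / E[D].
        new_eta = sum(j * pj * eta ** (j - 1)
                      for j, pj in p.items() if j >= 1) / mean
        converged = abs(new_eta - eta) < tol
        eta = new_eta
        if converged:
            break

    rho = 1.0 - sum(pj * eta ** j for j, pj in p.items())
    e = 0.5 * mean * (1.0 - eta ** 2)
    return eta, rho, e


# Toy example: half of the vertices have degree 1 and half have degree 3.
eta, rho, e = giant_limits({1: 0.5, 3: 0.5})
```

For this toy distribution the equation reads $0.5 + 1.5\eta^2 = 2\eta$, whose root in $(0, 1)$ is $\eta = 1/3$, giving $\rho = 22/27$ and $e = 8/9$.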

Intuition. The local limit of the configuration model is a unimodular branching process with root offspring distribution $(p_k)_{k\geq1}$. A unimodular branching process is a branching process in which the offspring distribution of future generations, generations coming after the root, is given by the size-biased distribution of the root’s offspring distribution minus one, denoted by $(p^*_k)_{k\geq 1}$, that is,

In terms of this local limit, we can interpret the limits in Theorem 1.5 as follows. First, $\eta$ is the extinction probability of a half-edge. We think of this as the extinction probability of a half-edge in the configuration model. Then the proportion of surviving degree-k vertices is given by $p_k(1-\eta^k)$, which is the proportion of degree-k vertices times the probability that one of its k half-edges survives as in Theorem 1.5(i(b)). Summing this over all possible values of k gives Theorem 1.5(i(a)). Then $\eta$, the extinction probability of the root of the branching process when it has degree 1, satisfies the equation

Similarly, for Theorem 1.5(i(c)), an edge does not survive when both its half-edges go extinct, which occurs with probability $\eta^2$. Thus

where $\ell_n = \sum_{v}d_v$ is the number of half-edges. Thus $\lim_{n\to \infty} \ell_n/n =\mathbb{E}[D]$, due to Condition 1.1(b).

Using this theorem on the vertex-exploded graph, we obtain the size of the giant (vertices and edges). Removal of the red vertices in the vertex-exploded graph gives the required vertex-removed graph. This fact is used to complete the proof of Theorem 1.2. The remaining results are proved by carefully investigating the limiting proportion of vertices and edges in the giant, and how they relate to the precise $\alpha$-sequence.

1.5. Discussion and open problems

In this section, we discuss the motivation of this paper and some open problems. The problem of vertex removal with respect to centrality measures was motivated by Mocanu et al. [Reference Mocanu, Exarchakos and Liotta35], which showed by simulation how the size of the giant and the number of connected components behave for vertex removal, based on different centrality measures. These plots were used to compare the effectiveness of these centrality measures.

A related problem is whether we can compare the size of giants in configuration models if their limiting degree distributions have some stochastic ordering. This question can be answered using the notion of $\varepsilon$-transformation as discussed in Definition 3.1 below. Let $\rho_{\scriptscriptstyle\textrm{CM}}(\boldsymbol{p})$ denote the size of the giant in the configuration model when the limiting degree distribution is $\boldsymbol{p}$.

Theorem 1.6. (Stochastic ordering and giant in configuration model.) If $\boldsymbol{p}\preccurlyeq_{\textrm{st}} \boldsymbol{q},$ then $\rho_{\scriptscriptstyle\textrm{CM}}(\boldsymbol{p}) \leq \rho_{\scriptscriptstyle\textrm{CM}}(\boldsymbol{q})$.

A similar result is proved in [Reference Leskelä and Ngo33] for increasing concave ordering (a stochastic order different from the one used in this paper), but it requires a strong additional assumption that we can remove.

Open problems. We close this section with some related open problems.

-

(1) What is the size of the configuration model giant for the vertex-removed graph in the case of strictly local centrality measures? Which strictly local centrality measure performs the best? Can we extend this to non-local centrality measures?

-

(2) Strictly local approximations to non-local centrality measures. PageRank can be very well approximated by the strictly local approximation in (1.3). Can betweenness, closeness, and other important centrality measures also be well approximated by strictly local centrality measures? What graph properties are needed for such an approximation to be valid? Is it true on configuration models, as predicted for closeness centrality in [Reference Evans and Chen18] and suggested for both closeness and betweenness in [Reference Hinne20]? If so, then one can use these strictly local centrality measures for vertex removal procedures.

-

(3) Stochastic monotonicity for the number of connected components. If one starts removing vertices from a graph, the number of connected components first increases and then decreases. Where does it start decreasing?

-

(4) Extension of results to directed graphs. Can we extend the results of this paper to directed graphs? For directed graphs, there are several notions of connectivity, and vertices have two degrees, making degree centrality less obvious.

2. Proofs: strictly local centrality measures

In this section, we define local convergence of random graphs and then prove the results stated in Section 1.3.1.

2.1. Local convergence of undirected random graphs

To define local convergence, we introduce isomorphisms of rooted graphs, a metric space on them, and finally convergence in it. For more details, refer to [Reference Hofstad23, Chapter 2].

Definition 2.1. (Rooted (multi)graph, rooted isomorphism, and neighbourhood.)

-

(1) We call a pair (G,o) a rooted (multi)graph if G is a locally finite, connected (multi)graph and o is a distinguished vertex of G.

-

(2) We say that two multigraphs $G_1$ and $G_2$ are isomorphic, written as $G_1 \cong G_2$, if there exists a bijection $\phi \colon V(G_1) \to V(G_2)$ such that for any $v, w \in V(G_1)$, the number of edges between v, w in $G_1$ equals the number of edges between $\phi(v), \phi(w)$ in $G_2$.

-

(3) We say that the rooted (multi)graphs $(G_1, o_1)$ and $(G_2, o_2)$ are rooted isomorphic if there exists a graph-isomorphism between $G_1$ and $G_2$ that maps $o_1$ to $o_2$.

-

(4) For $r \in \mathbb{N}$, we define $B_{r}^{\scriptscriptstyle(G)}(o)$, the (closed) r-ball around o in G or r-neighbourhood of o in G, as the subgraph of G spanned by all vertices of graph distance at most r from o. We think of $B_{r}^{\scriptscriptstyle(G)}(o)$ as a rooted (multi)graph with root o.
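The r-ball of Definition 2.1(4) can be computed by a breadth-first search truncated at depth r. The following is a minimal sketch under the assumption that the (multi)graph is stored as a dictionary of adjacency lists, with repeated entries encoding multiple edges; the function name `ball` is hypothetical.

```python
from collections import deque


def ball(adj, root, r):
    """Return the r-ball around root as an adjacency dictionary.

    adj maps each vertex to the list of its neighbours. The result is the
    subgraph induced by all vertices at graph distance at most r from root.
    """
    dist = {root: 0}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        if dist[u] == r:
            continue  # do not explore beyond distance r
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    inside = set(dist)
    return {u: [w for w in adj[u] if w in inside] for u in inside}


# Toy example: the path 0 - 1 - 2 - 3.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
ball_0_1 = ball(path, 0, 1)
ball_1_1 = ball(path, 1, 1)
```

Comparing such balls up to rooted isomorphism for increasing r is what the metric on rooted graphs in the next definition is built on.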

We next define a metric space over the set of rooted (multi)graphs.

Definition 2.2. (Metric space on rooted (multi)graphs.) Let $\mathcal{G}_\star$ be the space of all rooted (multi)graphs. Let $(G_1, o_1)$ and $(G_2, o_2)$ be two rooted (multi)graphs. Let

The metric $d_{\scriptscriptstyle \mathcal{G}_\star}\colon \mathcal{G}_\star \times \mathcal{G}_\star \to \mathbb{R}_{\geq 0}$ is defined by

We next define local weak convergence and local convergence in probability for any sequence from the space of rooted random graphs.

Definition 2.3. (Local weak convergence of rooted (multi)graphs.) A sequence of random (multi)graphs $(G_n)_{n\geq 1}$ is said to converge locally weakly to the random rooted graph (G, o), a random variable taking values in $\mathcal{G}_\star$, having law $\mu$, if, for every bounded continuous function $f\colon \mathcal{G}_\star \to \mathbb{R}$, as $n\to \infty$,

where $o_n$ is a uniformly chosen vertex from the vertex set of $G_n$.

Definition 2.4. (Local convergence in probability of rooted (multi)graphs.) A sequence of random (multi)graphs $(G_n)_{n\geq 1}$ is said to converge locally in probability to the random rooted graph (G, o), a random variable taking values in $\mathcal{G}_\star$, having law $\mu$, when, for every $r > 0$, and for every $(H, o') \in \mathcal{G}_\star$, as $n\to \infty$,

2.2. Local convergence proofs

In this section, we prove the results stated in Section 1.3.1. Let us take a strictly local centrality measure R. This means that it only depends on a neighbourhood of fixed finite radius, say $N\in \mathbb{N}$. The following lemma shows that the map $(G, o)\to (G(R, r), o)$ is continuous.

Lemma 2.1. (Continuity of centrality-based vertex removal.) Let R be a strictly local centrality measure and let $h\colon \mathcal{G}_\star \to \mathbb{R}$ be a continuous bounded map, where $\mathcal{G}_\star$ is the space of rooted graphs, with the standard metric, d, as in Definition 2.2. Fix $r>0$. Suppose $f\colon \mathcal{G}_\star \to \mathbb{R}$ satisfies

Then $f(\!\cdot\!)$ is a bounded continuous function.

Proof. $f(\!\cdot\!)$ is bounded because $h(\!\cdot\!)$ is bounded. Thus we only need to show that $f(\!\cdot\!)$ is continuous, that is, for all $\varepsilon > 0$, there exists $\delta > 0$ such that

Recall that $d_{\scriptscriptstyle\mathcal{G}_\star}$ denotes the metric on $\mathcal{G}_\star$. By continuity of $h(\!\cdot\!)$, for all $\varepsilon > 0$, there exists $\delta' > 0$ such that

Thus it is enough to show that, for all $\delta'>0$, there exists $\delta>0$, such that

Let

Then we claim that (2.1) holds. Here we have used the definition of metric space over rooted graphs along with the fact that for all $l\geq 0$

This is because the centrality measure R(v) only depends on $B_N^{\scriptscriptstyle(G)}(v)$. This proves the lemma.

Proof of Theorem

1.1. Suppose that

![]() $(G_n, o_n)$

converges locally weakly to

$(G_n, o_n)$

converges locally weakly to

![]() $(G, o) \sim \mu$

, which means that for all continuous and bounded functions

$(G, o) \sim \mu$

, which means that for all continuous and bounded functions

![]() $f \colon \mathcal{G}_\star \to \mathbb{R}$

,

$f \colon \mathcal{G}_\star \to \mathbb{R}$

,

Using

![]() $f(\!\cdot\!)$

from Lemma 2.1 in (2.2), we get

$f(\!\cdot\!)$

from Lemma 2.1 in (2.2), we get

Thus $(G_n(R, r), o_n)$ converges locally weakly to $(G(R, r), o)$. Similarly, suppose that $(G_n, o_n)$ converges locally in probability to $(G, o) \sim \mu$, which means that for all continuous and bounded functions $f \colon \mathcal{G}_\star \to \mathbb{R}$,

Using $f(\!\cdot\!)$ from Lemma 2.1 in (2.3), we get

Thus $(G_n(R, r), o_n)$ converges locally in probability to $(G(R, r), o)$.

Proof of Corollaries 1.1 and 1.2. These proofs follow immediately from the upper bound on the giant given in [Reference Hofstad23, Corollary 2.28], and the convergence of the number of connected components in [Reference Hofstad23, Corollary 2.22].

3. Proofs: Degree centrality and configuration model

In this section, we restrict ourselves to the configuration model and investigate all possible $\alpha$-proportion vertex removals that can be performed with respect to degree centrality, and then compare them on the basis of the size of the giant. For two sequences $(f(n))_{n\geq 1}$ and $(g(n))_{n \geq 1}$, we write $f(n) = o(g(n))$ when $\lim_{n\to \infty}f(n)/g(n) = 0$.

3.1. Giant in vertex-removed configuration model: Proof of Theorem 1.2

For the proof we use the vertex explosion construction as in Section 1.4.2. We apply the following steps on $G_n$.

-

(1) Choose $\lfloor n_k r_k^{\scriptscriptstyle(n)}\rfloor$ vertices uniformly from the set of vertices of degree $k$ and explode them into $k$ vertices of degree 1.

-

(2) Label these newly formed degree-1 vertices red.

-

(3) Repeat this for each $k\in\mathbb{N}$.

This newly constructed graph is $\tilde{G}(V')$ from Section 1.4.2 for an appropriately chosen $V'$. We shall denote it by $\tilde{G}_n$. Let $\tilde{n}_k$, $\tilde{n}$ and $n_+$ denote the number of degree-$k$ vertices, total number of vertices, and number of vertices with a red label in the vertex-exploded graph $\tilde{G}_n$, respectively. Then

\begin{align} \tilde{n}_i &= \begin{cases} n_+ +n_1-\lfloor n_1 r^{\scriptscriptstyle(n)}_1 \rfloor & \text{for }i = 1, \\[5pt] n_i - \lfloor n_i r^{\scriptscriptstyle(n)}_i \rfloor & \text{for }i\neq 1, \end{cases} \end{align}

\begin{align} \tilde{n} = \sum_{i=1}^{\infty} \tilde{n}_i & = n + \sum_{i=1}^{\infty}(i-1) \lfloor n_i r^{\scriptscriptstyle(n)}_i\rfloor = n\Biggl(1+\sum_{i=1}^{\infty}(i-1) \dfrac{\lfloor n_i r^{\scriptscriptstyle(n)}_i\rfloor}{n}\Biggr) .\end{align}
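The bookkeeping behind these counts can be checked on a toy example. The following Python sketch is illustrative only: the function name `explode_degree_counts` and the toy inputs are assumptions, not part of the paper. It relies on the fact that each exploded degree-$k$ vertex contributes $k$ red vertices of degree 1, so that $n_+ = \sum_{k} k \lfloor n_k r_k^{\scriptscriptstyle(n)}\rfloor$.

```python
from fractions import Fraction
from math import floor


def explode_degree_counts(n_k, r_k):
    """Degree counts after vertex explosion (hypothetical helper).

    n_k: dict mapping degree k -> number of degree-k vertices in G_n.
    r_k: dict mapping degree k -> fraction of degree-k vertices to explode.
    Returns (tilde_n_k, n_plus, tilde_n).
    """
    # Number of exploded vertices of each degree: floor(n_k * r_k).
    exploded = {k: floor(n_k[k] * r_k.get(k, Fraction(0))) for k in n_k}
    # Every exploded degree-k vertex becomes k red vertices of degree 1.
    n_plus = sum(k * m for k, m in exploded.items())
    # Surviving vertices keep their degree; red vertices are added to degree 1.
    tilde_n_k = {k: n_k[k] - exploded[k] for k in n_k}
    tilde_n_k[1] = tilde_n_k.get(1, 0) + n_plus
    tilde_n = sum(tilde_n_k.values())
    return tilde_n_k, n_plus, tilde_n


# Toy input (an assumption for illustration): n = 20 vertices.
n_k = {1: 4, 2: 6, 3: 10}
r_k = {1: Fraction(0), 2: Fraction(1, 2), 3: Fraction(3, 10)}
tilde_n_k, n_plus, tilde_n = explode_degree_counts(n_k, r_k)

# Right-hand side of the identity for the total number of vertices.
n = sum(n_k.values())
rhs = n + sum((k - 1) * floor(n_k[k] * r_k[k]) for k in n_k)
```

Exact fractions are used for the removal proportions so that the floor is not affected by floating-point rounding. In this toy case `tilde_n` and `rhs` agree, as the displayed identity requires.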

Then, by applying the generalised dominated convergence theorem on (3.2) and (3.3),

Recall that $\beta_{\boldsymbol{r}} = \mathbb{E}[D r_D] + 1 - \alpha$. Due to (3.1) and (3.4),

\begin{equation} \dfrac{\tilde{n}_i}{n} = \begin{cases} \mathbb{E}[D r_D] +p_1(1-r_1)+ o(1) & \text{for }i = 1, \\[5pt] p_i(1-r_i)+ o(1) & \text{for }i\neq 1. \end{cases}\end{equation}

We define

\begin{equation*} \tilde{p}_i \;:\!=\; \begin{cases} \beta_{\boldsymbol{r}}^{-1}(\mathbb{E}[D r_D] +p_1(1-r_1)) & \text{for }i = 1, \\[5pt] \beta_{\boldsymbol{r}}^{-1}p_i(1-r_i) & \text{for }i\neq 1. \end{cases} \end{equation*}

Then, by (3.5), for every $i\geq 1$,

Let $\tilde{D}$ be a random variable with probability mass function $(\tilde{p}_j)_{j\geq 1}$.

By Lemma 1.1, $\tilde{G}_{n}$ is also a configuration model. Equation (3.6) shows that the regularity Condition 1.1(a) holds for $\tilde{\boldsymbol{d}}$. Similarly, Condition 1.1(b) also holds. Using Theorem 1.5, the giant in $\tilde{G}_{n}$ exists if and only if $\mathbb{E}[\tilde{D}(\tilde{D}-2)] > 0$. We compute

\begin{align} \mathbb{E}[\tilde{D}(\tilde{D}-2)]& = \dfrac{1}{\beta_{\boldsymbol{r}}}\sum_{j\geq 1}j(j-2)p_j(1-r_j) -\dfrac{1}{\beta_{\boldsymbol{r}}}\mathbb{E}[Dr_D]\notag \\[5pt] &= \dfrac{1}{\beta_{\boldsymbol{r}}}\mathbb{E}[D(D-2)(1-r_D)]- \dfrac{1}{\beta_{\boldsymbol{r}}}\mathbb{E}[Dr_D]\notag \\[5pt] &=\dfrac{1}{\beta_{\boldsymbol{r}}}\mathbb{E}[D(D-1)(1-r_D)]- \dfrac{1}{\beta_{\boldsymbol{r}}}\mathbb{E}[D]. \end{align}
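As a sanity check on this computation, the two ends of the chain of equalities can be compared numerically. The Python sketch below is only an illustration under stated assumptions: the helper `tilde_pmf` and the toy values of $(p_k)$ and $(r_k)$ are hypothetical, and $\alpha$ is taken to be $\mathbb{E}[r_D]$, the limiting proportion of removed vertices, which is what makes $(\tilde{p}_j)_{j\geq 1}$ sum to one.

```python
from fractions import Fraction as F


def tilde_pmf(p, r):
    """Build (tilde p_j) from a degree pmf p and removal proportions r.

    Hypothetical helper; alpha = E[r_D] is an assumption of this sketch.
    """
    e_d_rd = sum(k * p[k] * r[k] for k in p)   # E[D r_D]
    alpha = sum(p[k] * r[k] for k in p)        # E[r_D], assumed equal to alpha
    beta = e_d_rd + 1 - alpha                  # beta_r = E[D r_D] + 1 - alpha
    tp = {k: p[k] * (1 - r[k]) / beta for k in p}
    tp[1] = tp.get(1, F(0)) + e_d_rd / beta    # red vertices all have degree 1
    return tp, beta


# Toy degree distribution and removal proportions (assumptions).
p = {1: F(1, 5), 2: F(3, 10), 3: F(1, 2)}
r = {1: F(0), 2: F(1, 2), 3: F(3, 10)}
tp, beta = tilde_pmf(p, r)

# Left-hand side: E[tilde D (tilde D - 2)] computed directly from tilde p.
lhs = sum(k * (k - 2) * tp[k] for k in tp)

# Right-hand side: (E[D(D-1)(1 - r_D)] - E[D]) / beta_r.
e_dd1 = sum(k * (k - 1) * p[k] * (1 - r[k]) for k in p)
e_d = sum(k * p[k] for k in p)
rhs = (e_dd1 - e_d) / beta
```

With exact rational arithmetic the two sides coincide for this toy input, and the sign of the common value is what decides whether $\mathbb{E}[\tilde{D}(\tilde{D}-2)] > 0$ holds.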

Recall $\nu_{\boldsymbol{r}}$ from (1.6). By (3.7),

Thus $\tilde{G}_{n}$ has a giant if and only if $\nu_{\boldsymbol{r}}>1$. Recall that the removal of red vertices (having degree 1) from $\tilde{G}_n$ gives $G_{n,\boldsymbol{r}^{\scriptscriptstyle(n)}}$