Antibiotics are among the most commonly prescribed medications, and evidence exists to guide their optimal use, for both treatment and prophylaxis, in most situations encountered in clinical medicine. Nevertheless, clinicians routinely prescribe antibiotics in ways that diverge from this evidence, such as prescribing them when they are not indicated, prescribing them for longer than necessary, or selecting broad-spectrum agents when a narrower-spectrum agent would suffice [1,2]. This inappropriate use of antibiotics contributes to the public health crisis of antibiotic resistance while exposing patients to avoidable antibiotic-related harms, such as adverse drug events and Clostridioides difficile infection.

Antibiotic stewardship refers to the coordinated effort to improve how clinicians prescribe antibiotics. To achieve this goal, stewardship programs use a range of practices, such as prospective audit and feedback, prior authorization, education, clinical decision support, and rapid diagnostics [3]. Implementing antibiotic stewardship can be challenging because it involves changing the knowledge, deeply held attitudes, cultural norms, and emotionally influenced behaviors of clinicians and patients with respect to antibiotic prescribing and use. The implementation process may also vary by healthcare setting, by the prescribing practice that needs to be improved, and by the stakeholders involved. Although those who participate in antibiotic stewardship know this from experience, we have a limited understanding of how each of these factors can be addressed to increase the likelihood of implementation success [4].

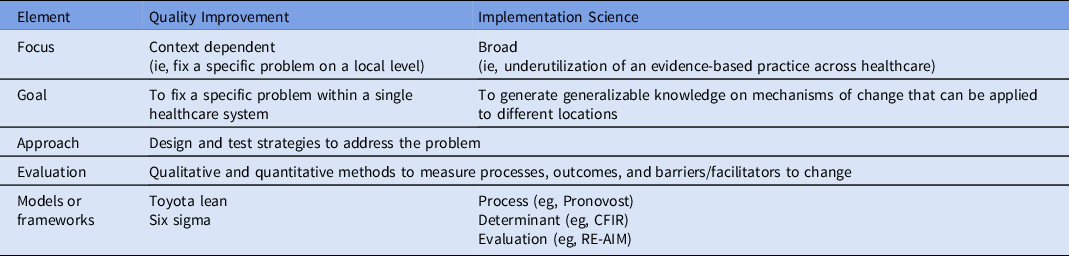

Implementation science can help address this research gap. Implementation science is “the scientific study of methods to promote the systematic uptake of proven clinical treatments, practices, organizational and management interventions into routine practice, and hence to improve health” [5]. The field arose from the growing recognition of how difficult it is to translate research findings into routine practice [6]. Implementation science is distinct from quality improvement, although the 2 fields share much in common (Table 1). In this white paper, we discuss how to apply key implementation science principles and methods to studies of antibiotic stewardship. The paper is intended as a primer on conducting implementation research in antibiotic stewardship, and it also demonstrates how implementation science principles can inform local stewardship efforts.

Table 1. Comparison of Quality Improvement and Implementation Science

Note. CFIR, Consolidated Framework for Implementation Research; RE-AIM, Reach, Effectiveness, Adoption, Implementation, Maintenance framework.

Here, we take the reader through the steps involved in designing and conducting an implementation research study while introducing and defining key implementation science principles. We describe the importance of establishing the evidence–practice gap to be addressed in a study. We then introduce 2 bedrock concepts within implementation science (implementation strategies and frameworks) and discuss how to think about them in relation to antibiotic stewardship. We argue that practices historically defined as antibiotic stewardship strategies, such as prospective audit and feedback and prior authorization, can also be described as implementation strategies. We discuss important preimplementation activities, including engaging stakeholders, understanding the reasons for the evidence–practice gap, and selecting implementation strategies. Finally, we describe how to evaluate the implementation process. The sections follow the general order of events in planning and executing a study; however, implementation research is rarely linear, and earlier decisions often need to be revisited as new information is gained during the research.

Identifying the evidence–practice gap

Implementation science is the systematic study of methods to promote the uptake of proven approaches to improve health outcomes; thus, implementation research projects must clearly identify the evidence-based clinical treatment, practice, organizational, or management intervention that is the focus of the implementation effort. Within antibiotic stewardship, the evidence-based practice will typically be some aspect of antibiotic prescribing (eg, the decision to initiate antibiotic therapy, antibiotic selection, or antibiotic duration).

Implementation science focuses on generating knowledge about how to improve health outcomes by narrowing the gap between what we know works to promote health (ie, an evidence-based practice) and how we actually make it work (ie, how we deliver the evidence-based practice in routine, real-world settings) [7,8]. Therefore, it is critical to establish whether your project addresses a gap between evidence-based optimal practice and the current status quo [9]. Establishing that such a gap exists requires data from either the local setting or the extant research literature [10].

Identifying this gap in antibiotic stewardship should begin with documenting whether antibiotic prescribing in a clinical setting is consistent with the best available evidence. Although clear and reproducible definitions of unnecessary or suboptimal antibiotic prescribing are still needed, a helpful framework is the “5 D’s” of optimal antibiotic therapy: diagnosis, drug, dose, duration, and de-escalation [4,11,12]. Gaps between how antibiotics should be used and how they are actually used can occur at any of these steps along the prescribing pathway [13].

Data demonstrating these gaps could include (1) clinician- or site-level use of antibiotics for conditions that do not require them, (2) excessive durations of antibiotic therapy for uncomplicated conditions in which short-course therapy has been shown to be safe, (3) high rates of broad-spectrum antibiotic use when narrow-spectrum drugs would be equally effective, or (4) composite proportions of suboptimal antibiotic prescribing, defined according to evidence-based guidelines for common clinical conditions.
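As a minimal sketch of the first type of gap data, the Python below computes, for each clinician, the share of visits for conditions that do not require antibiotics at which an antibiotic was nonetheless prescribed. It assumes every visit has already been classified against an evidence-based guideline (the hypothetical `antibiotic_indicated` flag); the `Visit` record, its field names, and the sample data are illustrative only and are not drawn from any specific data source.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    clinician_id: str            # hypothetical clinician identifier
    antibiotic_indicated: bool   # assumed pre-classified against a guideline
    antibiotic_prescribed: bool


def unnecessary_prescribing_rate(visits):
    """Per clinician: share of visits for antibiotic-nonindicated conditions
    (eg, viral respiratory infections) at which an antibiotic was prescribed."""
    eligible = Counter()
    prescribed = Counter()
    for v in visits:
        if v.antibiotic_indicated:
            continue  # denominator = only visits where no antibiotic is needed
        eligible[v.clinician_id] += 1
        if v.antibiotic_prescribed:
            prescribed[v.clinician_id] += 1
    return {c: prescribed[c] / n for c, n in eligible.items()}


# Hypothetical visits
visits = [
    Visit("A", False, True),
    Visit("A", False, False),
    Visit("A", True, True),   # indicated visit: excluded from the denominator
    Visit("B", False, False),
    Visit("B", False, False),
]
print(unnecessary_prescribing_rate(visits))  # {'A': 0.5, 'B': 0.0}
```

Restricting the denominator to nonindicated visits keeps the metric from being diluted by clinicians who simply see more patients with bacterial infections.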

Implementation strategies defined

The primary focus of implementation science is the rigorous evaluation of “implementation strategies.” An implementation strategy is a method or technique used to enhance the adoption, implementation, and sustainability of an evidence-based practice by adapting it to fit the local context [14]. Implementation strategies can take many forms. A review by Waltz et al [15] classified 73 discrete implementation strategies into 9 broad categories (Table 2). Others have categorized implementation strategies broadly as “top down–bottom up,” “push–pull,” or “carrot–stick” approaches [16].

Table 2. Implementation Strategies

a Examples of audit-and-feedback include “handshake stewardship,” a form of postprescription audit and review, and leveraging peer-to-peer comparisons to give retrospective feedback.

b An antibiogram is one example of capturing and sharing local knowledge, as it aggregates local antibiotic resistance data into a single tool.

c Institutional guidelines on antibiotic prescribing are often developed by a local, multidisciplinary group of experts, which includes the stewardship team. Antibiotic stewardship committees often draw upon a variety of disciplines to garner broad support for stewardship activities.

d Clinicians can be reminded in a number of ways, such as antibiotic time-outs or order sets that prompt clinicians to choose a guideline-concordant antibiotic regimen.

e For example, antibiotic stewardship personnel may call clinicians with the results of rapid diagnostic tests and use this conversation as an opportunity to provide real-time antibiotic treatment recommendations.

f Hospitals frequently mandate that certain antibiotics receive prior authorization before initiation.

g A hospital’s antibiotic stewardship expertise can be centralized in a stewardship team, eg, an infectious disease–trained pharmacist and physician.

h In ambulatory care, patients and families are often educated about when antibiotics are unnecessary. Shared decision making is another way to involve patients in the antibiotic-prescribing process; this has been used to decrease unnecessary antibiotic treatment for acute respiratory tract infections.

In simple terms, the evidence-based practice is “the thing” that prior studies have shown to be effective in improving health or minimizing harm [17]. The implementation strategy is what is done “to try to help people and places ‘do the thing.’” If the evidence-based practice is some aspect of antibiotic prescribing, then the implementation strategy is what helps clinicians adopt this practice into their routine prescribing behavior. Many commonly used and proven antibiotic stewardship practices, such as audit and feedback, education, commitment posters, electronic medical record–based prompts, and the development of local consensus guidelines, are examples of implementation strategies that can be used alone or in combination to encourage clinicians to prescribe antibiotics in an evidence-based manner.

Choosing an implementation science framework

All research on implementing evidence-based antibiotic stewardship practices should use an implementation science framework. Implementation frameworks are grounded in theory and are sometimes referred to as “models.” In-depth discussions of the technical differences among theories, models, and frameworks can be found elsewhere [18,19].

Frameworks, which are typically summarized in a single diagram, provide a systematic approach to developing, managing, and evaluating the implementation process. They clarify assumptions about the multiple interrelated factors that influence implementation (eg, individuals, units, and organizations), and they focus attention on essential change processes. By standardizing concepts, relationships, and definitions, implementation frameworks provide a common language, and studies that use established frameworks contribute more efficiently to generalizable knowledge [20].

The choice of an implementation framework should be informed by the research question, the implementation strategies that the project plans to use, and the stage of program development and evaluation. Consulting someone with a working knowledge of implementation science frameworks and methods is highly recommended. In general, implementation frameworks fall into 1 of 3 categories: process, determinant, or evaluation frameworks [18,19]. An online resource for identifying an appropriate framework is available at https://dissemination-implementation.org; this web tool was developed collaboratively by 3 universities in the United States [21].

Process frameworks provide a how-to guide for planning and executing implementation initiatives. These frameworks are meant to be practical and often include a stepwise sequence of implementation stages. Three examples of process frameworks are the Knowledge to Action framework, the Iowa model, and Pronovost’s 4E process theory [22–24].

1. The Knowledge to Action framework describes how new knowledge is created and how it is applied and adapted to the local context [22]. In Cameroon, the Knowledge to Action framework was used to guide the successful national implementation of a surgical safety checklist [25].

2. The Iowa model, developed 25 years ago by nurses and revised in 2017, provides users with a pragmatic flow diagram depicting the steps and decision points in moving from the realization that a practice change is needed to the integration and sustainment of that change [23,26].

3. Pronovost’s 4E process theory moves through the stages of summarizing the available evidence, identifying barriers to implementation, measuring current performance, and targeting key stakeholders with the 4Es of implementation: engage, educate, execute, and evaluate [24].

Determinant frameworks describe factors that may impede or enable the implementation process. These factors are often referred to as “barriers” and “facilitators.” Determinant frameworks can be helpful during preimplementation planning to inform the optimal approach to implementation. They can also be used in hindsight to understand why implementation succeeded or failed. Three examples of determinant frameworks are the Consolidated Framework for Implementation Research (CFIR), the integrated Promoting Action on Research Implementation in Health Services (i-PARIHS) framework, and the Theoretical Domains Framework (TDF) [27–29].

1. The CFIR comprises 5 domains that can influence the implementation of interventions: intervention characteristics, outer setting, inner setting, characteristics of individuals, and process [27]. The CFIR has been used to study perceptions of diagnosing infections in nursing home residents and the perceptions of antibiotic stewardship personnel about why their programs were successful [30,31].

2. i-PARIHS, an updated version of the PARiHS framework, proposes that successful implementation depends on the features of the innovation, the context in which implementation occurs, the people who are affected by and influence implementation, and the manner in which the implementation process is facilitated [28]. Researchers have used the PARiHS framework to classify organizational factors that may facilitate the design, development, and implementation of antibiotic stewardship programs [32].

3. The TDF outlines 14 domains that can determine healthcare worker behavior, including knowledge, skills, beliefs about capabilities and consequences, and environmental context and resources [29,33]. As part of a cluster randomized trial, researchers used the TDF to understand barriers to and facilitators of dentists’ use of local measures instead of systemic antibiotics to manage dental infections [34].

The third type of implementation framework is the evaluation framework. These frameworks help investigators choose which implementation outcomes to measure and can therefore be useful to incorporate into quality improvement initiatives or clinical trials. Two examples of evaluation frameworks are RE-AIM and the framework described by Proctor et al [35,36].

1. RE-AIM sets forth 5 dimensions for evaluating health behavior interventions: reach, effectiveness, adoption, implementation, and maintenance [35]. These dimensions are evaluated at multiple levels (eg, the healthcare system, clinicians, and patients). In rural China, RE-AIM was used to evaluate a community-based program for disposing of households’ expired, unwanted, or unused antibiotics [37].

2. Proctor et al [36] propose a framework in which improvements in outcomes depend not only on the evidence-based practice that is implemented (the “what”) but also on the implementation strategies used to put it into place (the “how”). The framework distinguishes between the evidence-based practice and different levels of implementation strategies, and it incorporates 3 levels of outcomes: implementation, service, and client.
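Several RE-AIM dimensions are often summarized as simple proportions. The Python sketch below assumes hypothetical counts from a multisite stewardship intervention and computes reach (share of eligible patients exposed to the intervention), adoption (share of eligible sites that initiated it), and setting-level maintenance, which is operationalized here, as an assumption, as the share of adopting sites still delivering the intervention at follow-up. The `ReAimCounts` record and its field names are illustrative, and a ratio alone does not capture the representativeness that RE-AIM also asks about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReAimCounts:
    """Hypothetical counts for one evaluation period (illustrative field names)."""
    eligible_patients: int
    patients_reached: int   # eligible patients actually exposed to the intervention
    eligible_sites: int
    sites_adopting: int     # sites that initiated the intervention
    sites_sustaining: int   # adopting sites still delivering it at follow-up


def proportion(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, rejecting impossible counts."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    if not 0 <= numerator <= denominator:
        raise ValueError("numerator must lie between 0 and the denominator")
    return numerator / denominator


def re_aim_proportions(c: ReAimCounts) -> dict:
    # Effectiveness and implementation are omitted here: they usually
    # require outcome data or fidelity measures rather than a single ratio.
    return {
        "reach": proportion(c.patients_reached, c.eligible_patients),
        "adoption": proportion(c.sites_adopting, c.eligible_sites),
        "maintenance": proportion(c.sites_sustaining, c.sites_adopting),
    }


counts = ReAimCounts(eligible_patients=400, patients_reached=300,
                     eligible_sites=8, sites_adopting=6, sites_sustaining=3)
print(re_aim_proportions(counts))  # {'reach': 0.75, 'adoption': 0.75, 'maintenance': 0.5}
```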

Implementation scientists sometimes apply 2 or more framework types simultaneously (eg, CFIR and RE-AIM). Such combinations can be useful for projects with multifaceted goals or for pairing a more conceptual framework with one that specifies clear outcomes.

Establishing stakeholder engagement

In biomedical research, investigators have traditionally examined a scientific phenomenon under controlled conditions, removed from the people whose lives or work are affected by the study topic. However, addressing the gap between evidence and practice requires close engagement between researchers and the “end users” of scientific knowledge. A major contribution of implementation science is the finding that successful implementation depends on how well an evidence-based practice fits the preferences and priorities of stakeholders (ie, those who will ultimately be affected by the practice) and the context in which they are embedded [7]. As such, stakeholder engagement is a key component of an implementation research project.

Stakeholders can include patients and their families, clinicians, health system administrators, community groups, insurers, guideline developers, researchers, professional organizations, and regulatory bodies. Antibiotic stewardship stakeholders will vary depending on the evidence–practice gap and the setting. For example, an implementation research project in ambulatory surgical centers might target the underuse of β-lactam antibiotics in patients with reported (but inaccurate) penicillin allergies through a nurse-driven, preoperative penicillin-allergy delabelling program. A wide range of stakeholders could be involved in such a project, including patients, nurses, surgeons, allergists, pharmacists, health system information technology specialists, surgical center administrators, and health system leaders [38].

Once stakeholders are identified, they must be engaged. Although the level of engagement may vary by project, the goal is to ensure that stakeholders’ needs, preferences, priorities, concerns, and beliefs about the evidence-based practice proposed for implementation have been identified and used to inform the study. It is also helpful to understand the relationships among stakeholders, the roles they might play in facilitating or hindering implementation, which stakeholders have access to “levers” of change in the setting, and each stakeholder’s level of interest in and commitment to the proposed project [39].

Stakeholder analysis methods can be used to elicit this information systematically through a combination of research techniques [40,41]. Ideally, stakeholders are engaged from the planning process through the end of the project and weigh in on all aspects of study design and conduct, including implementation strategies and outcomes [10]. A good example of a systematic approach to stakeholder engagement in stewardship is the development and optimization of the Antibiotic Review Kit (ARK), a multifaceted intervention aimed at reducing excess duration of antibiotic use among hospital inpatients [42,43].

Considering context: Identifying barriers to implementation and establishing readiness to change

Implementation science approaches to change in healthcare have highlighted the influence of context on whether an evidence-based practice is successfully integrated into routine use [44]. Context is “a multidimensional construct encompassing micro-, meso-, and macro-level determinants that are pre-existing, dynamic and emergent throughout the implementation process” [45]. These micro-, meso-, and macro-level determinants include individual stakeholder perceptions and attitudes, teamwork, leadership, organizational climate and culture, readiness to change, resources, health system characteristics, and the political environment. Implementation science frameworks often include contextual domains in their conceptualizations of how successful implementation is achieved; examples include the inner and outer setting in CFIR, the inner and outer context in i-PARIHS, and the “environmental context and resources” and “social influences” domains in the TDF.

In the planning phase of an implementation research study, methods should be used to identify and assess contextual determinants likely to influence implementation. Such inquiry can be directed toward understanding the reasons for the evidence–practice gap, establishing a setting’s readiness to change, identifying potential barriers to and facilitators of implementation, and identifying which implementation strategy (or combination of strategies) will be needed to encourage sustainable change.

Mixed methods, or the combination of quantitative and qualitative approaches, are most commonly used to understand context in implementation science [45,46]. Survey methods can be used to measure the attitudes and perceptions of individuals or groups. A number of scales with established validity and reliability can be used at this stage, such as the Implementation Climate Scale (ICS), the Organizational Readiness to Change Assessment (ORCA), and the Determinants of Implementation Behavior Questionnaire (DIBQ) [47–49]. Qualitative methods, such as focus groups, semistructured interviews, and rapid ethnographic observation, are commonly used to gather information about stakeholder beliefs, existing workflows or processes, social norms, and group dynamics related to the evidence–practice gap [50].

The selection of methods should be informed by the research question, what is already known about barriers to and facilitators of the evidence-based practice, the time and resources available to conduct the work, the implementation framework informing the project, and the availability of collaborators with appropriate expertise. For some evidence-based stewardship practices, such as reducing unnecessary antibiotic use for viral acute respiratory infections (ARIs) in outpatient primary-care settings, a robust literature already identifies the reasons for the evidence–practice gap, stakeholder responses to interventions, and barriers to implementation [51–56]. An implementation research project focused on this gap will likely need to examine only local contextual determinants, such as the setting’s readiness to change, the adaptations needed to tailor approaches described in the literature to the local context, and stakeholder engagement. Less is known about the reasons for other evidence–practice gaps in stewardship, such as antibiotic overuse at hospital discharge. An implementation research project on this topic could include rapid formative evaluation as a component of the preimplementation inquiry [57–60].

Choosing an implementation strategy or a bundle of strategies

Preimplementation planning informs the choice of implementation strategies. Almost all stewardship interventions are multifaceted and use more than 1 implementation strategy. The MITIGATE tool kit, for example, aims to deimplement unnecessary antibiotic use in emergency departments and urgent care clinics [61]. In this case, the evidence-based practice is not prescribing antibiotics for viral ARIs. The tool kit sets forth multiple implementation strategies to enhance adoption of this practice, including developing stakeholder relationships (eg, identifying champions and obtaining commitment letters), training and educating stakeholders, using evaluative strategies (eg, audit and feedback), and engaging patients.

When choosing an implementation strategy or a bundle of strategies for any given project, several questions should be considered. First, what is the evidence-based practice being implemented? Does the practice need to be adapted or tailored to the local context? There is an inevitable tension between fidelity to the practice and adaptation at the local level. Second, what are the known barriers and facilitators to adopting the evidence-based practice? How would any specific implementation strategy address a known barrier or leverage an established facilitator? Third, are the implementation strategies feasible given local resources? Fourth, would pilot testing the implementation strategy on a small scale provide valuable information before the strategy is evaluated more broadly?

Implementation strategies should be reported in enough detail that they can be scientifically retested and used in other healthcare settings. This can be challenging because implementation strategies are complex and often address multiple barriers to change. Supplementary Table 1 (online) provides guidance on the level of detail with which an implementation strategy should be described. Such reports specify the actor, the action, the action targets, temporality, dose, a theoretical justification, and the implementation outcomes addressed. Consensus criteria for reporting implementation studies have also been published [16,62].
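One way to keep such reports complete is to treat each strategy specification as a structured record and check it for blank elements before submission. The Python sketch below is hypothetical: the `StrategySpecification` fields simply mirror the elements listed above (actor, action, action target, temporality, dose, implementation outcome, justification), and the example content describes an illustrative audit-and-feedback strategy, not one taken from a published study.

```python
from dataclasses import dataclass, fields


@dataclass
class StrategySpecification:
    """Hypothetical record for reporting one implementation strategy."""
    name: str = ""
    actor: str = ""                   # who delivers the strategy
    action: str = ""                  # what is done
    action_target: str = ""           # whom or what the action targets
    temporality: str = ""             # when, or in what sequence, it occurs
    dose: str = ""                    # frequency and intensity
    implementation_outcome: str = ""  # outcome(s) the strategy is expected to affect
    justification: str = ""           # theoretical or empirical rationale

    def missing_elements(self) -> list:
        """Names of reporting elements that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Illustrative, partially completed specification
spec = StrategySpecification(
    name="Audit and feedback",
    actor="Stewardship pharmacist",
    action="Reviews antibiotic orders and gives prescribers feedback",
    action_target="Prescribing clinicians",
    temporality="Within 72 hours of antibiotic initiation",
    dose="",
    implementation_outcome="Adoption",
    justification="",
)
print(spec.missing_elements())  # ['dose', 'justification']
```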

Evaluating the implementation process

Any type of clinical study design can be used to evaluate implementation. However, a key difference between an implementation trial and an effectiveness trial is the measurement of implementation outcomes.

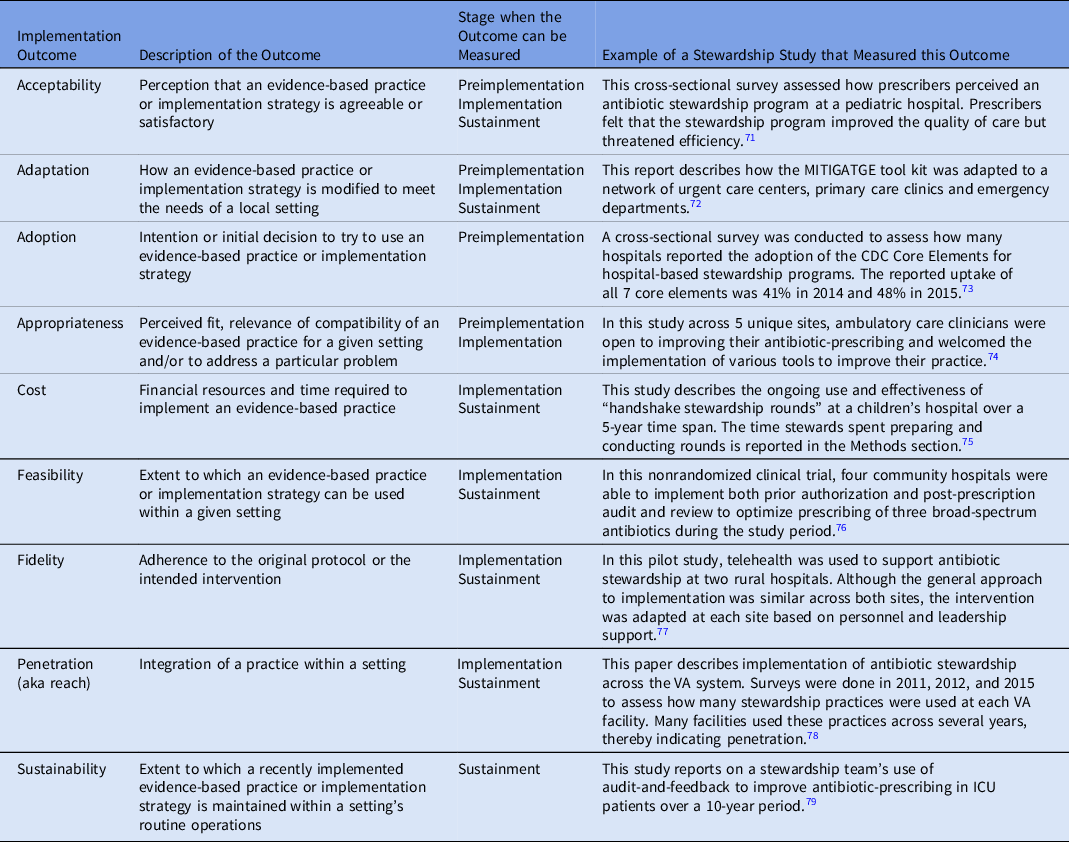

Implementation outcomes are the effects of deliberate actions to implement new practices. These outcomes are often measured from the perspective of clinicians or patients, and they serve as indicators of whether implementation strategies were successful. When evidence-based practices do not lead to the desired outcome, implementation outcomes can help identify the reasons for that failure, such as a practice that is a poor fit for the setting or an implementation process that was incomplete. Table 3 provides definitions of common implementation outcomes and examples of how they have been measured in the antibiotic stewardship literature.

Table 3. Implementation Outcomes and Their Measurement in the Antibiotic Stewardship Literature a

a This table is modified from a similar table at https://www.queri.research.va.gov/tools/QUERI-Implementation-Roadmap-Guide.pdf

Certain implementation outcomes are best measured during specific phases of the implementation process, as shown in Table 3. The findings from these evaluations can be used in real time to refine implementation efforts, or they may provide a summative assessment once the implementation process has concluded. In addition, some outcomes can be measured before implementation even begins.

Implementation outcomes can be measured using both quantitative and qualitative approaches. Some implementation outcomes can be ascertained with survey tools, such as the Acceptability of Intervention Measure (AIM), the Intervention Appropriateness Measure (IAM), and the Feasibility of Intervention Measure (FIM) [63]. However, not all implementation outcome measures have been empirically tested for content validity, reliability, and usability, so many investigators create study-specific measures. Implementation science experts have called for more rigorous measure development [64]. Implementation outcomes can also be ascertained through qualitative methods, especially semistructured interviews; in this case, qualitative data collection and analysis are typically informed by the implementation framework being used. Administrative data can also be used to measure changes in process utilization. Guidance on using these different tools has been summarized previously [65,66].
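As a sketch of survey-based measurement, the Python below scores AIM, IAM, and FIM responses, assuming the 4-item versions of each measure with responses coded 1 (strongest disagreement) to 5 (strongest agreement) and a respondent's scale score taken as the mean of that respondent's items. These scoring rules are assumptions to be confirmed against the instruments themselves, and the response data are hypothetical.

```python
from statistics import fmean

ITEMS_PER_SCALE = 4  # assumption: 4-item versions of AIM, IAM, and FIM


def scale_score(responses):
    """Mean of one respondent's item responses for a single scale (1-5 coding assumed)."""
    if len(responses) != ITEMS_PER_SCALE:
        raise ValueError(f"expected {ITEMS_PER_SCALE} items, got {len(responses)}")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    return fmean(responses)


def summarize(survey):
    """survey maps scale name -> list of per-respondent item lists.
    Returns the mean of respondent-level scale scores for each scale."""
    return {scale: fmean(scale_score(r) for r in respondents)
            for scale, respondents in survey.items()}


# Hypothetical responses from 2 clinicians
survey = {
    "AIM": [[4, 4, 5, 5], [3, 4, 4, 3]],
    "IAM": [[5, 5, 4, 4], [4, 4, 4, 4]],
    "FIM": [[2, 3, 3, 2], [3, 3, 4, 4]],
}
print(summarize(survey))  # {'AIM': 4.0, 'IAM': 4.25, 'FIM': 3.0}
```

Scoring each respondent first and then averaging keeps every clinician's view equally weighted, which matters if some respondents skip a scale entirely.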

Within any implementation initiative, clinical effectiveness should continue to be measured. For antibiotic stewardship, clinical effectiveness outcomes may include volume-based metrics of antibiotic use, length of hospital stay, and Clostridioides difficile infections. Potential unintended consequences of the initiative should also be measured. The RE-AIM and Proctor frameworks both call for measuring clinical effectiveness as well as implementation outcomes when testing implementation strategies. Likewise, hybrid effectiveness-implementation trials focus on both clinical effectiveness and implementation outcomes [67]. These hybrid trials often use study designs common in efficacy studies (eg, randomized allocation) while also evaluating at least 1 implementation strategy.
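One widely used volume-based metric is days of therapy (DOT) per 1,000 patient-days, in which each calendar day on which a patient receives a given antibiotic counts as 1 DOT for that agent, regardless of how many doses are given. The Python sketch below assumes hypothetical administration records of the form (patient, agent, date) and a patient-day denominator supplied separately; it illustrates the counting rule and is not a reproduction of any surveillance system's full specification.

```python
from datetime import date


def days_of_therapy(administrations):
    """Count DOT from (patient_id, agent, calendar_date) records, 1 per dose given.
    Repeat doses of the same agent on the same day collapse to 1 DOT."""
    return len({(patient, agent, day) for patient, agent, day in administrations})


def dot_per_1000_patient_days(dot, patient_days):
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return 1000 * dot / patient_days


# Hypothetical records: 2 vancomycin doses on March 1 count once,
# while 2 different agents on March 1 count twice.
doses = [
    ("P1", "ceftriaxone", date(2021, 3, 1)),
    ("P1", "vancomycin", date(2021, 3, 1)),
    ("P1", "vancomycin", date(2021, 3, 1)),
    ("P1", "vancomycin", date(2021, 3, 2)),
]
dot = days_of_therapy(doses)
print(dot)                                 # 3
print(dot_per_1000_patient_days(dot, 10))  # 300.0
```

Computing the same rate before and after an intervention is one way to track clinical effectiveness alongside the implementation outcomes described above.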

Putting it all together

In Supplementary Tables 2–4, we show how different implementation frameworks could be applied to an intervention to reduce antibiotic prescribing for viral ARIs in an emergency department network. Although several frameworks could be useful for this type of project, we highlight a process framework (Pronovost’s 4E process theory), a determinant framework (CFIR), and an evaluation framework (RE-AIM). Supplementary Table 1 (online) shows how the implementation strategies for such a project could be conceptualized and reported.

Antibiotic prescribing is complex and often context dependent, but a large body of evidence exists to guide the optimal use of these agents. Syntheses and quality assessments of this evidence are updated frequently [68–70], and clinical research will continue to define the optimal use of antibiotics for many types of infection.

Antibiotic stewardship works to implement this evidence in routine medical practice. From a practical perspective, implementation science can benefit antibiotic stewards by encouraging thoughtful reflection during the preimplementation phase and by offering a variety of implementation strategies that can be leveraged to enhance the adoption of evidence-based prescribing practices.

We have also shown how implementation science methods can strengthen research on stewardship implementation. In this white paper, we have presented different frameworks and reviewed how implementation outcomes can be measured. We have argued that practices traditionally described as antibiotic stewardship strategies (eg, prospective audit and feedback, prior authorization) can also be conceptualized as implementation strategies. By reframing stewardship strategies in this manner, we have tried to show how the field of antibiotic stewardship intersects with the discipline of implementation science. Through this reframing, we also encourage researchers to draw on a broader literature to examine the full range of implementation strategies in various clinical contexts, and to describe the implementation of stewardship strategies with the same rigor with which implementation strategies are reported. This should enable future studies to contribute more effectively to generalizable knowledge about stewardship implementation.

In conclusion, antibiotic resistance is a major public health challenge, and practicing antibiotic stewardship is an important solution to this problem. Finding areas of synergy between the disciplines of antibiotic stewardship and implementation science has the potential to accelerate the pace at which evidence-based antibiotic-prescribing practices are incorporated into routine care.

Supplementary material

For supplementary material accompanying this paper visit https://doi.org/10.1017/ice.2021.480

Acknowledgments

We thank Kristy Weinshel for her support in preparing this manuscript, and we thank the SHEA Antimicrobial Stewardship Committee for its feedback.

Financial support

No financial support was provided relevant to this article.

Conflict of interest

All authors report no conflicts of interest relevant to this article.