Introduction

Every day, people form initial impressions when encountering others. Such impressions are typically based on short slices of verbal and nonverbal behavior of the other person (e.g., Weisbuch et al., 2010), they are formed rapidly (e.g., Willis & Todorov, 2006), and can have far-reaching consequences (see Harris & Garris, 2008; Swider et al., 2022 for an overview). For example, a positive impression can result in evaluating someone as a potential friend, romantic partner, or future employee (e.g., Barrick et al., 2010; Gazzard Kerr et al., 2020; Human et al., 2013; Swider et al., 2016). In personnel selection, initial impressions are thought to be especially relevant for interpersonal selection procedures such as interviews and assessment centers (ACs) and have been found to influence selection outcomes (e.g., Barrick et al., 2010; Ingold et al., 2018). Yet, in contrast to research on interviews scrutinizing the role of initial impressions (Barrick et al., 2010, 2012; Swider et al., 2011, 2016), research on initial impressions in ACs has been comparatively scarce (Swider et al., 2022).

Creating more knowledge on the role of initial impressions in ACs is critical for both practice and theory. Practically, ACs are frequently used across organizations and countries for selecting employees at different levels (Krause & Thornton, 2009), and understanding how impressions affect these influential AC ratings is key for designing ACs and training assessors. ACs are highly interpersonal selection procedures during which trained assessors face the demanding task of rating assessees’ performance on different constructs in several simulation exercises (International Taskforce on Assessment Center Guidelines, 2015; Kleinmann & Ingold, 2019). Theoretically, this cognitively demanding task creates a condition during which, according to dual process theories (e.g., Evans & Stanovich, 2013), quickly formed impressions should have a strong effect on assessor ratings. Similarly, earlier research has looked at general impressions and their relation to AC ratings (Lance et al., 2004). Empirically, more recent AC research (Ingold et al., 2018) has supported the relevance of initial impressions: initial impressions formed in the first two minutes of an exercise by two different groups of raters (i.e., raters with and without assessor experience) reliably predicted AC performance ratings as rated by trained assessors, indicating the need for future research.

The current study aims to contribute to the body of research on the role of initial impressions in ACs. Based on the “thin slices” of behavior paradigm in personality and social psychology (e.g., Ambady & Rosenthal, 1992; Borkenau et al., 2004), we examine what role the specific slice of the AC exercise (i.e., the time point of the observation that initial impressions are based on) plays for the relationship between initial impressions and selection outcomes. Specifically, we examine effects related to initial impressions when observing a slice of assessees’ behavior at either the beginning, middle, or end of an AC exercise. Slices from different time points can contain different behavioral samples that assessors can rely on for building their impressions about assessees. On the one hand, assessees might tend to convey a consistently good impression over the course of an AC exercise, making a positive correlation of impressions formed upon different slices likely. On the other hand, in this demanding situation, assessees might vary in their behaviors over the course of an AC exercise due to affective, cognitive, or motivational processes such as nervousness, information processing, or impression management (Fletcher et al., 1997; McFarland et al., 2005).

To provide knowledge on the effects of initial impressions formed from different slices, this study has the following objectives. First, we will explore the consistency of initial impressions formed of assessees across AC exercises (i.e., using slices from the same time points in different exercises) and within AC exercises (i.e., using slices from different time points within the same exercise). This will advance our conceptual understanding of the nature of initial impressions by addressing whether the observed slice of behavior generally matters for forming an initial impression (i.e., does assessees’ conveyed impression change across and within exercises). Second, we will scrutinize the relation between initial impressions from different slices and AC performance. This will allow for insights into assessors’ cognitive processes by investigating to what extent their ratings are affected by impressions that assessees convey during exercises, and whether these relationships vary. Third, we will explore the criterion-related validity of initial impressions in ACs for different slices. We aim to generate practically relevant knowledge by exploring whether initial impression ratings from different slices contain criterion-relevant or criterion-irrelevant variance, examining their correlations with supervisor ratings of assessees’ job performance. Apart from increasing the theoretical understanding of impressions conveyed by assessees throughout an exercise, this will inform practitioners on whether to suppress or make use of impressions conveyed by assessees at different time points.

Research questions and hypotheses

Consistency of initial impressions across and within AC exercises

In the evaluative situation of the AC, assessees are supposed to put their best foot forward to achieve favorable ratings, and this should apply across AC exercises as well as to the whole time span of each AC exercise. As such, it appears plausible that the impressions conveyed by assessees at the same time point (beginning, middle, or end) in different AC exercises, and also at different time points in the same AC exercise, should converge. Findings on initial impressions outside the selection literature support this, indicating their relative stability across different situations (e.g., Borkenau et al., 2004; Leikas et al., 2012).

However, it could be that some assessees behave inconsistently across different exercises depending on the specific situational demands of the exercises (Breil et al., 2023; Lance, 2008; Woehr & Arthur, 2003). This might result in different initial impressions conveyed across different exercises. In a similar vein, it is possible that assessees behave inconsistently within the same exercise. For example, at the beginning of an AC exercise, an assessee might be especially nervous and therefore appear less competent than at the end of the exercise, when the same assessee might be more relaxed and appear more competent. Given the different possibilities, we explore:

Research Question 1 Are initial impressions formed of an assessee based on 2-min observations of the beginning, the middle, and the end of different AC exercises positively related across different AC exercises?

Research Question 2 Are initial impressions formed of an assessee based on 2-min observations of the beginning, the middle, and the end of different AC exercises positively related within the same AC exercises?

Predicting assessment center performance from initial impressions

From a dual process theory perspective (Evans, 2008; Kahneman, 2003), assessors might be prone to use their quick judgments as a general anchor to arrive at AC performance ratings. Dual process theories assume that two cognitive processes are relevant to human judgment: Type 1 processing that happens automatically (i.e., quick, intuitive, non-conscious judgment) and Type 2 processing that is deliberate (i.e., slower, analytical, conscious judgment; Kahneman, 2003). In an AC, high cognitive demands are placed on assessors, and this represents a fertile ground for intuitive Type 1 processing such as initial impressions to affect judgment outcomes (Kahneman & Frederick, 2002; Stanovich & West, 2000). This is also what has been argued in more recent theorizing on assessor rating processes (Ingold et al., 2018). Empirically, this has only been shown for initial impressions based upon thin slices of the beginning of AC exercises (Ingold et al., 2018). Thus, we posit the following hypotheses, and examine Hypothesis 1a as a replication:

Hypotheses 1a-c Initial impressions formed of an assessee based on 2-min observations at the (a) beginning, (b) middle, and (c) end of an AC exercise will predict AC performance ratings as rated by trained assessors in this exercise.

Predicting job performance from initial impressions

A prevailing position in AC textbooks and in AC rater trainings has been that intuitive Type 1 processing might lead to AC ratings that contain criterion-irrelevant variance (e.g., Thornton et al., 2014), and thus initial impressions might be detrimental to the AC ratings’ criterion-related validity and should therefore be avoided. On the other hand, in more recent dual process theory building, a shift has occurred: Whereas past research on dual process theories highlighted the error-proneness of Type 1’s quick and unreflective processes, more attention is now paid to their advantages (see Evans & Stanovich, 2013; Krueger & Funder, 2004; Kruglanski & Gigerenzer, 2011). Specifically, dual process theory scholars have pointed out that “intuitive thinking can also be powerful and accurate” (Kahneman, 2003, p. 699) and “Type 1 processing can lead to right answers” (Evans & Stanovich, 2013, p. 229). For example, decades of research have shown that intuitive judgments of strangers’ personality traits are surprisingly accurate (e.g., Borkenau et al., 2004; Connelly & Ones, 2010). Thus, it seems plausible that initial impressions from ACs contain some criterion-relevant variance and predict job performance. However, the only empirical investigation on this relation points toward a non-significant, positive effect (Ingold et al., 2018), and a recent review on initial impressions in work settings highlights the ongoing debate about valid versus biased information contained in initial impressions (Swider et al., 2022). Thus, we explore for all three slices:

Research Question 3 How do initial impressions based on 2-min observations of the beginning, the middle, and the end of AC exercises relate to job performance?

Methods

Sample

The original sample consisted of 223 assessees (91 women). This sample was part of a large data collection from the Swiss National Science Foundation (146039; https://data.snf.ch/grants/grant/146039) and has also been studied in Heimann et al. (2022) and Heimann et al. (2021). Assessees were employed in various organizations and had registered to participate in a one-day AC as part of a job application training program to prepare for future applications. They agreed that their data, including videotapes of them participating in AC exercises, would be used for research purposes. In return for their participation, assessees received feedback and recommendations regarding their AC performance. The mean age of assessees was 30.56 (SD = 7.32) years and they had been employed in their current job for 2.57 (SD = 2.22) years on average. All assessees provided the contact details of their direct supervisor upon signing up for the job application training so that we could collect supervisory job performance ratings. Assessees and assessors did not have access to supervisory performance ratings, and supervisors did not have access to assessees’ AC performance ratings.

Procedure

Assessees completed three AC exercises in randomized order: one competitive leaderless group discussion (LGD) and two cooperative LGDs. For each of these interactive exercises, assessees had 15 minutes of individual preparation time and 30 minutes for the actual exercise. In the competitive LGD (Exercise 1), a group of assessees discussed different strategies to solve a given problem. The group had to agree on a rank order for the effectiveness of the different strategies they had discussed, and each assessee had the task to convince the group of their particular rank order. In the two cooperative LGDs (Exercise 2 and Exercise 3), a group of assessees had to find the best solution to a given problem as a team. Each assessee held different pieces of information and they had to share their information with the other assessees in their group to come up with a suitable solution. All three AC exercises had been used in previous AC studies, and had been shown to be criterion-valid (Ingold et al., 2016; Jansen et al., 2013; König et al., 2007). In each AC exercise, a panel of two trained assessors rated assessees’ behavioral competencies. Teams of assessors rotated across AC exercises, so that each assessee was evaluated by four assessors (i.e., two teams) in total during the AC. Assessors stemmed from a pool of 43 (34 women) trained university students who were majoring in I-O psychology and who participated as interns to gain experience as assessors. Assessors’ mean age was 28.33 years (SD = 6.87), and they had been studying psychology for 3.20 (SD = 1.25) years on average. Two thirds of them (67%) were graduate students. Prior research did not find a difference in the rating accuracy of psychology students versus managers as assessors (Wirz et al., 2013). To comply with recommended AC practices (International Taskforce on Assessment Center Guidelines, 2015), assessors had previously completed a one-day frame-of-reference assessor training (Roch et al., 2012).

After the initial data collection (i.e., the administration of AC exercises), we collected ratings of initial impressions from a novel pool of raters to ensure that assessees’ AC performance and initial impressions were evaluated by different individuals. Following the procedure from previous AC research as well as interview research (e.g., Barrick et al., 2010, 2012; Ingold et al., 2018; Schmid Mast et al., 2011; Swider et al., 2011), we obtained initial impression ratings based on 2-min video excerpts. Specifically, we extracted two minutes from the beginning, middle, and end of each of the three video-recorded AC exercises. We had to exclude some videos due to recording issues. In parallel to Ingold et al. (2018), five raters per video independently rated initial impressions. In each AC exercise and at each time point, initial impressions were rated by a different set of five raters, so that each set of raters evaluated each assessee only once. This led to nine different sets of raters (i.e., 45 raters) per assessee. Video clips were randomly assigned to the sets of raters. The rater pool consisted of 150 (114 women) individuals who, similar to the assessors providing AC ratings, were university students majoring in I-O psychology. Their mean age was 24.95 years (SD = 6.27), and they had been studying psychology for 1.54 (SD = 0.96) years on average. About a quarter of them (26%) were graduate students. We recruited a group of lay raters without requiring any experience as assessors given that prior research showed no difference between ratings from experienced and inexperienced initial impression raters (Ingold et al., 2018). Similar to previous research on initial impressions (Barrick et al., 2010; Swider et al., 2016), raters were not trained and they were not allowed to discuss their ratings with each other, as is common in the thin slice paradigm. Initial impression raters had no access to any other ratings of assessees (i.e., AC performance, job performance).

Measures

Initial impressions

To assess initial impressions in AC exercises, we used the same scale as Ingold et al. (2018). The scale consisted of eight items targeting raters’ general initial impressions of assessees. An example item for this scale is “I have a positive impression of this assessee”. Raters rated all items on a 7-point scale ranging from 1 (strongly disagree) to 7 (strongly agree). Across raters, AC exercises, and time points, the internal consistency of the initial impression scale was α = .98. Interrater reliabilities for single raters were ICC(1, 1) = .26 in Exercise 1, .35 in Exercise 2, .30 in Exercise 3, and .30 averaged across exercises. Given that reliabilities for single raters are typically low when assessing initial impressions, each assessee was rated by five raters on each item (see also Swider et al., 2016). Interrater reliabilities of initial impression ratings averaged across the five raters were ICC(1, 5) = .64 in Exercise 1, .73 in Exercise 2, .68 in Exercise 3, and .68 averaged across exercises, which is in line with what to expect based on previous initial impression studies with a similar design (Ingold et al., 2018; Swider et al., 2016).
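The step from single-rater to averaged-rater reliability reported above follows the Spearman-Brown relation between ICC(1, 1) and ICC(1, k). The short Python sketch below is only an illustration, not the analysis code from the study (which was run in R); the function name `spearman_brown` and the dictionary names are hypothetical. It starts from the rounded ICC(1, 1) values reported in the text and steps them up to k = 5 raters.

```python
def spearman_brown(icc_single: float, k: int) -> float:
    """Reliability of the mean of k raters, given the single-rater reliability."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return k * icc_single / (1 + (k - 1) * icc_single)


# Rounded ICC(1, 1) values as reported in the text (one per exercise).
single_rater = {"Exercise 1": 0.26, "Exercise 2": 0.35, "Exercise 3": 0.30}

# Step each value up to the five raters used per video clip.
averaged = {
    name: round(spearman_brown(icc, 5), 2) for name, icc in single_rater.items()
}
print(averaged)
```

With these inputs the sketch reproduces the reported ICC(1, 5) values of .64, .73, and .68. Because it starts from rounded inputs, small discrepancies from values computed on raw data would not be surprising in other cases.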

AC performance

In the AC, assessees’ performance was rated on five AC dimensions in total. The AC dimensions were Communication, Influencing Others, Consideration, Organizing and Planning, and Problem Solving (see Arthur et al., 2003). For each AC exercise, assessors were provided with behavioral anchors for each of the AC dimensions they had to rate. Assessors individually rated four AC dimensions on a 5-point scale from 1 (very low expression) to 5 (very high expression) in each of the three AC exercises. As is common in AC practice (International Taskforce on Assessment Center Guidelines, 2015), each assessee was rated by a team of two trained assessors in each exercise. After completion of all AC exercises, assessors discussed their individual ratings. To obtain scores of AC performance, we averaged ratings across AC dimensions within each AC exercise. Before discussion, interrater reliabilities of AC ratings from individual assessors were ICC(1, 1) = .64 in Exercise 1, .70 in Exercise 2, .69 in Exercise 3, and .68 averaged across exercises, and interrater reliabilities for AC ratings averaged across the two assessors were ICC(1, 2) = .78 in Exercise 1, .83 in Exercise 2, .81 in Exercise 3, and .81 averaged across exercises. After discussion, interrater reliabilities for individual assessors were ICC(1, 1) = .80 in Exercise 1, .82 in Exercise 2, .81 in Exercise 3, and .81 averaged across exercises, and interrater reliabilities for AC ratings averaged across the two assessors were ICC(1, 2) = .89 in Exercise 1, .90 in Exercise 2, .89 in Exercise 3, and .90 averaged across exercises, which is comparable to the interrater reliability reported in previous studies with a similar design (Heimann et al., 2022; Ingold et al., 2016; Jansen et al., 2013; Wirz et al., 2020).

Job performance

After assessees had completed the AC, we contacted their direct supervisors to obtain job performance ratings via an online survey. In total, 201 supervisors (57 women) completed the survey. We measured job performance with nine items from Jansen et al. (2013) on a 7-point scale ranging from 1 (not at all) to 7 (absolutely). An example item is “S/he achieves the objectives of the job”. The internal consistency of the scale was α = .91.

Results

All statistical analyses were performed in R. We provide the anonymized data and code for our analyses on https://osf.io/e4rsq.

Consistency of initial impressions

Research Question 1 addressed the relationship of initial impressions across different AC exercises. Thus, we calculated bivariate correlations of assessees’ initial impressions separately for the beginning, middle, and end across the three exercises. Results are displayed in Table 1. Overall, initial impressions from the same time point (beginning, middle, and end of an AC exercise) were consistent across AC exercises. For the initial impressions based upon ratings of the beginning of three different exercises, we found significant correlations ranging from r = .19–.28 (average correlation .25). Similar results were found for initial impressions based on the middle (r = .17–.25; average correlation .21), and the end (r = .13–.17; average correlation .15; with one non-significant correlation of .13) of the exercises. Overall, the mean correlation for initial impressions measured at the same time point in different exercises was .20. Hence, individuals who were perceived more favorably on a given slice in one exercise were also perceived more favorably based on the same slices in other exercises.

Table 1. Means, Standard Deviations, and Intercorrelations of Initial Impressions in Assessment Center Exercises

Note. Initial impressions were rated by five initial impression raters. For each assessment center exercise (Exercise 1, 2, and 3) and each time point (beginning, middle, and end), a different set of five raters was used. Significant values (p < .05) are displayed in bold.

For Research Question 2, we investigated the relationship of initial impressions from different time points within the same AC exercises (see Table 1). All correlations were significant, with average within-exercise correlations ranging from r = .19 (Exercise 2) to .26 (Exercise 3). The mean correlation for initial impressions measured at different time points within the same exercise was .22. Thus, initial impressions were also consistent within exercises. This may indicate that individuals who were perceived more favorably based on their impression from the beginning of an exercise were also perceived more favorably based on their impression from the middle and end of the same exercise.

To further explore the consistency of initial impressions, we also investigated the relationships of initial impressions from different exercises and different time points (i.e., slices). Correlations ranged from r = .07–.33. The mean correlation for initial impressions measured at different time points and in different exercises was .22, and thus similar to the mean correlations for initial impressions measured at the same time point in different exercises, and for initial impressions measured at different time points within the same exercise. Taken together, results speak in favor of some general consistency in initial impressions across different types of slices (exercises and time points), especially given that initial impression ratings of different slices stemmed from different groups of untrained raters.

Initial impressions predicting AC performance

In Hypotheses 1a–c, we predicted that initial impressions formed at the (a) beginning, (b) middle, and (c) end of an AC exercise by untrained raters would predict AC performance ratings as rated by trained assessors in this exercise. To account for the fact that multiple different assessors evaluated different assessees across different exercises, we calculated multilevel models (crossed-random effects; see also Judd et al., 2012 for advantages; Putka & Hoffman, 2013) using the R package lme4 (Bates et al., 2014). For this, we specified assessees, assessors, and exercises as random effects. This accounts for the fact that (1) some assessees performed better than others (“assessee variance”), (2) some assessors gave more lenient/severe performance ratings than others (“assessor variance”), and (3) some exercises were harder/easier than others (“exercise variance”). As shown in Table 2, while the differences between assessees were large, the differences between assessors or exercises were negligible. Variance in performance ratings that could not be explained by general assessee performance, assessor rating styles, or exercise effects is captured in the residual variance. For our main research question, we calculated three models in which we added initial impressions from the beginning (see Model 1), the middle (see Model 2), or the end of the AC exercise (see Model 3) as a fixed effect (i.e., one model for each time point). Here we wanted to see whether higher initial impression ratings (across all assessees, assessors, and exercises) were associated with higher performance ratings. Results showed that initial impressions from all time points significantly predicted AC performance ratings (beginning b = .17; p < .001; middle b = .15; p < .001; end b = .17; p < .001). That is, initial impression ratings were positively related to AC performance, thereby supporting Hypotheses 1a–c.
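For readers less familiar with crossed-random effects models, the single-predictor models described above can be written out as follows. This is a sketch based on the verbal description only; the notation is an assumption introduced here: indices i (assessee), j (assessor), and k (exercise), with x_{ik} denoting the initial impression score of assessee i in exercise k for the time point in question (assumed to be the mean across the five raters).

```latex
y_{ijk} = \beta_0 + \beta_1 x_{ik} + u_i + v_j + w_k + \varepsilon_{ijk},
\qquad
u_i \sim \mathcal{N}(0, \sigma^2_{\text{assessee}}),\;
v_j \sim \mathcal{N}(0, \sigma^2_{\text{assessor}}),\;
w_k \sim \mathcal{N}(0, \sigma^2_{\text{exercise}}),\;
\varepsilon_{ijk} \sim \mathcal{N}(0, \sigma^2_{\text{residual}})
```

Here y_{ijk} is the AC performance rating given to assessee i by assessor j in exercise k, and \beta_1 corresponds to the reported fixed effect b. In lme4 formula notation this would correspond to something like `rating ~ impression + (1 | assessee) + (1 | assessor) + (1 | exercise)`, where the column names are hypothetical; the authors' actual specification is available in their OSF materials.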

Table 2. Multilevel Models of Initial Impressions Predicting Assessment Center Ratings

Note. Displayed are four different multilevel models with three random intercepts each (as well as the residual variance). Assessee variance: Variance in performance ratings attributable to different assessees (i.e., some assessees performed better than others). Assessor variance: Variance in performance ratings attributable to different assessors (i.e., some assessors gave more lenient/severe performance ratings than others). Exercise variance: Variance in performance ratings attributable to different exercises (i.e., some exercises were harder/easier than others). Residual variance: Variance in performance ratings that could not be explained by assessee, assessor, or exercise main effects. The included fixed effects differed depending on the model: Model 1 includes only initial impressions based on the beginning of the assessment center (AC) exercises, Model 2 includes only initial impressions based on the middle of the AC exercises, Model 3 includes only initial impressions based on the end of the AC exercises, and Model 4 includes initial impressions from all time points.

As part of an exploratory analysis, we further investigated the unique importance of the three initial impressions for AC performance ratings based on the different slices (i.e., from different time points). For this, we added all three initial impressions from different time points as fixed effects into the same model (see Model 4 in Table 2) such that, in contrast to the previous models, all initial impressions were considered simultaneously. All three impressions were significantly related to AC performance, even when controlling for the respective other impressions (beginning b = .17; middle b = .14; end b = .16; all ps < .001). Hence, for example, the initial impression from the end of an exercise explained variance in AC performance beyond what was explained by the initial impressions from the other slices (i.e., from the beginning and the middle).

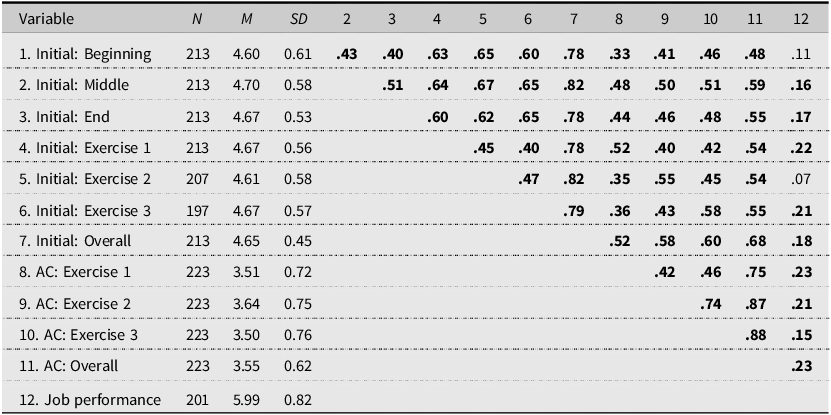

Finally, as an additional robustness check, we calculated bivariate correlations for all relevant variables, as can be seen in Table 3. The first six rows in Table 3 refer to initial impressions aggregated to the time point (Rows 1–3) or the exercise (Rows 4–6). Row 7 refers to an overall initial impression rating aggregated across time points and exercises. Thus, correlations between the first six rows and Row 7 can be seen as part-whole correlations. Rows 8–11 refer to the AC performance ratings (on the exercise level and the overall level). Row 12 refers to job performance. The main results presented in this table (i.e., correlations between initial impressions from the beginning, middle, and end of an AC exercise with performance in the respective exercise) are in line with the results of the crossed-random effects models that are presented in Table 2.

Table 3. Means, Standard Deviations, and Intercorrelations of Main Study Variables

Note. Initial impressions were rated by initial impression raters. Initial: beginning = Initial impression ratings from the beginning aggregated across exercises. Initial: middle = Initial impression ratings from the middle aggregated across exercises. Initial: end = Initial impression ratings from the end aggregated across exercises. Initial: Exercise 1 = Initial impression ratings from Exercise 1 aggregated across time points. Initial: Exercise 2 = Initial impression ratings from Exercise 2 aggregated across time points. Initial: Exercise 3 = Initial impression ratings from Exercise 3 aggregated across time points. Initial: Overall = Initial impression ratings aggregated across exercises and time points. Assessment center (AC) ratings were rated by two assessors. AC: Exercise 1 = Assessment center ratings aggregated across assessors at Exercise 1. AC: Exercise 2 = Assessment center ratings aggregated across assessors at Exercise 2. AC: Exercise 3 = Assessment center ratings aggregated across assessors at Exercise 3. AC: Overall = Assessment center ratings aggregated across assessors and exercises. Job performance = Job performance ratings by supervisors. Significant values (p < .05) are displayed in bold.

Initial impressions predicting job performance

Research Question 3 explored the relationship between initial impressions and supervisor-rated job performance (see Table 3 for results). We performed this analysis with initial impressions aggregated (1) across exercises, (2) across time points, and (3) across exercises and time points. First, we analyzed initial impressions aggregated across the three exercises for each time point. Results of bivariate correlations showed that initial impressions based on the middle and on the end of AC exercises significantly related to job performance (middle r = .16, p = .030; end r = .17, p = .019). Initial impressions based on the beginning of the exercises did not significantly relate to job performance (r = .11; p = .146). Correlations between initial impressions from different time points and job performance did not differ significantly from each other, neither for initial impression from the beginning versus the middle (z = −0.68, p = .252), the beginning versus the end (z = −0.78, p = .217), nor the middle versus the end (z = −0.15, p = .443).
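The comparisons above involve correlations that share one variable (job performance) and come from the same sample, so they call for a test of dependent, overlapping correlations. The text does not name the test that was used. The Python sketch below therefore swaps in one common option, the z test of Meng, Rosenthal, and Rubin (1992), purely as an illustration. The function names are hypothetical, and the values for `r12` (the correlation between the two impression scores) and `n` in the usage lines are placeholders chosen for illustration, not values taken from the study.

```python
import math


def meng_z(r1: float, r2: float, r12: float, n: int) -> float:
    """Meng, Rosenthal & Rubin (1992) z for two correlations sharing one variable.

    r1, r2: correlations of predictor 1 and predictor 2 with the shared criterion.
    r12: correlation between the two predictors.
    n: sample size.
    """
    if n <= 3 or not -1 < r12 < 1:
        raise ValueError("need n > 3 and -1 < r12 < 1")
    mean_r_sq = (r1 ** 2 + r2 ** 2) / 2
    f = min((1 - r12) / (2 * (1 - mean_r_sq)), 1.0)  # f is capped at 1
    h = (1 - f * mean_r_sq) / (1 - mean_r_sq)
    # Difference of Fisher z-transformed correlations, scaled by its standard error.
    return (math.atanh(r1) - math.atanh(r2)) * math.sqrt(
        (n - 3) / (2 * (1 - r12) * h)
    )


def one_tailed_p(z: float) -> float:
    """Upper-tail probability of |z| under the standard normal distribution."""
    return 0.5 * math.erfc(abs(z) / math.sqrt(2))


# r1 and r2 are the reported beginning and middle correlations with job
# performance; r12 and n are HYPOTHETICAL placeholders for illustration only.
z = meng_z(r1=0.11, r2=0.16, r12=0.40, n=201)
p = one_tailed_p(z)
print(f"z = {z:.2f}, one-tailed p = {p:.3f}")
```

The result depends heavily on `r12`: the more strongly the two impression scores correlate with each other, the more sensitive the test is to a given difference between `r1` and `r2`. Reproducing the reported z values would require the actual intercorrelations and sample sizes from Table 3 and knowledge of the test the authors applied.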

Second, we aggregated initial impressions across the three time points for each exercise. Here, initial impressions correlated significantly with job performance for Exercise 1 (r = .22, p = .002) and Exercise 3 (r = .21, p = .006), but not for Exercise 2 (r = .07, p = .377), even though all three exercises were criterion-valid (see Table 3). Correlations between initial impressions from different exercises and job performance differed significantly from each other for Exercise 1 versus Exercise 2 (z = 2.04, p = .020), and for Exercise 2 versus Exercise 3 (z = −1.81, p = .035), but not for Exercise 1 versus Exercise 3 (z = 0.14, p = .445).

Lastly, when aggregating initial impressions across all exercises and time points, the relationship between overall initial impressions and job performance was significant (r = .18, p = .012). Descriptively, this relationship appeared to approach the relationship between aggregated ratings of AC performance and job performance (r = .23, p < .001). Statistically, the correlations of job performance with overall initial impressions and with overall AC performance did not differ significantly from each other (z = −0.90, p = .184).
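The z tests reported in this section compare correlations that share the same criterion (job performance) and stem from the same sample, so the two correlations being compared are dependent. The text does not state which test was used; the following is a minimal sketch of one common option, the z test by Meng, Rosenthal, and Rubin (1992), and is not necessarily the procedure in the authors' R code. The function name, the sample size, and the correlation between the two predictors are hypothetical placeholders. The one-tailed p value is an assumption that appears consistent with the reported z and p pairs.

```python
import math


def compare_dependent_correlations(r1, r2, r_between, n):
    """z test for two correlations that share one variable (Meng et al., 1992).

    r1, r2     -- correlations of predictor 1 and predictor 2 with the criterion
    r_between  -- correlation between the two predictors (needed because the
                  correlations are dependent)
    n          -- sample size
    Returns (z, one-tailed p).
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)            # Fisher z transformation
    mean_r_sq = (r1 ** 2 + r2 ** 2) / 2
    f = min((1 - r_between) / (2 * (1 - mean_r_sq)), 1.0)  # f is capped at 1
    h = (1 - f * mean_r_sq) / (1 - mean_r_sq)
    z = (z1 - z2) * math.sqrt((n - 3) / (2 * (1 - r_between) * h))
    p_one_tailed = 0.5 * math.erfc(abs(z) / math.sqrt(2))  # upper-tail normal area
    return z, p_one_tailed


# r1 and r2 are the Exercise 1 and Exercise 2 correlations reported above.
# HYPOTHETICAL inputs: n = 190 and r_between = .60 are placeholders, so the
# output is illustrative and not expected to reproduce the reported z value.
z, p = compare_dependent_correlations(r1=0.22, r2=0.07, r_between=0.60, n=190)
print(f"z = {z:.2f}, one-tailed p = {p:.3f}")
```

Because the test statistic depends on the correlation between the two predictors, the same difference between two validity coefficients can be significant or not depending on how strongly the two sets of initial impression ratings overlap.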

Discussion

The present study set out to extend prior research highlighting the role of initial impressions for AC ratings (Ingold et al., Reference Ingold, Dönni and Lievens2018) and to generate new knowledge about the consistency and relevance of slice positions for impressions in ACs. Drawing from the “thin slices” of behavior paradigm (e.g., Ambady, Reference Ambady2010; Ambady & Rosenthal, Reference Ambady and Rosenthal1992), results showed that initial impressions formed upon different slices of AC exercises (i.e., two minutes at the beginning, middle, and end of an AC exercise) were consistent across and within exercises, predicted AC performance, and—for two out of three time points and exercises—also predicted job performance.

Findings and implications for theory and research

A key finding of this study is that the relationship between initial impressions and AC performance is consistent and independent of which slice of an AC exercise is used to assess initial impressions. Across different exercises, we found that initial impressions were significantly related to ratings of AC performance, regardless of whether initial impressions were based on observing assessees at the beginning, middle, or end of an exercise. This also aligns with our finding that initial impressions were relatively consistent across different slices (i.e., across exercises and time points). Thus, assessees seem to convey similar impressions across different slices that consistently relate to AC performance. This effect exists even across different raters (as every rater was only allowed to see a candidate once), which speaks in favor of assessees’ consistency in conveying impressions. Conceptually, these findings imply that the initial impressions that a person evokes in others (a) are a relatively stable person characteristic in different evaluative situations within an AC (consistent with research on social cognition; e.g., Fiske et al., Reference Fiske, Cuddy and Glick2007), and (b) are likely, across different slices, to influence how assessors evaluate this person’s behavior in an AC (consistent with dual process theory; Evans, Reference Evans2008).

A second relevant finding is that most of the initial impression ratings were positively related to job performance, thereby suggesting that initial impressions appear to contain some criterion-relevant information. Concerning the relevance of the time point of the slice in relation to job performance, the timing of the slice appeared irrelevant for the prediction of job performance, given that the consistently positive correlations from different time points did not differ statistically. At the same time, the relationship of exercise-level initial impressions and job performance appeared to differ across exercises (with one correlation based on initial impressions in one exercise being not significant and differing in size in comparison to the two correlations from the other two exercises). Thus, it seems noteworthy to study the influence of the situation on the criterion-relevance of initial impressions systematically (see below)—especially since all three exercises were structurally similar (i.e., all of them were LGDs) and criterion-valid (i.e., ratings of AC performance from all three exercises related to supervisory performance ratings). In combination with the exploratory finding that the relationship between overall initial impressions (i.e., when aggregated across all time points and exercises) and job performance did not differ significantly from the relationship of AC performance with job performance, these findings provide further evidence for the perspective that initial impressions in interactive personnel selection methods can contain valid information (Barrick et al., Reference Barrick, Swider and Stewart2010; Ingold et al., Reference Ingold, Dönni and Lievens2018).

At first sight, our results might then be read to suggest that when raters can observe assessees’ behavior in well-constructed AC exercises, detailed observations by trained assessors could be complemented, and to some degree potentially compensated for, by using a larger number of untrained raters providing generic evaluations (i.e., initial impression ratings). Yet, the reliability and total number of raters for AC ratings versus initial impression ratings need to be taken into consideration when interpreting the criterion-related validity results of aggregated initial impression ratings. The reliability of a single untrained lay rater in this study was ICC(1,1) = .30, whereas the reliability of a single trained assessor was ICC(1,1) = .68 (before discussing their individual ratings with other assessors). This illustrates that ratings from untrained lay raters are likely to be substantially less reliable than ratings from trained assessors, which in turn implies that many more lay raters are needed to achieve reliable scores (see also Wirz et al., Reference Wirz, Melchers, Lievens, De Corte and Kleinmann2013). For example, the overall score for AC performance in the present study relied on ratings from four assessors per assessee, whereas the overall score for initial impressions relied on ratings from 45 raters per assessee. In line with classical test theory (e.g., Gulliksen, Reference Gulliksen and Gulliksen1950), increasing the number of raters (which is equivalent to increasing test length) enhances the reliability of a measure, which has the potential to improve validity (if performance-relevant information is assessed), such that the considerably higher number of raters worked in favor of the criterion-related validity of initial impressions. This favorable effect of aggregating ratings from multiple raters has also been discussed in the context of initial impressions (Eisenkraft, Reference Eisenkraft2013; Ingold et al., Reference Ingold, Dönni and Lievens2018) as well as for multiple shorter speed assessments (Herde & Lievens, Reference Herde and Lievens2023). Accordingly, we see this research as a starting point and advocate for more research that scrutinizes the factors contributing to the criterion-relevant variance of initial impression ratings to allow for a more comprehensive database and a better theoretical understanding of the underlying factors.
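The classical test theory argument above can be illustrated with the Spearman-Brown formula, which projects the reliability of a score averaged across k raters from the reliability of a single rater. The following is a rough sketch rather than a reanalysis: it plugs in the single-rater ICC(1,1) values and rater counts reported above and assumes that raters are interchangeable and that all of them rate the same material. That assumption does not strictly hold here, because ratings were spread across exercises and time points. The function names are illustrative.

```python
import math


def spearman_brown(single_rater_reliability, n_raters):
    """Projected reliability of the mean rating across n_raters raters."""
    r = single_rater_reliability
    return n_raters * r / (1 + (n_raters - 1) * r)


def raters_needed(single_rater_reliability, target_reliability):
    """Smallest number of raters whose mean rating reaches the target reliability."""
    r, t = single_rater_reliability, target_reliability
    # Spearman-Brown solved for k; the small tolerance guards against
    # floating-point noise when the exact solution is an integer.
    return math.ceil(t * (1 - r) / (r * (1 - t)) - 1e-9)


lay_icc = 0.30        # single untrained lay rater, as reported above
assessor_icc = 0.68   # single trained assessor, as reported above

lay_aggregate = spearman_brown(lay_icc, 45)            # 45 lay raters per assessee
assessor_aggregate = spearman_brown(assessor_icc, 4)   # 4 assessors per assessee

print(round(lay_aggregate, 2))       # 0.95
print(round(assessor_aggregate, 2))  # 0.89
print(raters_needed(lay_icc, assessor_aggregate))  # 20
```

Under these simplifying assumptions, roughly 20 lay raters would be needed to match the projected reliability of four trained assessors, and the 45 lay raters per assessee would exceed it, in line with the point that the larger number of raters worked in favor of the aggregated initial impression ratings.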

To advance from here, future research particularly needs to shed light on the key drivers of the criterion-relevance of initial impressions, thereby extending prior research on antecedents of initial impressions in selection settings (Barrick et al., Reference Barrick, Dustin, Giluk, Stewart, Shaffer and Swider2012; Ingold et al., Reference Ingold, Dönni and Lievens2018; Swider et al., Reference Swider, Barrick and Harris2016). One methodological approach to this question lies in coding micro-behaviors (Breil et al., Reference Breil, Lievens, Forthmann and Back2023) that are potentially observable in thin slices of AC exercises. This would make it possible to examine the extent to which initial impressions rely on observations of micro-behaviors (i.e., the degree to which they may be influenced by Type 2 processing; intentional and targeted evaluations) and thereby contribute to assessors’ ratings of AC performance. This is also relevant in light of the discussion about how Type 1 and Type 2 processing interrelate (Evans & Stanovich, Reference Evans and Stanovich2013), and for determining why some initial impressions appear to be more job relevant than others (as discussed above).

Whereas selection research on initial impressions has tended to look into the characteristics of the person being rated (e.g., Barrick et al., Reference Barrick, Dustin, Giluk, Stewart, Shaffer and Swider2012; Ingold et al., Reference Ingold, Dönni and Lievens2018), another relevant area of research relates to the role of situational characteristics in the criterion-relevance of initial impressions. In this study, the interpersonal, work-related nature of the AC exercises might have contributed to the criterion-relevance of initial impressions. To allow for a better understanding of the situational component, we suggest examining how the fidelity and job relevance of AC exercises impact the degree to which initial impressions may predict job performance, for instance by manipulating situational characteristics in experimental studies.

Limitations

A relevant limitation is that the AC in this study was not conducted for selection purposes, which could lead to an overestimation of the criterion-related validity of initial impressions to some degree. The AC in this study was part of a job application training, and therefore stakes were not as high as for assessees in real selection situations, even though we simulated an AC that followed the international AC guidelines (International Taskforce on Assessment Center Guidelines, 2015) and assessees reported that they were motivated to perform well in the AC. In a high-stakes selection AC, assessees might be more nervous or engage in more impression management than in the present AC. In consequence, the initial impressions that assessees convey in a high-stakes AC might be less criterion-valid than the initial impressions conveyed in the present AC because assessees’ appearance and behavior in a high-stakes AC might be less representative of the impressions they typically convey at work.

A second limitation is that assessees in the present study held various jobs, which could lead to an underestimation of the criterion-related validity of AC ratings to some degree. Although the conducted AC was criterion-valid, criterion-validity estimates for AC performance ratings might be higher in a study with assessees who hold similar types of jobs and in which AC exercises are specifically tailored to this type of job (see Bartels & Doverspike, Reference Bartels and Doverspike1997).

Implications for AC practices

The findings of this study offer two major takeaways for AC practitioners. First, initial impressions appear to contain information that is relevant to job performance. Therefore, it might be helpful to consider assessing initial impressions in ACs (notably in addition to traditional AC ratings)—instead of telling raters to suppress initial impressions. Second, in light of earlier research on the relevance of different exercises for the criterion-related validity (Speer et al., Reference Speer, Christiansen, Goffin and Goff2014), practitioners and researchers should join forces in piloting ACs with multiple shortened exercises (Herde & Lievens, Reference Herde and Lievens2020), and notably evaluate effects on the AC’s criterion-related validity across various samples to allow for meta-analytic conclusions on the validity of multiple shorter observations with different rating approaches in ACs in the long run.

Data availability statement

The data and R code for this article can be found in the online supplements at https://osf.io/e4rsq.

Acknowledgements

We thank Nadine Maier and Anna Luisa Grimm for helping to collect the initial impression data for this study. Parts of the data were collected as part of a larger Swiss National Science Foundation Project (grant number 146039), and parts of these data have been used in Heimann et al. (Reference Heimann, Ingold, Lievens, Melchers, Keen and Kleinmann2022) and Heimann et al. (Reference Heimann, Ingold, Debus and Kleinmann2021).

Competing interests

We have no known conflict of interest to disclose.