1 Introduction and main results

1.1 Introduction

Given an ergodic measure preserving system

![]() $(X, \mu ,T)$

and functions

$(X, \mu ,T)$

and functions

![]() $f,g\in L^\infty (\mu )$

, it was shown in [Reference Frantzikinakis6] that for distinct

$f,g\in L^\infty (\mu )$

, it was shown in [Reference Frantzikinakis6] that for distinct

![]() $a,b\in {\mathbb R}_+\setminus {\mathbb Z}$

, we have

$a,b\in {\mathbb R}_+\setminus {\mathbb Z}$

, we have

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, T^{[n^a]}f\cdot T^{[n^b]}g=\int f\, d\mu \cdot \int g\, d\mu\\[-16pt]\nonumber \end{align} $$

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, T^{[n^a]}f\cdot T^{[n^b]}g=\int f\, d\mu \cdot \int g\, d\mu\\[-16pt]\nonumber \end{align} $$

in

![]() $L^2(\mu )$

.Footnote

1

An immediate consequence of this limit formula is that for every (not necessarily ergodic) measure preserving system and measurable set A, we have

$L^2(\mu )$

.Footnote

1

An immediate consequence of this limit formula is that for every (not necessarily ergodic) measure preserving system and measurable set A, we have

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, \mu(A\cap T^{-[n^a]}A\cap T^{-[n^b]}A)\geq \mu(A)^3.\\[-16pt]\nonumber \end{align} $$

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, \mu(A\cap T^{-[n^a]}A\cap T^{-[n^b]}A)\geq \mu(A)^3.\\[-16pt]\nonumber \end{align} $$

Examples of periodic systems show that equations (1) and (2) fail if either a or b is an integer greater than

![]() $1$

. Using the Furstenberg correspondence principle [Reference Furstenberg10, Reference Furstenberg11], it is easy to deduce from equation (2) that every set of integers with positive upper density contains patterns of the form

$1$

. Using the Furstenberg correspondence principle [Reference Furstenberg10, Reference Furstenberg11], it is easy to deduce from equation (2) that every set of integers with positive upper density contains patterns of the form

for some

![]() $m,n\in {\mathbb N}$

.

$m,n\in {\mathbb N}$

.

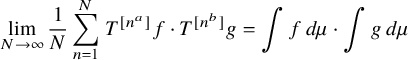

The main goal of this article is to establish similar convergence and multiple recurrence results, and deduce related combinatorial consequences, when in the previous statements we replace the variable n with the nth prime number $p_n$. For instance, we show in Theorem 1.1 that if $a,b\in {\mathbb R}_+$ are distinct nonintegers, then

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, T^{[p_n^a]}f\cdot T^{[p_n^b]}g=\int f\, d\mu \cdot \int g\, d\mu \tag{3} \end{align} $$

in $L^2(\mu )$. We also prove more general statements of this sort involving two or more linearly independent polynomials with fractional exponents evaluated at primes (related results for fractional powers of integers were previously established in [4, 6, 26]).

If $a,b\in {\mathbb N}$ are natural numbers, then equation (3) fails because of obvious congruence obstructions. On the other hand, using the method in [9], it can be shown that if $a,b\in {\mathbb N}$ are distinct, then equation (3) does hold under the additional assumption that the system is totally ergodic; see also [19, 20] for related work regarding polynomials in ${\mathbb R}[t]$ evaluated at primes. The main idea in the proof of these results is to show that the difference of a modification of the averages in equation (3) and the averages in equation (1) converges to $0$ in $L^2(\mu )$. This comparison method works well when $a,b$ are positive integers since, in this case, one can bound this difference by the Gowers uniformity norm of the modified von Mangoldt function $\tilde {\Lambda }_N$ (see [9, Lemma 3.5] for the precise statement), which is known by [14] to converge to $0$ as $N\to \infty $. Unfortunately, if $a,b$ are not integers, this comparison step breaks down, since it requires a uniformity property for $\tilde {\Lambda }_N$ in which some of the averaging parameters lie in very short intervals, a property that is currently not known. An alternative approach for establishing equation (3) is given by the argument used in [6] to prove equation (1). It uses the theory of characteristic factors that originates from [16] and eventually reduces the problem to an equidistribution result on nilmanifolds. This method is also blocked since we are unable to establish the needed equidistribution properties on general nilmanifolds (Footnote 2).

Our approach is quite different and is based on a recent result of the author from [8] (see Theorem 2.1 below); it implies that in order to verify equation (3), it suffices to obtain suitable seminorm estimates and equidistribution results on the circle (versus the general nilmanifold that the method of characteristic factors requires). The needed equidistribution property follows from [2] (see Theorem 2.2 below), and the bulk of this article is devoted to the rather tricky proof of the seminorm estimates (see Theorem 1.4 below).

1.2 Main results

To facilitate discussion, we use the following definition from [Reference Frantzikinakis8].

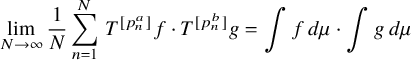

Definition. We say that the collection of sequences $b_1,\ldots , b_\ell \colon {\mathbb N}\to {\mathbb Z}$ is jointly ergodic if, for every ergodic system $(X,\mu ,T)$ and functions $f_1,\ldots , f_\ell \in L^\infty (\mu )$, we have

$$ \begin{align*} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, T^{b_1(n)}f_1 \cdot\ldots \cdot T^{b_\ell(n)}f_\ell= \int f_1\, d\mu\cdot \ldots \cdot \int f_\ell\, d\mu \end{align*} $$

in $L^2(\mu )$.

For instance, the identities in equations (1) and (3) are equivalent to the joint ergodicity of the pairs of sequences $\{[n^a], [n^b]\}$ and $\{[p_n^a], [p_n^b]\}$ when $a,b\in {\mathbb R}_+$ are distinct nonintegers.

We will establish joint ergodicity properties involving the class of fractional polynomials that we define next.

Definition. A polynomial with real exponents is a function $a\colon {\mathbb R}_+\to {\mathbb R}$ of the form $a(t)=\sum _{j=1}^r \alpha _jt^{d_j}$, where $\alpha _j\in {\mathbb R}$ and $d_j\in {\mathbb R}_+$, $j=1,\ldots , r$. If $d_1,\ldots , d_r\in {\mathbb R}_+\setminus {\mathbb Z}$, we call it a fractional polynomial.

The following is the main result of this article:

Theorem 1.1. Let $a_1,\ldots , a_\ell $ be linearly independent (Footnote 3) fractional polynomials. Then the collection of sequences $[a_1(p_n)],\ldots , [a_\ell (p_n)]$ is jointly ergodic.

In particular, this applies to the collection of sequences

![]() $[n^{c_1}],\ldots , [n^{c_\ell }]$

, where

$[n^{c_1}],\ldots , [n^{c_\ell }]$

, where

![]() $c_1,\ldots , c_\ell \in {\mathbb R}_+\setminus {\mathbb Z}$

are distinct. We remark also that the linear independence assumption is necessary for joint ergodicity. Indeed, suppose that

$c_1,\ldots , c_\ell \in {\mathbb R}_+\setminus {\mathbb Z}$

are distinct. We remark also that the linear independence assumption is necessary for joint ergodicity. Indeed, suppose that

![]() $a_1,\ldots ,a_\ell $

is a collection of linearly depended sequences. Then

$a_1,\ldots ,a_\ell $

is a collection of linearly depended sequences. Then

![]() $c_1a_1+\cdots +c_\ell a_\ell =0$

for some

$c_1a_1+\cdots +c_\ell a_\ell =0$

for some

![]() $c_1,\ldots , c_\ell \in {\mathbb R}$

not all of them

$c_1,\ldots , c_\ell \in {\mathbb R}$

not all of them

![]() $0$

. After multiplying by an appropriate constant, we can assume that at least one of the

$0$

. After multiplying by an appropriate constant, we can assume that at least one of the

![]() $c_1,\ldots , c_\ell $

is not an integer and

$c_1,\ldots , c_\ell $

is not an integer and

![]() $\max _{i=1,\ldots , \ell }|c_i|\leq 1/(10\ell )$

. Then

$\max _{i=1,\ldots , \ell }|c_i|\leq 1/(10\ell )$

. Then

![]() $c_1[a_1(n)]+\cdots +c_\ell [a_\ell (n)]\in [-1/10,1/10]$

for all

$c_1[a_1(n)]+\cdots +c_\ell [a_\ell (n)]\in [-1/10,1/10]$

for all

![]() $n\in {\mathbb N}$

, and this easily implies that the collection

$n\in {\mathbb N}$

, and this easily implies that the collection

![]() $[a_1(n)],\ldots , [a_\ell (n)]$

is not good for equidistribution (see definition in Section 2) and hence not jointly ergodic.

$[a_1(n)],\ldots , [a_\ell (n)]$

is not good for equidistribution (see definition in Section 2) and hence not jointly ergodic.

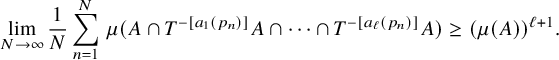
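The necessity argument above can be checked numerically on one concrete instance. The choice below is ours and purely illustrative (it does not come from the source): $\ell =2$, $a_1(t)=t^{1/2}$, $a_2(t)=2t^{1/2}$, $c_1=1/20$, $c_2=-1/40$. Then $c_1a_1+c_2a_2=0$, $\max |c_i|=1/(10\ell )$, and since $2[x]-[2x]\in \{-1,0\}$, the combination $c_1[a_1(n)]+c_2[a_2(n)]$ only takes the values $-1/40$ and $0$. With $t_1=1/20$ and $t_2=39/40$ (which gives the same exponentials as $-1/40$ because the sequences are integer valued), the averages in the definition of good for equidistribution have real part at least $\cos (\pi /20)$, so they cannot tend to $0$. A minimal Python sketch, with hypothetical function names:

```python
import cmath
import math


def e(t: float) -> complex:
    """e(t) := exp(2*pi*i*t), the notation of Section 1.4."""
    return cmath.exp(2j * math.pi * t)


def combination(n: int) -> int:
    """Return 2*[sqrt(n)] - [2*sqrt(n)], computed exactly with integer square roots."""
    # [sqrt(n)] = isqrt(n) and [2*sqrt(n)] = [sqrt(4n)] = isqrt(4n).
    return 2 * math.isqrt(n) - math.isqrt(4 * n)


def dependent_average(N: int) -> complex:
    """Average over n in [N] of e(c1*[a1(n)] + c2*[a2(n)]) with c1 = 1/20, c2 = -1/40."""
    # c1*[a1(n)] + c2*[a2(n)] = (2*[sqrt(n)] - [2*sqrt(n)]) / 40.
    return sum(e(combination(n) / 40) for n in range(1, N + 1)) / N


if __name__ == "__main__":
    N = 10_000
    values = {combination(n) for n in range(1, N + 1)}
    print("values of 2[sqrt n] - [2 sqrt n]:", sorted(values))
    print("average:", dependent_average(N))
    print("lower bound cos(pi/20):", math.cos(math.pi / 20))
```

Integer square roots are used instead of floating point so that the integer parts are exact for every $n$.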

Using standard methods, we immediately deduce from Theorem 1.1 the following multiple recurrence result:

Corollary 1.2. Let

![]() $a_1,\ldots , a_\ell $

be linearly independent fractional polynomials. Then for every system

$a_1,\ldots , a_\ell $

be linearly independent fractional polynomials. Then for every system

![]() $(X,\mu ,T)$

and measurable set A, we have

$(X,\mu ,T)$

and measurable set A, we have

$$ \begin{align*} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, \mu(A\cap T^{-[a_1(p_n)]}A\cap\cdots\cap T^{-[a_{\ell}(p_n)]}A)\geq (\mu(A))^{\ell+1}. \end{align*} $$

$$ \begin{align*} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, \mu(A\cap T^{-[a_1(p_n)]}A\cap\cdots\cap T^{-[a_{\ell}(p_n)]}A)\geq (\mu(A))^{\ell+1}. \end{align*} $$

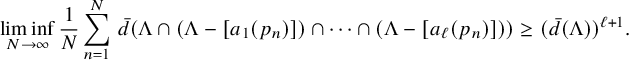

Using the Furstenberg correspondence principle [Reference Furstenberg10, Reference Furstenberg11], we deduce the following combinatorial consequence:

Corollary 1.3. Let

![]() $a_1,\ldots , a_\ell $

be linearly independent fractional polynomials. Then for every subset

$a_1,\ldots , a_\ell $

be linearly independent fractional polynomials. Then for every subset

![]() $\Lambda $

of

$\Lambda $

of

![]() ${\mathbb N}$

, we haveFootnote

4

${\mathbb N}$

, we haveFootnote

4

$$ \begin{align*} \liminf_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, \bar{d}(\Lambda\cap (\Lambda -[a_1(p_n)])\cap \cdots \cap (\Lambda -[a_\ell(p_n)]))\geq (\bar{d}(\Lambda))^{\ell+1}.\\[-15pt] \end{align*} $$

$$ \begin{align*} \liminf_{N\to\infty} \frac{1}{N}\sum_{n=1}^N \, \bar{d}(\Lambda\cap (\Lambda -[a_1(p_n)])\cap \cdots \cap (\Lambda -[a_\ell(p_n)]))\geq (\bar{d}(\Lambda))^{\ell+1}.\\[-15pt] \end{align*} $$

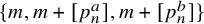

Hence, every set of integers with positive upper density contains patterns of the form $\{m,m+[a_1(p_n)], \ldots , m+[a_\ell (p_n)] \}$ for some $m,n\in {\mathbb N}$.

An essential tool in the proof of our main result is the following statement that is of independent interest since it covers a larger class of collections of fractional polynomials (not necessarily linearly independent) evaluated at primes. See Section 2 for the definition of the seminorms

![]() $\lvert \!|\!| \cdot |\!|\!\rvert _s$

.

$\lvert \!|\!| \cdot |\!|\!\rvert _s$

.

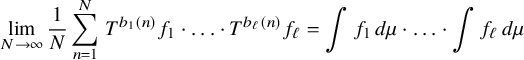

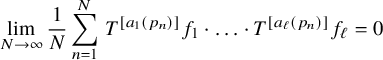

Theorem 1.4. Suppose that the fractional polynomials

![]() $a_1,\ldots , a_\ell $

and their pairwise differences are nonzero. Then there exists

$a_1,\ldots , a_\ell $

and their pairwise differences are nonzero. Then there exists

![]() $s\in {\mathbb N}$

such that for every ergodic system

$s\in {\mathbb N}$

such that for every ergodic system

![]() $(X,\mu ,T)$

and functions

$(X,\mu ,T)$

and functions

![]() $f_1,\ldots , f_\ell \in L^\infty (\mu )$

with

$f_1,\ldots , f_\ell \in L^\infty (\mu )$

with

![]() $\lvert \!|\!| f_i|\!|\!\rvert _{s}=0$

for some

$\lvert \!|\!| f_i|\!|\!\rvert _{s}=0$

for some

![]() $i\in \{1,\ldots , \ell \}$

, we have

$i\in \{1,\ldots , \ell \}$

, we have

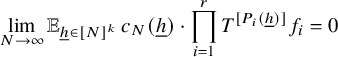

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N\, T^{[a_1(p_n)]}f_1\cdot \ldots \cdot T^{[a_\ell(p_n)]}f_\ell=0\\[-15pt]\nonumber \end{align} $$

$$ \begin{align} \lim_{N\to\infty} \frac{1}{N}\sum_{n=1}^N\, T^{[a_1(p_n)]}f_1\cdot \ldots \cdot T^{[a_\ell(p_n)]}f_\ell=0\\[-15pt]\nonumber \end{align} $$

in

![]() $L^2(\mu )$

.

$L^2(\mu )$

.

Remark. It seems likely that with some additional effort the techniques of this article can cover the more general case of Hardy field functions $a_1,\ldots , a_\ell $ such that the functions and their differences belong to the set $\{a\colon {\mathbb R}_+\to {\mathbb R}\colon t^{k+\varepsilon }\prec a(t)\prec t^{k+1-\varepsilon } \text { for some } k\in {\mathbb Z}_+ \text { and } \varepsilon>0\}$. Using the equidistribution result in [3] and the argument in Section 2, this would immediately give a corresponding strengthening of Theorem 1.1. We opted not to deal with these more general statements because the added technical complexity would obscure the main ideas of the proof of Theorem 1.4.

The proof of Theorem 1.4 crucially uses the fact that the iterates $a_1,\ldots , a_\ell $ have ‘fractional power growth’, and our argument fails for iterates with ‘integer power growth’. Similar results that cover the case of polynomials with integer or real coefficients were obtained in [9, 29] and [19], respectively, and depend on deep properties of the von Mangoldt function from [13] and [14], but these results and their proofs do not appear to be useful for our purposes. Instead, we rely on some softer number theory input that follows from standard sieve theory techniques (see Section 3.2) and an argument that is fine-tuned for the case of fractional polynomials (but fails for polynomials with integer exponents). This argument eventually enables us to bound the averages in equation (4) with averages involving iterates given by multivariate polynomials with real coefficients evaluated at the integers, a case that was essentially handled in [23].

1.3 Limitations of our techniques and open problems

We expect that the following generalisation of Theorem 1.1 holds:

Problem. Let $a_1,\ldots ,a_\ell $ be functions from a Hardy field with polynomial growth such that every nontrivial linear combination b of them satisfies $ |b(t)-p(t)|/\log {t}\to \infty $ for all $p\in {\mathbb Z}[t]$. Then the collection of sequences $[a_1(p_n)],\ldots , [a_\ell (p_n)]$ is jointly ergodic.

By Theorem 2.1, it suffices to show that the collection

![]() $[a_1(p_n)],\ldots , [a_\ell (p_n)]$

is good for equidistribution and seminorm estimates. Although the needed equidistribution property has been proved in [Reference Bergelson, Kolesnik and Son3, Theorem 3.1], the seminorm estimates that extend Theorem 1.4 seem hard to establish. Our argument breaks down when some of the functions, or their differences, are close to integral powers of t: for example, when they are

$[a_1(p_n)],\ldots , [a_\ell (p_n)]$

is good for equidistribution and seminorm estimates. Although the needed equidistribution property has been proved in [Reference Bergelson, Kolesnik and Son3, Theorem 3.1], the seminorm estimates that extend Theorem 1.4 seem hard to establish. Our argument breaks down when some of the functions, or their differences, are close to integral powers of t: for example, when they are

![]() $t^k\log {t}$

or

$t^k\log {t}$

or

![]() $t^k/\log \log {t}$

for some

$t^k/\log \log {t}$

for some

![]() $k\in {\mathbb N}$

. In both cases, the vdC-operation (see Section 5.2) leads to sequences of sublinear growth for which we can no longer establish Lemma 4.1, in the first case because the estimate equation (20) fails and in the second case because in equation (22), the length of the interval in the average is too short for Corollary 3.4 to be applicable.

$k\in {\mathbb N}$

. In both cases, the vdC-operation (see Section 5.2) leads to sequences of sublinear growth for which we can no longer establish Lemma 4.1, in the first case because the estimate equation (20) fails and in the second case because in equation (22), the length of the interval in the average is too short for Corollary 3.4 to be applicable.

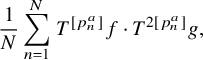

Finally, we remark that although the reduction offered by Theorem 2.1 is very helpful when dealing with averages with independent iterates, as is the case in equation (3), it does not offer any help when the iterates are linearly dependent, which is the case for the averages

$$ \begin{align} \frac{1}{N}\sum_{n=1}^N \, T^{[p_n^{a}]}f\cdot T^{2[p_n^a]}g, \tag{5} \end{align} $$

where $a\in {\mathbb R}_+$ is not an integer. We do expect the $L^2(\mu )$-limit of the averages in equation (5) to be equal to the $L^2(\mu )$-limit of the averages $ \frac {1}{N}\sum _{n=1}^N \,T^{n}f\cdot T^{2n}g$, but this remains a challenging open problem (Footnote 5); see Problem 27 in [7].

1.4 Notation

With

![]() ${\mathbb N}$

, we denote the set of positive integers, and with

${\mathbb N}$

, we denote the set of positive integers, and with

![]() ${\mathbb Z}_+$

, the set of nonnegative integers. With

${\mathbb Z}_+$

, the set of nonnegative integers. With

![]() ${\mathbb P}$

, we denote the set of prime numbers. With

${\mathbb P}$

, we denote the set of prime numbers. With

![]() ${\mathbb R}_+$

, we denote the set of nonnegative real numbers. For

${\mathbb R}_+$

, we denote the set of nonnegative real numbers. For

![]() $t\in {\mathbb R}$

, we let

$t\in {\mathbb R}$

, we let

![]() $e(t):=e^{2\pi i t}$

. If

$e(t):=e^{2\pi i t}$

. If

![]() $x\in {\mathbb R}_+$

, when there is no danger for confusion, with

$x\in {\mathbb R}_+$

, when there is no danger for confusion, with

![]() $[x]$

, we denote both the integer part of x and the set

$[x]$

, we denote both the integer part of x and the set

![]() $\{1,\ldots , [x]\}$

. We denote with

$\{1,\ldots , [x]\}$

. We denote with

![]() $\Re (z)$

the real part of the complex number z.

$\Re (z)$

the real part of the complex number z.

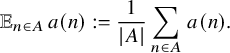

Let $a\colon {\mathbb N}\to {\mathbb C}$ be a bounded sequence. If A is a nonempty finite subset of ${\mathbb N}$, we let

$$ \begin{align*}{\mathbb E}_{n\in A}\,a(n):=\frac{1}{|A|}\sum_{n\in A}\, a(n). \end{align*} $$

If $a,b\colon {\mathbb R}_+\to {\mathbb R}$ are functions, we write

- $a(t)\prec b(t)$ if $\lim _{t\to +\infty } a(t)/b(t)=0$;

- $a(t)\sim b(t)$ if $\lim _{t\to +\infty } a(t)/b(t)$ exists and is nonzero;

- $A_{c_1,\ldots , c_\ell }(t)\ll _{c_1,\ldots , c_\ell } B_{c_1,\ldots , c_\ell }(t)$ if there exist $t_0=t_0(c_1,\ldots , c_\ell )\in {\mathbb R}_+$ and $C=C(c_1,\ldots , c_\ell )>0$ such that $|A_{c_1,\ldots , c_\ell }(t)|\leq C |B_{c_1,\ldots , c_\ell }(t)|$ for all $t\geq t_0$.

We use the same notation for sequences $a,b\colon {\mathbb N}\to {\mathbb R}$.

Throughout, we let $L_N:=[e^{\sqrt {\log {N}}}]$, $N\in {\mathbb N}$.

We say that a sequence $(c_{N,{\underline {h}}}(n))$, where ${\underline {h}}\in [L_N]^k$, $n\in [N]$, $N\in {\mathbb N}$, is bounded if there exists $C>0$ such that $|c_{N,{\underline {h}}}(n)|\leq C$ for all ${\underline {h}}\in [L_N]^k$, $n\in [N]$, $N\in {\mathbb N}$.

2 Proof strategy

Our argument depends upon a convenient criterion for joint ergodicity that was established recently in [8] (and was motivated by work in [24, 25]). To state it, we need to review the definition of the ergodic seminorms from [16].

Definition. For a given ergodic system $(X,\mu ,T)$ and function $f\in L^\infty (\mu )$, we define $\lvert \!|\!| \cdot |\!|\!\rvert _s$ inductively as follows:

$$ \begin{align*} \lvert\!|\!| f|\!|\!\rvert_{1} &:=\Big| \int f \ d\mu\Big|;\\ \lvert\!|\!| f|\!|\!\rvert_{s+1}^{2^{s+1}} &:=\lim_{N\to\infty}\frac{1}{N} \sum_{n=1}^{N} \lvert\!|\!| \overline{f}\cdot T^nf|\!|\!\rvert_{s}^{2^{s}}, \quad s\in {\mathbb N}. \end{align*} $$

It was shown in [16], via successive uses of the mean ergodic theorem, that for every $s\in {\mathbb N}$, the above limit exists, and $\lvert \!|\!| \cdot |\!|\!\rvert _s$ defines an increasing sequence of seminorms on $L^\infty (\mu )$.
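To make the inductive definition concrete, here is a small sketch on a toy system chosen by us (it is not discussed in the source): the rotation $Tx=x+1$ on ${\mathbb Z}/m$ with the uniform measure, which is ergodic. For this system the summand $n\mapsto \lvert \!|\!| \overline {f}\cdot T^nf|\!|\!\rvert _{s}^{2^{s}}$ is $m$-periodic, so the limit in the definition equals the mean over one period, and the seminorms can be computed exactly. The function names are hypothetical.

```python
import cmath
import math
from typing import List

Function = List[complex]  # f[x] is the value of f at the point x of Z/m


def shift(f: Function, n: int) -> Function:
    """T^n f for T x = x + 1 (mod m), that is, (T^n f)(x) = f(x + n)."""
    m = len(f)
    return [f[(x + n) % m] for x in range(m)]


def seminorm_power(f: Function, s: int) -> float:
    """Return |||f|||_s^(2^s) for the rotation on Z/m with uniform measure."""
    m = len(f)
    if s == 1:
        integral = sum(f) / m
        return abs(integral) ** 2
    # The summand is m-periodic in n, so the Cesaro limit is the mean over one period.
    total = 0.0
    for n in range(m):
        g = [fx.conjugate() * tx for fx, tx in zip(f, shift(f, n))]
        total += seminorm_power(g, s - 1)
    return total / m


def seminorm(f: Function, s: int) -> float:
    """Return |||f|||_s."""
    return seminorm_power(f, s) ** (1.0 / 2 ** s)


if __name__ == "__main__":
    m = 5
    character = [cmath.exp(2j * math.pi * x / m) for x in range(m)]
    for s in (1, 2, 3):
        print(s, seminorm(character, s))
```

For the nontrivial character $x\mapsto e(x/m)$, the first seminorm vanishes (the integral is zero) while the second equals $1$, which illustrates that the sequence of seminorms is increasing and that the inequality can be strict.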

Definition. We say that the collection of sequences

![]() $b_1,\ldots , b_\ell \colon {\mathbb N}\to {\mathbb Z}$

is:

$b_1,\ldots , b_\ell \colon {\mathbb N}\to {\mathbb Z}$

is:

-

1. Good for seminorm estimates, if for every ergodic system

$(X,\mu ,T)$

, there exists

$(X,\mu ,T)$

, there exists

$s\in {\mathbb N}$

such that if

$s\in {\mathbb N}$

such that if

$f_1,\ldots , f_\ell \in L^\infty (\mu )$

and

$f_1,\ldots , f_\ell \in L^\infty (\mu )$

and

$\lvert \!|\!| f_m|\!|\!\rvert _{s}=0$

for some

$\lvert \!|\!| f_m|\!|\!\rvert _{s}=0$

for some

$m\in \{1,\ldots , \ell \}$

, then (6)in

$m\in \{1,\ldots , \ell \}$

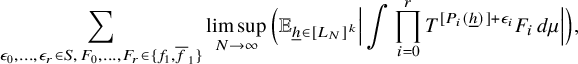

, then (6)in $$ \begin{align} \lim_{N\to\infty} {\mathbb E}_{n\in [N]}\, T^{b_1(n)}f_1\cdot\ldots \cdot T^{b_m(n)}f_m= 0 \end{align} $$

$$ \begin{align} \lim_{N\to\infty} {\mathbb E}_{n\in [N]}\, T^{b_1(n)}f_1\cdot\ldots \cdot T^{b_m(n)}f_m= 0 \end{align} $$

$L^2(\mu )$

.Footnote

6

$L^2(\mu )$

.Footnote

6

-

2. Good for equidistribution, if for all

$t_1,\ldots , t_\ell \in [0,1)$

, not all of them

$t_1,\ldots , t_\ell \in [0,1)$

, not all of them

$0$

, we have

$0$

, we have  $$ \begin{align*} \lim_{N\to\infty} {\mathbb E}_{n\in[N]}\, e(b_1(n)t_1+\cdots+ b_\ell(n)t_\ell) =0. \end{align*} $$

$$ \begin{align*} \lim_{N\to\infty} {\mathbb E}_{n\in[N]}\, e(b_1(n)t_1+\cdots+ b_\ell(n)t_\ell) =0. \end{align*} $$
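The averages in the equidistribution condition are easy to evaluate numerically for a finite $N$, which can help build intuition (a finite computation of course proves nothing about the limit). The sketch below is ours; `weyl_average` is a hypothetical helper name. It evaluates ${\mathbb E}_{n\in [N]}\, e(b_1(n)t_1+\cdots + b_\ell (n)t_\ell )$ and applies it to $b_1(n)=n^2$ with $t_1=1/3$, where the average has modulus $1/\sqrt {3}$ whenever $N$ is a multiple of $3$ (because $n^2 \bmod 3$ is periodic), so this single integer polynomial sequence is not good for equidistribution.

```python
import cmath
import math
from typing import Callable, Sequence


def weyl_average(
    sequences: Sequence[Callable[[int], int]],
    ts: Sequence[float],
    N: int,
) -> complex:
    """Return E_{n in [N]} e(b_1(n) t_1 + ... + b_l(n) t_l)."""
    total = 0j
    for n in range(1, N + 1):
        phase = sum(b(n) * t for b, t in zip(sequences, ts))
        total += cmath.exp(2j * math.pi * phase)
    return total / N


if __name__ == "__main__":
    # b(n) = n^2, t = 1/3: the modulus stays at 1/sqrt(3) for N divisible by 3.
    print(abs(weyl_average([lambda n: n * n], [1 / 3], 3000)))
    # b(n) = [n^{3/2}] = isqrt(n^3), t = 1/3: printed for comparison only.
    print(abs(weyl_average([lambda n: math.isqrt(n ** 3)], [1 / 3], 3000)))
```

The second call uses an exact integer square root for $[n^{3/2}]$; its output is shown only for comparison and no particular value is claimed here.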

We remark that any collection of nonconstant integer polynomial sequences with pairwise nonconstant differences is known to be good for seminorm estimates [23], and examples of periodic systems show that no such collection is good for equidistribution (unless $\ell =1$ and $b_1(t)=\pm t+k$). On the other hand, a collection of linearly independent fractional polynomials is known to be good both for seminorm estimates [6, Theorem 2.9] and equidistribution (follows from [22, Theorem 3.4] and [8, Lemma 6.2]).

A crucial ingredient used in the proof of our main result is the following result that gives convenient necessary and sufficient conditions for joint ergodicity of a collection of sequences (see also [5] for an extension of this result for sequences $b_1,\ldots , b_\ell \colon {\mathbb N}^k\to {\mathbb Z}$).

Theorem 2.1 ([8]). The sequences $b_1,\ldots , b_\ell \colon {\mathbb N}\to {\mathbb Z}$ are jointly ergodic if and only if they are good for equidistribution and seminorm estimates.

Remark. The proof of this result uses ‘soft’ tools from ergodic theory and avoids deeper tools like the Host-Kra theory of characteristic factors (see [17, Chapter 21] for a detailed description) and equidistribution results on nilmanifolds.

In view of this result, in order to establish Theorem 1.1, it suffices to show that a collection of linearly independent fractional polynomials evaluated at primes is good for seminorm estimates and equidistribution. The good equidistribution property is a consequence of the following result [2, Theorem 2.1]:

Theorem 2.2 ([Reference Bergelson, Kolesnik, Madritsch, Son and Tichy2])

If $a(t)$ is a nonzero fractional polynomial, then the sequence $(a(p_n))$ is equidistributed $\! \! \pmod {1}$.
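As a purely numerical illustration of Theorem 2.2 (not part of any proof), the following sketch bins the fractional parts of $a(p_n)$ for the sample choice $a(t)=t^{0.5}$ over the primes up to a cutoff. The cutoff `200_000`, the number of bins and the helper names `primes_up_to` and `bin_frequencies` are assumptions made only for this example.

```python
import math

def primes_up_to(limit):
    """Sieve of Eratosthenes: return all primes <= limit."""
    sieve = bytearray([1]) * (limit + 1)
    sieve[0:2] = b"\x00\x00"
    for p in range(2, math.isqrt(limit) + 1):
        if sieve[p]:
            # Cross out the multiples of p starting from p*p.
            sieve[p * p :: p] = bytearray(len(range(p * p, limit + 1, p)))
    return [n for n in range(limit + 1) if sieve[n]]

def bin_frequencies(values, bins=10):
    """Proportion of fractional parts {v} in each of `bins` equal subintervals of [0, 1)."""
    counts = [0] * bins
    for v in values:
        frac = v - math.floor(v)
        counts[min(int(frac * bins), bins - 1)] += 1
    return [c / len(values) for c in counts]

def a(t):
    """Sample fractional polynomial a(t) = t^0.5 (an illustrative choice)."""
    return t ** 0.5

freqs = bin_frequencies([a(p) for p in primes_up_to(200_000)])
print([round(f, 3) for f in freqs])
```

If the sequence is equidistributed, each printed proportion should be close to $1/10$; a finite computation of this kind is only a sanity check and says nothing about the rate of convergence.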

Using the previous result and [Reference Frantzikinakis8, Lemma 6.2], we immediately deduce the following:

Corollary 2.3. If

![]() $a_1,\ldots , a_\ell $

are linearly independent fractional polynomials, then the collection of sequences

$a_1,\ldots , a_\ell $

are linearly independent fractional polynomials, then the collection of sequences

![]() $[a_1(p_n)], \ldots , [a_\ell (p_n)]$

is good for equidistribution.

$[a_1(p_n)], \ldots , [a_\ell (p_n)]$

is good for equidistribution.

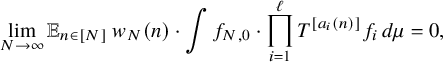

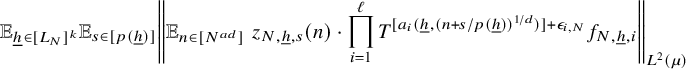

We let $\Lambda '\colon {\mathbb N}\to {\mathbb R}_+$ be the following slight modification of the von Mangoldt function: $\Lambda '(n):=\log (n)$ if n is a prime number and $0$ otherwise. To establish that the collection $[a_1(p_n)], \ldots , [a_\ell (p_n)]$ is good for seminorm estimates, it suffices to prove the following result (the case $w_N(n):=\Lambda '(n)$, $N,n\in {\mathbb N}$, implies Theorem 1.4 in a standard way; see, for example, [Reference Frantzikinakis, Host and Kra9, Lemma 2.1]):

Theorem 2.4. Suppose that the fractional polynomials $a_1,\ldots , a_\ell $ and their pairwise differences are nonzero. Then there exists $s\in {\mathbb N}$ such that the following holds: If $(X,\mu ,T)$ is an ergodic system and $f_1,\ldots , f_\ell \in L^\infty (\mu )$ are such that $\lvert \!|\!| f_i|\!|\!\rvert _{s}=0$ for some $i\in \{1,\ldots , \ell \}$, then for every $1$-bounded sequence $(c_{N}(n))$, we have

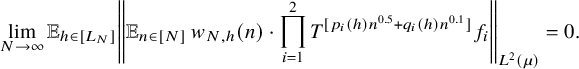

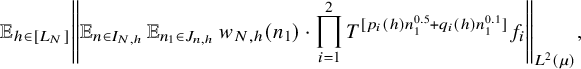

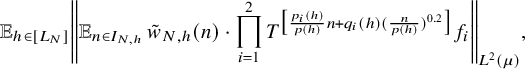

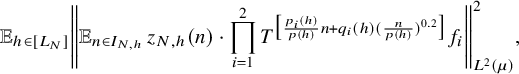

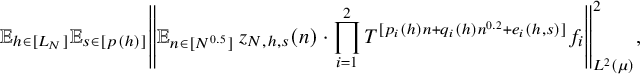

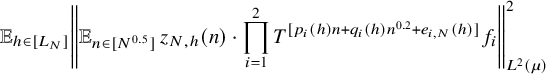

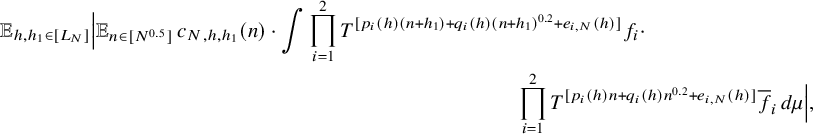

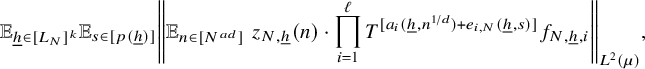

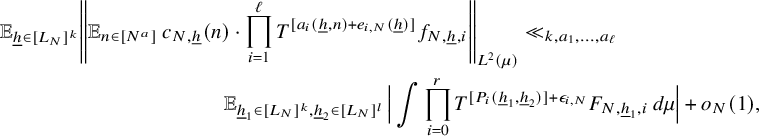

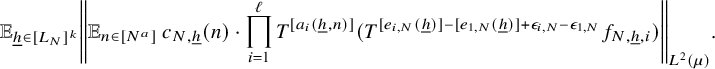

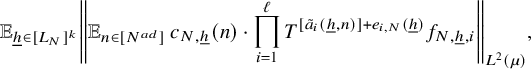

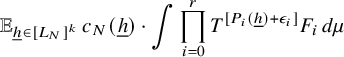

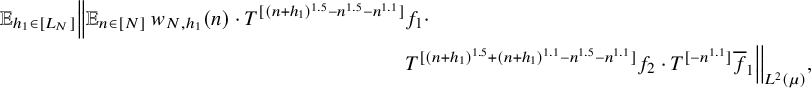

in $L^2(\mu )$, where $w_N(n):=\Lambda '(n)\cdot c_N(n)$, $n\in [N]$, $N\in {\mathbb N}$.

Remarks.

-

○ The sequence

$(c_N(n))$

is not essential in order to deduce Theorem 1.4. It is used because it helps us absorb error terms that often appear in our argument.

$(c_N(n))$

is not essential in order to deduce Theorem 1.4. It is used because it helps us absorb error terms that often appear in our argument. -

○ Our proof shows that the place of the sequence

$(\Lambda '(n))$

can take any nonnegative sequence

$(\Lambda '(n))$

can take any nonnegative sequence

$(b(n))$

that satisfies properties

$(b(n))$

that satisfies properties

$(i)$

and

$(i)$

and

$(ii)$

of Corollary 3.4 and the estimate

$(ii)$

of Corollary 3.4 and the estimate

$b(n)\ll n^\varepsilon $

for every

$b(n)\ll n^\varepsilon $

for every

$\varepsilon>0$

.

$\varepsilon>0$

.

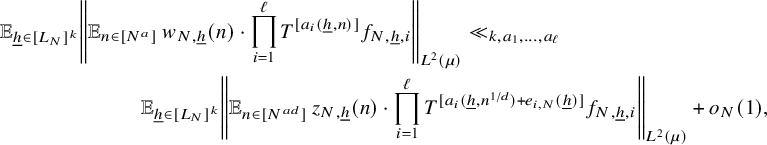

To prove Theorem 2.4, we use an induction argument, similar to the polynomial exhaustion technique (PET-induction) introduced in [Reference Bergelson1], which is based on variants of the van der Corput inequality stated immediately after Lemma 3.5. The fact that the weight sequence

![]() $(w_N(n))$

is unbounded forces us to apply Lemma 3.5 in the form given in equation (15) with

$(w_N(n))$

is unbounded forces us to apply Lemma 3.5 in the form given in equation (15) with

![]() $L_N\in {\mathbb N}$

that satisfy

$L_N\in {\mathbb N}$

that satisfy

![]() $L_N\succ (\log {N})^A$

for every

$L_N\succ (\log {N})^A$

for every

![]() $A>0$

. On the other hand, since we have to take care of some error terms that are of the order

$A>0$

. On the other hand, since we have to take care of some error terms that are of the order

![]() $L_N^B/N^a$

for arbitrary

$L_N^B/N^a$

for arbitrary

![]() $a, B>0$

, we are also forced to take

$a, B>0$

, we are also forced to take

![]() $L_N\prec N^a$

for every

$L_N\prec N^a$

for every

![]() $a>0$

in order for these errors to be negligible. These two estimates are satisfied for example when

$a>0$

in order for these errors to be negligible. These two estimates are satisfied for example when

![]() $L_N=[e^{\sqrt {\log {N}}}]$

,

$L_N=[e^{\sqrt {\log {N}}}]$

,

![]() $N\in {\mathbb N}$

, which is the value of

$N\in {\mathbb N}$

, which is the value of

![]() $L_N$

that we use henceforth.

$L_N$

that we use henceforth.

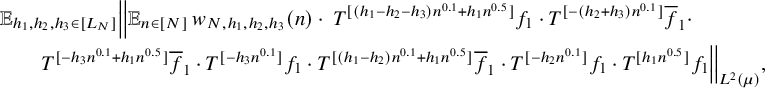
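The two growth conditions on $L_N=[e^{\sqrt {\log {N}}}]$ can be checked on a logarithmic scale. The sketch below is only an illustration: it writes $x=\log N$, ignores the integer part, and uses the sample values $A=5$ and $a=0.01$, which are assumptions of this example. With these conventions, $\log ((\log N)^A/L_N)=A\log x-\sqrt {x}$ and $\log (L_N/N^a)=\sqrt {x}-ax$, and both should tend to $-\infty $.

```python
import math

def log_gap_lower(x, A):
    """log((log N)^A / L_N) for x = log N and L_N ~ exp(sqrt(x)), integer part ignored."""
    return A * math.log(x) - math.sqrt(x)

def log_gap_upper(x, a):
    """log(L_N / N^a) under the same conventions."""
    return math.sqrt(x) - a * x

# Sample exponents (assumptions of this illustration).
for x in (1e2, 1e4, 1e6, 1e8):
    print(x, log_gap_lower(x, A=5.0), log_gap_upper(x, a=0.01))
```

For the smallest value of $x$ both quantities are still positive, which reflects that the estimates are asymptotic: the larger $A$ is and the smaller $a$ is, the larger $N$ has to be before they take effect.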

During the course of the PET-induction argument, we have to keep close track of the additional parameters $h_1,\ldots , h_k$ that arise after each application of Lemma 3.5 in the form given in equation (15). This is why we prove a more general variant of Theorem 2.4 that is stated in Theorem 3.1 and involves fractional polynomials with coefficients depending on finitely many parameters. It turns out that the most laborious part of its proof is the base case of the induction where all iterates have sublinear growth. This case is dealt with in three steps. First, in Lemma 4.1, we use a change of variables argument and the number theory input from Corollary 3.4 to reduce matters to the case where the weight sequence $(w_N(n))$ is bounded. Next, in Lemma 4.2, we use another change of variables argument and Lemma 3.5 to successively ‘eliminate’ the sequences $a_1, \ldots , a_\ell $, and, after $\ell $ iterations, we get an upper bound that involves iterates given by the integer parts of polynomials in several variables with real coefficients. Finally, in Lemma 4.3, we show that averages with such iterates obey good seminorm bounds. This last step is carried out by adapting an argument from [Reference Leibman23] to our setup; this is done by another PET-induction, which this time uses Lemma 3.5 in the form given in equation (16). In Sections 4.1 and 5.1, the reader will find examples that explain how these arguments work in some model cases that contain the essential ideas of the general arguments.

To conclude this section, we remark that to prove Theorem 1.1, it suffices to prove Theorem 2.4; the remaining sections are devoted to this task.

3 Seminorm estimates – some preparation

3.1 A more general statement

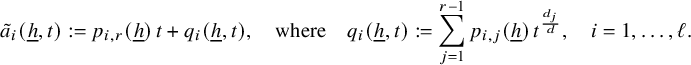

To prove Theorem 2.4, it will be convenient to establish a technically more complicated statement that is better suited for a PET-induction argument. We state it in this subsection.

Throughout, the sequence

![]() $L_N$

is chosen to satisfy

$L_N$

is chosen to satisfy

![]() $(\log {N})^A\prec L_N\prec N^a$

for all

$(\log {N})^A\prec L_N\prec N^a$

for all

![]() $A,a>0$

; so we can take, for example,

$A,a>0$

; so we can take, for example,

With

![]() ${\mathbb R}[t_1,\ldots , t_k]$

, we denote the set of polynomials with real coefficients in k-variables.

${\mathbb R}[t_1,\ldots , t_k]$

, we denote the set of polynomials with real coefficients in k-variables.

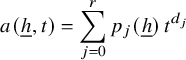

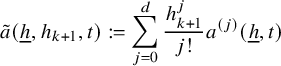

Definition. We say that $a\colon {\mathbb Z}^k\times {\mathbb R}_+\to {\mathbb R}$ is a polynomial with real exponents and k-parameters if it has the form

$$ \begin{align*} a({\underline{h}},t)=\sum_{j=0}^r p_j({\underline{h}})\, t^{d_j} \end{align*} $$

for some $r\in {\mathbb N}$, $0=d_0<d_1<\cdots <d_r\in {\mathbb R}_+$, and $p_0,\ldots , p_r\in {\mathbb R}[t_1,\ldots , t_k]$. If $d_1,\ldots , d_r\in {\mathbb R}_+\setminus {\mathbb Z}$, we call it a fractional polynomial with k-parameters. If $p_j$ is nonzero for some $j\in \{1,\ldots , r\}$, we say that $a({\underline {h}},t)$ is nonconstant. We define the fractional degree of $a({\underline {h}},t)$ to be the maximum exponent $d_j$ for which the polynomial $p_j$ is nonzero and denote it by $\text {f-deg}(a)$. We call the integer part of its fractional degree the (integral) degree of $a({\underline {h}},t)$ and denote it by $\deg (a)$. We also let $\deg (0):=-1$.

For example, the fractional polynomial with $1$-parameter $h^2 t^{0.5}+(h^2\sqrt {2}+h)t^{0.1}$ has fractional degree $0.5$ and degree $0$.
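The two notions of degree can be made concrete with a small sketch. The representation used here is an assumption of this illustration and not notation from the text: a polynomial with real exponents is stored as a dictionary from exponents $d_j$ to coefficient polynomials $p_j$, and each $p_j$ is in turn a dictionary from monomial exponent tuples in ${\underline {h}}$ to real coefficients.

```python
import math

# The example from the text: h^2 t^0.5 + (sqrt(2) h^2 + h) t^0.1, with one parameter h.
example = {
    0.5: {(2,): 1.0},                      # p(h) = h^2
    0.1: {(2,): math.sqrt(2), (1,): 1.0},  # p(h) = sqrt(2) h^2 + h
}

def is_nonzero(poly):
    """A coefficient polynomial is nonzero if some monomial has a nonzero coefficient."""
    return any(c != 0 for c in poly.values())

def f_deg(terms):
    """Largest exponent d_j whose coefficient polynomial is nonzero (None for the zero polynomial)."""
    exponents = [d for d, poly in terms.items() if is_nonzero(poly)]
    return max(exponents) if exponents else None

def deg(terms):
    """Integer part of the fractional degree, with the convention deg(0) := -1."""
    d = f_deg(terms)
    return -1 if d is None else math.floor(d)

print(f_deg(example), deg(example))
```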

Definition. We say that a collection $a_1,\ldots , a_\ell $ of polynomials with real exponents and k-parameters is nice if

1. $\text {f-deg}(a_i)\leq \text {f-deg}(a_1)$ for $i=2,\ldots , \ell $, and

2. the functions $a_1, \ldots , a_\ell $ and the functions $a_1-a_2, \ldots , a_1-a_\ell $ are nonconstant in the variable t (and as a consequence they have positive fractional degree).

Given a sequence $u\colon {\mathbb N}\to {\mathbb C}$, we let $(\Delta _hu)(n):=u(n+h)\cdot \overline {u(n)}$, $h,n\in {\mathbb N}$, and if ${\underline {h}}=(h_1,\ldots , h_k)$, we let $(\Delta _{{\underline {h}}})(u(n)):=(\Delta _{h_k}\cdots \Delta _{h_1}) (u(n))$, $h_1,\ldots , h_k,n\in {\mathbb N}$. For example, $(\Delta _{(h_1,h_2)})(u(n))= u(n+h_1+h_2)\cdot \overline {u}(n+h_1)\cdot \overline {u}(n+h_2)\cdot u(n)$, $h_1,h_2,n\in {\mathbb N}$.
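The multiplicative difference operator is easy to experiment with numerically. The following sketch is an illustration only; the function names `delta` and `delta_multi` and the sample sequence `u` are assumptions of this example. It implements $\Delta _h$ and its iterates and compares the case $k=2$ with the expanded formula given above.

```python
import cmath

def delta(u, h):
    """Return the sequence n -> u(n + h) * conj(u(n))."""
    return lambda n: u(n + h) * complex(u(n)).conjugate()

def delta_multi(u, hs):
    """Apply Delta_{h_1} first, then Delta_{h_2}, and so on (i.e. Delta_{h_k} ... Delta_{h_1})."""
    for h in hs:
        u = delta(u, h)
    return u

def u(n):
    """Sample unimodular sequence used only for this check."""
    return cmath.exp(1j * n ** 1.5)

h1, h2, n = 3, 5, 7
lhs = delta_multi(u, (h1, h2))(n)
rhs = u(n + h1 + h2) * u(n + h1).conjugate() * u(n + h2).conjugate() * u(n)
print(abs(lhs - rhs))
```

The printed difference should be zero up to floating-point rounding.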

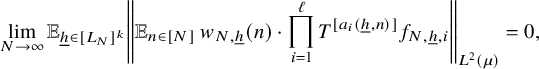

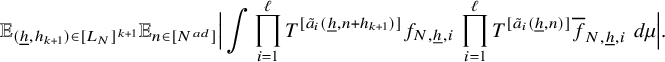

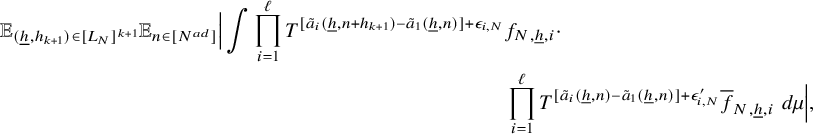

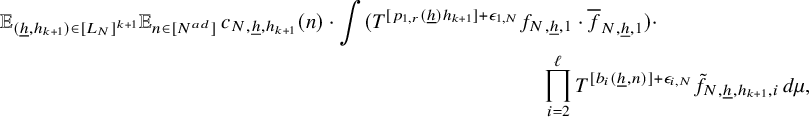

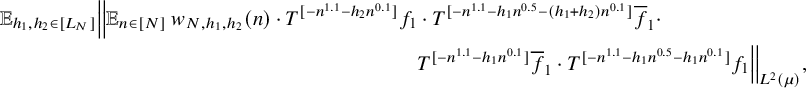

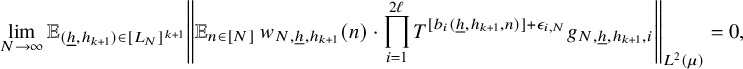

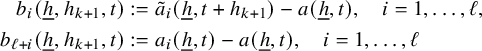

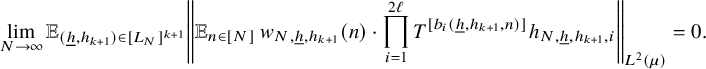

Theorem 3.1. For

![]() $k\in {\mathbb Z}_+, \ell \in {\mathbb N}$

, let

$k\in {\mathbb Z}_+, \ell \in {\mathbb N}$

, let

![]() $a_1,\ldots , a_\ell \colon {\mathbb N}^k\times {\mathbb N}\to {\mathbb R}$

be a nice collection of fractional polynomials with k-parameters and

$a_1,\ldots , a_\ell \colon {\mathbb N}^k\times {\mathbb N}\to {\mathbb R}$

be a nice collection of fractional polynomials with k-parameters and

![]() $(c_{N,{\underline {h}}}(n))$

be a

$(c_{N,{\underline {h}}}(n))$

be a

![]() $1$

-bounded sequence. Then there exists

$1$

-bounded sequence. Then there exists

![]() $s\in {\mathbb N}$

such that the following holds: If

$s\in {\mathbb N}$

such that the following holds: If

![]() $(X,\mu ,T)$

is a system and

$(X,\mu ,T)$

is a system and

![]() $f_{N,{\underline {h}},1},\ldots , f_{N,{\underline {h}},\ell }\in L^\infty (\mu )$

,

$f_{N,{\underline {h}},1},\ldots , f_{N,{\underline {h}},\ell }\in L^\infty (\mu )$

,

![]() ${\underline {h}}\in [L_N]^k,N\in {\mathbb N}$

, are

${\underline {h}}\in [L_N]^k,N\in {\mathbb N}$

, are

![]() $1$

-bounded functions with

$1$

-bounded functions with

![]() $f_{N,{\underline {h}},1}=f_1$

,

$f_{N,{\underline {h}},1}=f_1$

,

![]() ${\underline {h}}\in {\mathbb N}^k,N\in {\mathbb N}$

and

${\underline {h}}\in {\mathbb N}^k,N\in {\mathbb N}$

and

![]() $\lvert \!|\!| f_1|\!|\!\rvert _s=0$

, then

$\lvert \!|\!| f_1|\!|\!\rvert _s=0$

, then

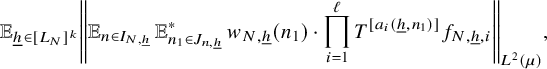

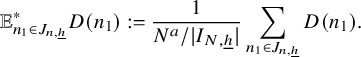

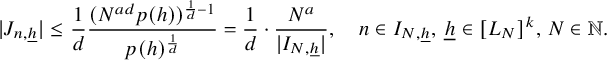

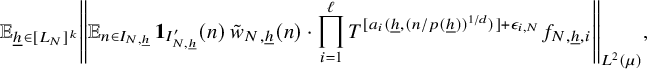

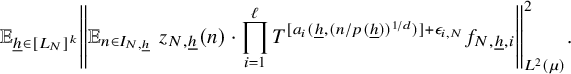

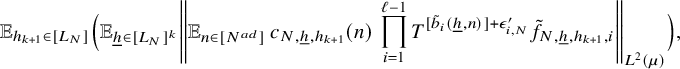

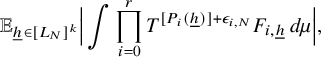

$$ \begin{align} \lim_{N\to\infty} {\mathbb E}_{\underline{h}\in [L_N]^k} \left\Vert {\mathbb E}_{n\in [N]}\, w_{N,\underline{h}}(n)\cdot \prod_{i=1}^\ell T^{[a_i(\underline{h},n)]}f_{N,{\underline{h}},i}\right\Vert_{L^2(\mu)}=0, \end{align} $$

$$ \begin{align} \lim_{N\to\infty} {\mathbb E}_{\underline{h}\in [L_N]^k} \left\Vert {\mathbb E}_{n\in [N]}\, w_{N,\underline{h}}(n)\cdot \prod_{i=1}^\ell T^{[a_i(\underline{h},n)]}f_{N,{\underline{h}},i}\right\Vert_{L^2(\mu)}=0, \end{align} $$

where

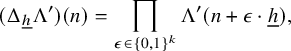

![]() $w_{N,{\underline {h}}}(n):=(\Delta _{\underline {h}}\Lambda ')(n)\cdot c_{N,{\underline {h}}}(n)$

,

$w_{N,{\underline {h}}}(n):=(\Delta _{\underline {h}}\Lambda ')(n)\cdot c_{N,{\underline {h}}}(n)$

,

![]() $ {\underline {h}}\in [L_N]^k, n\in [N], N\in {\mathbb N}$

.

$ {\underline {h}}\in [L_N]^k, n\in [N], N\in {\mathbb N}$

.

Remark. Our argument also works if $\Delta _{\underline {h}}\Lambda '(n)$ is replaced by other expressions involving $\Lambda '$: for example, when $k=0$, one can use the expression $\prod _{i=1}^m\Lambda '(n+c_i)$, where $c_1,\ldots , c_m$ are distinct integers.

If in Theorem 3.1, we take

![]() $k=0$

, then we get Theorem 2.4 using an argument that we describe next.

$k=0$

, then we get Theorem 2.4 using an argument that we describe next.

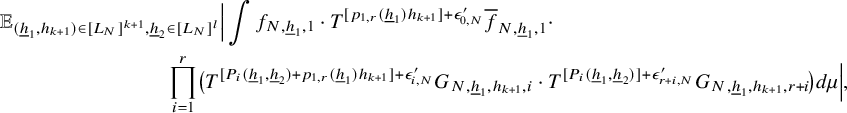

Proof of Theorem 2.4 assuming Theorem 3.1

Let $a_1,\ldots , a_\ell $ and $w_N(n)$ be as in Theorem 2.4. Since the assumptions of Theorem 2.4 are symmetric with respect to the sequences $a_1,\ldots , a_\ell $, it suffices to show that there exists $s\in {\mathbb N}$ such that if $\lvert \!|\!| f_1|\!|\!\rvert _s=0$, then equation (7) holds.

If

![]() $a_1$

has maximal fractional degree within the family

$a_1$

has maximal fractional degree within the family

![]() $a_1,\ldots a_\ell $

, then if we take

$a_1,\ldots a_\ell $

, then if we take

![]() $k=0$

and all functions to be independent of N in Theorem 3.1, we get that the conclusion of Theorem 2.4 holds. Otherwise, we can assume that

$k=0$

and all functions to be independent of N in Theorem 3.1, we get that the conclusion of Theorem 2.4 holds. Otherwise, we can assume that

![]() $a_{\ell }$

is the function with the highest fractional degree and, as a consequence,

$a_{\ell }$

is the function with the highest fractional degree and, as a consequence,

![]() $\text {f-deg}(a_1)<\text {f-deg}(a_\ell )$

. It suffices to show that

$\text {f-deg}(a_1)<\text {f-deg}(a_\ell )$

. It suffices to show that

$$ \begin{align*} \lim_{N\to\infty} {\mathbb E}_{n\in [N]}\, w_{N}(n)\cdot \int f_{N,0} \cdot \prod_{i=1}^\ell T^{[a_i(n)]}f_{i} \, d\mu=0, \end{align*} $$

$$ \begin{align*} \lim_{N\to\infty} {\mathbb E}_{n\in [N]}\, w_{N}(n)\cdot \int f_{N,0} \cdot \prod_{i=1}^\ell T^{[a_i(n)]}f_{i} \, d\mu=0, \end{align*} $$

where

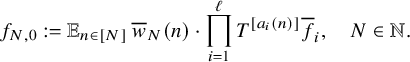

$$ \begin{align*} f_{N,0} := {\mathbb E}_{n\in [N]} \, \overline{w}_{N}(n)\cdot \prod_{i=1}^\ell T^{[a_i(n)]}\overline{f}_i, \quad N\in {\mathbb N}. \end{align*} $$

$$ \begin{align*} f_{N,0} := {\mathbb E}_{n\in [N]} \, \overline{w}_{N}(n)\cdot \prod_{i=1}^\ell T^{[a_i(n)]}\overline{f}_i, \quad N\in {\mathbb N}. \end{align*} $$

Note that since $f_1,\ldots , f_\ell $ and $c_N$ are $1$-bounded, we have

(the last identity follows from the prime number theorem, but we only need the much simpler upper bound) hence, we can assume that $f_{N,0}$ is $1$-bounded for every $N\in {\mathbb N}$.

After composing with

![]() $T^{-[a_{\ell }(n)]}$

, using the Cauchy-Schwarz inequality, and the identity

$T^{-[a_{\ell }(n)]}$

, using the Cauchy-Schwarz inequality, and the identity

![]() $[x]-[y]=[x-y]+e$

for some

$[x]-[y]=[x-y]+e$

for some

![]() $e\in \{0,1\}$

, we are reduced to showing that

$e\in \{0,1\}$

, we are reduced to showing that

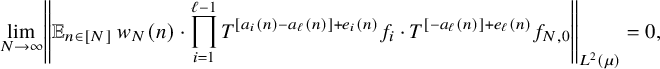

$$ \begin{align*} \lim_{N\to\infty} \left\Vert {\mathbb E}_{n\in [N]}\, w_{N}(n)\cdot \prod_{i=1}^{\ell-1} T^{[a_i(n)-a_\ell(n)]+e_i(n)}f_i\cdot T^{[-a_{\ell}(n)]+e_\ell(n)}f_{N,0}\right\Vert_{L^2(\mu)}=0, \end{align*} $$

$$ \begin{align*} \lim_{N\to\infty} \left\Vert {\mathbb E}_{n\in [N]}\, w_{N}(n)\cdot \prod_{i=1}^{\ell-1} T^{[a_i(n)-a_\ell(n)]+e_i(n)}f_i\cdot T^{[-a_{\ell}(n)]+e_\ell(n)}f_{N,0}\right\Vert_{L^2(\mu)}=0, \end{align*} $$

for some

![]() $e_1(n),\ldots , e_{\ell -1}(n)\in \{0,1\}$

,

$e_1(n),\ldots , e_{\ell -1}(n)\in \{0,1\}$

,

![]() $n\in {\mathbb N}$

. Next, we would like to replace the error sequences

$n\in {\mathbb N}$

. Next, we would like to replace the error sequences

![]() $e_1,\ldots , e_{\ell -1}$

with constant sequences. To this end, we use Lemma 3.6 for I a singleton,

$e_1,\ldots , e_{\ell -1}$

with constant sequences. To this end, we use Lemma 3.6 for I a singleton,

![]() $J:=[N]$

,

$J:=[N]$

,

![]() $X:=L^\infty (\mu )$

,

$X:=L^\infty (\mu )$

,

![]() $A_N(n_1,\ldots ,n_\ell ):=\prod _{i=1}^{\ell -1} T^{n_i}f_i\cdot T^{-n_\ell }f_{N,0}$

,

$A_N(n_1,\ldots ,n_\ell ):=\prod _{i=1}^{\ell -1} T^{n_i}f_i\cdot T^{-n_\ell }f_{N,0}$

,

![]() $n_1,\ldots , n_\ell \in {\mathbb Z}$

, and

$n_1,\ldots , n_\ell \in {\mathbb Z}$

, and

![]() $b_i:=[a_i-a_{\ell }]$

,

$b_i:=[a_i-a_{\ell }]$

,

![]() $i=1,\ldots , \ell -1$

,

$i=1,\ldots , \ell -1$

,

![]() $b_\ell := [-a_\ell ]$

. We get that it suffices to show that

$b_\ell := [-a_\ell ]$

. We get that it suffices to show that

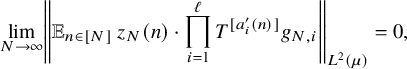

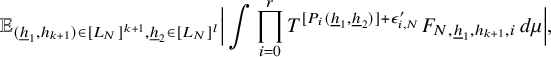

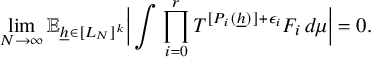

$$ \begin{align} \lim_{N\to\infty} \left\Vert {\mathbb E}_{n\in [N]}\, z_{N}(n)\cdot \prod_{i=1}^\ell T^{[a^{\prime}_i(n)]}g_{N,i}\right\Vert_{L^2(\mu)}=0, \end{align} $$

$$ \begin{align} \lim_{N\to\infty} \left\Vert {\mathbb E}_{n\in [N]}\, z_{N}(n)\cdot \prod_{i=1}^\ell T^{[a^{\prime}_i(n)]}g_{N,i}\right\Vert_{L^2(\mu)}=0, \end{align} $$

where

for some $1$-bounded sequence $(z_{N}(n))$, where $g_{N,i}:=T^{\epsilon _i}f_{i}$, $i=1,\ldots , \ell -1$, $g_{N,\ell }:=T^{\epsilon _\ell }f_{N,0}$, $N\in {\mathbb N}$, for some constants $\epsilon _1,\ldots , \epsilon _\ell \in \{0,1\}$. Note that the family $a^{\prime }_1,\ldots , a^{\prime }_\ell $ is nice, and $g_{N,1}=T^{\epsilon _1}f_1$, $N\in {\mathbb N}$, so Theorem 3.1 applies (for $k=0$ and all but one of the functions independent of N) and gives that there exists $s\in {\mathbb N}$ so that if $\lvert \!|\!| f_1|\!|\!\rvert _s=0$, then equation (9) holds. This completes the proof.

We will prove Theorem 3.1 in Sections 4 and 5 using a PET-induction technique. The first section covers the base case of the induction where all the iterates have sublinear growth, and the subsequent section contains the proof of the induction step. Before moving into the details, we gather some basic tools that will be used in the argument.

3.2 Feedback from number theory

The next statement is well known and can be proved using elementary sieve theory methods (see, for example, [Reference Halberstam and Richert15, Theorem 5.7] or [Reference Iwaniec and Kowalski18, Theorem 6.7]).

Theorem 3.2. Let ${\mathbb P}$ be the set of prime numbers. For all $k\in {\mathbb N}$, there exists $C_k>0$ such that for all distinct $h_1,\ldots , h_k\in {\mathbb N}$ and all $N\in {\mathbb N}$, we have

where

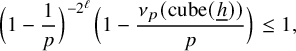

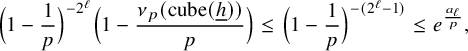

$$ \begin{align} \mathfrak{G}_k(h_1,\ldots, h_k):=\prod_{p\in {\mathbb P}} \Big(1-\frac{1}{p}\Big)^{-k}\Big(1-\frac{\nu_p(h_1,\ldots, h_k)}{p}\Big) \end{align} $$

$$ \begin{align} \mathfrak{G}_k(h_1,\ldots, h_k):=\prod_{p\in {\mathbb P}} \Big(1-\frac{1}{p}\Big)^{-k}\Big(1-\frac{\nu_p(h_1,\ldots, h_k)}{p}\Big) \end{align} $$

and

![]() $\nu _p(h_1,\ldots , h_k)$

denotes the number of congruence classes

$\nu _p(h_1,\ldots , h_k)$

denotes the number of congruence classes

![]() $\! \! \mod {p}$

that are occupied by

$\! \! \mod {p}$

that are occupied by

![]() $h_1,\ldots , h_k$

.

$h_1,\ldots , h_k$

.
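To get a feeling for the singular series in equation (10), one can evaluate $\nu _p$ exactly and truncate the infinite product at a finite prime bound. The sketch below is an approximation under stated assumptions: the cutoff `prime_bound=1000` and the function names are choices made for this illustration, and the truncated product only approximates $\mathfrak {G}_k$.

```python
def primes_below(bound):
    """Return the primes p < bound using a simple sieve."""
    sieve = [True] * max(bound, 2)
    sieve[0] = sieve[1] = False
    for p in range(2, int(bound ** 0.5) + 1):
        if sieve[p]:
            for m in range(p * p, bound, p):
                sieve[m] = False
    return [n for n in range(bound) if sieve[n]]

def nu_p(p, hs):
    """Number of congruence classes mod p occupied by the entries of hs."""
    return len({h % p for h in hs})

def truncated_singular_series(hs, prime_bound=1000):
    """Product over primes p < prime_bound of (1 - 1/p)^(-k) * (1 - nu_p(hs)/p)."""
    k = len(hs)
    value = 1.0
    for p in primes_below(prime_bound):
        value *= (1 - 1 / p) ** (-k) * (1 - nu_p(p, hs) / p)
    return value

print(truncated_singular_series((5,)))    # k = 1: every factor equals 1
print(truncated_singular_series((0, 2)))  # twin-prime pattern
print(truncated_singular_series((0, 1)))  # both residues mod 2 are occupied
```

For $k=1$ every factor is $1$, in agreement with $\mathfrak {G}_1=1$. For the pattern $(0,1)$ the factor at $p=2$ vanishes, which reflects that $n$ and $n+1$ cannot both be prime for $n>2$.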

We remark that although $\mathfrak {G}_1=1$, the expression $\mathfrak {G}_k(h_1,\ldots , h_k)$ is not bounded in $h_1,\ldots , h_k$ if $k\geq 2$, and this causes some problems for us. Asymptotics for averages of powers of $\mathfrak {G}_k(h_1,\ldots , h_k)$ are given in [Reference Gallagher12] and [Reference Kowalski21, Theorem 1.1] using elementary but somewhat elaborate arguments. These results are not immediately applicable for our purposes, since we need to understand the behavior of $\mathfrak {G}_k$ on thin subsets of ${\mathbb Z}^k$: for instance, when $k=4$, we need to understand the averages of $\mathfrak {G}_4(0,h_1,h_2,h_1+h_2)$. Luckily, we only need to get upper bounds for these averages, and this can be done rather easily, as we will see shortly (a similar argument was used in [Reference Tao and Ziegler28] to handle averages over r of $\mathfrak {G}_k(0,r,2r,\ldots , (k-1)r)$).

Definition. Let $\ell \in {\mathbb N}$, and for ${\underline {h}}\in {\mathbb N}^\ell $, let $\text {Cube}({\underline {h}})\in {\mathbb N}^{2^\ell }$ be defined by

where $\underline \epsilon \cdot {\underline {h}}$ is the inner product of $\underline \epsilon $ and ${\underline {h}}$.

If S is a subset of ${\mathbb N}^\ell $, we define

For instance, when $\ell =3$, we have

and $([N]^3)^*$ consists of all triples $(h_1,h_2,h_3)\in [N]^3$ with distinct coordinates that in addition satisfy $h_i\neq h_j+h_k$ for all distinct $i,j,k\in \{1,2,3\}$. Since the complement of $([N]^\ell )^*$ in $[N]^\ell $ is contained in the union of the zero sets of finitely many (at most $3^\ell $) linear forms, we get that there exists $K_\ell>0$ such that

for every $N\in {\mathbb N}$.
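The description of $([N]^3)^*$ can be checked by brute force. The sketch below rests on two assumptions made for this illustration: that $\text {Cube}({\underline {h}})$ lists the inner products $\underline \epsilon \cdot {\underline {h}}$ over $\underline \epsilon \in \{0,1\}^\ell $, and that $S^*$ keeps the elements of S whose cube has pairwise distinct coordinates. Both are consistent with the description of $([N]^3)^*$ given above; the function names are hypothetical.

```python
from itertools import permutations, product

def cube(hs):
    """Inner products eps . hs over all eps in {0,1}^l (assumed form of Cube(h))."""
    return tuple(
        sum(e * h for e, h in zip(eps, hs))
        for eps in product((0, 1), repeat=len(hs))
    )

def starred(N, l):
    """Tuples in [N]^l whose cube has pairwise distinct coordinates (assumed form of ([N]^l)^*)."""
    return [
        hs for hs in product(range(1, N + 1), repeat=l)
        if len(set(cube(hs))) == 2 ** l
    ]

def explicit_triples(N):
    """Triples with distinct coordinates and h_i != h_j + h_k for distinct i, j, k."""
    return [
        h for h in product(range(1, N + 1), repeat=3)
        if len(set(h)) == 3
        and all(h[i] != h[j] + h[k] for i, j, k in permutations(range(3)))
    ]

N = 6
print(sorted(cube((1, 2, 4))))
print(set(starred(N, 3)) == set(explicit_triples(N)))
print(N ** 3 - len(starred(N, 3)))  # size of the complement in [N]^3
```

The last line prints the size of the complement, which is the quantity the text bounds by a constant multiple of a lower power of N.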

Proposition 3.3. For every $\ell \in {\mathbb N}$, there exists $C_\ell>0$ such that

for all $N\in {\mathbb N}$, where $\mathfrak {G}_{2^\ell }(\text {cube}({\underline {h}}))$ is as in equation (10).

Remark. If we use kth powers instead of squares, we get similar upper bounds (which also depend on k), but we will not need this.

Proof. In the following argument, whenever we write p, we assume that p is a prime number.

Let

![]() ${\underline {h}}\in [N]^\ell $

. Note that if

${\underline {h}}\in [N]^\ell $

. Note that if

![]() $\nu _p(\text {cube}({\underline {h}}))=2^\ell $

, then

$\nu _p(\text {cube}({\underline {h}}))=2^\ell $

, then

$$ \begin{align*} \Big(1-\frac{1}{p}\Big)^{-2^\ell}\Big(1-\frac{\nu_p(\text{cube}({\underline{h}}))}{p}\Big)\leq 1, \end{align*} $$

$$ \begin{align*} \Big(1-\frac{1}{p}\Big)^{-2^\ell}\Big(1-\frac{\nu_p(\text{cube}({\underline{h}}))}{p}\Big)\leq 1, \end{align*} $$

and if

![]() $\nu _p(\text {cube}({\underline {h}}))<2^\ell $

, then for

$\nu _p(\text {cube}({\underline {h}}))<2^\ell $

, then for

![]() $a_\ell :=2^{\ell +1}-2$

, we have

$a_\ell :=2^{\ell +1}-2$

, we have

$$ \begin{align*} \Big(1-\frac{1}{p}\Big)^{-2^\ell}\Big(1-\frac{\nu_p(\text{cube}({\underline{h}}))}{p}\Big)\leq \Big(1-\frac{1}{p}\Big)^{-(2^\ell-1)}\leq e^{\frac{a_\ell}{p}}, \end{align*} $$

$$ \begin{align*} \Big(1-\frac{1}{p}\Big)^{-2^\ell}\Big(1-\frac{\nu_p(\text{cube}({\underline{h}}))}{p}\Big)\leq \Big(1-\frac{1}{p}\Big)^{-(2^\ell-1)}\leq e^{\frac{a_\ell}{p}}, \end{align*} $$

where we use that

![]() $\frac {1}{1-x}\leq e^{2x}$

for

$\frac {1}{1-x}\leq e^{2x}$

for

![]() $x\in [0,\frac {1}{2}]$

. Note also that if

$x\in [0,\frac {1}{2}]$

. Note also that if

![]() $\nu _p(\text {cube}({\underline {h}}))<2^\ell $

, then there exist distinct

$\nu _p(\text {cube}({\underline {h}}))<2^\ell $

, then there exist distinct

![]() $\underline \epsilon ,\underline \epsilon '\in \{0,1\}^{\ell }$

such that

$\underline \epsilon ,\underline \epsilon '\in \{0,1\}^{\ell }$

such that

![]() $p|(\underline \epsilon -\underline \epsilon ')\cdot {\underline {h}}$

, in which case we have that

$p|(\underline \epsilon -\underline \epsilon ')\cdot {\underline {h}}$

, in which case we have that

![]() $p\in \mathcal {P}({\underline {h}})$

, where

$p\in \mathcal {P}({\underline {h}})$

, where

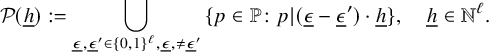

$$ \begin{align*} \mathcal{P}({\underline{h}}):=\bigcup_{\underline\epsilon,\underline\epsilon' \in \{0,1\}^\ell, \underline\epsilon,\neq \underline\epsilon' }\, \{p\in {\mathbb P} \colon p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}\}, \quad {\underline{h}}\in {\mathbb N}^\ell. \end{align*} $$

$$ \begin{align*} \mathcal{P}({\underline{h}}):=\bigcup_{\underline\epsilon,\underline\epsilon' \in \{0,1\}^\ell, \underline\epsilon,\neq \underline\epsilon' }\, \{p\in {\mathbb P} \colon p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}\}, \quad {\underline{h}}\in {\mathbb N}^\ell. \end{align*} $$

We deduce from the above facts and equation (10) that

By [Reference Tao and Ziegler27, Lemma E.1], we have for some

![]() $b_\ell ,c_\ell>0$

that

$b_\ell ,c_\ell>0$

that

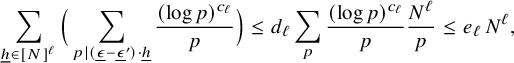

$$ \begin{align} e^{a_\ell \sum_{p\in \mathcal{P}({\underline{h}})}\frac{1}{p}} \leq b_\ell \sum_{p\in \mathcal{P}({\underline{h}})}\frac{(\log{p})^{c_\ell}}{p}= b_\ell \sum_{\underline\epsilon,\underline\epsilon' \in \{0,1\}^\ell, \underline\epsilon\neq \underline\epsilon'}\Big(\sum_{ p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}}\frac{(\log{p})^{c_\ell}}{p}\Big). \end{align} $$

$$ \begin{align} e^{a_\ell \sum_{p\in \mathcal{P}({\underline{h}})}\frac{1}{p}} \leq b_\ell \sum_{p\in \mathcal{P}({\underline{h}})}\frac{(\log{p})^{c_\ell}}{p}= b_\ell \sum_{\underline\epsilon,\underline\epsilon' \in \{0,1\}^\ell, \underline\epsilon\neq \underline\epsilon'}\Big(\sum_{ p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}}\frac{(\log{p})^{c_\ell}}{p}\Big). \end{align} $$

Moreover, we get for some

![]() $d_\ell ,e_\ell>0$

that

$d_\ell ,e_\ell>0$

that

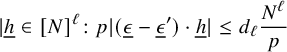

$$ \begin{align} \sum_{{\underline{h}}\in [N]^\ell}\Big(\sum_{ p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}}\frac{(\log{p})^{c_\ell}}{p}\Big)\leq d_\ell \sum_{ p}\frac{(\log{p})^{c_\ell}}{p} \frac{N^\ell}{p}\leq e_\ell\, N^\ell, \end{align} $$

$$ \begin{align} \sum_{{\underline{h}}\in [N]^\ell}\Big(\sum_{ p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}}\frac{(\log{p})^{c_\ell}}{p}\Big)\leq d_\ell \sum_{ p}\frac{(\log{p})^{c_\ell}}{p} \frac{N^\ell}{p}\leq e_\ell\, N^\ell, \end{align} $$

for all $N\in {\mathbb N}$, where to get the first estimate, we used the fact that for some $d_\ell>0$, we have

$$ \begin{align*} |\{{\underline{h}}\in[N]^\ell\colon p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}\}|\leq d_\ell \frac{N^\ell}{p} \end{align*} $$

for all $N\in {\mathbb N}$, and to get the second estimate, we used that $\sum _{ p}\frac {(\log {p})^{c_\ell }}{p^2}<\infty $.
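The two estimates just described can be spelled out in one chain. This is a sketch that takes the counting bound above for granted; the sums over $p$ range over primes, and the explicit value of $e_\ell $ below is one admissible choice rather than the one fixed in the text.

```latex
\begin{align*}
\sum_{{\underline{h}}\in [N]^\ell}\ \sum_{p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}}
  \frac{(\log{p})^{c_\ell}}{p}
&=\sum_{p}\frac{(\log{p})^{c_\ell}}{p}\,
  \big|\{{\underline{h}}\in[N]^\ell\colon p|(\underline\epsilon-\underline\epsilon')\cdot {\underline{h}}\}\big|\\
&\leq d_\ell\, N^\ell \sum_{p}\frac{(\log{p})^{c_\ell}}{p^2}
 = e_\ell\, N^\ell,
 \qquad e_\ell:=d_\ell \sum_{p}\frac{(\log{p})^{c_\ell}}{p^2}.
\end{align*}
```

The first line interchanges the order of summation of non-negative terms, and the series defining $e_\ell $ converges because $(\log p)^{c_\ell }\ll _\ell p^{1/2}$ and $\sum _p p^{-3/2}<\infty $.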

If we take squares in equation (12), sum over all ${\underline {h}}\in [N]^\ell $ and then use equations (13) and (14), we get the asserted estimate.

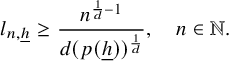

From this we deduce the following estimate that is a crucial ingredient used in the proof of Theorem 2.4:

Corollary 3.4. Let $\ell \in {\mathbb N}$. Then for every $A\geq 1$, there exist $C_{A,\ell } ({\underline {h}})>0$, ${\underline {h}}\in {\mathbb N}^\ell $ and $D_{A,\ell }>0$ such that

(i) for all $N\in {\mathbb N}$, ${\underline {h}}=(h_1,\ldots ,h_\ell )\in ({\mathbb N}^\ell )^*$, $c\in {\mathbb N}$ such that $c+ h_1+\cdots +h_\ell \leq N^A$, we have

$$ \begin{align*} {\mathbb E}_{n\in [N]}\, (\Delta_{\underline{h}} \Lambda')(n+c)\leq C_{A,\ell}({\underline{h}}); \end{align*} $$

(ii) ${\mathbb E}_{{\underline {h}}\in [H]^\ell } (C_{A,\ell }({\underline {h}}))^2\leq D_{A,\ell }$ for every $H\in {\mathbb N}$.

Remark. We will use this result in the proof of Lemma 4.1 for values of c that are larger than N and smaller than $N^A$ for some $A>0$ (the choice of A depends on the situation).

Proof. Since $\Lambda '$ is supported on primes and $c+h_1+\cdots +h_\ell \leq N^A$, we have that

where $\underline {n+c}$ is a vector with $2^\ell $ coordinates, all equal to $n+c$. Note that for ${\underline {h}}\in ({\mathbb N}^\ell )^*$, we can apply Theorem 3.2, and we get that there exists $D_{A,\ell }>0$ such that for every $N\in {\mathbb N}$, the last expression is bounded by

If we let $C_{A,\ell }({\underline {h}}):=D_{A,\ell }\, \mathfrak {G}_{2^\ell }(\text {cube}({\underline {h}}))$ for ${\underline {h}}\in {\mathbb N}^\ell $ and use Proposition 3.3, we get that properties $(i)$ and $(ii)$ hold.
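To make the last step explicit, here is a sketch of how property $(ii)$ follows from the choice of $C_{A,\ell }({\underline {h}})$. It assumes that Proposition 3.3 provides a bound of the form ${\mathbb E}_{{\underline {h}}\in [H]^\ell }\big (\mathfrak {G}_{2^\ell }(\text {cube}({\underline {h}}))\big )^2\leq K_\ell $ for every $H\in {\mathbb N}$; the symbol $K_\ell $ is our own label for that constant, since the precise statement lies outside this passage.

```latex
\begin{align*}
{\mathbb E}_{{\underline{h}}\in [H]^\ell}\, (C_{A,\ell}({\underline{h}}))^2
= D_{A,\ell}^2\ {\mathbb E}_{{\underline{h}}\in [H]^\ell}\,
  \big(\mathfrak{G}_{2^\ell}(\text{cube}({\underline{h}}))\big)^2
\leq D_{A,\ell}^2\, K_\ell, \qquad H\in {\mathbb N}.
\end{align*}
```

Under this assumption the right-hand side does not depend on $H$, so the bound in $(ii)$ holds with the constant $D_{A,\ell }^2\, K_\ell $, which can then be renamed in the statement because the corollary only asserts the existence of some such constant.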

3.3 Two elementary lemmas

We will use the following inner product space variant of a classical elementary estimate of van der Corput (see [Reference Kuipers and Niederreiter22, Lemma 3.1]):

Lemma 3.5. Let

![]() $N\in {\mathbb N}$

and

$N\in {\mathbb N}$

and

![]() $(u(n))_{n\in [N]}$

be vectors in some inner product space. Then for all

$(u(n))_{n\in [N]}$

be vectors in some inner product space. Then for all

![]() $H\in [N]$

, we have

$H\in [N]$

, we have

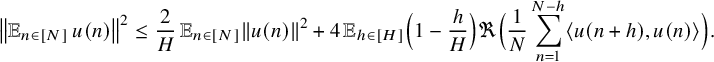

$$ \begin{align*} \left\Vert {\mathbb E}_{n\in [N]}\, u(n)\right\Vert^2\leq \frac{2}{H}\, {\mathbb E}_{n\in [N]}\left\Vert u(n)\right\Vert^2 + 4\, {\mathbb E}_{h\in[H]}\Big(1-\frac{h}{H}\Big)\Re\Big(\frac{1}{N}\sum_{n=1}^{N-h} \langle u(n+h),u(n)\rangle \Big). \end{align*} $$

$$ \begin{align*} \left\Vert {\mathbb E}_{n\in [N]}\, u(n)\right\Vert^2\leq \frac{2}{H}\, {\mathbb E}_{n\in [N]}\left\Vert u(n)\right\Vert^2 + 4\, {\mathbb E}_{h\in[H]}\Big(1-\frac{h}{H}\Big)\Re\Big(\frac{1}{N}\sum_{n=1}^{N-h} \langle u(n+h),u(n)\rangle \Big). \end{align*} $$

We will apply the previous lemma in the following two cases, depending on the range of the shift parameter h (the first case will be used when the relevant sequences are not necessarily bounded).

1. If $M_N:=1+\max _{n\in [N]}\left \Vert u_N(n)\right \Vert ^2$, $N\in {\mathbb N}$ and $L_N$ are such that $M_N\prec L_N\prec \frac {N}{M_N}$, then for $H:=L_N$, we have

$$ \begin{align} \left\Vert {\mathbb E}_{n\in [N]} \, u_N(n)\right\Vert^2\leq 4\, {\mathbb E}_{h\in [L_N]} \Big|{\mathbb E}_{n\in[N]}\langle u_N(n+h), u_N(n)\rangle \Big| +o_N(1), \tag{15} \end{align} $$

where for every fixed $N\in {\mathbb N}$, the sequence $(u_N(n))$ is either defined on the larger interval $[N+L_N]$ or extended to be zero outside the interval $[N]$. In all the cases where we will apply this estimate, we have $M_N\ll (\log N)^A$ for some $A>0$, and we take $L_N=[e^{\sqrt {\log {N}} }]$, $N\in {\mathbb N}$.