Unresolved/disorganized attachment (U) is a classification of the Adult Attachment Interview (AAI; George et al., 1984, 1985, 1996). The coding system for assessing unresolved states of mind was initially developed by Main and colleagues in the late 1980s (Main et al., 1985; Main & Goldwyn, 1991). Adults with an unresolved classification (often referred to as having an “unresolved state of mind”) are believed not to have adequately processed experiences of loss and abuse, leading to disorganized or disoriented reasoning or discourse when these events are discussed in the AAI (Main & Hesse, 1990). Despite widespread use of the AAI coding system over decades (Van IJzendoorn, 1995; Verhage et al., 2016), the individual indicators used to classify unresolved states of mind (i.e., lapses in the monitoring of reasoning, discourse, and behavior) have not been psychometrically validated in independent study samples. This raises the question of whether attachment researchers have been measuring the same construct across studies. In addition, the indicators of unresolved states of mind may have partially captured discourse idiosyncratic to interviews in the Berkeley study and might not generalize to other samples (i.e., they may be “overfitted” to the initial data). Unresolved states of mind have been found to predict both parenting behavior and disorganized attachment relationships in the next generation (Van IJzendoorn, 1995; Verhage et al., 2016). This suggests that the coding scale contains valid indicators of unresolved states of mind, but it is not known which indicators are psychometrically valid, or how much heterogeneity there might be.

As argued by Scheel et al. (2020), sufficiently defined concepts and valid measurements are needed before testing novel, theoretically derived hypotheses. To strengthen these elements of the “hypothesis derivation chain,” nonconfirmatory research such as descriptive and psychometric work is required. To this end, the current investigation explored how the construct of adults’ unresolved states of mind regarding experiences of loss and abuse has been shaped by the specific indicators described by Main et al. (2003), using multiple analytic approaches and by addressing both the construct and predictive validity of unresolved states of mind.

Unresolved states of mind regarding loss and abuse

When studying relations between parents’ narratives about their childhood experiences and the attachment relationships with their infants, Main and Hesse (1990) discovered that some parents who had experienced loss, such as the death of a parent before adulthood, showed signs of “lack of resolution of mourning.” This was indicated by unusual speech during the discussion of loss, akin to dissociative responses to trauma in the clinical domain. Based on an initial development sample of 88 AAIs (Duschinsky, 2020), Main et al. (1991/1994) developed a scale for rating individuals’ unresolved states of mind with regard to experiences of loss. Main (1991) also theorized that experiences of abuse perpetrated by attachment figures may have disorganizing effects on the attachment behavioral system and therefore developed a scale for rating unresolved states of mind regarding childhood abuse that mirrored the unresolved loss scale. These two rating scales are used by coders to classify adults with an unresolved attachment state of mind based on their AAI discourse.

The predictive validity of the unresolved classification has been shown in many subsequent studies. The first and most fundamental source of support for the classification was its association with children’s behavior during the Strange Situation, the classic laboratory-based procedure for assessing parent–child attachment (Ainsworth et al., 1978). Main and Hesse (1990) theorized that parents with an unresolved state of mind would show forms of frightened, frightening, or dissociated behavior, which alarm their infants. These types of unpredictable experiences of fear in the context of the parent–child attachment relationship were theorized to lead to children’s disorganized attachment behavior in the Strange Situation. Specifically, behaviors such as freezing, stilling, or expressions of apprehension in the presence of the attachment figure are postulated to reflect the infant’s internal conflict of whether to approach or avoid the parent (Main & Solomon, 1990). Meta-analytic findings have supported these predictions, with effect sizes ranging from .21 to .34 for the associations between parents’ unresolved states of mind, parents’ frightening behavior, and children’s disorganized attachment (Madigan et al., 2006; Schuengel et al., 1999).

The AAI coding system distinguishes three kinds of linguistic indicators of unresolved states of mind: (i) lapses in the monitoring of reasoning, such as irrational beliefs that a loved one who died long ago is still alive or denying the occurrence or consequences of childhood abuse; (ii) lapses in the monitoring of discourse, such as a sudden inability to finish sentences or changing to an odd style of speech during discussion of loss or abuse; and (iii) lapses in the monitoring of behavior, indicating past or ongoing extreme behavioral responses to loss or abuse, such as multiple suicide attempts. AAI coders consider the severity and frequency of these indicators to assign ratings on two 9-point scales: unresolved loss and unresolved abuse. Coders are instructed to use the indicators of these scales interchangeably – indicators from the unresolved loss scale can be used to code unresolved abuse, and vice versa (Main et al., 2003, p. 131).

If an interview receives a high unresolved score (≥5) on the unresolved loss or the unresolved abuse scale (or both), the unresolved classification is assigned. Interviews can be classified as “unresolved” based on unresolved loss, unresolved abuse, or both. In low-risk community samples, around 20% of interviews are classified as unresolved (Verhage et al., 2016). In clinical samples and samples considered at-risk (e.g., those with a low socioeconomic status background), the proportion of unresolved classifications is higher (around 32% of at-risk samples and 43% of clinical samples; Bakermans-Kranenburg & Van IJzendoorn, 2009).
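The threshold rule in this paragraph can be illustrated with a minimal Python sketch. The function name `classify_unresolved` and the constant `U_CUTOFF` are hypothetical and do not come from the AAI manual; the sketch treats any score of 5 or higher as unresolved and does not model coder judgment.

```python
from typing import Optional

U_CUTOFF = 5.0  # assumed cutoff, taken from the ">= 5" rule stated in the text


def classify_unresolved(loss_score: float, abuse_score: float) -> Optional[str]:
    """Return the basis of an unresolved classification, or None if not unresolved."""
    loss_u = loss_score >= U_CUTOFF
    abuse_u = abuse_score >= U_CUTOFF
    if loss_u and abuse_u:
        return "loss and abuse"
    if loss_u:
        return "loss"
    if abuse_u:
        return "abuse"
    return None
```

For example, `classify_unresolved(6, 1)` returns `"loss"`, whereas scores below the cutoff on both scales return `None`.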

The indicators that are used to assess unresolved states of mind (Main et al., 2003) have not changed since the early 1990s and are still widely used by attachment researchers today. However, some assumptions underlying the construct of an unresolved state of mind have not been examined empirically. Theoretical considerations, rather than psychometric evidence, have guided the construction of the unresolved loss and abuse coding scales and the rules for coding unresolved states of mind, including the selection and interpretation of indicators of unresolved loss/abuse. Some lapses in the monitoring of reasoning, such as feelings of being causal in a death where no material cause was present, are generally believed to be stronger indicators of an unresolved state of mind than lapses in the monitoring of discourse, such as sudden moves away from discussing experiences of loss or abuse (Main et al., 2003). Using the examples presented in the coding manual as guidelines, it is up to the coder to determine the relative strength of individual indicators when assigning scores to each indicator found in the transcript on the unresolved loss and unresolved abuse scales. The coding manual presents examples of indicators that are thought to suggest immediate placement in the unresolved category, with relative weighting suggestions of 6–9 on the 9-point scales. Thus, coders may decide to classify an interview as unresolved based on the presence of a single indicator. If the coder assigns a rating of 5 on either or both of the unresolved loss/abuse scales, based on the presence and relative strength of one or multiple indicators, it is up to the coder to determine if this leads to placement in the U category (Main et al., 2003).

The extent to which coding practices using the AAI manual can be adequately described using psychometric models remains unknown. Given that most later studies on the intergenerational transmission of attachment patterns have used even smaller sample sizes than the original study (Verhage et al., 2016), attachment researchers have not been able to examine the occurrence and the relative strength of the individual indicators of unresolved loss/abuse. However, with sufficiently large datasets, it is possible to explore whether some indicators lead to higher ratings on the unresolved loss and abuse scales, in ways suggested by the coding system. In addition, large datasets make it possible to explore potential patterns of indicators underlying the coding scales, for example, whether there are certain combinations of indicators that often co-occur in interviews. Such findings would contribute to theoretical parsimony of the concept of unresolved states of mind and aid in efforts to produce a scalable version of the AAI (Caron et al., 2018). Like other attachment measures such as the Strange Situation, coding the AAI is labor-intensive and requires extensive training and practice. Limiting the number of indicators of unresolved loss and abuse would decrease coders’ time investment and improve feasibility of coding and possibly also interrater reliability. As such, the first aim of this study was to investigate which patterns of indicators differentiate interviewees with and without unresolved loss/abuse. The second aim was to search for a psychometric model that may underlie the unresolved state of mind construct, by investigating the contribution of each indicator to overall ratings and classifications of unresolved states of mind.

Predictive validity of unresolved states of mind for infant disorganized attachment

A broader approach to exploring the meaning of unresolved loss/abuse entails considering the degree to which adults’ unresolved states of mind predict infant disorganized attachment. This intergenerational association is especially important from a predictive validity standpoint because this was how the unresolved classification was originally developed. Main and Hesse’s (1990) model of the transmission of unresolved states of mind to infant disorganized attachment has been generally supported by a large body of research (Van IJzendoorn, 1995; Verhage et al., 2016). This research has established that the unresolved category as a whole is associated with infant disorganized attachment. However, as previously noted, the unresolved state of mind classification can be based on anomalous reasoning, discourse, or behavior with regard to experiences of loss or experiences of childhood abuse (Main et al., 2003), and the structure of this rich set of indicators has not yet been explored. This gap exists despite calls, including from Main and Hesse themselves, to examine the implications of such different manifestations of unresolved loss/abuse in parents’ narratives (Hesse & Main, 2006). For example, Lyons-Ruth et al. (2005) suggested that the principles for coding unresolved states of mind may be “more sensitive to processes involved in integrating loss and less sensitive to processes involved in integrating abuse” (p. 18), based on the fact that the guidelines for coding unresolved states of mind were initially developed based on parents’ narratives about experiences of loss. Disaggregating lapses in the monitoring of reasoning, discourse, and behavior may reveal additional clues regarding how parents’ unresolved loss/abuse contributes to infant disorganization and may suggest alternative ways in which such lapses may be usefully aggregated. In addition to the aim of searching for a psychometric model underlying adults’ unresolved classification, the third aim of this study was to examine the predictive validity of this model by examining its association with infant disorganized attachment.

Other kinds of unresolved trauma

Adverse experiences that do not directly involve individuals’ childhood attachment figures, such as sexual abuse by noncaregivers, witnessing violence, or surviving a serious car accident, may also have significant implications for psychological functioning and parenting behavior. For example, studies have shown associations between unresolved states of mind regarding miscarriage and stillbirth and infant disorganized attachment (Bakermans-Kranenburg et al., 1999; Hughes et al., 2001, 2004). These experiences might be considered as potential traumas for the parent. However, beyond these studies, research has not yet addressed the question of whether traumatic experiences not directly involving attachment figures are related to infant disorganized attachment. Madigan et al. (2012) found that the majority of high-risk, pregnant adolescents who reported experiences of sexual abuse during the AAI were classified as having an unresolved state of mind, and that the majority of perpetrators were nonattachment figures. The authors therefore emphasized the importance of exploring the impact of individuals’ discourse regarding abuse perpetrated by attachment figures versus nonattachment figures for states of mind regarding attachment.

Since the early editions of the AAI coding manual (e.g., Main & Goldwyn, 1991), coders have been instructed to record the nature of traumatic events perpetrated by nonattachment figures and apply the principles of the unresolved loss and abuse scales to identify any unresolved/disorganized responses. Yet, in the current version of the manual (Main et al., 2003), ratings of “other trauma” are not taken into account when determining placement into the unresolved category; instead, coders are instructed to score and include any instances of unresolved “other trauma” on the coding sheet in parentheses as a provisional score/classification. As a first step in exploring the predictive significance of adults’ discourse regarding other potentially traumatic experiences and parent–child attachment, the fourth aim of this study was to examine whether coders’ ratings of unresolved other trauma were associated with infant disorganized attachment, over and above ratings of unresolved loss and unresolved abuse.

The current study

In summary, the following research questions were addressed in this study:

1. Which patterns of indicators differentiate interviewees with or without unresolved loss/abuse?
2. What psychometric model based on the indicators of unresolved loss/abuse may underlie ratings and classifications of unresolved states of mind?
3. What is the relation of this model to infant disorganized attachment?
4. What is the association between ratings of unresolved “other trauma” and infant disorganized attachment, over and above ratings of unresolved loss/abuse?

The research questions and study protocol were preregistered on Open Science Framework (https://osf.io/w65fj/).

Method

Study identification and selection

This study was conducted using data gathered by the Collaboration on Attachment Transmission Synthesis (CATS) and built on the first individual participant data meta-analysis on intergenerational transmission of attachment by Verhage et al. (2018). For the first individual participant data meta-analysis, principal investigators from 88 studies on attachment transmission were invited to participate in CATS by sharing individual participant data. These studies had used the AAI to assess adults’ attachment states of mind and included observational measures of parent–child attachment. In total, the CATS dataset contains AAI data (coded using Main and colleagues’ system) and parent–child attachment data from 4,521 dyads from 61 studies. For an overview of the study identification and selection process, see Verhage et al. (2018).

Data items

In the original CATS dataset (Verhage et al., 2018), information about unresolved loss/abuse in the AAI was limited to the unresolved classification and scores on the unresolved loss and unresolved abuse rating scales. For this study, we requested additional information about the specific indicators of unresolved loss/abuse. Principal investigators in CATS were invited to share the following information per applicable loss and abuse experience reported in the AAI: relationship with the deceased, type of abuse (e.g., physical, sexual), relationship with the abuse perpetrator, highest unresolved score for the event, and the indicators of unresolved loss/abuse that were marked for the event. This study included all 16 possible indicators of unresolved loss and all 16 possible indicators of unresolved abuse listed in the AAI coding system (Main et al., 2003). The requested data were shared by either providing AAI scoring forms or marked interview transcripts or by entering the information into an Excel spreadsheet, Word document, or SPSS template created for this study. All obtained data were checked for anomalies. In cases where data input errors were suspected, the principal investigators of the original studies were contacted for clarification.

Indicators of unresolved loss/abuse were dichotomized according to their presence in the interview (0 = lapse not present, 1 = lapse present) as the availability of coder ratings of the individual indicators was inconsistent. Participants who did not have an applicable loss or abuse experience were assigned scores of 0 for all the specific indicators of unresolved loss or abuse. In addition, the current study used the following data from the original CATS dataset: scale scores for unresolved loss, unresolved abuse, and unresolved other trauma (continuous; range 1–9); unresolved classification (dichotomous; 0 = not unresolved, 1 = unresolved); reported applicable loss (dichotomous; 0 = no loss reported, 1 = loss reported); reported applicable abuse (dichotomous; 0 = no abuse reported, 1 = abuse reported); infant disorganized attachment rating as measured in the Strange Situation (continuous; range 1–9); infant disorganized attachment classification (dichotomous; 0 = not disorganized, 1 = disorganized); and risk background of the sample (dichotomous; 0 = normative, 1 = at-risk). Consistent with previous research, participants without applicable loss, abuse, or other trauma experiences were given a score of 1 on the corresponding coding scales (e.g., Haltigan et al., 2014; Raby et al., 2017; Roisman et al., 2007).
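The coding conventions in this paragraph can be sketched as follows. This is an illustrative Python sketch, not the authors' data-preparation script; the function `code_event_data` and the placeholder indicator names used in the example are hypothetical.

```python
from typing import Dict, Iterable, Optional, Sequence, Tuple


def code_event_data(
    has_event: bool,
    marked: Iterable[str],
    indicator_names: Sequence[str],
    scale_score: Optional[float] = None,
) -> Tuple[Dict[str, int], Optional[float]]:
    """Dichotomize indicators and apply the conventions for participants without an event."""
    if not has_event:
        # No applicable loss/abuse: every indicator is 0 and the scale score is 1.
        return {name: 0 for name in indicator_names}, 1.0
    marked_set = set(marked)
    # 1 = lapse present in the interview, 0 = lapse not present.
    flags = {name: int(name in marked_set) for name in indicator_names}
    return flags, scale_score
```

With placeholder names, `code_event_data(True, ["indicator_b"], ["indicator_a", "indicator_b"], 5.5)` marks only `indicator_b` as present and keeps the coder's scale score.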

We requested information about unresolved loss/abuse from 58 studies in CATS. This information was available from 1,009 parent–child dyads from 13 samples. Of these samples, 11 had used the Strange Situation to assess the quality of the parent–child attachment relationship and Main and Solomon’s (1990) coding system to measure infant disorganized attachment (n = 930 parent–child dyads). Interrater reliability of the four-way AAI classifications ranged from κ = 0.57 to κ = 0.90, and interrater reliability of the four-way Strange Situation classifications ranged from κ = 0.63 to κ = 0.87. An overview of the included studies, sample sizes, measures, interrater reliability, and distributions of classifications is reported in Supplement 1. Interrater reliability scores of the unresolved loss and abuse scales were available from three and two studies, respectively. None of the studies reported reliability information for the specific indicators marked for unresolved loss/abuse.

For most of the studies in the original CATS dataset that could not provide the requested information about indicators of unresolved loss/abuse, the investigators no longer had access to the data (k = 27). Other reasons for not participating were that authors did not respond (k = 10), experienced time constraints (k = 3), or did not wish to share unpublished data (k = 2). For two studies, AAIs were coded in a language not feasible to translate.

Participants

All parents in the current study were female (N = 1,009) and 51% of the children were female. Parents were on average 29.79 years old (SD = 6.76) at the time of the AAI, and the average age of the children at the time of the Strange Situation procedure was 13.69 months (SD = 1.99). Forty percent of AAIs were conducted prenatally. Among the parent–child dyads for which the AAI was conducted after birth, children were on average 22.63 months old (SD = 22.52) when the interview was conducted. In the majority of studies using the Strange Situation procedure (k = 6; 64% of dyads), the AAI was conducted before the Strange Situation; in three studies (25% of dyads), participants were administered the AAI and the Strange Situation concurrently; and in two studies (11% of dyads), Strange Situation data were collected before the AAI. In total, 15% of participants were single, 18% had finished primary education or less, and 62% of the parent–child dyads were considered at-risk due to characteristics such as teenage motherhood, preterm birth, adoptive families, or substance abuse (see Verhage et al., 2018). The 13 studies included in this project were published between 1999 and 2016, and originated from seven countries (Canada, Denmark, Germany, Italy, Japan, the Netherlands, and the United States).

Statistical procedure

The statistical analysis for Research Question 1 was conducted using Mplus (version 7.4; Muthén & Muthén, 2015) and the analyses for Research Questions 2, 3, and 4 were performed in R (version 3.5.2; R Core Team, 2018) and Stata (version 16.1; StataCorp, 2019). R packages MplusAutomation (Hallquist & Wiley, 2018) and tidyverse (Wickham et al., 2019) were used to prepare the data.

Research Question 1

Latent class analysis (LCA) was first used to investigate which patterns of indicators differentiate interviewees with or without unresolved loss/abuse. Separate latent class models were fitted for indicators of unresolved loss and unresolved abuse. Latent classes were estimated using maximum likelihood estimation with robust standard errors. The number of latent classes was decided based on the Bayesian Information Criterion (BIC) value and the interpretability of the classes (Nylund et al., 2007). As a follow-up, we investigated the association between participants’ at-risk background and the latent classes resulting from the best-fitting models.
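As a rough illustration of the BIC comparison described here, the Python sketch below computes the BIC for unrestricted latent class models with binary indicators and picks the class count with the lowest value. The function names are hypothetical, any log-likelihood values passed in are the caller's own, and the sketch covers only the numerical criterion; interpretability of the classes, which the study also weighed, cannot be automated this way.

```python
import math
from typing import Dict


def lca_free_parameters(n_classes: int, n_indicators: int) -> int:
    """Free parameters of an unrestricted latent class model with binary indicators."""
    # (C - 1) class proportions plus one item probability per class and indicator.
    return (n_classes - 1) + n_classes * n_indicators


def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Bayesian Information Criterion; lower values indicate better fit."""
    return -2.0 * log_likelihood + n_params * math.log(n_obs)


def best_by_bic(log_likelihoods: Dict[int, float], n_indicators: int, n_obs: int) -> int:
    """Return the number of classes whose model has the lowest BIC."""
    scores = {
        c: bic(ll, lca_free_parameters(c, n_indicators), n_obs)
        for c, ll in log_likelihoods.items()
    }
    return min(scores, key=scores.get)
```

With 16 binary indicators, a two-class model has 33 free parameters and a three-class model has 50, so an extra class must improve the log-likelihood enough to offset the larger penalty.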

Research Question 2

Our second aim was to define a psychometric model that may underlie the construct of an unresolved state of mind by investigating the contribution of each indicator to overall ratings and classifications of unresolved states of mind. To do this, we used predictive modeling (i.e., statistical or machine learning) with R package caret (version 6.0-86; Kuhn, 2020). To our knowledge, these techniques are rarely used in developmental psychopathology research. Therefore, these methods are explained in more detail than would normally be reported in a primary research article.

Aims of predictive modeling

As described by Yarkoni and Westfall (2017), most research in psychology has focused on identifying and explaining mechanisms of human behavior, mostly using statistical inference (explanatory) techniques. However, more methodological tools have now become available to test predictive models. For some research questions in psychology, predictive models have the advantage over explanatory models of testing how well a set of independent variables predicts a given outcome, with the aim of achieving good prediction accuracy. In the case of searching for a psychometric model that may underlie the construct of an unresolved state of mind, predictive models may thus help us to find a set of indicators of unresolved loss/abuse that do best in predicting unresolved scores and classifications.

When using predictive modeling, it is common practice to fit a model on one part of the dataset (the “training” data) and to evaluate its predictive performance on another part of the dataset (the “testing” or “out-of-sample” data). This approach makes it possible to test how well a predictive model generalizes to new data. In our study, we used 70% of the dataset as training data and 30% as testing data. Further, it is important to consider the trade-off between model complexity and prediction accuracy. Using more complex models can lead to better prediction accuracy, but these models may be more difficult to interpret. In addition, testing more complex models could lead to overfitting. Overfitting refers to situations in which a predictive model fits well on the data on which it is trained, but does not generalize to new data. It is therefore important to find a model that is flexible enough to identify patterns in the data, but to constrain model complexity so that the model achieves good predictive performance on the out-of-sample data. One way to address overfitting in predictive modeling is to use regularization techniques, such as ridge regression and lasso regression. These techniques add penalty terms to the model that shrink the coefficients of predictor variables that explain little variance in the outcome variable toward zero (ridge) or force them to exactly zero (lasso) (James et al., 2013; Kuhn & Johnson, 2013).
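The two ideas in this paragraph, a 70/30 split and penalized coefficients, can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the caret workflow used in the study: the split is a simple random shuffle (the study's partitioning scheme is not described here), and the penalty formulas are the closed-form solutions for a single predictor, where `sxy` is the sum of x·y and `sxx` is the sum of x². All function names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def train_test_split(
    rows: Sequence, train_fraction: float = 0.7, seed: int = 0
) -> Tuple[List, List]:
    """Shuffle rows reproducibly and split them into training and testing parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def ridge_coefficient(sxy: float, sxx: float, lam: float) -> float:
    """Minimizer of 0.5*sum((y - b*x)^2) + 0.5*lam*b^2 for a single predictor."""
    return sxy / (sxx + lam)


def lasso_coefficient(sxy: float, sxx: float, lam: float) -> float:
    """Minimizer of 0.5*sum((y - b*x)^2) + lam*|b| for a single predictor."""
    # Soft-thresholding: weak associations are set to exactly zero.
    shrunk = max(abs(sxy) - lam, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx
```

The contrast between the last two functions shows why lasso can drop predictors entirely: ridge only scales a coefficient down, while lasso returns zero whenever the absolute value of `sxy` does not exceed the penalty.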

Developing the predictive models

The outcome of the predictive models was participants’ unresolved score (range 1–9). The following variables were used as predictors: indicators of unresolved loss/abuse, at-risk background, reported loss, reported abuse (all dichotomously coded), and study sample (12 dummy variables). Using the training data (70% of the dataset), we fitted nine predictive models: linear regression (ordinary least squares), lasso regression, ridge regression, multivariate adaptive regression splines (MARS), logic regression, random forest, and support vector machines with a linear, polynomial, and radial kernel. These models are commonly used supervised learning models for continuous outcome variables (i.e., regression models). Supervised learning models are used to address questions about the association between predictors (in this case: indicators of U) and a given outcome (in this case: unresolved scores). These models use predictors and labeled outcome values to train the algorithm, attempting to minimize the mean squared error, that is, the average squared difference between the predicted values and the actual, observed values.

Some of the models used in this study are linear regression models or cousins of linear regression (linear, lasso, and ridge regression) and some are nonlinear regression models (MARS, logic regression, random forest, and support vector machines). These models vary in complexity, ranging from simpler models (e.g., linear, lasso, or ridge regression) to more complex models (e.g., random forest). We used a variety of predictive models in this study, because we attempted to find a model that would do best in predicting unresolved scores and classifications. In the event that a simple and a complex model would do equally well in predicting the outcome, we would choose the simple model over the complex one as the final model, because complex models may be more difficult to interpret (James et al., 2013). After fitting the models on the training data, the outcomes were predicted on the testing data (30% of the dataset). The models’ predictive performance for unresolved scores was evaluated by calculating the models’ precision, sensitivity, and specificity for unresolved classifications (unresolved/not unresolved, dichotomously coded). The final model was chosen based on the trade-off between sensitivity and specificity (using Stata package SENSPEC; Newson, 2004).
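The evaluation metrics named in this paragraph can be computed from a confusion matrix, as in the Python sketch below. It assumes that predicted continuous scores are converted to classifications by a cutoff supplied by the caller; the function name `classification_metrics` and the `cutoff` parameter are hypothetical, and the sketch does not reproduce the SENSPEC procedure.

```python
from typing import Dict, Sequence


def classification_metrics(
    actual: Sequence[int], predicted_scores: Sequence[float], cutoff: float
) -> Dict[str, float]:
    """Precision, sensitivity, and specificity after thresholding predicted scores."""
    predicted = [int(score >= cutoff) for score in predicted_scores]
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)  # true positives
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)  # false positives
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)  # false negatives
    tn = sum(1 for a, p in pairs if a == 0 and p == 0)  # true negatives

    def ratio(numerator: int, denominator: int) -> float:
        # Undefined ratios (empty denominator) are reported as NaN.
        return numerator / denominator if denominator else float("nan")

    return {
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }
```

Evaluating the function over a range of cutoffs shows the trade-off directly: lowering the cutoff raises sensitivity at the cost of specificity, and raising it does the reverse.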

To explore how model performance varies if fewer indicators of unresolved loss/abuse were used to predict unresolved scores, we retested the final model using only the top seven, five, and three most important predictors. The other predictors were not included in these models. Obtaining relatively high out-of-sample predictive performance for unresolved scores using fewer indicators may have implications for theoretical parsimony of the construct of unresolved states of mind as well as scalability of the measure.

Research Question 3

The third aim was to investigate how the psychometric model of unresolved states of mind resulting from Research Question 2 would relate to infant disorganized attachment. To test this, the same nine predictive models as in Research Question 2 were developed using the smaller sample of parent–child dyads who participated in the Strange Situation (n = 930). The predictive models were trained on 70% of the dataset. The outcome of the predictive models was participants’ unresolved score (range 1–9). Predictors were: indicators of unresolved loss/abuse, at-risk background, reported loss, reported abuse (all dichotomously coded), and study sample (12 dummy variables). The predictive validity of these models for infant disorganized attachment was assessed using the testing data (30% of the dataset). The models’ predictive performance for infant disorganization was evaluated by calculating the models’ precision, sensitivity, and specificity for infant disorganized attachment classifications (disorganized/not disorganized, dichotomously coded). As with Research Question 2, the final model was chosen based on the trade-off between sensitivity and specificity (using Stata package SENSPEC; Newson, 2004).

Research Question 4

The fourth aim was to investigate the association between unresolved “other trauma” and infant disorganized attachment. Using multilevel modeling to account for the nested data, we investigated the association between ratings of unresolved other trauma and infant disorganized attachment, over and above unresolved loss/abuse. These analyses were conducted using R packages nlme (Pinheiro et al., Reference Pinheiro, Bates, DebRoy and Sarkar2020) and lme4 (Bates et al., Reference Bates, Maechler, Bolker and Walker2015).

Handling missing data

Nearly 5% of the parent–child dyads (n = 45) had missing data on infant disorganized attachment. The way in which missing data were handled depended on the type of analysis. For the predictive models used to address Research Question 3, missing disorganized attachment scores were imputed by the sample mean because these models could not be estimated when the variables contained missing values. The multilevel regression analyses (Research Questions 1 and 4) used full maximum likelihood estimation to deal with these missing data.
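
As a minimal illustration of the mean imputation described above, the following Python sketch replaces missing scores with the mean of the observed scores. The function name and the use of `None` to mark missing values are assumptions made for illustration; the study's own implementation is not described in this section.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: no observed values")
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


# Toy scores (hypothetical, not study data); None marks a missing score.
print(impute_mean([1.0, None, 3.0]))
```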

Results

Descriptive statistics

The total sample consisted of 1,009 participants clustered in 13 study samples. Ninety-three percent of participants reported at least one applicable loss, 23% reported at least one applicable abuse experience, and 21% reported both loss and abuse during the AAI. Of the participants reporting loss, 78% reported no abuse, and 9% of participants who reported abuse did not report any loss.

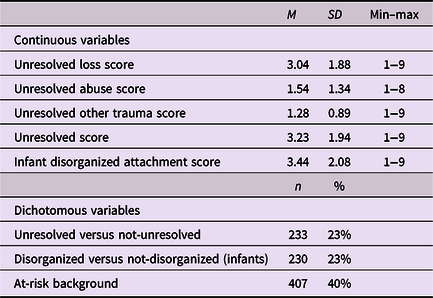

Descriptive statistics of unresolved loss/abuse and infant disorganized attachment are shown in Table 1. The correlation between unresolved loss and unresolved trauma scores was statistically significant (r = .20, p < .001). The correlation between the highest unresolved scores and infant disorganized attachment score was also statistically significant (r = .13, p < .001). The raw frequencies of the indicators of unresolved loss and abuse and the correlation matrices are reported in Supplement 2.

Table 1. Descriptive statistics of the study variables

Note. Unresolved score was determined by the highest score from the unresolved loss and abuse scales. Participants without applicable loss or trauma were given a score of 1 on the corresponding rating scales (7% for unresolved loss, 77% for unresolved abuse, and 83% for unresolved other trauma).

Research Question 1: Patterns of indicators that differentiate interviewees with or without unresolved loss/abuse

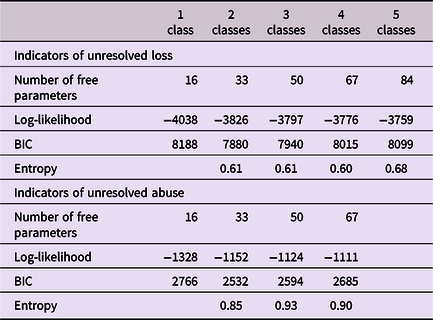

LCA was used to investigate patterns of indicators that differentiate interviewees with and without unresolved loss/abuse. We used a three-step approach (Bolck et al., Reference Bolck, Croon and Hagenaars2004). Separate latent class models were fitted for indicators of unresolved loss and unresolved abuse. Table 2 presents the model fit parameters of the estimated models. For both unresolved loss and abuse, the 2-class models were chosen as the best-fitting models. The 2-class models of unresolved loss and abuse were chosen on the basis of the lowest BIC values, theoretical plausibility, and clear differentiation of interviewees with and without unresolved loss/abuse.

Table 2. Results from the latent class analysis of indicators of unresolved loss and unresolved abuse

Note. BIC = Bayesian Information Criterion. The models with > 5 classes for unresolved loss and > 4 classes for unresolved abuse resulted in model nonidentification and were therefore not reported.

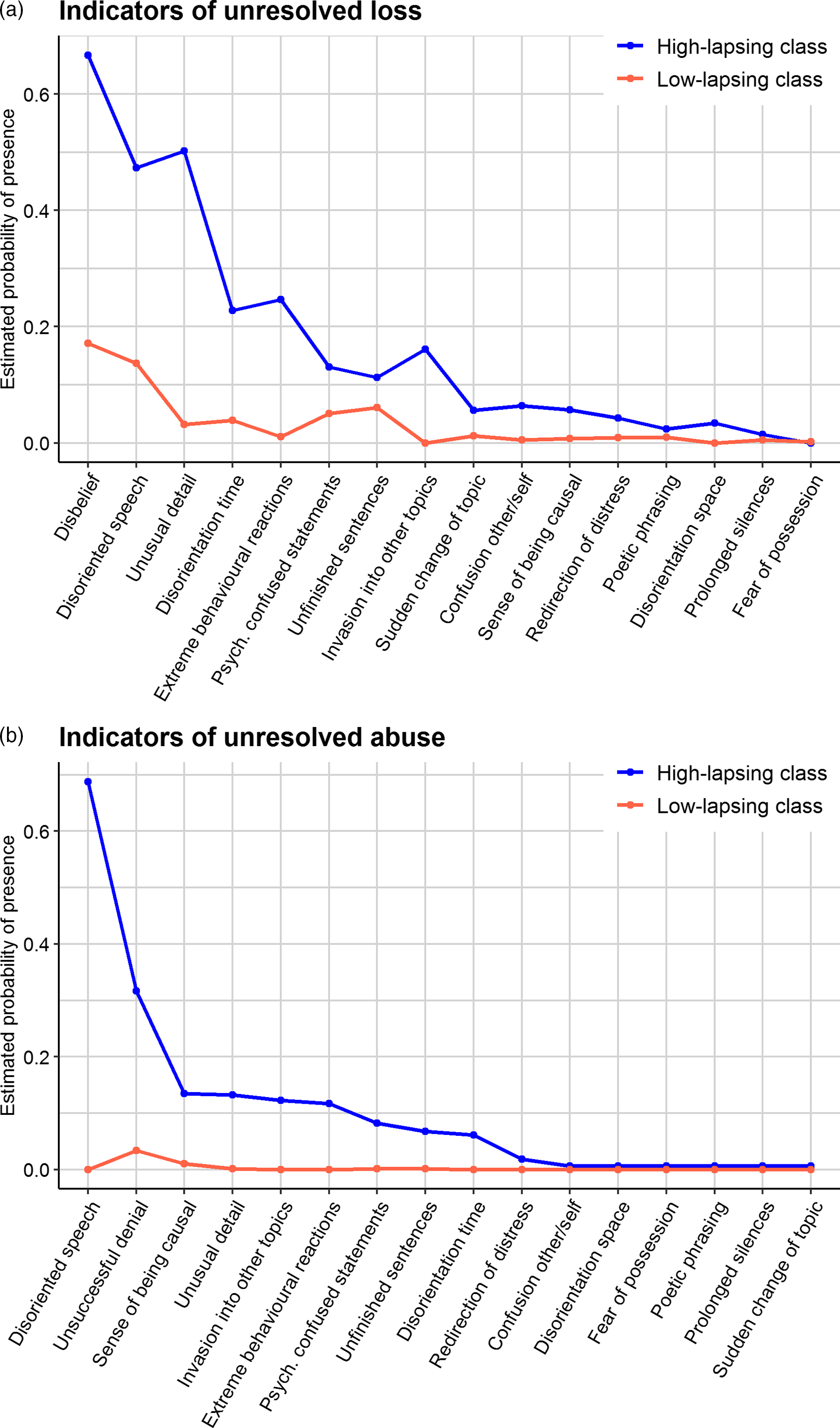

As seen in the profile plots in Figure 1, the two latent classes of unresolved loss (A) were distinguished based on the probability of showing disbelief, disoriented speech, unusual attention to detail, disorientation with regard to time, and extreme behavioral reactions. The two latent classes of unresolved abuse (B) were distinguished based on the probability of showing disoriented speech and unsuccessful denial of abuse. As seen in the figure, the order of indicators discriminating the latent classes closely follows the frequency of occurrence: the indicators providing the most information for discriminating the classes were the ones most frequently identified in the interviews (see Supplement 2 for raw frequencies). The profiles characterized by high probabilities of showing the frequently occurring indicators were designated as high-lapsing classes, and the profiles characterized by low-to-zero probabilities of showing these indicators were designated as low-lapsing classes. Forty percent of the participants with loss experiences belonged to the high-lapsing unresolved loss class and 62% of the participants who reported abuse experiences belonged to the high-lapsing unresolved abuse class. The bivariate residuals, and the associations between the latent classes, unresolved scores, unresolved classifications, and infant disorganized attachment scores are reported in Supplement 2.

Figure 1. Profile plots from the latent class analysis for indicators of unresolved loss (a) and unresolved abuse (b). The lines represent the latent classes from the best-fitting models. The indicators on the x-axis are arranged in descending order of frequency of occurrence.

Associations between the latent classes and at-risk background

As a follow-up, we investigated the association between participants’ at-risk background and the latent classes resulting from the best-fitting models. Multinomial logistic regression analysis showed that at-risk background was significantly associated with belonging to the high-lapsing unresolved loss class (B = 2.17, SE = 0.26, p < .001) and the high-lapsing unresolved abuse class (B = 2.36, SE = 0.41, p < .001). Participant–child dyads considered at-risk had a predicted probability of 0.58 of falling into the high-lapsing unresolved loss class and a predicted probability of 0.25 of falling into the high-lapsing unresolved abuse class. Dyads considered not at-risk had a predicted probability of 0.14 of falling into the high-lapsing unresolved loss class and a predicted probability of 0.03 of falling into the high-lapsing unresolved abuse class.
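
The relation between the reported coefficients and the predicted probabilities can be checked with a short calculation. The sketch below assumes that, with two latent classes, the model reduces to a binary logit in which the coefficient B shifts the log-odds of the not-at-risk group. This is an illustrative assumption and not a description of the software used. Because the inputs are rounded values taken from the text, the results only approximate the reported probabilities.

```python
import math


def logit(p):
    """Log-odds of a probability."""
    return math.log(p / (1.0 - p))


def inv_logit(x):
    """Probability corresponding to a log-odds value."""
    return 1.0 / (1.0 + math.exp(-x))


def at_risk_probability(p_not_at_risk, b):
    """Shift the not-at-risk log-odds by coefficient b and convert back."""
    return inv_logit(logit(p_not_at_risk) + b)


# Rounded values reported in the text.
loss = at_risk_probability(0.14, 2.17)   # reported probability: 0.58
abuse = at_risk_probability(0.03, 2.36)  # reported probability: 0.25
print(round(loss, 3), round(abuse, 3))
```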

Research Question 2: Defining a psychometric model underlying classifications of unresolved states of mind

This Research Question aimed to define a psychometric model that may underlie the unresolved state of mind construct. Using predictive modeling, we examined the extent to which indicators of unresolved loss/abuse are uniquely associated with scale scores (range 1–9) and classifications of unresolved states of mind (unresolved/not unresolved, dichotomously coded).

First, we identified indicators of U with zero or near-zero variance and removed these from the dataset. Variables were identified as having near-zero variance if the following two conditions were met: the ratio of frequencies of the most common value over the second most common value was above a cutoff of 95/5, and the percentage of unique values out of the total number of data points was below 10% (Kuhn, Reference Kuhn2008). Of the 16 indicators of unresolved loss, the following eight had sufficient variance to be included in the models: disbelief that the person is dead, disoriented speech, unusual attention to detail, disorientation with regard to time, extreme behavioral reactions, psychologically confused statements, unfinished sentences, and invasion of the loss into other topics. Only two of the 16 indicators of unresolved abuse could be included: disoriented speech and unsuccessful denial of abuse. In addition to the indicators of unresolved loss/abuse, the following predictors were included: at-risk background (because at-risk background was significantly associated with the high- and low-lapsing unresolved loss/abuse latent classes in Research Question 1), reported loss, reported abuse, and 12 dummy variables of the study samples, to control for the nested data. All variables were dichotomously coded.
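
The two near-zero variance conditions described above can be sketched in a few lines of Python. The function name, parameter names, and toy data are assumptions made for illustration; this is not the code used in the study.

```python
from collections import Counter


def near_zero_variance(values, freq_cut=95 / 5, unique_cut=10.0):
    """Flag a variable as having zero or near-zero variance.

    Both conditions must hold: the ratio of the most common value's
    frequency to the second most common value's frequency exceeds
    freq_cut, and the percentage of unique values is below unique_cut.
    """
    counts = Counter(values).most_common()
    if len(counts) < 2:
        return True  # zero variance: at most one distinct value
    freq_ratio = counts[0][1] / counts[1][1]
    pct_unique = 100.0 * len(counts) / len(values)
    return freq_ratio > freq_cut and pct_unique < unique_cut


# Hypothetical dichotomous indicators (1 = present, 0 = absent).
rare_indicator = [1] * 10 + [0] * 990      # frequency ratio 99, flagged
common_indicator = [1] * 200 + [0] * 800   # frequency ratio 4, retained
print(near_zero_variance(rare_indicator), near_zero_variance(common_indicator))
```

For dichotomous indicators the second condition is almost always met, so in practice the frequency ratio determines whether an indicator is dropped.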

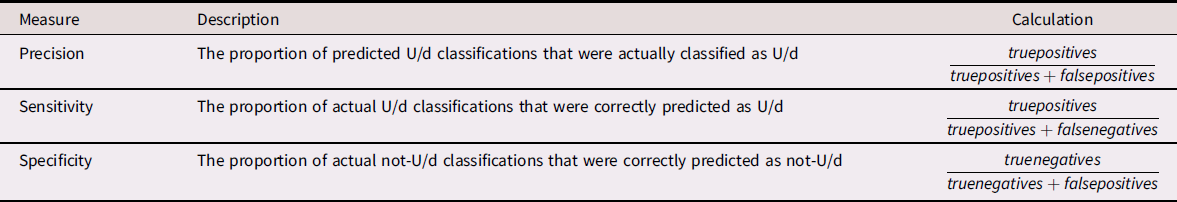

The dataset was randomly split into a 70% training dataset and 30% testing dataset. Both datasets contained no missing values. We fitted nine predictive models on the training data: linear regression (ordinary least squares), lasso regression, ridge regression, MARS, logic regression, random forest, and support vector machines with linear, polynomial, and radial kernels. The outcome of the predictive models was participants’ unresolved score (range 1–9). The models’ predictive performance for unresolved scores was evaluated by calculating the models’ precision, sensitivity, and specificity for unresolved classifications (unresolved/not unresolved, dichotomously coded). Thus, we used the predicted unresolved scores resulting from the models to predict unresolved classifications. See Table 3 for an overview of the model performance measures used in this study.
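
A random 70/30 split of this kind can be sketched as follows. The function name, the seed, and the rounding rule for the cut point are assumptions made for illustration; the section does not report how the split was implemented.

```python
import random


def train_test_split(n_rows, train_fraction=0.7, seed=0):
    """Randomly assign row indices to a training and a testing set."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    cut = round(n_rows * train_fraction)
    return sorted(indices[:cut]), sorted(indices[cut:])


train_rows, test_rows = train_test_split(1009)
print(len(train_rows), len(test_rows))
```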

Table 3. Measures for evaluating predictive model performance

Note. The examples in the “Description” column refer to Research Question 2 (Defining a psychometric model underlying classifications of U/d). The same model performance measures were used for Research Question 3 (read “infant D” instead of “U/d”).

Step 1: Evaluating model performance by precision and sensitivity for unresolved classifications

We first examined whether the predicted unresolved scores from the predictive models could be used to identify participants’ actual unresolved classifications according to the human coders. We used the precision and sensitivity for unresolved classifications as indicators of model performance. Higher precision and higher sensitivity indicate better predictive performance. The precision of unresolved scores predicting unresolved classifications ranged from 70% (random forest) to 88% (lasso). In other words, 88% of the participants classified as unresolved by the lasso regression model had actually been given an unresolved classification by the human coders. The sensitivity for unresolved classifications ranged from 38% (ridge regression) to 50% (support vector machine with a polynomial kernel). In other words, half of the participants classified as unresolved by the human coders were correctly identified by the support vector machine model. The precision and sensitivity of all the models at different threshold unresolved scores are reported in Supplement 3.
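
To make the three performance measures concrete, the sketch below dichotomizes predicted scores at a threshold and compares them with the coders' classifications. The function name and the toy data are hypothetical and serve only to illustrate the definitions.

```python
def classification_metrics(predicted_scores, actual_unresolved, threshold=5.0):
    """Precision, sensitivity, and specificity at a given score threshold."""
    tp = fp = tn = fn = 0
    for score, actual in zip(predicted_scores, actual_unresolved):
        predicted = score >= threshold
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif not predicted and actual:
            fn += 1
        else:
            tn += 1
    nan = float("nan")
    return {
        "precision": tp / (tp + fp) if tp + fp else nan,
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        "specificity": tn / (tn + fp) if tn + fp else nan,
    }


# Toy example (hypothetical values, not study data).
scores = [6.1, 5.2, 4.8, 3.0, 1.5, 5.5, 4.2, 2.0]
actual = [True, True, True, False, False, False, True, False]
print(classification_metrics(scores, actual))
```

In this toy example, two of the three predicted unresolved cases are correct (precision), two of the four actual unresolved cases are found (sensitivity), and three of the four not-unresolved cases are correctly left unflagged (specificity).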

Step 2: Evaluating model performance by examining the trade-off between sensitivity and specificity for unresolved classifications

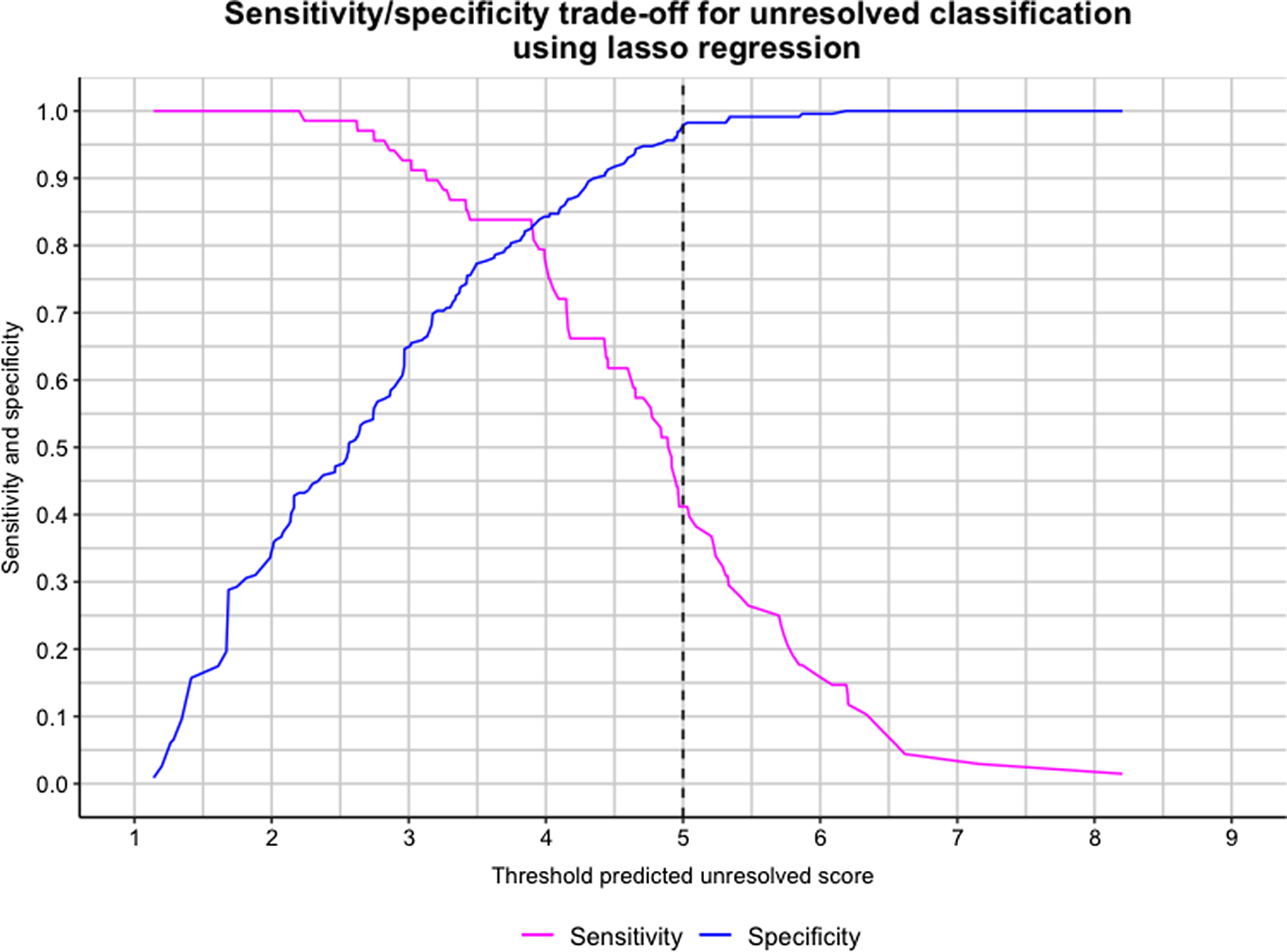

As a more informative way to evaluate the models’ predictive performance, we explored how performance varied if different rules for assigning the unresolved classification were used. In the second step, we therefore examined the trade-off between sensitivity and specificity for unresolved classifications by different threshold unresolved scores predicted by the models (i.e., different rules for assigning the unresolved classification). These analyses were conducted out-of-sample. For each observation, the sensitivity and specificity for classification of unresolved were calculated, with a threshold for classification equal to that participant’s predicted unresolved score. To illustrate: if participant 1 had a predicted unresolved score of 2, score ≥ 2 would be taken as a threshold for classification. In this example, each participant with a predicted unresolved score of ≥ 2 would be given a predicted unresolved classification, and each participant with a predicted unresolved score < 2 would be given a predicted not-unresolved classification. These predicted classifications were then compared with the actual unresolved classifications (according to the human coders) across the entire sample, yielding a number of true positives, true negatives, false positives, and false negatives. This process was repeated for all participants in the sample. Using this approach, we aimed to identify as many transcripts as possible that would likely receive an unresolved classification, without wrongly identifying those without a classification as being a likely candidate. The results were plotted to examine which threshold gives the best sensitivity and specificity trade-off for unresolved classifications. Of particular interest is the level of sensitivity and specificity for unresolved classifications at around a threshold unresolved score of ≥ 5, because this is the AAI coding manual’s threshold for unresolved classification.
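
The threshold procedure described above can be sketched in Python as follows. This is an illustrative stand-in for the Stata package used in the study, not a reproduction of it; the function name and the toy data are hypothetical.

```python
def sensitivity_specificity_curve(predicted_scores, actual_unresolved):
    """Sensitivity and specificity with each predicted score as threshold.

    Returns (threshold, sensitivity, specificity) tuples sorted by
    threshold. Assumes at least one actual positive and one actual negative.
    """
    pairs = list(zip(predicted_scores, actual_unresolved))
    positives = sum(1 for _, actual in pairs if actual)
    negatives = len(pairs) - positives
    curve = []
    for threshold in sorted(set(predicted_scores)):
        # A case is predicted unresolved when its score is >= threshold.
        tp = sum(1 for s, a in pairs if s >= threshold and a)
        tn = sum(1 for s, a in pairs if s < threshold and not a)
        curve.append((threshold, tp / positives, tn / negatives))
    return curve


# Toy example (hypothetical values, not study data).
scores = [6.1, 5.2, 4.8, 3.0, 1.5, 5.5, 4.2, 2.0]
actual = [True, True, True, False, False, False, True, False]
for threshold, sens, spec in sensitivity_specificity_curve(scores, actual):
    print(threshold, sens, spec)
```

Plotting sensitivity and specificity against the threshold gives the kind of trade-off curve examined in this step: sensitivity falls and specificity rises as the threshold increases.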

Sensitivity and specificity plots showed that the lasso regression model (Figure 2) had a favorable performance, and was therefore chosen as the final model. At a threshold predicted unresolved score of ≥ 5 (the AAI coding manual’s threshold score for assigning the classification), the sensitivity of unresolved scores predicting unresolved classifications reached .41. Thus, the lasso regression model correctly predicted 41% of participants with an actual unresolved classification, meaning that 59% of unresolved cases were missed. The specificity was 98%, meaning that the model correctly predicted nearly all not-unresolved cases. A more favorable sensitivity and specificity trade-off was observed if the threshold for unresolved classifications was shifted towards unresolved score ≥ 4. At this threshold, around 80% of the actual unresolved cases were correctly predicted, while still achieving a high specificity level (85%).

Figure 2. The trade-off between sensitivity and specificity for unresolved classification, based on thresholds equal to participants’ predicted unresolved score (lasso regression model; tested out-of-sample). The vertical line indicates the AAI coding manual’s threshold score for unresolved classification (≥5).

Regression coefficients of the final model

The estimated regression coefficients (i.e., feature weights) of the lasso regression model are presented in Table 4. As seen in the table, the lasso regression model forced the coefficient of the at-risk background variable to be zero, meaning that at-risk background did not contribute to predicting unresolved scores. The other predictors were retained in the model, meaning that these variables contributed to predicting unresolved scores.
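
For readers less familiar with lasso regression, the reason a coefficient can be set exactly to zero follows from the form of the penalized objective. The expression below is the standard textbook formulation; the scaling constant differs between software packages, and the value of the penalty parameter used in this study is not given in this section.

```latex
\hat{\beta}^{\mathrm{lasso}}
  = \arg\min_{\beta_0,\,\beta}\;
    \frac{1}{2n}\sum_{i=1}^{n}\Bigl(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Bigr)^{2}
    + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
```

Here $y_i$ is the unresolved score of participant $i$, $x_{ij}$ is the value of predictor $j$, and $\lambda \ge 0$ is the penalty parameter. Because the penalty is on the absolute value of each coefficient, a predictor that adds little to the fit can have its coefficient shrunk exactly to zero, as was the case for at-risk background.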

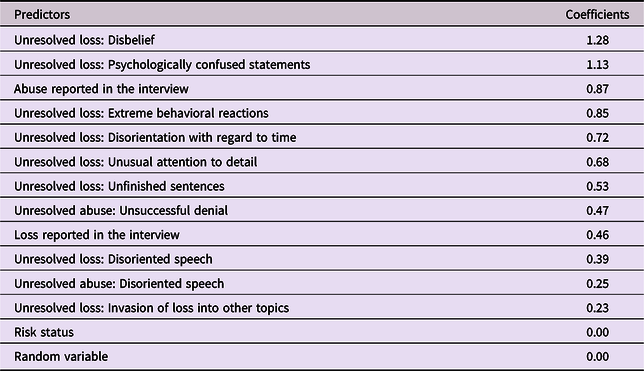

Table 4. Coefficients of the lasso regression model predicting unresolved score

Note. All model predictors except the random variable were dichotomously coded. The coefficients are arranged in decreasing order of size.

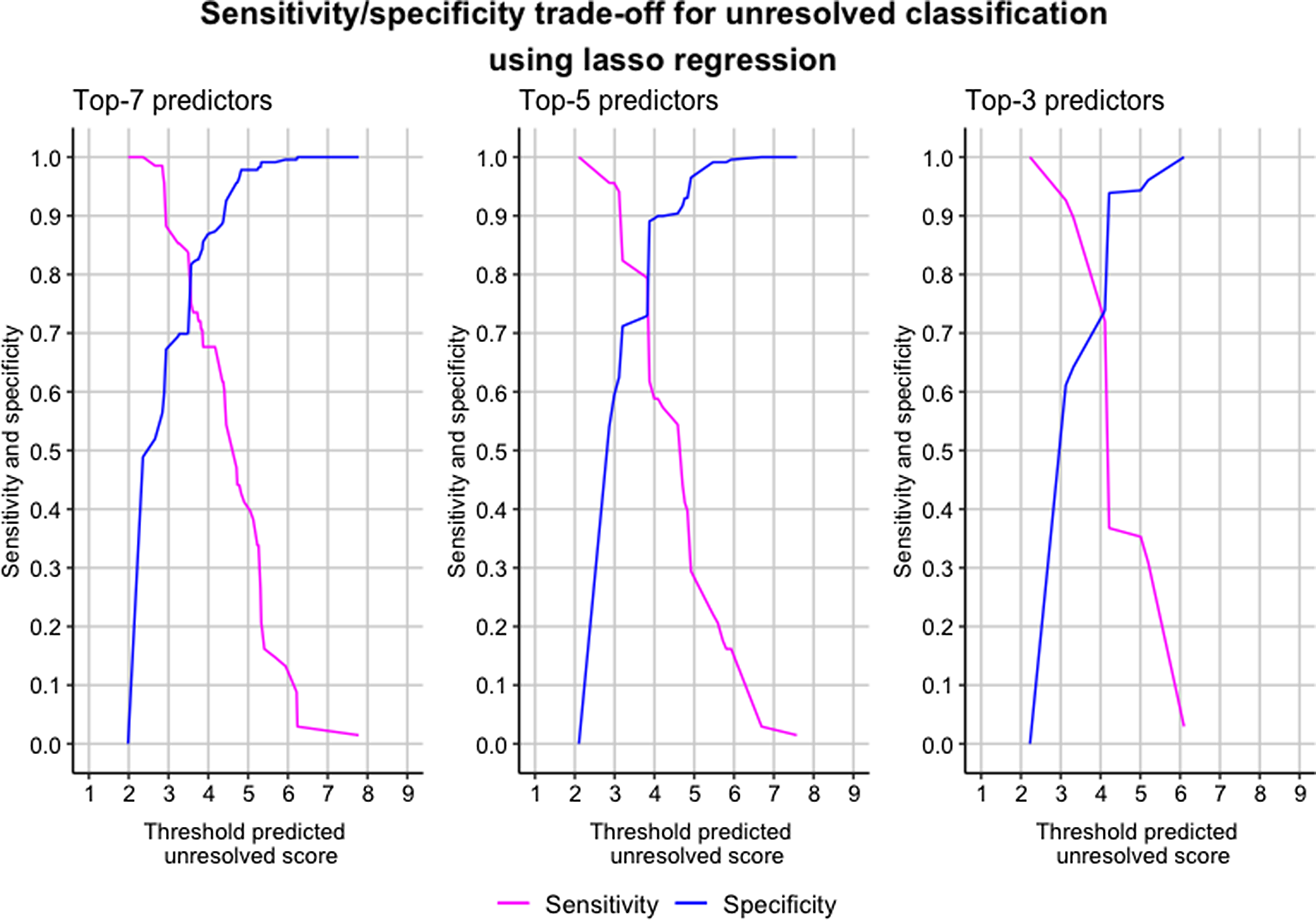

Predicting unresolved states of mind using fewer indicators

To explore the possibility of using fewer indicators to classify interviews as unresolved, we retested three different versions of the lasso regression model: including only the top seven, five, and three predictors (based on the coefficients presented in Table 4). The other predictors were thus not included in these models. By omitting the other predictors from the top three, five, and seven models, we restrained these predictors from contributing to variance in unresolved scores, whereas in the full lasso model even predictors with low relative contribution (i.e., small coefficient sizes) were able to contribute to variance in unresolved scores.
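
Selecting the predictors for the reduced models amounts to ranking the coefficients by absolute size and keeping the first k. In the sketch below the predictor names and weights are hypothetical placeholders, not the values from Table 4, and the function name is an assumption made for illustration.

```python
def top_k_predictors(coefficients, k):
    """Names of the k predictors with the largest absolute coefficients."""
    ranked = sorted(coefficients, key=lambda name: abs(coefficients[name]),
                    reverse=True)
    return ranked[:k]


# Hypothetical placeholder weights, NOT the coefficients from Table 4.
example = {
    "indicator_a": 0.9,
    "indicator_b": -1.4,
    "indicator_c": 0.2,
    "indicator_d": 0.0,
    "indicator_e": 0.5,
}
print(top_k_predictors(example, 3))
```

The reduced model is then refitted using only the selected predictors, so the remaining predictors cannot contribute to the predicted scores.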

The results from each model were again plotted to examine which risk threshold gave the best sensitivity and specificity trade-off for unresolved classification. As shown in Figure 3, we were able to obtain good predictive performance: sensitivity and specificity levels were high (≈ 75%) when only the seven, five, and three most important predictors were included.

Figure 3. The trade-off between sensitivity and specificity for unresolved classification, based on thresholds equal to the predicted unresolved score (lasso regression model; tested out-of-sample). The seven, five, and three most important predictors from the lasso regression model in Table 4 were used.

Summary of results: Research Question 2

The aim of this Research Question was to define a psychometric model underlying the unresolved state of mind construct. We tested nine predictive models with unresolved scores as the outcome, using the indicators of unresolved loss/abuse as predictors. Based on the trade-off between sensitivity and specificity for unresolved classifications, the lasso regression model was selected as the final model. The lasso regression model correctly identified 41% of actual unresolved classifications at a threshold predicted unresolved score of ≥ 5 (the AAI coding manual’s threshold for classification of unresolved), meaning that 59% of unresolved cases were missed (sensitivity). Nearly all (98%) not-unresolved cases were correctly identified by the model (specificity). At a threshold predicted unresolved score of ≥ 4, the lasso regression model correctly predicted 80% of the actual unresolved classifications. Around 85% of not-unresolved cases were correctly predicted by the model. According to the AAI coding manual, interviews with unresolved scores of 4.5 should receive the unresolved classification as an alternate category.

As a follow-up analysis, the lasso regression model was retested using only the three, five, and seven most important predictors, all showing good predictive performance for unresolved classifications (sensitivity and specificity levels around 75%). These findings suggest that the following variables take up the lion’s share of the prediction of unresolved states of mind: disbelief and psychologically confused statements regarding loss, and reported abuse.

Research Question 3: Investigating the predictive significance of the psychometric model of unresolved states of mind for infant disorganized attachment

As a follow-up to Research Question 2, we tested the predictive significance of the psychometric model underlying unresolved states of mind for infant disorganized attachment. For these analyses, only the samples with infant disorganized attachment classifications were used (n = 930). The data were randomly split into a 70% training dataset and 30% testing dataset. Both datasets contained no missing values. Similar to Research Question 2, nine predictive models were trained on the training data with unresolved score as the outcome variable, using the same set of predictors (see Results: Research Question 2 for more information about the predictor variables). The models’ predictive validity for infant disorganized attachment was evaluated using the testing (out-of-sample) data. To explore the predictive validity of these models for infant disorganized attachment, the next two steps were followed.

Step 1: Using predicted unresolved scores to calculate the precision and sensitivity for infant disorganized attachment

To investigate whether participants’ predicted unresolved score (based on the indicators of unresolved loss/abuse) can be used to predict infant disorganized attachment, we used the predicted unresolved scores to calculate the precision and sensitivity for the actual infant disorganized attachment classifications (according to the human coders). These analyses were conducted out-of-sample. Precision and sensitivity give a first indication of the predictive validity of indicators of unresolved loss/abuse for infant disorganized attachment. The precision for infant disorganized attachment classification at threshold score 5 (the AAI coding manual’s threshold for unresolved classification) ranged from 0.27 (support vector machine with a radial kernel) to 0.36 (logic regression). In other words, of the disorganized classifications identified by the logic regression model, 36% were actually given disorganized classifications. The sensitivity for infant disorganization ranged from 0.15 (support vector machine with a radial kernel) to 0.27 (random forest). In other words, 27% of infants classified as disorganized were correctly identified by the random forest model. The precision and sensitivity of all the models at different threshold unresolved scores are reported in Supplement 3.

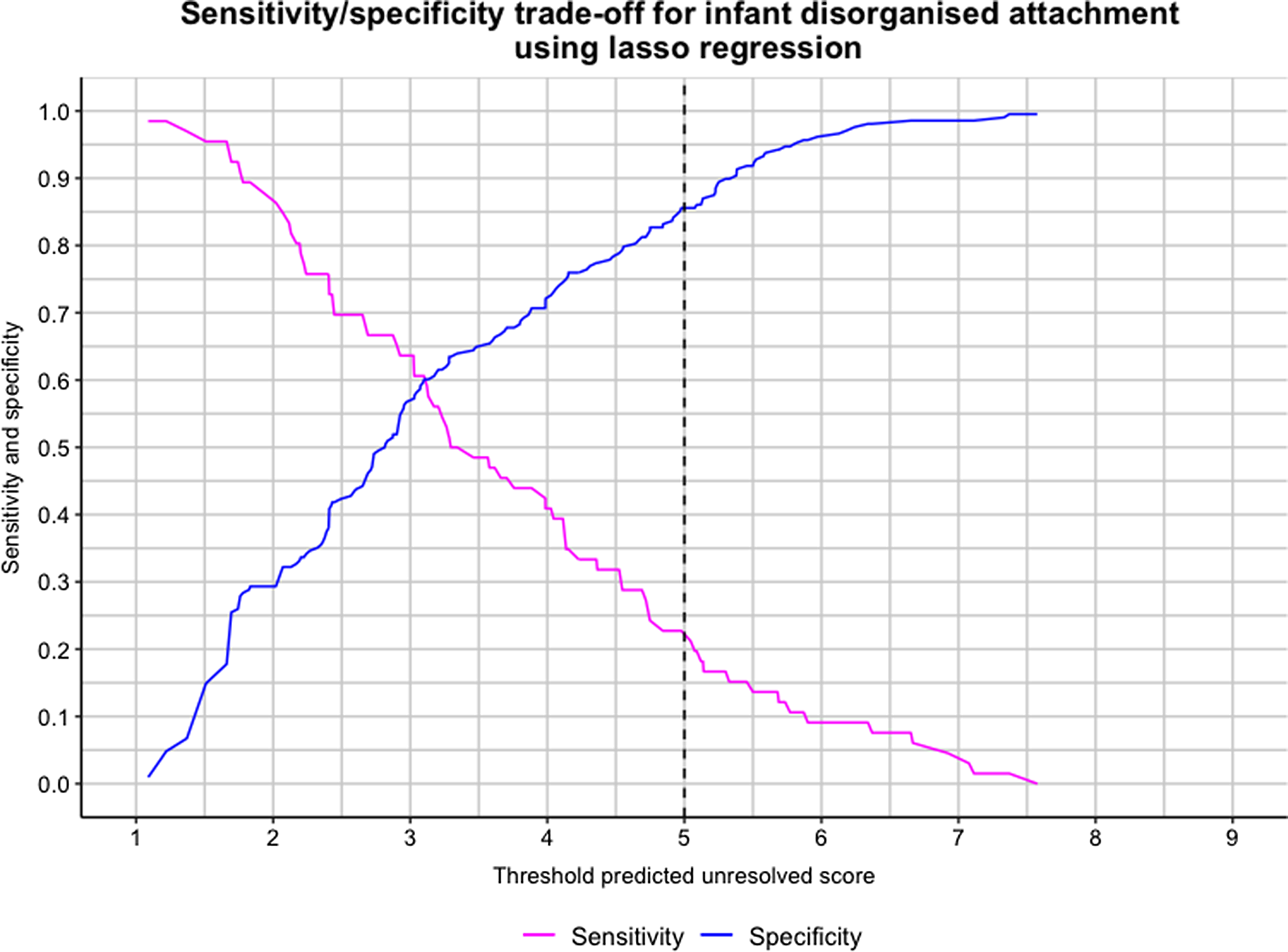

Step 2: Using participants’ predicted unresolved scores to examine the trade-off between sensitivity and specificity for infant disorganized attachment

Next, we examined the trade-off between sensitivity and specificity for infant disorganized attachment classification by different threshold predicted unresolved scores. This way, using adults’ predicted unresolved scores, we aimed to identify as many children as possible who would likely be classified with disorganized attachment, without wrongly identifying those without an actual classification as being a likely candidate. Sensitivity and specificity plots of the predictive models showed similar predictive performance. The lasso regression model (shown in Figure 4) was selected as the final model, because we preferred a simpler, more convenient model (lasso is a regression model) over a more complex model when models show comparable performance. In addition, lasso regression has the benefit of using regularization techniques that force the coefficients of low-contributing variables to be zero, yielding more parsimonious models (Kuhn & Johnson, Reference Kuhn and Johnson2013).

Figure 4. The trade-off between sensitivity and specificity for infant disorganized attachment classification, based on thresholds equal to participants’ predicted unresolved score (lasso regression model; tested out-of-sample). The vertical line indicates the AAI coding manual’s threshold score for unresolved classification (≥5).

At a threshold predicted unresolved score of ≥ 5 (the AAI coding manual’s threshold for the unresolved classification), the lasso regression model correctly predicted 20% of actual disorganized attachment classifications, and 85% of nondisorganized attachment cases were correctly predicted. If the threshold for classification was shifted towards a predicted unresolved score of ≥ 3, sensitivity for classification would increase to around 60%, meaning that around 60% of actual disorganized attachment classifications would be correctly predicted by the model. However, specificity would decrease (to 60%).

Research Question 4: The association between unresolved “other trauma” and infant disorganized attachment

The final Research Question addressed the association between ratings of unresolved “other trauma” and infant disorganized attachment. Multilevel modeling was used to account for the nested data.

Preliminary analysis

We first investigated whether unresolved loss and unresolved abuse scores were uniquely associated with infant disorganized attachment scores. To this end, an unconditional means (intercept-only) model was estimated with the 9-point infant disorganized attachment scores as the dependent variable. When unresolved loss scores were included as an independent variable, the model fit did not significantly improve (χ²(1) = 3.38, p = .066). This means that the regression model with unresolved loss scores was not a better fit to the data than the unconditional means model. Unresolved loss was also not significantly associated with infant disorganized attachment (B = 0.07, SE = 0.04, p = .066). Unresolved abuse scores were then added to the model as an additional independent variable, which did not significantly improve the model fit (χ²(1) = 0.83, p = .363). There was no unique significant association between unresolved abuse and infant disorganization scores (B = 0.05, SE = 0.05, p = .360), controlling for unresolved loss. The association between unresolved loss and infant disorganized attachment remained nonsignificant in this model (B = 0.06, SE = 0.04, p = .106). Because unresolved loss and unresolved abuse were not uniquely associated with infant disorganized attachment, we used the overall unresolved scores (i.e., the highest score from the unresolved loss and abuse scales) for subsequent analyses.

Main analysis

Then, we tested the association between unresolved other trauma scores and infant disorganized attachment scores, over and above unresolved scores. Adding unresolved other trauma to the unconditional means model with infant disorganized attachment scores as the outcome did not significantly improve the model fit (χ²(1) = 1.50, p = .221). Thus, the regression model with unresolved other trauma as an independent variable provided no better fit to the data than the unconditional means model. Unresolved other trauma was not significantly associated with infant disorganized attachment scores (B = 0.10, SE = 0.08, p = .222). When unresolved scores (i.e., the highest score of the unresolved loss/abuse scales) were added to the model as an independent variable, the model fit significantly improved (χ²(1) = 4.30, p = .038). This means that the model with unresolved scores and unresolved other trauma as independent variables showed a better fit to the data than the model with only unresolved other trauma. Higher unresolved scores were significantly associated with higher infant disorganization scores (B = 0.08, SE = 0.04, p = .038), over and above unresolved other trauma. The association between unresolved other trauma and infant disorganized attachment scores remained nonsignificant (B = 0.06, SE = 0.08, p = .427). Taken together, these findings suggest that there is no significant association between unresolved other trauma and infant disorganized attachment, over and above unresolved loss/abuse.
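
The p-values of the model comparisons follow from the chi-square distribution with one degree of freedom, for which the upper-tail probability has a closed form. The sketch below uses this identity to check the reported values. The function name is an assumption made for illustration, and small discrepancies are expected because the reported test statistics are rounded.

```python
import math


def chi2_df1_p_value(chi2_stat):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    A chi-square variable with 1 df is the square of a standard normal
    variable, so P(X > x) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(chi2_stat / 2.0))


# Likelihood-ratio statistics reported in the text, each with df = 1.
for stat in (3.38, 0.83, 1.50, 4.30):
    print(stat, round(chi2_df1_p_value(stat), 3))
```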

Discussion

In the late 1980s, Main and colleagues used a semi-inductive methodology to develop a detailed coding system for assessing adults’ unresolved states of mind regarding attachment. The coding system was initially developed based on a variety of linguistic indicators identified in the AAIs with 88 parents in the Berkeley Social Development Study (Duschinsky, Reference Duschinsky2020; Main et al., Reference Main, Kaplan and Cassidy1985). The manual provides coders with a large set of indicators for classifying interviews as unresolved. However, it is not yet known which indicators are psychometrically valid and which may account for most of the discriminating validity of unresolved classifications. Researchers have not yet had the methodology or large samples to evaluate the relative contributions of individual indicators of unresolved loss/abuse. In this paper, we took a novel approach to examine key criteria used to assign the unresolved states of mind classification, using a large sample of AAI and parent–child attachment data gathered by the CATS.

Indicators of unresolved loss and abuse: Separating the wheat from the chaff

Unresolved states of mind are coded based on marked lapses in reasoning, discourse, or behavior surrounding loss or abuse. Coders are instructed to rate the presence of these indicators and their relative strength on two 9-point scales: unresolved loss and unresolved abuse. Our first aim was to investigate which patterns of indicators may differentiate interviewees with and without unresolved loss/abuse (Research Question 1). The findings from the LCA suggested a group of participants with a high likelihood of displaying a subset of commonly occurring indicators (regarding loss: disbelief, disoriented speech, unusual attention to detail, disorientation with regard to time, extreme behavioral reactions, psychologically confused statements, unfinished sentences, and invasion of the loss into other topics; regarding abuse: disoriented speech and unsuccessful denial) and a group of participants with a near-zero likelihood of showing any indicators.

Eight of 16 indicators of unresolved loss and 14 of 16 indicators of unresolved abuse showed low occurrence (i.e., present in between 0.01% and 3% of interviews). Of these rare indicators, seven were identified not more than once across the full set of 1,009 interviews. For unresolved loss, this was fear of possession, and for unresolved abuse, these were confusion between the abusive person and the self, disorientation with regard to space, fear of possession, poetic phrasing, prolonged silences, and sudden changes of topic/moving away from the topic of abuse. Idiosyncratic as these expressions are, this suggests that these indicators are not part of the unresolved state of mind classifications that are actually observed in practice. The AAI coding manual states that both the unresolved loss and abuse scales may be used to assess all potentially traumatic events (Main et al., Reference Main, Goldwyn and Hesse2003, p. 131) and that the scales will be combined in future editions of the manual (p. 142). Apart from fear of possession by the abusive figure, the five indicators of unresolved abuse that did not occur more than once in our study are originally listed under the unresolved loss scale. Similarly, fear of possession of the deceased person – originally listed under the unresolved abuse scale – was only observed once in our dataset. Future editions of the AAI coding manual might want to include lists of indicators that primarily occur during discussions of loss, indicators that primarily occur during discussions of abuse, and indicators that are rarely seen.

Searching for a psychometric model of unresolved states of mind

Next, we aimed to define a psychometric model that may underlie the unresolved state of mind construct (Research Question 2). Predictive modeling was used to explore associations between the specific indicators of unresolved loss and abuse and participants’ overall unresolved scores and classifications. Except for at-risk background, all included variables contributed to the prediction of unresolved scores in the final model (lasso regression). These findings suggest a psychometric model of unresolved states of mind consisting of a linear combination of the following indicators of unresolved loss and abuse, in decreasing order of coefficient size: disbelief and psychologically confused statements regarding loss; reported abuse; extreme behavioral reactions, disorientation with regard to time, unusual attention to detail, and unfinished sentences regarding loss; unsuccessful denial of abuse; reported loss; disoriented speech regarding loss; disoriented speech regarding abuse; and invasion of loss into other topics.

In the final predictive model, the indicators of unresolved loss and abuse correctly predicted nearly all not-unresolved classifications by the human coders but fewer than half of the actual unresolved cases when predicted unresolved scores of ≥ 5 were used as the threshold for classification. However, when predicted unresolved scores of 4 or higher were used as the threshold for classification, the model correctly predicted the majority of actual not-unresolved and unresolved cases (around 80%). This finding is at odds with the coding manual’s instruction that interviews with a score below 5 should not receive the unresolved classification (but interviews scored 4.5 or 5 may receive U as an alternate category; Main et al., Reference Main, Goldwyn and Hesse2003). A prior study from CATS (Raby et al., Reference Raby, Verhage, Fearon, Fraley, Roisman and Van IJzendoorn2020) suggested that individual differences in unresolved states of mind may be dimensionally rather than categorically distributed. Together with these findings, our results might suggest that the traditional assumption of using scores of 5 as a cutoff for classification be reconsidered.

These findings might partially be due to unreliability of the coding of indicators of unresolved loss and abuse. Measurement error in predictor variables may affect the predictive performance of statistical learning models (Jacobucci & Grimm, Reference Jacobucci and Grimm2020). But the findings may also suggest that the mere presence or absence of indicators provides insufficient information for constructing a model for the way in which unresolved loss and abuse are coded. We could not include the relative strength of individual indicators in the predictive models, because these ratings were not consistently reported in the coders’ notes. Notably, these ratings can come with coder subjectivity, which may be challenging to capture in psychometric models. The coding manual provides examples of indicators of unresolved loss/abuse and their relative strength to help coders with identifying these in interviews. Coders have to “generalize in looking for a fit to their own transcripts” (Main et al., Reference Main, Goldwyn and Hesse2003, p. 132) as lapses can present in a variety of ways. The interpretation and scoring of lapses also depend on the context of the narrative. Such variation may be difficult to operationalize, not least because this would require a more detailed differentiation of indicators than currently described in the coding manual (e.g., different categories of disbelief lapses). This might be undesirable for coders given that the coding system is already detailed and requires extensive training.

In the final predictive model, the indicators “disbelief” and “psychologically confused statements” regarding loss made the largest contribution to the prediction of unresolved scores. Both of these indicators are lapses in the monitoring of reasoning: the first indicates disbelief that the deceased person is dead, and the second indicates attempts to psychologically “erase” past or ongoing experiences (as described by Main et al., 2003, p. 136). The third most important predictor of unresolved scores was reported abuse, meaning that unresolved scores were predicted by reported abuse independent of the presence of indicators of unresolved abuse. When the final predictive model was retested with only these three predictor variables, the model correctly predicted around 75% of not-unresolved and unresolved classifications by the human coders. The predictive performance of this model was similar to the performance of the models with the top five and top seven indicators. This finding underscores that disbelief and psychologically confused statements regarding loss, together with reported abuse, may be especially important for the construct of unresolved state of mind.

The predictive value of reported abuse, irrespective of lapses in the monitoring of reasoning or discourse regarding abuse, raises the possibility that discussion of abuse may lead to speech acts that are not currently part of the coding system but are nonetheless marked as “unresolved.” Alternatively, the presence of abuse in an interview may contribute to other aspects of coder judgement in assigning unresolved scores that are not based on the indicators of unresolved loss and abuse. This finding also raises a concern that has repeatedly been discussed in the literature about potential variation in the degree to which interviewers probe for abuse experiences (e.g., Bailey et al., 2007; Madigan et al., 2012). When asked about experiences of abuse during the interview, speakers may refuse to talk about these experiences or display so much distress that the interviewer does not probe further, resulting in fewer opportunities to record indicators of unresolved abuse, while subsequent probing of loss experiences may still elicit indicators of unresolved loss. Another possible explanation for the findings may be that the presence of abuse in combination with loss leads to linguistic disorganization in thinking and reasoning regarding loss, resulting in elevated unresolved scores for loss, but without the presence of indicators of unresolved abuse, due to limited probing.

Predicting infant disorganized attachment using the indicators of unresolved loss and abuse

To explore the predictive significance of the psychometric model of unresolved states of mind, we examined its relation to infant disorganized attachment (Research Question 3), the variable through which the system for coding unresolved states of mind was originally semi-inductively created (Duschinsky, 2020). As for Research Question 2, we first used the indicators of unresolved loss and abuse to predict unresolved scores assigned by the human coders. The predicted unresolved scores were then used to calculate the specificity and sensitivity for infant disorganized attachment classifications. Using predicted unresolved scores of 3 or higher as a threshold for infant disorganized attachment classifications, we were able to correctly identify 60% of infants with a disorganized attachment classification and 60% of infants without such a classification.
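
For readers less familiar with these metrics, the threshold-based calculation can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in this study: the function name `sensitivity_specificity` and the toy values are hypothetical and were chosen only to show how moving the cutoff trades sensitivity against specificity.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    scores: Sequence[float],
    observed: Sequence[bool],
    threshold: float,
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) when scores >= threshold count as positive.

    scores:    predicted unresolved scores (hypothetical input).
    observed:  True if the observed classification is positive
               (e.g., infant classified as disorganized).
    """
    if len(scores) != len(observed):
        raise ValueError("scores and observed must have the same length")

    tp = fn = tn = fp = 0
    for score, is_positive in zip(scores, observed):
        predicted_positive = score >= threshold
        if is_positive and predicted_positive:
            tp += 1  # positive case correctly identified
        elif is_positive:
            fn += 1  # positive case missed
        elif predicted_positive:
            fp += 1  # negative case wrongly flagged
        else:
            tn += 1  # negative case correctly identified

    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")

    return tp / (tp + fn), tn / (tn + fp)


# Made-up toy values for illustration only; these are NOT study data.
toy_scores = [1.0, 2.5, 3.0, 4.5, 5.0, 2.0, 3.5, 1.5]
toy_disorganized = [False, False, True, True, True, True, False, False]

for cutoff in (3, 4, 5):
    sens, spec = sensitivity_specificity(toy_scores, toy_disorganized, cutoff)
    print(f"cutoff >= {cutoff}: sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

In this toy example, lowering the cutoff identifies more of the positive cases at the cost of more false positives, which is the trade-off at issue when a threshold of 3, 4, or 5 is chosen.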

It is important to note that the unresolved states of mind category, and the AAI more broadly, were developed for group-level research and are not diagnostic systems for assessing infant disorganized attachment at the individual level (see Forslund et al., 2021, for a discussion). Therefore, the outcomes of this analysis should not be interpreted as diagnostic. Instead, the current exercise should be viewed as an exploratory approach to examining associations between individual indicators of unresolved loss/abuse and infant disorganized attachment in the context of the psychometric validity of the unresolved state of mind construct. In addition, the limitations of our approach should be considered, such as the reliability of the input data and the insufficient information about the relative strengths of the indicators of unresolved loss/abuse. With these caveats in mind, the current findings provide no evidence that some indicators of unresolved loss/abuse are more predictive of infant disorganized attachment than other indicators. Our findings indicate that unresolved states of mind are predictive of infant disorganized attachment but that this association is not accounted for by the presence or absence of individual indicators. The behavioral indicators of infant disorganized attachment are rather heterogeneous, with potentially different functions and underlying processes (Solomon et al., 2017). Hesse and Main (2006) have predicted that specific manifestations of caregivers’ unresolved loss and abuse will be linked to different parenting behaviors, leading to varying forms of disorganized attachment behavior in the Strange Situation. Future research might follow up Hesse and Main’s proposal by exploring associations between individual indicators of unresolved states of mind, parenting behavior, and indices of infant disorganized attachment.

Significance of unresolved “other trauma” for infant disorganized attachment

Research Question 4 concerned the association between unresolved “other trauma” and infant disorganized attachment. We found no significant association between ratings on the unresolved other trauma scale and infant disorganized attachment, controlling for ratings of unresolved loss/abuse. There was also no significant bivariate association between unresolved other trauma and infant disorganized attachment in this sample. These findings appear to contradict previous arguments, especially from clinician-researchers, that the construct of an unresolved state of mind should be expanded to include experiences other than loss and abuse by attachment figures, and that doing so would improve prediction of infant attachment disorganization (George & Solomon, 1996; Levinson & Fonagy, 2004; Lyons-Ruth et al., 2005). To some extent, this finding may be an artifact of the protocol for delivering the AAI, rather than solely reflecting a weaker relationship between “other trauma” and unresolved states of mind. The unresolved other trauma score reflects a variety of potentially traumatic events that the interviewee may bring up in response to questions asking whether they have had experiences that they would regard as potentially traumatic, other than any difficult experiences they had already described. Such a broad prompt will lead to a variety of answers, including but not limited to experiences that fall within the domain of trauma, weakening the signal of potential causal relationships. Furthermore, there appears to be marked variation in the extent to which other potentially traumatic experiences are probed by interviewers. The interview guide explicitly states that “many researchers may elect to treat this question [about potential traumatic events] lightly, since the interview is coming to a close and it is not desirable to leave the participant reviewing too many difficult experiences just prior to leave-taking” (George et al., 1996, p. 56). Some of the “other traumatic” events reported in the interview may profoundly affect the parent–child attachment relationship, while others may have no implications whatsoever. In addition, it is possible that some unresolved other trauma may be associated with other relevant outcomes, such as frightening or anomalous parenting. More descriptive work is needed on the experiences marked as unresolved other trauma, and on the extent to which these experiences may be associated with parent–child attachment and parenting behavior.

Conceptualization of unresolved states of mind

The findings for Research Question 2 (defining a psychometric model that may underlie the construct of an unresolved state of mind) raise important questions regarding the conceptualization of unresolved states of mind. A first question is whether current theory about unresolved states of mind is sufficient for understanding the meaning of these indicators. As noted by Duschinsky (2020), the concept of a “state of mind with respect to attachment” has remained underspecified in the literature, prompting discussion among attachment researchers about what it is that the AAI measures. A similar question may be asked about the concept of an unresolved state of mind. The theoretical definition of an unresolved state of mind has remained unclear, owing both to the different explanations that Main and colleagues have given of the psychological mechanisms behind the indicators (such as fear and dissociation, e.g., Main & Hesse, 1990; Main & Morgan, 1996) and to the relative lack of empirical scrutiny of these proposed mechanisms. So far, the concept of an unresolved state of mind can therefore only be defined by the presence of the indicators described in the coding manual, because these are the only directly observable expressions of an unresolved state of mind. As yet, there are no other, external indicators that can be used to define an unresolved state of mind, nor sufficient theory to predict what such indicators might be. Our findings go some way towards specifying the core indices for the construct of unresolved states of mind, at least as operationalized in current coding systems, which in turn might be the basis for renewed and refined theoretical work. Specifically, our findings may suggest that disbelief and psychologically confused statements about loss are core features of the construct of unresolved states of mind. Our findings also suggest that the indicators that were extremely rare in our sample are not relevant for how the unresolved state of mind construct operates in practice.

Another question raised by our findings is the nature of the relation between the indicators and the construct of an unresolved state of mind. An important consideration here is whether the measurement model underlying an unresolved state of mind should be regarded as reflective or formative. Following the framework suggested by Coltman et al. (2008), a reflective model would consider the construct of an unresolved state of mind to exist independent of the indicators, with the process defined by the construct causing the indicators to manifest themselves (i.e., an unresolved state of mind causes a person to show lapses in the monitoring of reasoning or discourse). A formative model would consider that the construct of an unresolved state of mind is formed by the lapses, with the presence of the lapses bringing the construct into being. It could also be that neither of these measurement models is appropriate, as reflective and formative models may not be categorically distinct (VanderWeele, 2020). Current theory about unresolved states of mind does not provide clues about whether a reflective or formative measurement model may underlie the unresolved state of mind construct. Some argue that it might be important to start with clear, precise definitions of a construct before proposing indicators that measure the construct and conducting empirical analyses to test these associations (VanderWeele, 2020). However, the construct of an unresolved state of mind was developed semi-inductively by Main and colleagues: features of interviews of parents of children classified as disorganized in the Strange Situation were identified inductively and then elaborated deductively (Duschinsky, 2020). The resulting group of indicators was then interpreted as a group named “unresolved state of mind.” This initial work was then enriched with valuable theoretical reflections, both by Main and colleagues (e.g., Main & Morgan, 1996) and by other developmental researchers (e.g., Fearon & Mansell, 2001). However, the conceptualization process based on mechanistic empirical work has stagnated for some decades, leaving the theory about unresolved states of mind still highly underspecified regarding (i) how attachment-related memories about loss and trauma are processed and (ii) how these psychological processes affect parenting behavior. Future work in this area may be advised to focus on improving the theoretical definition of an unresolved state of mind, by collecting observational and experimental data to test proposed mechanisms of unresolved states of mind and by clarifying underspecified aspects of the theory. In addition, further research is needed to assess whether a more parsimonious model of an unresolved state of mind (i.e., consisting of fewer indicators) is predictive of relevant outcomes beyond infant disorganized attachment (see Footnote 3), such as parenting behavior.

Strengths and Limitations