1. Introduction

Design thinking (DT), a human-centred approach to innovation (Brown 2009; Lockwood 2010), has been integrated into school education for its benefits, including its use as a teaching strategy, a curriculum redesign tool and a real-world problem-solving approach (Panke 2019). India's National Education Policy (2020) lists DT as one of the contemporary subjects that should be offered at appropriate school levels to help students develop various crucial abilities. Given recent calls for DT's inclusion in schools and the increased focus on its implementation, there is an opportunity for scholarly work to explore and understand new, emerging approaches to delivering DT effectively in educational settings.

Key questions for teaching DT to school students include what content should be covered and which delivery methods are most effective and cost-efficient. In addition, widespread implementation of DT courses must address challenges such as scalability, time constraints and the availability of resources for guidance and evaluation. Digital learning plays an important role in teaching school subjects effectively and has the potential to address these challenges. It integrates modern pedagogical tools and methods (Drijvers 2015) and enhances student experience (Alhabeeb & Rowley 2017) and engagement through interactive learning and direct teacher support (Abdulwahed et al. 2015; Harnegie 2015). Educators therefore need to shift from traditional, teacher-centred methods to interactive, ICT-facilitated instruction (Buabeng-Andoh 2012). Programmed instruction (PI) is a self-instructional strategy in which the learner works through many small learning frames, or pieces of information, presented in a logical sequence (Lee 2023). It is often integrated into digital learning platforms to deliver scalable and flexible educational content. Despite the growing use of PI in education, little research has explored its benefits in design education.

This study is part of a broader initiative to integrate DT into the K-12 curriculum in Indian schools through the development of courses, curricula and technologies tailored specifically for K-12 students (Bhatt & Chakrabarti 2022; Bhatt et al. 2021; Bhaumik et al. 2019; Bhatt et al. 2019). Within this initiative, the study aims to teach DT concepts to secondary school students while addressing key educational challenges such as accessibility, personalised learning, real-time assessment and resource efficiency. It focuses on developing PI to teach and evaluate DT concepts for secondary school students and measures the effect of PI on students' understanding of these concepts. The study also assesses the suitability of PI for students in different grades, to gain insight into their developmental readiness for PI and DT concepts. It examines the association between students' ability to learn DT concepts using PI and both their grade level and their overall academic performance in the conventional subjects taught in Indian schools. Finally, the study measures the combined effect of learning DT concepts through PI and following the DT process (DTP) on students' problem-finding and problem-solving skills. Data on individual performance on DT concepts, team performance in the DTP and student feedback were collected through a test, an outcome evaluation and a questionnaire, respectively. The results indicate that PI helps students learn the concepts that are prerequisites for performing the activities and documenting the outcomes effectively. The results also show that the DT concept content is appropriate for secondary school students in India.
Before discussing the development and evaluation of PI, we explain the need for nurturing DT in school education (Section 1.1), the characteristics of DT and their relevance to teaching (Section 1.2), the DT course (Section 2) and its pedagogical components (Section 2.1), and the challenges of implementing it in the classroom (Section 3).

1.1 Need for nurturing DT in school education

DT is a human-centred approach to problem finding and problem solving. It relies on a set of mindsets (Carlgren et al. 2016), skillsets and toolsets that help to solve real-life, open-ended and complex problems that are ill-structured, have no single solution, are affected by multiple factors and require interdisciplinary thinking (Simon 1973). The outcome can be an idea, product, service, system or policy. Because of its distinctive qualities (such as creativity, empathy, iteration, collaboration and divergent thinking) and advantages (such as enhanced innovation, value and profit), organisations have begun adopting DT. To prepare students for the workforce, the approach is used both in higher education (e.g., engineering and management programs) and in K-12 schools.

DT has demonstrated its effectiveness in higher education in multiple ways. For instance, it has been found to enhance individuals' creative thinking abilities (Lim & Han 2020) and facilitate transformative learning experiences (Taimur et al. 2022). Beyond higher education, nurturing a DT mindset from school onwards is receiving growing emphasis. Various studies suggest introducing DT at the secondary school level because of its positive effects. For instance, Freimane (2015) found that children could create innovative product concepts and comprehend the systems approach of DT. Furthermore, Aflatoony and Wakkary (2015) showed that students can effectively transfer DT skills to challenges beyond design courses. In addition to promoting skills in finding and solving open-ended, complex problems, DT offers several other affordances. It is a contemporary subject that can help enhance 21st-century skills such as collaboration, communication, metacognition and critical thinking (Rusmann & Ejsing-Duun 2022). It allows students to engage in activities whose instructions call for higher-level cognitive processes such as applying, analysing, evaluating and creating (Bhatt et al. 2021). Students can also learn about technology, society and culture. The design process is ideal for STEM content integration because it not only places all disciplines on an equal footing but also provides an opportunity to locate intersections and build connections among them (National Research Council 2012).
It creates opportunities to apply scientific knowledge and provides an authentic context for learning mathematical reasoning to make informed decisions during the design process (Kelley & Knowles 2016). It helps students think as designers and can help them deal with challenging situations and find solutions to complex problems in their studies, jobs and lives. Noel and Liu (2016) synthesised the existing literature and showed that DT principles can provide a solid foundation in children's education. This foundation benefits not only children who later seek to enter a design profession but also those who move into any other profession, and it will lead to higher engagement at school and greater success in life. Given these benefits, DT should be taught in schools as a strategy to bridge the gap between the current education system and future needs.

1.2 Characteristics of DT and its relevance to education

As with regular courses, implementing DT courses effectively requires the effective delivery of instruction and of assessment activities that measure learning effectiveness. However, learning DT involves more stakeholders than conventional courses, such as problem owners, mentors and experts, in addition to facilitators and students. Moreover, because of its distinctive characteristics, learning DT differs from learning routine subjects such as mathematics and science. Some of the elementary characteristics synthesised from the literature (Buchanan 1992; Owen 2005; Brown 2008), such as wickedness, human-centredness, creativity, collaboration and a culture of prototyping, are discussed below along with their implications for the educational experience:

• Wickedness: Instead of working on well-defined problems provided by the instructor or listed in textbooks, students discover and analyse real-life, open-ended problems that are highly ill-structured. This makes studying the habitat (comprising people at work or at play) one of the fundamental activities: students observe, experience and interact with the habitat to identify problems and set goals.

• Human-centricity: DT is a user-centred problem-solving approach. To understand users' needs, meet those needs by generating solutions and receive feedback on the various outcomes, a learner needs to interact with problem owners. As a result, users or problem owners become important stakeholders in the learning process.

• Creativity: Creativity is one of the fundamental characteristics of the approach. Learners get the opportunity to generate diverse and novel solutions. There is no single correct answer; instead, each solution is evaluated on its strengths and weaknesses.

• Collaboration: The DTP encompasses group activities and methods in which teams work together to understand the problem in depth and generate various ideas or concepts to solve it effectively. Teamwork, rather than working alone, is therefore required to complete a task.

• Culture of prototyping: Learners engage in hands-on activities such as making sketches, prototypes and mock-ups through which they can visualise, test and communicate ideas and concepts.

Because of its unique characteristics (such as teamwork, field study and hands-on activities) and the involvement of various stakeholders, teaching DT in a traditional school setting adds complexity and requires careful thought about how to teach it effectively within the existing grammar of schooling, that is, its regular structures and rules (Tyack & Tobin 1994). Implementing a DT course poses various challenges, such as defining goals and objectives, scheduling useful lecture units, providing course-compatible resources and IT infrastructure, managing team processes and didactic aspects, and defining the design challenge (Lugmayr et al. 2014). In addition, teachers need to be trained as facilitators, mentors or evaluators before they can support students effectively in these roles. If teachers teach students and monitor activities without prior experience, learning may be less effective. However, given their current responsibilities, teachers seldom have time to be trained in, and then train students in, such a contemporary subject. Furthermore, students are occupied with existing subjects, leaving little time to introduce a new course in the middle of the academic calendar. The time required to train students in DT depends greatly on the activities educators wish to teach and the level of DT expertise they want to foster. Often, accelerated approaches (such as 90-minute workshops, online training and boot camps) without effective instruction create a large number of people who are eager to embrace DT but unable to translate that eagerness into human-centred approaches (Schell 2018).
These challenges motivate us to develop a supportive environment, drawing on contemporary learning theories and technologies, in which a foundational DT course can be implemented successfully with fewer resources and less time. Given the unique characteristics of DT courses, introducing them successfully requires careful consideration of pedagogical elements (e.g., learning and assessment) first.

2. Context: IISC DTP

There are multiple models and approaches to teaching DT. Earlier, the authors developed a DT curriculum tailored to teach novice learners the fundamentals of DT, covering the four stages of a process abbreviated as IISC. IISC is a DT model consisting of four broad stages: Identify the problems; Ideate the solutions; Consolidate the solutions into tangible, testable, effective ones; and Select the best solution among many (Bhaumik et al. 2019). The four stages are explained below.

1. Identify: The activities of the Identify stage help to capture problems, identify needs and requirements from the gathered problems and decide which problems are important to solve.

2. Ideate: The activities of the Ideate stage help to generate various ideas to solve the identified problems and combine alternative ideas into concepts.

3. Consolidate: The activities of the Consolidate stage help to convert concepts into tangible, testable, effective and coherent solutions through modelling and prototyping.

4. Select: The activities of the Select stage involve revising the list of requirements, evaluating concepts against the revised requirements, combining individual evaluations into aggregated scores and comparing the aggregated scores to select the best solution.
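The Select stage combines individual evaluations into aggregated scores and compares them. One common way to do this is a weighted decision matrix, and the Python sketch below is a minimal illustration under our own assumptions, not the IISC scoring scheme. The requirement names, the importance weights, the 1–5 scoring scale and the rule of averaging team members' scores before taking a weighted sum are all hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical importance weights for the (revised) requirements.
requirement_weights: Dict[str, int] = {"low cost": 3, "easy to use": 5, "durable": 2}

# Hypothetical 1-5 scores given by each team member to each concept, per requirement.
evaluations: Dict[str, List[Dict[str, int]]] = {
    "Concept A": [
        {"low cost": 4, "easy to use": 3, "durable": 5},
        {"low cost": 5, "easy to use": 2, "durable": 4},
    ],
    "Concept B": [
        {"low cost": 3, "easy to use": 5, "durable": 3},
        {"low cost": 2, "easy to use": 4, "durable": 4},
    ],
}


def aggregate_score(member_scores: List[Dict[str, int]], weights: Dict[str, int]) -> float:
    """Average each requirement's score across evaluators, then take the weighted sum."""
    total = 0.0
    for requirement, weight in weights.items():
        average = mean(scores[requirement] for scores in member_scores)
        total += weight * average
    return total


def select_best(
    evaluations: Dict[str, List[Dict[str, int]]], weights: Dict[str, int]
) -> Tuple[str, Dict[str, float]]:
    """Aggregate every concept's evaluations and return the highest-scoring concept."""
    aggregated = {
        concept: aggregate_score(scores, weights) for concept, scores in evaluations.items()
    }
    best = max(aggregated, key=aggregated.get)
    return best, aggregated


if __name__ == "__main__":
    best, aggregated = select_best(evaluations, requirement_weights)
    print(aggregated)  # {'Concept A': 35.0, 'Concept B': 37.0}
    print("Selected:", best)  # Selected: Concept B
```

Revising the requirements corresponds to editing the weights dictionary. With these illustrative numbers, Concept B is selected because it scores well on the most heavily weighted requirement.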

The four broad stages of the IISC DTP are further divided into various activities. Each activity includes explanations, concrete examples, step-by-step instructions and the desired outcomes expected at the end of the activity. The instructions are designed to be easy to follow for school students with little or no prior experience. The content of the activities is provided either in digital form (a web-based tool that can be hosted on a server and accessed using a PC or laptop) or in physical form (where digital resources are limited). To complete all four stages of the IISC model, learners work in teams through various activities and document the outcomes (such as problems, needs, ideas and solutions) in multiple forms (such as text, sketches, cardboard models and posters).

2.1 DT concepts and learning framework

In previous work, Bhatt and Chakrabarti (2022) introduced an essential stage in the learning process: the development and evaluation of concepts and terms to build learners' conceptual understanding before they perform activities and document outcomes.

‘Concept’ is an abstract idea or general notion used to specify the features, attributes or characteristics of a phenomenon in the real or phenomenological world that it is meant to represent, and that distinguishes the phenomenon from other related phenomena (Podsakoff et al. 2016). According to the Merriam-Webster dictionary, a ‘term’ is a word or expression that has a precise meaning in some uses or is peculiar to a science, art, profession or subject. Each discipline has a collection of common concepts, terms and glossaries that people in the field use to communicate, classify, abstract and generalise effectively. As in other domains, design scholars have identified design and DT concepts, terms and glossaries as important elements in research, practice and teaching. For example, Chakrabarti et al. (1995) explained the importance of a glossary (i.e., to foster unambiguous communication, develop and test theories and aid efficient querying) and developed a framework for a glossary of design terms for the research community. Razzouk and Shute (2012) created a DT competency model, a hierarchically arrayed set of variables for assessment and diagnostic purposes in education, in which understanding terms was one of three fundamental variables. Likewise, Ingle (2013) identified the 35 most common terms and provided their definitions to help entrepreneurs and small-business owners apply DT.

In more recent work, Bhatt and Chakrabarti (2022) found that concept evaluation and understanding improve learners' performance in carrying out activities and documenting outcomes. The learning process therefore follows a sequence of steps in which each step serves as the foundation for the next: learning activity-related concepts, understanding the instructions of activities, performing those activities and documenting the outcomes produced at the end of each activity. Each step is also associated with evaluation and feedback because, in addition to facilitating learning, learners must be placed in situations where their understanding can be tested and verified before they move on to the next step. Figure 1 shows the overall learning process. The dotted arrows indicate that if a learner's performance in a particular step is weak, they may need to go back and review earlier steps.

Figure 1. Overview of design thinking learning process framework.
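The sequence in Figure 1 can be read as a simple loop: each step is evaluated, a satisfactory result advances the learner and a weak result sends them back for review. The Python sketch below illustrates only that reading. The pass mark of 0.6, stepping back exactly one step, the attempt cap and the hypothetical demo evaluator are assumptions, not parameters of the framework.

```python
from typing import Callable, List, Tuple

# The four steps of the learning process, in order.
STEPS: List[str] = [
    "learn concepts",
    "understand instructions",
    "perform activities",
    "document outcomes",
]


def run_learning_process(
    evaluate: Callable[[str], float],
    pass_mark: float = 0.6,
    max_attempts: int = 20,
) -> Tuple[List[str], bool]:
    """Move through STEPS in order, evaluating each one.

    A score at or above pass_mark advances the learner; a weaker score sends the
    learner back one step for review (one reading of the dotted arrows).
    pass_mark and max_attempts are illustrative assumptions.
    Returns the evaluation log and whether all steps were completed.
    """
    log: List[str] = []
    index = 0
    for _ in range(max_attempts):
        if index == len(STEPS):
            break
        step = STEPS[index]
        score = evaluate(step)
        log.append(f"{step}: {score:.2f}")
        if score >= pass_mark:
            index += 1
        elif index > 0:
            index -= 1  # review the previous step before retrying this one
        # a weak score on the first step simply repeats that step
    return log, index == len(STEPS)


def make_demo_evaluator() -> Callable[[str], float]:
    """Hypothetical learner who struggles once with 'perform activities'."""
    struggled = set()

    def evaluate(step: str) -> float:
        if step == "perform activities" and step not in struggled:
            struggled.add(step)
            return 0.4
        return 0.8

    return evaluate


if __name__ == "__main__":
    log, completed = run_learning_process(make_demo_evaluator())
    print("\n".join(log))
    print("Completed:", completed)
```

In the demo, the weak score on "perform activities" sends the learner back to "understand instructions" once before the remaining steps are completed.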

2.2 IISC DT workshops

As part of an ongoing research project to teach DT courses in schools, the authors have been conducting 3–5-day workshops/boot camps, depending on the availability of time and resources, in which the IISC DT model is used to train students in DT skills and mindset. Since most workshops take place in the school, habitats within the premises are used as study sites. Typical school habitats include classrooms, staffrooms, laboratories, libraries, playgrounds, security cabins, canteens, parking areas and washrooms. These habitats are sources of real-life, open-ended problems and allow students to conduct field studies, spend time observing habitats and interact with them while remaining close to the school. The workshops resemble a hackathon: they not only provide guidance and support for the design process, steering participants towards successful design and learning outcomes, but also include tasks such as team member introductions, problem identification and preparation for the solution pitch and demo (Flus & Hurst 2021). The roles of the various stakeholders in the workshop context are as follows:

1. The instructor introduces the subject and teaches learners by demonstrating specific activities such as grouping problems, selecting critical problems, generating ideas and combining ideas into concepts.

2. Students understand the instructions given by the instructor or an agent (in digital or physical form), perform the activities and document the outcomes.

3. Mentors engage in formative assessment. A mentor (assigned to each team) ensures that the team correctly understands instructions, performs activities and documents outcomes. In addition, the mentor tells the instructor where students are struggling, which helps to improve the instructions.

4. Habitat users or problem owners interact with students and communicate their problems or needs. They also take part in feedback and formative assessment activities such as requirement or solution evaluation.

5. Experts engage in summative assessment. At the end, experts evaluate the final outcomes prepared by each team, score the team's performance and provide feedback to students, mentors and instructors.

In previous DT workshops, each team following the DTP was expected to engage in various design activities. Each activity was accompanied by concepts to be grasped and instructions to be followed, both embodied in activity cards. However, there was no mechanism to assess students' comprehension of DT concepts before they executed the activity instructions, which resulted in inadequate understanding and execution of certain activities. Recognising this limitation, Bhatt and Chakrabarti (2022) implemented a mechanism to evaluate students' comprehension of concepts and terms before they engaged in activities. However, these evaluations were conducted at the team level, so each team's responses reflected the collective understanding of all its members; individual student performance was not evaluated (Bhatt et al. 2019). This inspired us to develop a system that evaluates each student's understanding of DT concepts individually. Manual individual assessment, however, can place a heavy burden on mentors and consume valuable classroom time. This constraint motivated us to devise a mechanism that supports both the learning and the assessment of DT concepts at the individual level. Section 3 examines the scalability challenges in assessing DT courses, and Section 4 elaborates on PI and its potential to address these challenges.

3. Scalability issues of assessment in DT courses

Assessment is a crucial aspect of DT education because it helps students by providing feedback and preparing them to deal with future challenges. It also helps educators and researchers measure learning outcomes and gain insight into areas for improvement. One way to assess a student's performance in DT is to evaluate the design outcomes, that is, the results of the work students do while completing design activities. Multiple rubrics have been proposed to support outcome evaluation. For instance, to assess the outcomes of student design projects, Elizondo et al. (2010) developed innovation assessment metrics that include differentiability, creativity, need satisfaction and probability of adoption. Another way is to use exams, interviews and similar methods to measure gained knowledge or skillsets, or changes in mindsets. For instance, to evaluate high school students' DT skills, such as human-centredness, problem-solving and collaboration, Aflatoony et al. (2018) gathered data using participant observation, pre- and post-activity questions and document analysis. Similarly, Ladachart et al. (2022) measured ninth-grade students' perceptions of the DT mindset before and after a project, covering aspects such as being comfortable with uncertainty and risk, human-centredness, mindfulness of the process and its impacts on others, working collaboratively with diversity, orientation to learning by making and testing, and confidence and optimism about using creativity.
In other studies, researchers used self-assessment, peer assessment and expert assessment to assess university students' problem-solving and creativity skills (Guaman-Quintanilla et al. 2022).

However, when it comes to scaling up DT education, conventional forms of design assessment may not cope with time and resource constraints, so efficient and scalable assessment techniques are needed. Recent studies have attempted to reduce dependence on teachers or experts by providing scalable assessment solutions. For instance, to reduce the assessment burden, Arlitt et al. (2019) developed a computational approach to evaluate students' DT competencies and mindset. They analysed text data using feature engineering, a machine learning technique, to compare students' responses to design methodology questions before and after taking a DT course called Design Odyssey. The results indicated that identifying text features can enable scalable measurement of user-centric language and DT concept acquisition. In another study, to assess conceptual design problems in less time, Khan et al. (2020) developed a framework of open-ended questions that prompt students to express their design concepts and supporting rationale using text and sketches. The researchers validated this framework in a design program at a secondary school.

All the above-mentioned studies (i.e., Ladachart et al. 2022; Khan et al. 2020; Arlitt et al. 2019) focus on assessing outcomes, perceptions and understanding of DT after students have completed DT workshops. Although post-workshop assessment is essential for measuring the effectiveness of workshops, techniques such as rubrics and Likert scales do not provide real-time assessment, which can track students' understanding and how they engage with and internalise DT concepts during the learning process. There is a lack of research on continuous evaluation during learning to identify areas of struggle, misconceptions or growth in students' understanding of DT concepts.

In conventional teaching, concepts are generally taught through lectures and reading materials and tested through assignments or tests. For teaching DT courses in existing school settings (as discussed in the previous section), these conventional approaches may not be the best way to teach and evaluate individual learning, for several reasons. First, restricted time and large numbers of students make it difficult for teachers to cover all topics and give feedback on each student's response. In particular, interviews with teachers revealed several potential challenges in involving school teachers when integrating DT courses into the Indian school education system. Teachers expressed valid concerns about their current academic duties, which often result in heavy workloads and leave limited time for training and mentoring in new courses such as DT. Second, standardised teaching may suit only some learners' learning styles (Felder & Spurlin 2005) and may not accommodate the different learning paces of individual students. Finally, passive instruction with limited interaction may lead to poor learner engagement and attention, and it offers each learner no opportunity for immediate feedback and reflection.

Although a number of studies have examined elements of pedagogy and developed assessment schemes to test various skillsets, mindsets and outcomes, little attention has been paid to teaching and assessing DT concepts, a fundamental element of pedagogy, for novice school students. Moreover, it is crucial to determine how to teach and assess DT concepts effectively within the existing constraints of schools, such as limited classroom time and resources, the need for individual-level assessment and scalability. This discussion justifies the need for support that teaches and evaluates DT concepts at the individual level, in a scalable manner and with minimal dependence on teachers. This need led to the development of an efficient and scalable method for teaching and assessing DT concepts.

4. Programmed instructions

Instructional design is one of the foundations for developing e-learning courses, as it guides the process of analysing learners' needs, defining learning objectives, designing instructional strategies, developing instructional materials and evaluating the effectiveness of the learning experience. Goodyear and Retalis (2010) defined instructional design as a ‘rational, technical enterprise, concerned with optimising learning and instruction through the application of objective scientific principles’. Instructional design is useful because it uses systematic design procedures that make instruction more effective and efficient (Gustafson & Tillman 1991). PI is a highly structured sequence of instructional units that gives the learner frequent opportunities to respond to problems or questions, typically accompanied by immediate feedback (Bullock 1978). It introduces new content through a graduated progression of guided steps and corresponding exercises in the form of multiple-choice questions, with feedback given when the learner selects an answer. Because of important advantages such as being self-administered and self-paced and offering active engagement and immediate feedback (Lysaught & Williams 1963), elements of PI are now used by most computer-based teaching programs (e.g., brilliant.org). As noted by Jaehnig and Miller (2007), the characteristics of PI have changed over time: modern PI typically uses larger steps than the small steps of conventional PI and relies on multiple-choice questions (MCQs) because they are easy to program. The primary components of PI are still included in computer-based instruction, which is commonly used in both educational and business training settings (Jaehnig & Miller 2007).
With the help of PI, learners can engage with new learning material with little or no assistance. The literature also underscores the effectiveness of PI, highlighting its impact on learning outcomes and performance across diverse pedagogical contexts. For example, Anger et al. (2001) developed PI to teach essential health and safety skills and information to a broad range of workers cost-effectively. They demonstrated that PI is effective in providing personalised and adaptive learning experiences, resulting in substantial and significant gains in knowledge and performance. In another study, Olaniyi & Hassan (2019) compared PI with conventional teaching methods for secondary school students' achievement in physics and found that students taught with PI significantly outperformed those taught through the conventional method alone. In contrast to standardised teaching and passive instruction, PI lets learners set their own pace. Furthermore, PI incorporates opportunities for immediate feedback and reflection, allowing learners to monitor their progress and identify areas for improvement in real time.
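To make the mechanics of PI concrete, the Python sketch below presents a short sequence of frames, each followed by an MCQ, and gives feedback immediately after every response. It is a hypothetical illustration, not the authors' PI: the `Frame` structure, the two-attempt rule, the feedback wording and the scripted learner are assumptions, and the example frames simply restate the IISC stages described earlier.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Frame:
    """One small PI frame: a piece of content followed by a multiple-choice question."""
    content: str
    question: str
    options: List[str]
    answer: int  # index of the correct option


def run_programmed_instruction(
    frames: List[Frame],
    respond: Callable[[Frame], int],
    max_tries: int = 2,
) -> Tuple[int, List[str]]:
    """Present frames in sequence with immediate feedback after every response.

    The learner moves to the next frame after a correct answer or after max_tries
    attempts (an illustrative choice). Returns the number of frames answered
    correctly on the first try and the feedback shown.
    """
    first_try_correct = 0
    feedback: List[str] = []
    for frame in frames:
        # In a real interface, frame.content and frame.question would be displayed here.
        for attempt in range(max_tries):
            if respond(frame) == frame.answer:
                feedback.append("Correct.")
                if attempt == 0:
                    first_try_correct += 1
                break
            if attempt < max_tries - 1:
                feedback.append("Not quite: review the frame and try again.")
        else:
            # No correct answer within max_tries: reveal it before moving on.
            feedback.append(f"The correct answer is: {frame.options[frame.answer]}")
    return first_try_correct, feedback


def scripted_learner(choices: List[int]) -> Callable[[Frame], int]:
    """Hypothetical learner who answers with a fixed sequence of option indices."""
    queue = list(choices)
    return lambda frame: queue.pop(0)


FRAMES = [
    Frame(
        content="The IISC model has four stages: Identify, Ideate, Consolidate and Select.",
        question="Which stage comes right after Identify?",
        options=["Select", "Ideate", "Consolidate"],
        answer=1,
    ),
    Frame(
        content="In Select, concepts are evaluated against revised requirements and scores are compared.",
        question="In which stage are aggregated scores compared to choose the best solution?",
        options=["Ideate", "Consolidate", "Select"],
        answer=2,
    ),
]

if __name__ == "__main__":
    score, shown = run_programmed_instruction(FRAMES, scripted_learner([0, 1, 2]))
    print(score, shown)
```

Recording first-try correctness alongside the feedback is one way such a sequence could double as an individual-level assessment, which is the gap the preceding sections identify.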

The defining characteristic of PI, the use of sequenced frames for learning, offers significant advantages for teaching DT, because not all DT concepts need to be introduced at the outset. For instance, in the initial stages of the process, raw user statements are progressively transformed into need statements, requirements, functions, ideas and concepts. Relevant concepts can therefore be introduced incrementally, aligned with each specific step of the process. PI is thus a methodologically appropriate approach for teaching DT concepts in a structured way.
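The incremental introduction of concepts can be sketched as a just-in-time schedule: walking through the activities in process order, each concept is introduced at the first activity that needs it and is not repeated later. In the Python sketch below, the chain of terms follows the text, but the activity names and the activity-to-concept mapping are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical mapping from activities (in process order) to the concepts each relies on.
# The terms follow the chain described in the text; the activity names are illustrative.
ACTIVITY_CONCEPTS: Dict[str, List[str]] = {
    "capture user statements": ["user statement"],
    "derive needs": ["user statement", "need statement"],
    "list requirements": ["need statement", "requirement"],
    "define functions": ["requirement", "function"],
    "generate ideas": ["function", "idea"],
    "combine ideas into concepts": ["idea", "concept"],
}


def incremental_schedule(
    activity_concepts: Dict[str, List[str]],
) -> List[Tuple[str, List[str]]]:
    """For each activity, list only the concepts that were not introduced earlier."""
    introduced: Set[str] = set()
    schedule: List[Tuple[str, List[str]]] = []
    # Dicts preserve insertion order (Python 3.7+), so this follows the process order.
    for activity, concepts in activity_concepts.items():
        new_concepts = [c for c in concepts if c not in introduced]
        introduced.update(new_concepts)
        schedule.append((activity, new_concepts))
    return schedule


if __name__ == "__main__":
    for activity, new_concepts in incremental_schedule(ACTIVITY_CONCEPTS):
        print(f"{activity}: introduce {new_concepts}")
```

Each activity then needs frames only for its newly introduced concepts, while concepts met earlier can simply be revisited.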

The authors developed a set of PI, implemented as an e-learning tool that uses digital technologies to deliver educational content. The approach aims to make better use of time, reduce workload at school and improve students’ understanding of the content, leaving school time available for hands-on activities and field study. The following section explains how the PI was developed.

4.1 Development of PI

The PI was developed to support the first step of the IISC DT learning process framework (Figure 1), which involves learning and evaluating DT concepts, terminology and glossary. In this study, the PI was developed using the following commonly used and empirically validated instructional design frameworks: 1. the ADDIE model: Analyse, Design, Develop, Implement, Evaluate (Branch Reference Branch2009); 2. the general instructional design model: Identify needs and goals, Organise the course, Write objectives, Prepare assessments, Analyse objectives, Design strategy of instructions, Design modules and Conduct evaluation (Gustafson & Tillman Reference Gustafson, Tillman, Briggs, Gustafson and Tillman1991); and 3. the PI developmental guidelines: Specification of Content and Objectives, Learner Analysis, Sequencing of Content, Frame Composition, Evaluation and Revision (Lockee et al. Reference Lockee, Moore and Burton2013). The overall development process was synthesised from the key components of each framework. It includes the following steps: analysing needs, creating learning objectives, collecting knowledge, composing the structure and sequence of the content, and evaluating and revising the content. The process is depicted in Figure 2 and explained below. The PI development guidelines (Lockee et al. Reference Lockee, Moore and Burton2013) were particularly useful for formulating learning objectives, designing modules and submodules, sequencing the submodules and planning the evaluation process.

Figure 2. Development process of programmed instructions (PI), adapted from ADDIE models (Branch Reference Branch2009), general instructional design model (Gustafson & Tillman Reference Gustafson, Tillman, Briggs, Gustafson and Tillman1991) and PI developmental guidelines (Lockee et al. Reference Lockee, Moore and Burton2013).

First, the DT activity instructions were analysed to identify the concepts and terms students needed to learn before engaging in the activities. In addition, data collected from the teams’ workbooks in the previous three workshops revealed areas where students’ comprehension was lacking (Bhatt & Chakrabarti Reference Bhatt and Chakrabarti2022). The learning objectives were derived from the identified concepts and terms. The content needed to fulfil these objectives was drawn from two sources: 1. design, innovation and DT textbooks, reference books and research publications and 2. the Creative Engineering DT course offered by the Department of Design and Manufacturing, where the authors are currently based. The objectives and content were then organised into modules, sequenced so that progression through the DT concepts mirrors progression through the DTP. Each module contains submodules related to its main theme. Each submodule focuses on a specific topic and explains its concepts through their definitions and meanings, their relationships with other concepts and examples. In developing the instructions, the following multimedia design principles of Mayer (Reference Mayer2009), along with their theoretical and empirical rationale, were adopted.

1. Multimedia principle: Students learn better from words and pictures than words alone.

2. Spatial contiguity principle: Students learn better when corresponding words and pictures are presented near rather than far from each other on the page or screen.

3. Temporal contiguity principle: Students learn better when corresponding words and pictures are presented simultaneously rather than successively.

4. Coherence principle: Students learn better when extraneous material is excluded rather than included.

Most learning content was supported by icons, diagrams or flow charts, and pictures and text were presented together, simultaneously, on the screen. The content was written so that learners would find the narration engaging, simple and clear. Each submodule provides an optimal chunk of information, and new information always begins in a new submodule. Since the PI was developed for school students, the examples used to illustrate the concepts were taken from children’s everyday experiences, such as at school, at home and on the playground, to make them easier to relate to. The current version contains 16 modules and covers more than 50 concepts. All concepts, terms and glossary entries are associated with instructions for process activities and are intended to help learners perform those activities. The modules and their objectives, phrased as questions, are provided in Table 1.

Table 1. Information about the design thinking modules and submodules

Some submodules present concepts followed by an assessment of those concepts in the form of MCQs with immediate feedback (verification) that tells the learner whether the answer was right or wrong. Not all modules contain questions: questions are given only for concepts identified as critical to evaluate because they would have significant consequences for the DTP activities if not learned correctly. While developing the questions, care was taken to ensure constructive alignment (Biggs Reference Biggs2012) with the content and learning objectives. In addition, to ensure that the questions measure the transfer of learning, they are set in contexts different from the examples provided during instruction. Answering them therefore requires a deep understanding of the concepts rather than merely locating information in the content. The PI aims to help learners build an understanding of DT concepts, which will eventually help them perform the DTP activities in the classroom. The PI of the DTC was developed as a front-end web tool using HTML, CSS and JavaScript. Figure 3 shows the tool’s UI, in which the left, middle and right columns show the list of modules, the content of the submodules and a progress bar, respectively. Each submodule contains new concepts and/or assessments related to the previously taught concept. The example page in Figure 3 displays content from Module 1, Submodule 5, together with its associated MCQ. The written content is enhanced with corresponding icons (multimedia principle), placed alongside the relevant text (spatial contiguity principle). Both the text and icons are also displayed simultaneously (temporal contiguity principle).
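The module, submodule and question structure described above can be sketched as a small data model. The actual tool was written in HTML, CSS and JavaScript; the Python below is only a language-neutral illustration, and the class names (`MCQ`, `Submodule`, `Module`), the helpers `verify` and `progress` and the `feedback_enabled` flag are hypothetical, not taken from the tool. It shows how a submodule may or may not carry a question, how immediate right/wrong verification could work, and how a completion fraction for the progress bar might be derived.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MCQ:
    """A multiple-choice question with one correct option (hypothetical model)."""
    prompt: str
    choices: list[str]
    correct_index: int

    def is_correct(self, selected_index: int) -> bool:
        return selected_index == self.correct_index


@dataclass
class Submodule:
    """One frame of content; only concepts judged critical carry a question."""
    title: str
    content: str
    question: Optional[MCQ] = None


@dataclass
class Module:
    title: str
    submodules: list[Submodule] = field(default_factory=list)


def verify(submodule: Submodule, selected_index: int,
           feedback_enabled: bool = True) -> Optional[str]:
    """Immediate right/wrong verification for a submodule's MCQ.

    Returns None when the submodule has no question or when feedback is
    switched off (as during the study, where answers went on OMR sheets).
    """
    if submodule.question is None or not feedback_enabled:
        return None
    return "Correct" if submodule.question.is_correct(selected_index) else "Incorrect"


def progress(modules: list[Module], completed: set[tuple[int, int]]) -> float:
    """Fraction of submodules completed, e.g. to drive a progress bar.

    `completed` holds (module_index, submodule_index) pairs; pairs that do not
    correspond to an existing submodule are ignored.
    """
    all_pairs = [(mi, si) for mi, m in enumerate(modules)
                 for si in range(len(m.submodules))]
    if not all_pairs:
        return 0.0
    return sum(pair in completed for pair in all_pairs) / len(all_pairs)
```

Keeping the question optional on each submodule mirrors the design choice that only critical concepts are assessed.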

Figure 3. User interface of design thinking concepts programmed instructions (PI). The exemplary page displays content related to Module 1 and Submodule 5, accompanied by associated multiple-choice question (MCQ).

Once the PI was developed, it was reviewed by five independent design researchers who had taken the department’s design course and are currently pursuing a PhD in design theory and/or design education. In addition, two novice learners (non-designers) used the PI to learn the concepts, and their comments were recorded. Based on the feedback from the researchers and learners, some instructions were revised for clarity and simplicity.

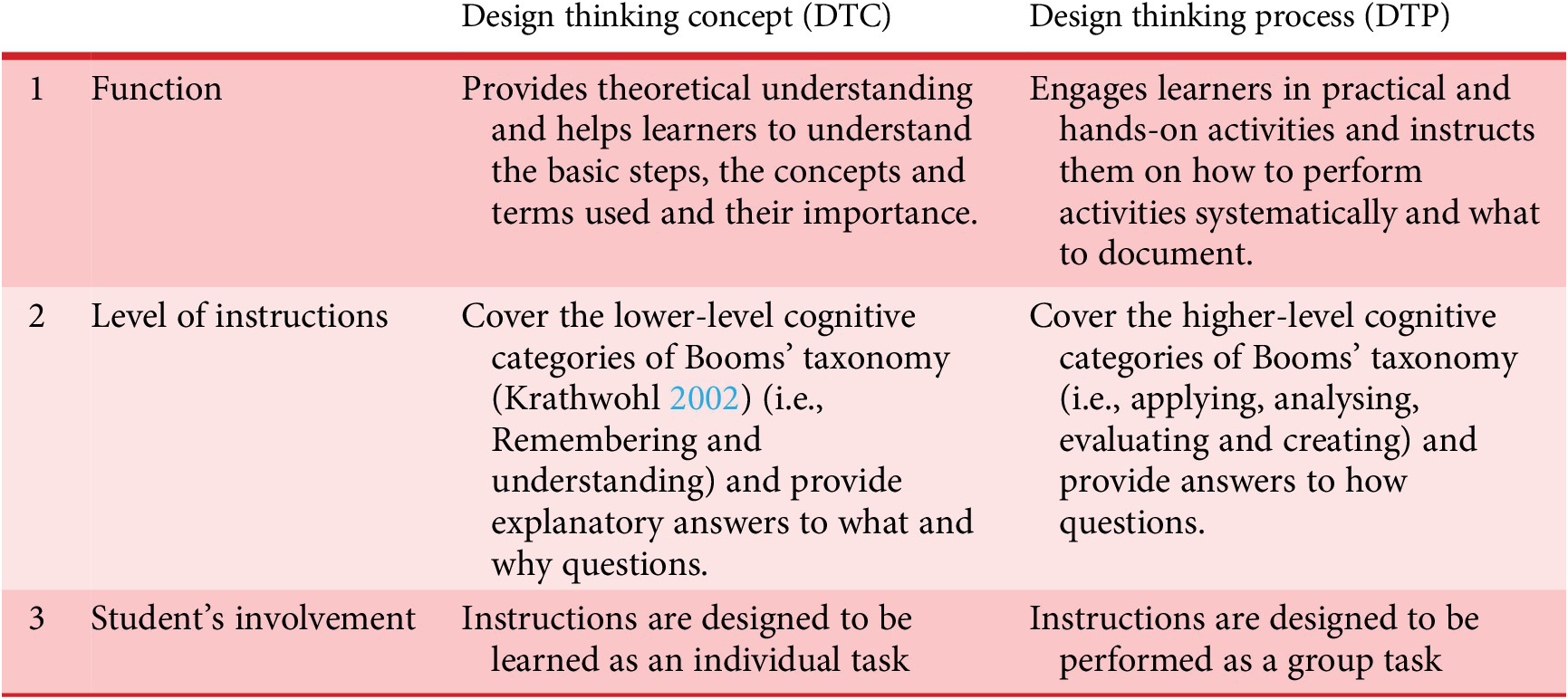

The DT concepts PI is an online tool hosted on the department website. Its features are compatible with contemporary learning principles. First, the web tool enables a flipped classroom in which students use the tool to learn the set of modules individually at home or at school (i.e., distributed learning) and come to class prepared with DT concepts to engage in the group activities of the DTP. This helps to use classroom time effectively. Second, learners can complete the modules at their convenience and progress at their own pace (i.e., asynchronous learning), which may enhance learning compared with synchronous learning in limited classroom time. Third, the questions immediately test the learner’s understanding of each concept and inform the learner whether the response is correct, creating an active learning environment. Table 2 outlines the attributes of the two essential stages in learning DT: 1. use of PI for teaching and evaluating DT concepts and 2. application of the knowledge gained from DT concepts through practical classroom activities, encompassing comprehension of activity instructions, execution of tasks and documentation of outcomes, aimed at cultivating student proficiency in the DTP. For example, module 7 of the PI, along with its submodules, teaches and evaluates how to convert problem statements, user preferences and proposed improvements into need statements. Students learn this concept individually. During the team-based DTP activities, they are then instructed to convert each problem statement into a need statement, applying their understanding to perform the activity and document the outcomes. Table 2 also compares the instructions for DT concepts and for the process in terms of their purposes, types and student involvement.

Table 2. Comparison of design thinking concepts and process

5. Testing of PI

By applying PI as part of the learning process, the authors wanted to examine (i) whether students can successfully learn DT concepts and terms with the help of PI, (ii) how students’ grade level and overall academic performance affect their ability to understand the concepts and (iii) how learning concepts through PI helps students improve their problem-finding and solving skills. To assess the effectiveness of the developed PI against these three research objectives, the following research questions were posed: 1. What is the effectiveness of PI in understanding DT concepts in school students? 2. What is the association between school students’ ability to learn DT concepts using PI, students’ grade level as well as their ability to learn conventional school subjects? 3. What is the combined effect of learning DT concepts using PI and DTP on students’ problem-finding and solving skills?

5.1 Experimental setup

To understand the effectiveness of PI and answer the research questions, a workshop was carried out with school students from grades 6 to 9. A total of 33 students (nine from grade 6 and eight each from grades 7, 8 and 9), selected by the school authorities, participated in the workshop. For the process activities, the students were divided into eight teams (T1–T8), two from each grade, with four or five students per team. Students were assigned to teams randomly. The workshop ran for 3 days, 6 hours per day. In the first session on day 1, students were introduced to the concept of DT and its significance in real-life scenarios and education, and were briefed on the activities planned for the 3 days. They were also told that the workshop would involve a group competition, with the winning team determined by the highest score at the end of the workshop.

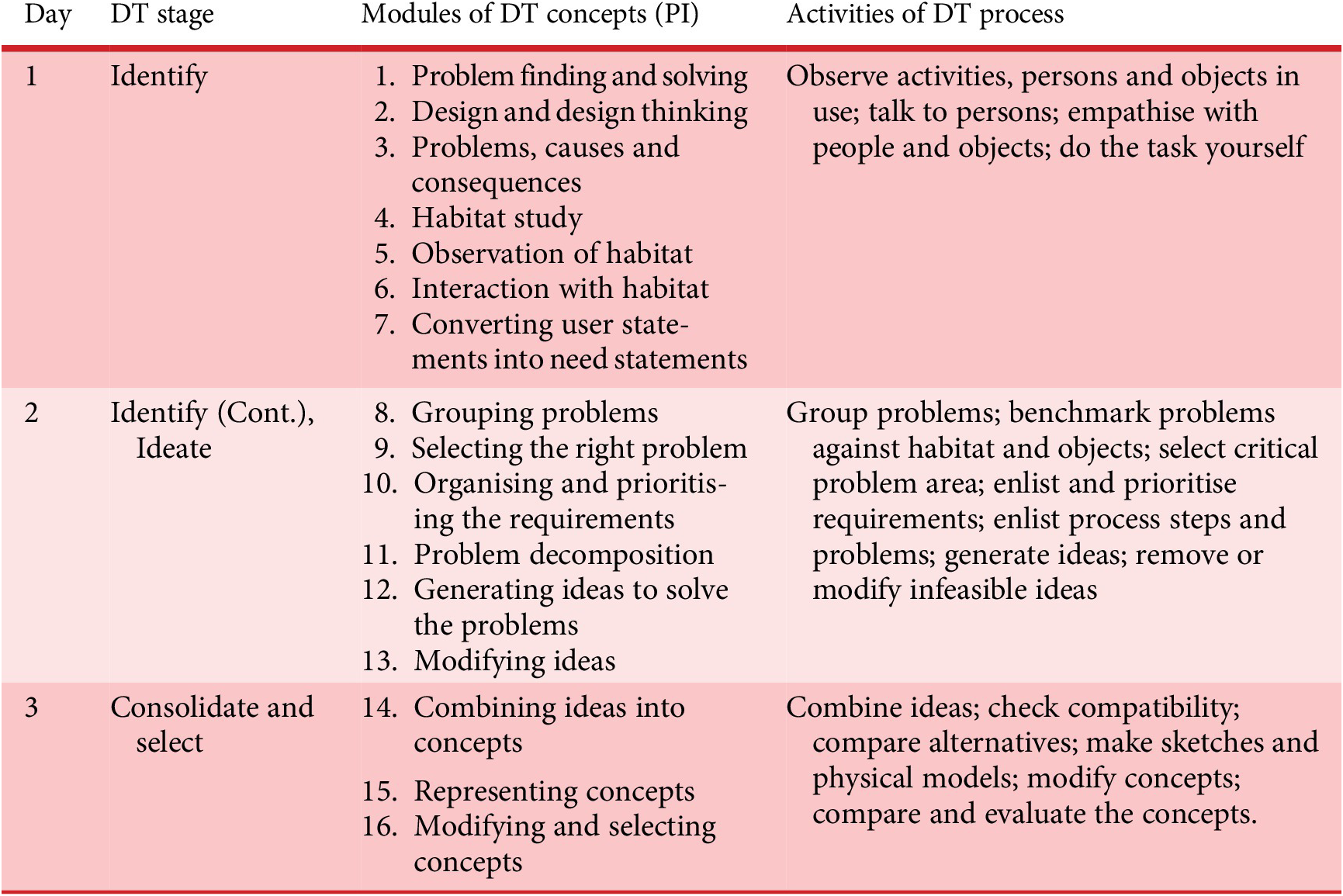

The process activities were split uniformly across the 3 days: on day 1, teams identified problems in the school habitats; on day 2, teams selected critical problems and generated ideas; and on day 3, teams created concepts, sketches and prototypes. Each day began with an individual task of learning concepts through the PI before moving on to group tasks. Students were required to complete only the concepts needed to carry out that day’s process activities.

The PI was hosted online on the department website. The instructions were designed for a flipped classroom, in which students learn the set of modules at home (distributed learning), progress at their own pace (asynchronous learning) and come to class prepared with the concepts to engage in the group activities of the process. However, since one objective was to measure the effect of PI on students’ understanding, the researchers decided to assess learning in a controlled environment in which all students were under the continuous observation of teachers and mentors. For the PI sessions, two identical computer labs with internet access were used, and each student had an individual lab computer to access the PI. All students were asked to read the content in the PI, answer the questions and complete the given set of modules each day. Detailed information about the modules and question themes is given in Table A1 of the appendix. As the current software version cannot store question responses for later evaluation, each student was given an optical mark recognition (OMR) sheet to record their MCQ responses. Since the researchers were interested in measuring students’ understanding of DT concepts, the feedback function was disabled; the aim was to assess the effectiveness of the content by determining whether students understood the concepts on their first attempt. As a result, students did not receive the pre-programmed question feedback, which affected their learning experience, but in the context of this study it allowed student performance to be evaluated with the current software iteration. Students were allowed to complete the DTC modules at their own pace. On each of the first 2 days, students spent an average of 40 minutes completing modules 1–7 (day 1) and 8–13 (day 2). On the last day, students spent an average of 20 minutes completing modules 14–16.

After completing the required concepts, students moved to the school’s audiovisual (AV) room to carry out the design activities for that day. The AV room was equipped with a blackboard, an audio and speaker system, and tables and chairs for each team and mentor. The mentors were doctoral students working in various sub-areas of design who were familiar with DT; all had attended a course on DT during their coursework. The activities were provided in digital form and accessed on a laptop given to each team. To complete all four stages of the IISC model, students in each team performed the activities and documented the outcomes (such as problems, needs, ideas and concepts) in various forms (such as text, sketches, cardboard models and posters). Activities such as observing, interacting with users and benchmarking were done in various school habitats, whereas activities such as clustering problems, idea generation, concept generation, sketching and prototyping were done in the AV room. Students were given the stationery needed for these activities (e.g., clustering, ordering, ranking, sketching and prototyping) and workbook templates for documentation. Students also had access to the PI during the design activities so that they could check it for answers to concept-related queries. In addition, when students made mistakes in performing activities or documenting outcomes, mentors pointed out which concepts they should refer to in order to correct the error. Thus, the PI acted as a reference at various learning steps and provided feedback to the students. Appropriate caution was exercised during the experiment to mitigate the effects of confounding variables. During the DTP, mentors were not supposed to answer students’ queries directly; they were trained and instructed to guide students to revisit the PI modules if they had doubts or did not understand or perform activities correctly. Detailed information on the day-wise breakdown of the DT concepts modules and DTP activities is given in Table 3.

Table 3. Day-wise division of DT concepts module and process activities

The one-shot case study design (one-group pre-experimental design) is illustrated in Table 4.

Table 4. One-shot case study design

5.2 Methodology for analysis

To address the first research question (the effectiveness of PI), the authors examined each student’s performance on the test questions provided in the PI at various modules of the DT concepts. Students answered 20 questions while learning concepts with the PI, and the answers recorded on the OMR sheets were evaluated. Each question carried one mark, with no negative marking for wrong answers. Nine of the 20 MCQs had four choices, five had three choices and six had two choices. To statistically determine a passing score and test whether the students’ average score exceeded it, the questions were grouped into three clusters by number of choices, and each cluster was analysed separately using a z-test. (Note that the clusters are not based on the day-wise questions but on MCQs with the same number of choices: 2, 3 or 4.) Since the number of correct answers to MCQs follows a binomial distribution, the population mean of each cluster was taken as its passing score. This population mean is the average score a student with no knowledge of the concepts would obtain by guessing on every question. Table 5 shows the question clusters along with their population means (passing scores). The total score was calculated by aggregating individual scores and normalising them to a scale of 100. In addition, results were aggregated by grade and by question and analysed. Finally, at the end of the workshop, each student was given a feedback questionnaire covering the quality of the content and examples, the relevance of the questions, the sequence of instructions and the effect of learning concepts on performing activities and documenting outcomes. The data from these feedback forms were also analysed.
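The passing-score logic can be made concrete. Under pure guessing, the number of correct answers in a cluster of n questions with k choices each follows Binomial(n, 1/k), with mean n/k: 2.25, about 1.67 and 3 for the four-, three- and two-choice clusters. The sketch below assumes that the binomial standard deviation under guessing is treated as the known population SD in a one-sided, one-sample z-test over the 33 students. That assumption is ours, not stated in the text, but it appears consistent with the results reported in Section 6: the reported cluster means give z of roughly 23.6, 7.3 and 4.4, close to the reported 23.55, 7.26 and 4.40. Function names are illustrative.

```python
import math
from statistics import NormalDist


def guessing_baseline(n_questions: int, n_choices: int) -> tuple[float, float]:
    """Mean and SD of the number correct when every answer is a blind guess.

    Each question is a Bernoulli trial with p = 1 / n_choices, so the total
    number correct is Binomial(n_questions, p).
    """
    p = 1.0 / n_choices
    mean = n_questions * p
    sd = math.sqrt(n_questions * p * (1.0 - p))
    return mean, sd


def z_test_vs_guessing(sample_mean: float, n_students: int,
                       n_questions: int, n_choices: int) -> tuple[float, float]:
    """One-sided z-test of a cluster mean against the guessing baseline.

    Assumes the binomial SD under guessing is the known population SD.
    Returns (z, p_value) for H1: mean score > guessing mean.
    """
    mu0, sigma = guessing_baseline(n_questions, n_choices)
    z = (sample_mean - mu0) / (sigma / math.sqrt(n_students))
    p_value = 1.0 - NormalDist().cdf(z)
    return z, p_value


# Cluster means as reported in Section 6 (33 students).
for label, mean_score, n_q, n_c in [("cluster 1", 7.58, 9, 4),
                                    ("cluster 2", 3.00, 5, 3),
                                    ("cluster 3", 3.93, 6, 2)]:
    z, p = z_test_vs_guessing(mean_score, 33, n_q, n_c)
    print(f"{label}: z = {z:.2f}, one-sided p = {p:.3g}")
```

A two-sided test would double the p-value; given the size of these z values, the conclusion at p < .01 would not change.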

Table 5. Information about the question types and their cluster

To address the second research question (the association between students’ ability to learn concepts, grade level and academic performance in conventional school subjects), the grade-wise scores were analysed. Furthermore, each student’s PI score was correlated with their result in a school examination taken 3 months before the workshop, and the significance of this correlation was tested statistically. The data on students’ PI scores and examination results are given in Table A2 of the appendix.

To address the third research question (the effect of learning concepts on students’ problem-finding and solving skills), three design experts evaluated the design outcomes (e.g., problems and concepts) at the end of the workshop, as communicated through prototypes and an oral presentation. The experts were institute alumni who had studied design at master’s level and currently work as entrepreneurs. Problem-finding skills were assessed on three criteria: 1. whether the team identified enough problems, 2. whether the selected problems are essential to solve and 3. whether the selected problems already have satisfactory solutions. A team scored higher if it identified more problems, if the selected problems were highly important to solve and if no immediate solutions were available, and lower otherwise. Problem-solving skills were assessed on whether the proposed solutions are 1. feasible, 2. novel and 3. likely to solve the problems; a team scored higher the more its solutions met these criteria. The criteria and points are given in Table 6. Each team’s total score was the sum of the three experts’ evaluations. Depending on its performance, a team could receive a minimum of 18 and a maximum of 54 across the three experts. The midpoint of this range, 36, was therefore taken as one of the baseline criteria for assessing the effect of the DT concepts and process on students’ problem-finding and solving skills.
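The 18 to 54 range and the baseline of 36 follow from summing expert ratings. Table 6 is not reproduced here, so the sketch below assumes six criteria (three for problem finding, three for problem solving), each rated from 1 to 3 by each of three experts; this assumption yields exactly the stated bounds. It also shows a one-sample t statistic for comparing team totals with the midpoint. The helper names are illustrative, and no study data are included.

```python
import math
from statistics import mean, stdev


def score_bounds(n_experts: int, n_criteria: int,
                 min_point: int, max_point: int) -> tuple[int, int, float]:
    """Lowest and highest possible team totals and their midpoint (baseline)."""
    low = n_experts * n_criteria * min_point
    high = n_experts * n_criteria * max_point
    return low, high, (low + high) / 2


def one_sample_t(scores: list[float], baseline: float) -> tuple[float, int]:
    """t statistic and degrees of freedom for H0: mean(scores) == baseline."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two team scores")
    standard_error = stdev(scores) / math.sqrt(n)  # sample SD (n - 1 denominator)
    if standard_error == 0:
        raise ValueError("scores have zero variance")
    return (mean(scores) - baseline) / standard_error, n - 1


# Assumed scheme: 3 experts x 6 criteria, each rated 1 (low) to 3 (high).
low, high, baseline = score_bounds(n_experts=3, n_criteria=6, min_point=1, max_point=3)
print(low, high, baseline)  # 18 54 36.0
```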

Table 6. Evaluation metrics for problem-finding and solving skills (to be used by the experts)

6. Results and discussions

Below, a discussion is provided on how the results address the research questions.

RQ 1: What is the effectiveness of PI in understanding DT concepts in school students?

The average score of the 33 students was 14.5 out of 20 (72.58%). Cluster-wise performance is shown in Table 7. For cluster 1 (nine questions), the average score was 7.58 out of 9, above 84% (z = 23.55, n = 33, p < .01). For cluster 2 (five questions), the average score was 3 out of 5, or 60% (z = 7.26, n = 33, p < .01). For cluster 3 (six questions), the average score was 3.93 out of 6, above 65% (z = 4.40, n = 33, p < .01). For all three clusters, the p-values are below the significance level, providing strong evidence that students learning through PI performed well above the guessing-based passing score on the DT concepts tests.

Table 7. Individual students’ performance on concept assessment questions

Average performance was poor (less than 55% correct) on questions about classifying requirements as demands or wishes, classifying requirements as scaling or non-scaling, identifying correctly articulated problem statements and identifying problems that affect more people. Through discussions with the mentors, the authors learned that students had trouble comprehending these ideas and that participants struggled when asked to perform activities or document results involving these concepts. This suggests that the content for these concepts did not convey them effectively. Moreover, these ideas come from engineering design practice and are inherently complex. This raises the question of whether a rudimentary DT course should include concepts such as demands, wishes and scaling and non-scaling requirements, and how omitting them would affect learners’ overall problem-solving skills.

Additionally, the students’ questionnaire responses showed that they perceived the content and examples as effective for understanding the concepts. All students rated the content’s quality as high or very high in terms of facilitating conceptual understanding (Q-1, Figure 4). Almost 97% of the students rated the examples as high or very high quality and helpful for understanding the concepts (Q-2, Figure 4). Furthermore, almost 91% of the students agreed that most of the questions presented in the modules were related to the concepts (Q-3, Figure 5).

Figure 4. Perceived quality of content and examples (N = 32).

Figure 5. Perceived relevance of questions (N = 32).

The majority of students (roughly 97%) agreed or strongly agreed that the questions in the modules were adequate for testing DT concepts (Q-4, Figure 6). All students agreed or strongly agreed that the sequence of modules and submodules made learning simple, kept them interested and helped them carry out activities and document the results accurately (Q-5 to Q-8, Figure 6). Finally, 97% of the students agreed or strongly agreed that the modules motivated them to learn the concepts (Q-9, Figure 6).

Figure 6. Perceived use of design thinking modules (N = 32).

RQ 2: What is the association between school students’ ability to learn DT concepts using PI, students’ grade level as well as their ability to learn conventional school subjects?

Table 8 presents the average PI scores and school examination scores by grade, and Figure 7 depicts their grade-wise distribution. Among PI scores, grade 6 students have the lowest average (58), followed by grade 7 (68), grade 9 (79) and grade 8 (86), so PI scores do not increase monotonically with grade. The school examination scores show a consistent pattern across grades. Hence, a direct correlation between students’ grade levels and their PI scores cannot be established, as performance might be influenced by both academic ability and age.

Table 8. Students’ grade-wise average PI score and school examination score

Figure 7. Students’ grade-wise average PI score and school examination score.

A statistical analysis then tested the correlation between students’ individual scores in the DT concepts test and their school examination results. In Figure 8, the DT concepts test scores are plotted on the X-axis and the school examination results on the Y-axis. A Pearson correlation test revealed a strong positive correlation between the two (r(32) = 0.7928, p < .01): students who performed well in school examinations also performed well in the DT concepts test. Thus, students who perform well in their school curriculum (a moderating factor) are likely to find it easy to learn DT concepts using PI. This may be because the skills and knowledge required to perform well in conventional subjects also apply to DT courses and positively affect DT test performance. The positive correlation may also suggest that a DT course would be a valuable addition to the curriculum, as it may contribute to developing skills and knowledge relevant to other subjects and to academic success more broadly.

Figure 8. Correlation between students’ individual performance in DT concept test and school results. (Trendline equation = 3.927*X + 20.49)

RQ 3: What is the combined effect of learning DT concepts using PI and DTP on students’ problem-finding and solving skills?

The total score awarded to each team by the experts is shown in Table 9. The average score of the eight teams (N = 8) was 42.38 out of 54 (78.5%), exceeding the baseline of 36. This difference is significant (t(8) = 4.92, p < .01), indicating an above-average impact. The score reflects the students’ problem-finding and solving skills, which are the combined effect of learning DT concepts and performing the process instructions, and shows the effectiveness of the DT workshop in nurturing these skills. Table 9 also shows each team’s average score in the DT concepts test.

Table 9. Team performance on problem-finding and solving skills

The authors also examined whether a team’s average performance on the concept tests was correlated with its performance in problem finding and solving. Spearman’s rho, a non-parametric test, was used to compare each team’s average PI score with the expert evaluation of its outcomes. The association between the two variables was not statistically significant (rs(8) = −0.556, p > .1), as shown in Figure A1 of the appendix.

The findings show that although the average team performance in learning concepts is high, it does not necessarily produce better outcomes. There can be several reasons for this. First, each team has four or five students and the team’s DT concepts score is their average, which masks differences between individual members. Second, the mentors’ inputs revealed that several factors, including team motivation, team dynamics, individual engagement and attention span, influence the quality of the outputs generated by the teams. It was also observed that mentors occasionally stepped in to clarify concepts during the process and provided additional support for learning, which underscores the importance of having mentors present during the DTP. Overall, the results indicate that while understanding concepts is necessary for delivering high-quality outcomes, it is not sufficient: learners must also be motivated, good team players, attentive and able to apply concepts successfully in practice.

7. Conclusion, summary and future work

The effort to create an efficient DT course for schools highlights the importance of optimising classroom time, which is often limited. Innovative teaching approaches, such as the flipped classroom, can be valuable in maximising hands-on activities during face-to-face class time. The PI tool offers an interactive and distributed approach to learning DT concepts. It not only allows students to explore DT concepts asynchronously but also evaluates their comprehension immediately after learning, providing timely answers and feedback. Learning and assessing DT concepts with the help of PI can easily be scaled to large numbers of learners simultaneously, saving time and resources compared with traditional teaching and assessment methods.

The results of testing the PI tool with school students revealed its effectiveness in helping students learn DT concepts and evaluate their understanding of them. Interestingly, students who performed well in traditional classroom subjects also excelled in the DT concept questions, emphasising the interconnectedness of different areas of education. However, high performance in DT concept tests did not necessarily translate into high marks in the outcome evaluations. This suggests that while understanding DT concepts is a crucial foundation, it is not sufficient by itself: effective application of DT principles in real-world scenarios requires additional skills, problem-solving abilities and adaptability that extend beyond theoretical knowledge. In essence, developing an efficient DT course for schools, alongside tools such as PI, is a promising step towards introducing DT concepts into education.

This research describes a study that aimed to develop PI to teach DT concepts to novice school students and to investigate whether these instructions improved students' understanding of these concepts. The study found that the PI positively affected the students' understanding of DT concepts. This was determined through an analysis of the test results and students' feedback, which showed that the students who received the PI performed well in the test. The study also found a positive association between school students' ability to learn DT concepts and their performance in conventional school subjects. Furthermore, the study found that the DT concepts and process activities together have a positive effect on students' problem-finding and problem-solving skills. Based on these results, the study concludes that the use of PI in secondary school curricula can be justified as a means of advancing DT concepts, and that incorporating such instructions into the curriculum can be an efficient way to deliver educational content. Compared with traditional teaching, the PI-based DT concepts module offers the following potential benefits:

• PI enables students to understand in depth, and become aware of, the design concepts that are prerequisites for the DTP.

• PI ensures that students have a thorough comprehension of the DT concepts before they engage in DTP activities. By giving prompt feedback on each individual response, PI provides learners with real-time assessment in a scalable manner. Assessment with PI is consistent and fair because it applies the same predefined criteria to all students, thus removing subjective bias.

• PI can reduce the teaching workload at schools while improving students' understanding, which frees school time for hands-on activities and field study.

To integrate a PI-enabled DT course into the classroom, concept learning and evaluation activities can be shifted to students’ home study, thereby freeing up classroom time for hands-on, interactive activities. This can be achieved by introducing DT as a co-curricular activity, reallocating some time from conventional subjects to DT classes or incorporating DT methodologies into traditional subject teaching. These strategies may facilitate the integration of DT into the curriculum while maintaining the breadth and depth of other essential content.

The study does, however, have some limitations. Students who answered the questions received no feedback or reinforcement because the feedback function remained disabled throughout the experiment. Since the feedback and contingency features enable active learning and real-time evaluation, they could be used to create a flipped classroom setting, in which most information-transmission teaching is moved out of class, class time is used for active and social learning activities, and students complete pre- and/or post-class activities to benefit fully from in-class work (Abeysekera & Dawson 2015). Furthermore, although assessment with PI is fair and consistent because it applies the same criteria to all students, the evaluation of final outcomes still relies on the evaluator's expertise. Subjectivity therefore remains in the assessment process, and further research is needed on methods and tools that eliminate its effect.

In addition, the tool currently presents content only as text. It is essential to consider each learner's unique learning style (Soloman & Felder 2005; Felder & Spurlin 2005). Some learners learn best from text, while others learn best with auditory or visual aids. Study materials should therefore be tailored to different learning styles so that all learners can understand and retain the information. To make the tool more student-centric, video, audio and visual aids such as animations need to be added.

Furthermore, the content of the DT concepts is generic and does not vary with student age or school level. Students' cognitive development, including their ability to understand and process information, changes as they grow (Lefa 2014). To be effective, the instructions must therefore be tailored to students' level of comprehension.

Currently, when students answer the questions in the PI, they receive feedback messages only in the form of verification, that is, whether the response is correct. However, feedback has another important and separate component: elaboration. The elaboration component consists of all substantive information contained in the feedback message (Kulhavy & Stock 1989). Based on the type of information they include, elaborations can be classified as (a) task-specific (restatement of the correct answer or inclusion of multiple-choice alternatives), (b) instruction-based (explanations of why a certain response is correct, or re-presentation of the instructional text in which the correct answer was contained) and (c) extra-instructional (new information, such as examples or analogies, that clarifies the meaning) (Kulhavy & Stock 1989).
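
To make the distinction between verification and elaboration concrete, the following is a minimal Python sketch, not part of the existing PI tool, of how a feedback message could carry verification alone (as in the current PI) or verification plus one of the three elaboration types. All names (`Question`, `Feedback`, `Elaboration`, `build_feedback`) and the sample question are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Elaboration(Enum):
    """Elaboration categories following Kulhavy & Stock (1989)."""
    TASK_SPECIFIC = "task-specific"              # restate the correct answer
    INSTRUCTION_BASED = "instruction-based"      # explain why it is correct
    EXTRA_INSTRUCTIONAL = "extra-instructional"  # new example or analogy


@dataclass
class Question:
    prompt: str
    options: List[str]
    correct: str
    explanation: str  # text used for instruction-based elaboration
    analogy: str      # text used for extra-instructional elaboration


@dataclass
class Feedback:
    verification: str           # "Correct" or "Incorrect"
    elaboration: Optional[str]  # None = verification-only feedback


def build_feedback(q: Question, answer: str,
                   kind: Optional[Elaboration] = None) -> Feedback:
    """Verify the answer and optionally attach one type of elaboration."""
    verification = "Correct" if answer == q.correct else "Incorrect"
    if kind is None:
        return Feedback(verification, None)
    if kind is Elaboration.TASK_SPECIFIC:
        text = f"The correct answer is: {q.correct}."
    elif kind is Elaboration.INSTRUCTION_BASED:
        text = q.explanation
    else:  # Elaboration.EXTRA_INSTRUCTIONAL
        text = q.analogy
    return Feedback(verification, text)


# Hypothetical sample question, for illustration only.
sample = Question(
    prompt="Which DT stage focuses on understanding users' needs?",
    options=["Empathise", "Ideate", "Prototype"],
    correct="Empathise",
    explanation="Empathise comes first: designers observe and interview "
                "users before defining the problem.",
    analogy="Like a doctor examining a patient before prescribing, "
            "designers study users before proposing solutions.",
)

print(build_feedback(sample, "Ideate"))
print(build_feedback(sample, "Ideate", Elaboration.TASK_SPECIFIC))
```

Keeping the elaboration optional means the same question bank could serve both the current verification-only mode and an enriched mode, so the two could be compared in a later study.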

At present, the PI of the DTC lacks backend functionality for measuring individual student scores, overall progress, time spent on each module and its submodules, and the number of times a student visits a specific module. A backend system with these essential functions therefore needs to be developed so that the tool can serve not only as a key component of a learning management system (Turnbull et al. 2020) for courses in design, DT or innovation, but also as an efficient data collection and storage system for research purposes.
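
As an illustration of the backend functions listed above, the following is a minimal in-memory Python sketch of per-student, per-module tracking of scores, visits, time spent and overall progress. It is not the DTC's implementation; the class names, the module identifiers and the assumption that the front end supplies timestamps in seconds are all hypothetical. A deployed system would persist these records in a database rather than in memory.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ModuleStats:
    visits: int = 0
    seconds_spent: float = 0.0
    answered: int = 0
    correct: int = 0

    @property
    def score(self) -> float:
        """Proportion of answered questions that were correct."""
        return self.correct / self.answered if self.answered else 0.0


class ProgressTracker:
    """Hypothetical in-memory tracker; timestamps are seconds from the front end."""

    def __init__(self, module_ids: List[str]) -> None:
        self.module_ids = list(module_ids)
        # student -> module -> ModuleStats
        self._stats: Dict[str, Dict[str, ModuleStats]] = defaultdict(
            lambda: defaultdict(ModuleStats))
        # student -> (module currently open, time it was opened)
        self._open: Dict[str, Tuple[str, float]] = {}

    def enter(self, student: str, module: str, t: float) -> None:
        self.leave(student, t)  # close any module the student left open
        self._stats[student][module].visits += 1
        self._open[student] = (module, t)

    def leave(self, student: str, t: float) -> None:
        if student in self._open:
            module, start = self._open.pop(student)
            self._stats[student][module].seconds_spent += t - start

    def answer(self, student: str, module: str, is_correct: bool) -> None:
        s = self._stats[student][module]
        s.answered += 1
        s.correct += int(is_correct)

    def progress(self, student: str) -> float:
        """Fraction of modules the student has visited at least once."""
        mods = self._stats.get(student, {})
        visited = sum(1 for m in self.module_ids if m in mods and mods[m].visits > 0)
        return visited / len(self.module_ids)

    def to_rows(self) -> List[dict]:
        """Flatten all records, e.g. for CSV export in research analyses."""
        return [
            {"student": st, "module": m, "visits": s.visits,
             "seconds": s.seconds_spent, "score": s.score}
            for st, mods in self._stats.items() for m, s in mods.items()
        ]


tracker = ProgressTracker(["M1", "M2", "M3", "M4"])  # hypothetical module IDs
tracker.enter("S01", "M1", t=0)
tracker.answer("S01", "M1", True)
tracker.answer("S01", "M1", False)
tracker.enter("S01", "M2", t=120)  # leaving M1 after 120 s
tracker.leave("S01", t=180)
tracker.enter("S01", "M1", t=200)  # second visit to M1
tracker.leave("S01", t=230)
print(tracker.progress("S01"))
```

Passing timestamps explicitly keeps the sketch deterministic and easy to test; in practice they would come from the server clock, and `to_rows` is one possible bridge to the research data collection mentioned above.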

Finally, a comparative study with a control group is needed to assess the effectiveness of PI relative to traditional classroom methods in teaching DT concepts to novice school students. Such a study would allow a more comprehensive evaluation of how well PI achieves concept understanding compared with conventional teaching approaches. Additionally, the long-term impact of PI on students' retention of DT concepts and on their ability to apply them in real-world problem-solving scenarios is a promising avenue for future research.

Acknowledgements

The authors extend their deepest appreciation to all reviewers for their invaluable support and feedback, which improved the quality of the manuscript. The authors sincerely thank the principal, teachers and staff at Air Force School Jalahalli for their assistance in organising the design thinking workshop. The authors would especially like to thank Anubhab, Charu, Vivek, Sanjay, Kausik, Naz and Ishaan, whose mentorship and support during the Design Thinking workshop were instrumental in the successful execution of the research experiments.

Competing interest

The authors declare no conflict of interest.

Funding support

The authors did not receive support from any organisation for the submitted work.

Appendix

Table A1. Design thinking concept modules and information on the associated questions

Table A2. Student-wise PI score and result of school examinations

Figure A1. Correlation between students’ team performance in the DT concept test and DT process.