Efforts to disseminate evidence-based psychological treatments to the developing world have long been hampered by the high cost of traditional training methods. These costs can be prohibitive, particularly for trainees who do not speak English, are geographically scattered, or live in countries with financial constraints.Reference Rakovshik, McManus, Westbrook, Kholmogorova, Garanian and Zvereva1

Over the past few years, several research trials have found internet-based teaching methods to be as effective as, or even superior to, traditional methods such as manuals or instructor-led (face-to-face) teaching (these studiesReference Rakovshik, McManus, Westbrook, Kholmogorova, Garanian and Zvereva1–Reference Dimeff, Koerner, Woodcock, Beadnell, Brown and Skutch5 are reviewed in Table 1).

Table 1 Previous studies assessing the effects of computer-based training on knowledge and skills gained

The current Covid-19 pandemic has shone a light on the accumulating empirical evidence in favour of online training over face-to-face training. A meta-analysis by the US Department of Education found that, on average, students in online learning conditions performed better than those receiving face-to-face instruction. The results were derived for the most part from studies carried out in medical training and higher education settings.Reference Means, Toyama, Murphy, Bakia and Jones6

The current study

This study aimed to compare the efficacy of two methods of teaching cognitive–behavioural therapy (CBT) assessment and formulation skills to a group of mental health practitioners in Sudan: an internet-based lecture and a face-to-face lecture. Training outcomes were assessed with methods corresponding to the first three levels of Kirkpatrick'sReference Kirkpatric2 model for evaluating training programmes: the acceptability and feasibility of the training, participants’ knowledge of the training content, and their behavioural skills, measured with an objective method of skills assessment.

Method

Study design

This was a two-arm, single-blind randomised controlled trial. Participants were recruited from mental health institutions in Khartoum, the capital of Sudan. Exclusion criteria were holding a postgraduate degree in CBT or practising CBT under supervision.

Thirty-six eligible individuals were randomised to either internet-based recorded lectures or a face-to-face lecture. Computer-generated block randomisation (Sealed Envelope™) was used to balance prior exposure to unsupervised CBT practice across the two groups.
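Balancing a baseline characteristic with block randomisation is usually done by running a separate permuted-block sequence within each stratum. The sketch below illustrates that general technique only; it is not Sealed Envelope's algorithm, and the block size, stratum labels and function names are hypothetical because the source does not report them.

```python
import random
from collections import defaultdict

ARMS = ("internet-based", "face-to-face")


def permuted_blocks(n, block_size, rng):
    """Return n allocations made of shuffled blocks, each with equal arm counts."""
    if block_size % len(ARMS) != 0:
        raise ValueError("block size must be a multiple of the number of arms")
    sequence = []
    while len(sequence) < n:
        block = list(ARMS) * (block_size // len(ARMS))
        rng.shuffle(block)  # random order within the block
        sequence.extend(block)
    return sequence[:n]  # the last block may be left incomplete


def stratified_allocation(participants, block_size=4, seed=None):
    """Allocate (participant_id, stratum) pairs using one block sequence per stratum.

    The stratum is a hypothetical label for prior exposure to unsupervised
    CBT practice (e.g. "exposed" / "not exposed").
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for pid, stratum in participants:
        by_stratum[stratum].append(pid)
    allocation = {}
    for stratum in sorted(by_stratum):  # fixed order keeps runs reproducible
        ids = by_stratum[stratum]
        for pid, arm in zip(ids, permuted_blocks(len(ids), block_size, rng)):
            allocation[pid] = arm
    return allocation
```

Because every complete block contains equal numbers of each arm within a stratum, group sizes can differ only by the incomplete final blocks, which is consistent with unequal arms such as 17 and 19.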

Participants allocated to the internet-based lecture group (n = 17) were given access to a 3 h pre-recorded online training programme on a set date. The rest of the cohort (n = 19) attended a scheduled 3 h lecture with the first author.

Prior to viewing the online teaching or attending the face-to-face teaching, all participants underwent a baseline assessment of their behavioural skills in CBT assessment and formulation. They were given a brief written case history of a patient with agoraphobia and panic disorder to read. Participants then met the simulated patient, role-played by an experienced therapist. The assessments were video recorded. All participants were then asked to handwrite a CBT formulation of the patient they had just assessed.

After viewing the online recorded lecture or attending live teaching, all participants repeated the simulated patient assessment task and wrote a CBT case formulation. They also completed a training satisfaction questionnaire.

At a later date, the third author (S.A.), an experienced CBT therapist, scored the video recordings and the handwritten case formulations. S.A. was blind to the time of assessment (pre- or post-intervention) and group allocation (face-to-face lecture or computer-based lecture). The first author scored the training satisfaction questionnaire.

Teaching materials

Internet-based video-recorded lecture

The recorded lecture was adapted, with permission, from an online training module available on the Oxford Cognitive Therapy Centre website (https://www.octc.co.uk/training) and translated into Arabic. The video-recorded lecture was delivered by the first author.

Face-to-face lecture

The first author delivered the live lecture from the Arabic scripted material, following the same lesson plan as the video recordings.

Assessments

The acceptability of the training was rated using the Training Acceptability Rating Scale (TARS). Performance in the video-recorded simulated-patient assessment was rated using the CBT Theory and Assessment Skills Scale (C-TASS), and the quality of the handwritten case formulations using the Formulation Rating Scale (FRS). For further details on how these scales were developed, see Ref. Reference Rakovshik, McManus, Westbrook, Kholmogorova, Garanian and Zvereva1.

The C-TASS and FRS have good interrater reliability, as reported previously:Reference Rakovshik, McManus, Westbrook, Kholmogorova, Garanian and Zvereva1 intraclass correlation coefficients were 0.87 for the C-TASS and 0.97 for the FRS.
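For readers unfamiliar with intraclass correlation coefficients, the sketch below computes one common form, ICC(2,1) in Shrout and Fleiss's notation (two-way random effects, absolute agreement, single rater), from ANOVA mean squares. The source does not state which ICC form was used, so this choice is an assumption, as are the function name and data layout.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows, one per rated subject (e.g. one recorded
    interview), each holding one score per rater.
    """
    n = len(ratings)       # number of subjects
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into subjects, raters and error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```

Because this form measures absolute agreement, a constant offset between two raters (one always scoring a point higher) pulls the coefficient below 1; a consistency form such as ICC(3,1) would not penalise that offset.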

Statistical analysis

Power calculation

Previous comparable studies found effect sizes of d = 1.05Reference Sholomskas, Syracuse-Siewert, Rounsaville, Ball, Nuro and Carroll7 and d = 0.77–1.10.Reference Rakovshik, McManus, Westbrook, Kholmogorova, Garanian and Zvereva1 A two-tailed power calculation using G*Power showed that a total sample of n = 32 (16 per group) had 90% power to detect an effect size of d = 1.05 at α = 0.05 for the primary comparison.
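The logic of an a priori power calculation for two independent groups can be sketched with the normal approximation below. This is an illustration under stated assumptions, not a reproduction of G*Power, which uses the exact noncentral t distribution; the function name is hypothetical.

```python
from statistics import NormalDist


def approx_power_two_sample(d, n_per_group, alpha=0.05, tails=2):
    """Approximate power of a two-sample t-test with equal group sizes.

    Normal approximation: power ~ Phi(d * sqrt(n / 2) - z_crit), ignoring the
    negligible contribution of the opposite tail. Exact noncentral-t results
    (as in G*Power) are somewhat lower for small samples.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / tails)        # critical value for the chosen tails
    noncentrality = d * (n_per_group / 2) ** 0.5  # expected z under the alternative
    return z.cdf(noncentrality - z_crit)
```

Calling it with d = 1.05 and 16 per group, and switching `tails` between 1 and 2, shows how strongly the estimated power depends on whether the test is one- or two-tailed.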

Intention to train

Participants who consented to take part in the study and completed at least one baseline measure formed the intention-to-train (ITT) sample (N = 36). In the ITT analysis, missing data were replaced by carrying forward each participant's last available observation (see Fig. 1 for details of attrition).
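Last-observation-carried-forward imputation, as described above, can be sketched as follows. The data layout (a dict mapping each participant to scores ordered by time point) and the function name are hypothetical.

```python
def last_observation_carried_forward(scores):
    """Replace missing (None) assessments with the participant's last observed score.

    `scores` maps participant id -> list of scores ordered by time point,
    e.g. [baseline, post-intervention].
    """
    imputed = {}
    for pid, series in scores.items():
        filled, last = [], None
        for value in series:
            if value is not None:
                last = value
            filled.append(last)  # remains None only if nothing was observed yet
        imputed[pid] = filled
    return imputed
```

Note that for a participant who drops out after baseline this method records no pre- to post-intervention change, which tends to make the ITT estimate of improvement conservative.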

Fig. 1 CONSORT diagram showing the flow of participants through the trial.

Feasibility and acceptability, satisfaction and perceived effect

Independent-samples t-tests were used to compare the two groups' mean scores for feasibility and acceptability, satisfaction and perceived effect.
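A minimal sketch of the independent-samples t statistic follows. The source does not say whether the pooled-variance (Student) or Welch version was used; the pooled version here is an assumption, and the function name is hypothetical. Converting t to a P-value requires the t distribution from a statistics package, which is omitted.

```python
from statistics import mean, variance


def independent_t(x, y):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, degrees_of_freedom).
    """
    nx, ny = len(x), len(y)
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    se = (pooled * (1 / nx + 1 / ny)) ** 0.5
    return (mean(x) - mean(y)) / se, nx + ny - 2
```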

Improvement in CBT skills

The C-TASS and FRS scores were not normally distributed and could not be normalised by Winsorising or log transformation. The following non-parametric tests were therefore used.

(a) The related-samples Friedman two-way analysis of variance by ranks was used to evaluate the effects of training on CBT assessment and formulation skills, as reflected in changes in the outcome measures over time, in a 2 (condition) × 2 (time) design.

(b) The independent-samples Mann–Whitney U-test was used to assess the effects of training on formulation skills and on CBT theory and assessment skills (primary outcomes) by comparing post-intervention scores between the two groups (face-to-face lecture versus computer-based recorded lecture).

(c) A post hoc analysis was used to examine the effects of prior CBT exposure on FRS and C-TASS scores.
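The Friedman statistic in analysis (a) ranks each participant's scores across time points and compares the rank sums. The sketch below is a minimal illustration with a hypothetical function name and data layout; it omits the tie correction that many statistical packages apply and does not itself model the between-group factor.

```python
def friedman_chi_square(data):
    """Friedman test statistic without tie correction.

    `data` holds one row per participant with k related measurements,
    e.g. [pre, post]. Under the null hypothesis the statistic is
    approximately chi-squared with k - 1 degrees of freedom.
    """
    n, k = len(data), len(data[0])
    rank_sums = [0.0] * k
    for row in data:
        order = sorted(range(k), key=lambda j: row[j])
        i = 0
        while i < k:
            # Find the run of tied values starting at position i.
            j = i
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1
            avg_rank = (i + j) / 2 + 1  # ranks are 1-based; ties share the average
            for pos in range(i, j + 1):
                rank_sums[order[pos]] += avg_rank
            i = j + 1
    return 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
```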

The primary study analyses were carried out in SPSS 22 for Windows.8

Ethical approval

Ethical approval was sought from, and granted by, the Oxford University ethics committee.

Results

Sample description

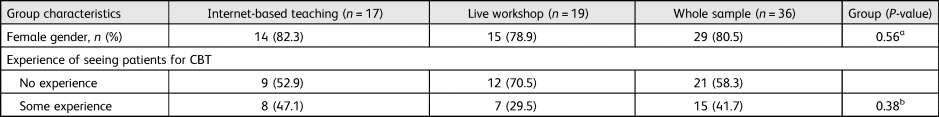

There were no significant differences between the groups in demographic characteristics or in prior experience of practising CBT, as compared using the chi-squared test or Fisher's exact test for categorical variables (Table 2).

Table 2 Baseline characteristics of sample

a. Fisher's exact test.

b. Chi-squared test.

Participant flow and retention

Of the 36 participants who were randomised and completed baseline measures, 29 completed the final assessments, a retention rate of approximately 80% (29/36). This dropout rate is comparable to those in other training studies (e.g. Refs Reference Davis, Crabs, Rogers, Zamora and Khan4 and Reference Rakovshik, McManus, Westbrook, Kholmogorova, Garanian and Zvereva1).

Outcome analysis

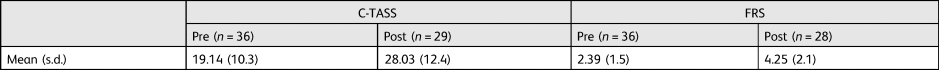

Comparison of mean scores

Comparison of C-TASS and FRS mean scores from pre-intervention to post-intervention demonstrated a significant positive effect of training in both groups on both primary measures, as can be seen in Table 3.

Table 3 Descriptive statistics for outcome measures

Analysis of feasibility and acceptability, satisfaction and perceived effect

Thirty participants completed the TARS after receiving the training (83% of the total sample). Overall mean scores were similar in the two conditions: 12.73 (s.d. = 0.94) for face-to-face teaching and 12.25 (s.d. = 1.14) for computer-based teaching (Table 4). A t-test comparing the two training conditions showed no statistically significant difference in ratings of feasibility and acceptability.

Table 4 Means and standard deviations for satisfaction and acceptability scale

Effects of allocation to face-to-face lecture or internet-based lecture on assessment and formulation skills

Post-intervention formulation scores measured by the FRS did not differ significantly between the internet-based lecture group (median (Mdn) = 13) and the face-to-face lecture group (Mdn = 12.83); U = 122.50, P = 0.254.

However, post-intervention CBT theory and assessment skills, measured by the C-TASS, were significantly higher in the computer-based lecture group (Mdn = 20.43) than in the face-to-face lecture group (Mdn = 9.93); U = 181.00, P = 0.001. The effect size was large (r = 0.74).
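The effect size r for a Mann–Whitney comparison is conventionally obtained from the normal approximation as r = Z/√N. The source does not state which formula or N was used, so the sketch below, with its hypothetical function name, illustrates the conventional calculation only. Packages also differ in which group's U they report; the two values always sum to n1·n2.

```python
import math


def mann_whitney_with_r(x, y):
    """Mann–Whitney U for group x, its normal-approximation Z, and r = |Z| / sqrt(N).

    Ties receive average ranks and the variance of U is tie-corrected;
    no continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # tied values share the average 1-based rank
        for pos in range(i, j + 1):
            ranks[pos] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(rank for rank, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u1 - mean_u) / math.sqrt(var_u)
    return u1, z, abs(z) / math.sqrt(n)
```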

Discussion

Sudanese mental health professionals found both teaching methods feasible and acceptable, and endorsed them with similarly high satisfaction scores. In addition, both methods produced large positive changes in knowledge and clinical skills from pre- to post-teaching.

When directly compared, the two teaching methods resulted in similar improvements in formulation skills. However, the computer-based lecture resulted in greater improvement in CBT assessment skills on the simulated patient task. One possible explanation for this advantage is that clinicians could watch the 3 h of recorded material at a pace and at times that suited their learning styles and needs.

Participants in the current study had very limited experience of practising CBT, which may explain the significant gains in knowledge and skills from pre- to post-intervention in both arms. The results should therefore be interpreted cautiously and only within the context of this particular population.

One of the strengths of this study was the use of an objective method of assessing clinical skills: observer ratings of an interview with a simulated patient, in the style of an objective structured clinical examination, as in Refs Reference Davis, Crabs, Rogers, Zamora and Khan4 and Reference Means, Toyama, Murphy, Bakia and Jones6. This is considered one of the most reliable methods of assessing competence.Reference Dimeff, Harned, Woodcock, Skutch, Koerner and Linehan9

A limitation is that the outcome measures used to assess competence, the FRS and C-TASS, were designed for a similar previous study and have not undergone thorough psychometric evaluation.

It was not possible to verify conclusively whether participants allocated to the computer-based teaching group actually accessed and watched the teaching materials, nor to document the reasons for dropout. However, because the dropout rate was similar in both arms of the study, it is unlikely to have strongly influenced the outcomes. The extent to which the training influenced participants' real clinical practice was not tested, as this would have required assessing real-life clinical encounters with patients.

Teaching or training using internet-based methods may offer a unique opportunity for developing and non-English-speaking countries. These countries may be able to benefit from the knowledge and expertise of expatriates, who could contribute remotely to training in their countries of origin at a fraction of the cost of delivering the training face to face.

Computer-based training could play a specific role in training programmes, for example in psychotherapy, by improving participants' basic knowledge and skills before other methods of training, such as face-to-face teaching or supervision, are used to develop more complex skills. This could represent a unique opportunity to disseminate a much-needed mode of treating mental health problems to disadvantaged communities.

Author contributions

A.I. drafted the final version of the text. S.R. contributed to the content and writing of the final version.

Declaration of interest

ICMJE forms are in the supplementary material, available online at https://doi.org/10.1192/bji.2020.60.
