1. Introduction

Bilinguals do not “turn off” their inactive language, even when they are in a monolingual context. Specifically, they are able to access sublexical information from both languages simultaneously (Dijkstra, 2005; Kroll et al., 2012). Evidence for such cross-language co-activation has been observed experimentally in several contexts. For example, the phonology of words in a bilingual's inactive language can influence processing of words in the target (active) language. In a spoken language visual world eye-tracking paradigm, Russian–English bilinguals looked longer at distractor items for which the English translation shared phonological features with the target Russian word, e.g., shovel and sharik (“balloon” in Russian) (Marian & Spivey, 2003). This effect occurred in the other direction as well, with influence from Russian distractors on English target words. Similar cross-linguistic activation has also been observed in written word processing. For example, Thierry and Wu (2007) provided evidence from event-related potentials (ERPs) that Chinese–English bilinguals unconsciously access the Chinese translations of English words, as evidenced by an N400 priming effect for English word pairs whose translations shared a Chinese character.

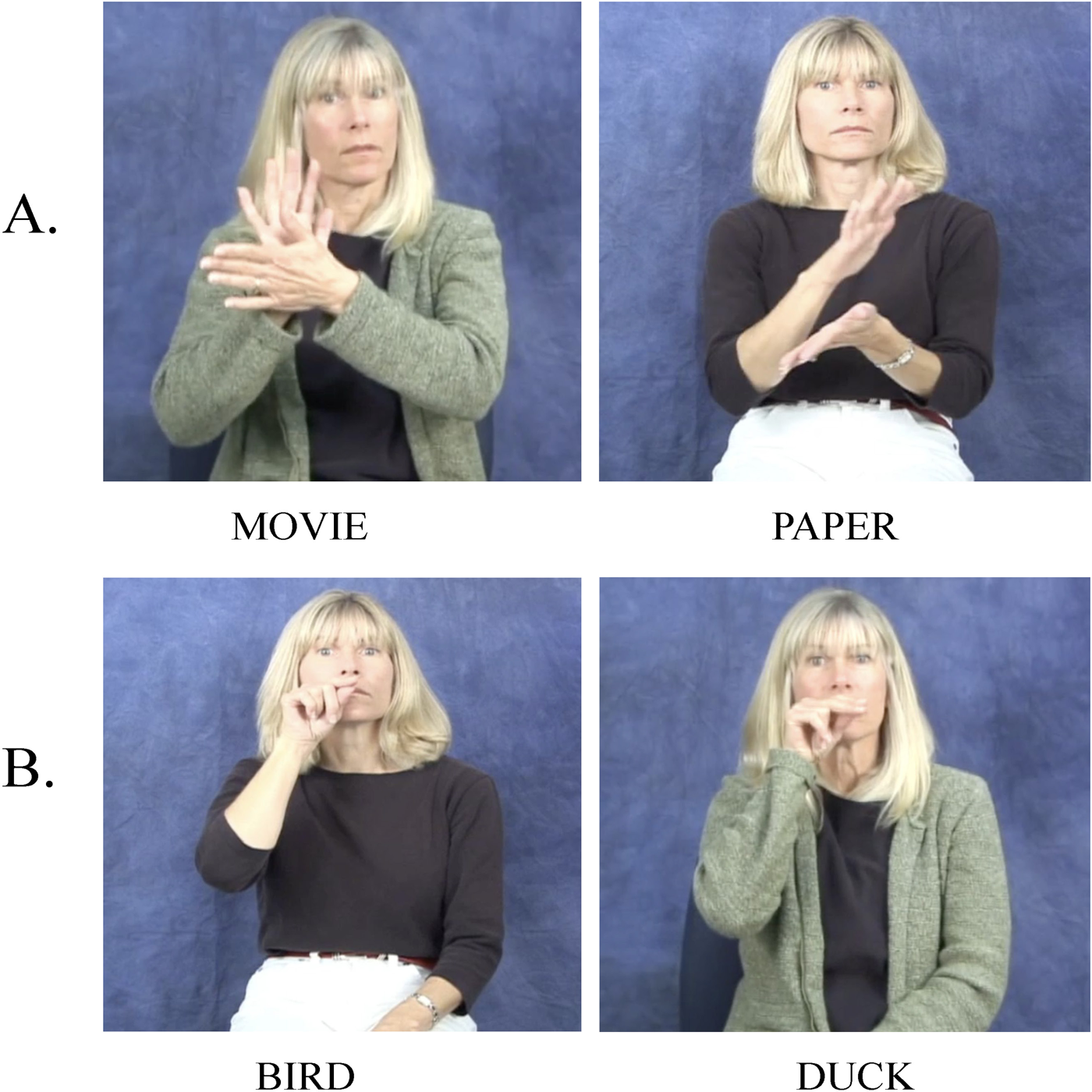

Clearly, bilinguals who know two unrelated spoken languages are affected by their inactive language, even in a monolingual context. Prior research on cross-language activation and non-selective lexical access has largely focused on unimodal bilinguals. However, language exists in multiple modalities. Understanding the nature of bilingualism requires us to consider the dynamics of language activation in bimodal bilinguals. Cross-language activation has been observed in several studies with bimodal bilinguals (deaf and hearing) that parallel the results with unimodal bilinguals. For example, both Shook and Marian (2012) and Giezen et al. (2015) carried out eye-tracking experiments with hearing American Sign Language (ASL)–English bilinguals that were parallel to the Marian and Spivey (2003) study with Russian–English bilinguals. The bimodal bilinguals were asked to click on the picture corresponding to a spoken English target word, and the picture display contained a distractor picture whose ASL translation was phonologically related to the ASL translation of the target word. Target–distractor pairs were considered phonologically related if the ASL signs shared at least two formational parameters (i.e., handshape, location or movement). See Figure 1 for examples of form-related pairs in ASL. Bilinguals looked significantly more at the ASL-related distractor picture than monolingual English speakers did. This result indicates that hearing an English word automatically activated the visual–manual representation of its ASL translation. Similar findings were also reported for hearing Spanish-lengua de signos española (LSE) bilinguals (Villameriel et al., 2019). These results provide strong evidence that cross-linguistic activation occurs even for languages that differ in modality.

Figure 1. (A) Semantically unrelated English word pair with phonologically related ASL translations. (B) A semantically related word pair with phonologically related ASL translations. Note: Images of ASL signs in this and subsequent figures are from the ASL-LEX database (Caselli et al., 2017; Sehyr et al., 2021).

Cross-language activation has also been observed in the written modality for deaf bimodal bilinguals for both children (Ormel et al., 2012; Villwock et al., 2021) and adults (Morford et al., 2011, 2014, 2019). Morford et al. (2011) designed a semantic relatedness task (parallel to the task used by Thierry & Wu, 2007) in which adult deaf signers were asked to determine whether two written English words were semantically related to each other. A subset of the word pairs was phonologically related when translated into ASL. There was an interaction between semantic relationship and ASL phonological relationship, such that signers were slower to correctly reject semantically unrelated pairs that shared ASL phonology (Figure 1A). Signers were also faster to accept semantically related pairs that shared ASL phonology in addition to their semantic relationship (Figure 1B). English monolinguals showed no effect of the translation manipulation. Similar cross-language activation effects using this paradigm have been found for deaf German-Deutsche Gebärdensprache (DGS) bilinguals (Kubus et al., 2015) and hearing Spanish-lengua de signos española (LSE) bilinguals (Villameriel et al., 2016). In addition, these results were replicated in an ERP experiment by Meade et al. (2017). Specifically, deaf ASL–English bilinguals were slower to reject semantically unrelated pairs that shared ASL phonology, and these pairs also elicited reduced N400 amplitudes, indicating a priming effect from the ASL relationship.

Several of these studies also found that deaf bilinguals with higher reading skill in English had a smaller effect of cross-language activation from ASL (Meade et al., 2017; Morford et al., 2011, 2014, 2019). This pattern is consistent with the notion in the bilingualism literature that as proficiency in the L2 increases, reliance on the L1 decreases. More proficient bilinguals access semantic information directly from their L2 without having to go through their L1 (Kroll & Stewart, 1994), and faster L2 comprehension may also allow less time for the L1 to influence L2 processing. Nevertheless, cross-language activation is observed for bilinguals across the proficiency spectrum, and occurs bidirectionally (Van Hell & Dijkstra, 2002). For unimodal and bimodal bilinguals alike, non-selective lexical access that activates information from both lexicons is hypothesized to be a general characteristic of bilingualism, rather than a strategy adopted solely by less-proficient speakers.

Together, the results from these studies indicate that cross-language activation occurs for both deaf and hearing bimodal bilinguals, and that sign language translations are activated for both spoken and written words. The vast majority of evidence for cross-language activation in bimodal bilinguals comes from studies that investigated single word processing. Unlike semantic judgment or picture selection tasks with isolated words, sentence reading can capture automatic activation effects without interference from particular task demands. It has been suggested that sentences may introduce enough linguistic context to mitigate the influence of the inactive language (van Heuven et al., 1998). However, some studies have shown that cross-language activation occurs even in sentence contexts (see Lauro & Schwartz, 2017 for a review). Most of these studies document cognate effects in sentences for unimodal bilinguals, where processing was facilitated in natural reading tasks when target words were cognates between the readers' two languages. However, the extent of cross-linguistic influence appears to depend on the semantic constraints of the sentence. Cognate facilitation, for example, appears in certain tasks (i.e., lexical decision primed by a previous sentence) and in less-constraining semantic environments (Schwartz & Kroll, 2006). In eye-tracking studies of natural reading, cognate facilitation effects are found for both early measures (e.g., first fixation duration [FFD]) and late measures (e.g., total reading time) for low-constraint semantic contexts (Duyck et al., 2007; Libben & Titone, 2009), but only early processing measures show cognate effects for high-constraining contexts (Libben & Titone, 2009).

Eye-tracking studies with French–English bilinguals offer additional support for early activation of words in the non-target (inactive) language during reading (Friesen et al., 2020a, 2020b; Friesen & Jared, 2012). Using a French–English homophone error paradigm, Friesen et al. (2020a) found that readers were sensitive to phonological similarity across languages (i.e., the homophones “mow” and “mot”), with homophone effects observed in early eye-tracking measures. Similarly, Friesen et al. (2020b) demonstrated that readers exhibited shorter fixations on homophone errors as opposed to spelling control errors, particularly on high-frequency words. The researchers concluded that cross-language phonology effects occur during early stages of word recognition during sentence processing, indicating that initial word recognition is language non-selective.

Due to the modality differences between spoken and signed languages, neither cognates nor cross-language homophones are possible because there are no shared phonological or orthographic representations.[Footnote 1] Furthermore, evidence from production studies suggests that bimodal bilinguals experience less cross-language competition and less need to suppress their inactive language because there is no competition between output modalities (see Emmorey et al., 2016 for a review). For bimodal bilinguals, cross-language activation needs to occur either at the semantic (conceptual) level or at the lexical level between word and sign representations. For example, reading the word “movie” can activate the ASL translation-equivalent MOVIE either through shared semantics or via lexical-level links (see Shook & Marian, 2012). Activation of MOVIE then spreads to phonologically related signs, such as PAPER (see Figure 1A), which could impact later processing of the English word “paper” within a sentence. For unimodal bilinguals, however, cross-language activation can also happen via a shared phonological level, as well as via lexical links or shared semantics.

Notwithstanding the modality difference between signed and spoken languages, there is some evidence for co-activation of sign phonology in sentence reading for deaf signers of Hong Kong Sign Language who are also proficient in Chinese, a logographic language (Chiu et al., 2016). In two studies using parafoveal preview paradigms, Pan et al. (2015) and Thierfelder et al. (2020) found that deaf signers had longer fixation durations on a target word when words with phonologically related sign translations were presented in the parafoveal preview window. This result indicates that signers accessed enough information from the preview to begin processing the phonology of the sign-related translation, which then slowed their processing of the word. These results are consistent with previous studies that found behavioral interference from co-activation effects (Meade et al., 2017; Morford et al., 2011). However, the Chinese sentence reading paradigms included display changes that introduce a processing conflict that interferes with the task of reading; the effect of co-activation in natural reading remains less clear. An unpublished pilot experiment conducted by Bélanger et al. (2013) found some evidence suggesting that an ASL relationship between a prime and target word embedded in a sentence can impact target word reading, but the direction of the effects was mixed and dependent on reading level. Less-skilled deaf readers showed facilitated processing of the target, but more skilled readers showed evidence of inhibited processing.

Eye-tracking is an ideal method to address the role of language co-activation in natural sentence reading because it captures online processing, removes the influence of experimental task demands such as lexical or semantic decisions, and can reveal automatic activation effects. Eye-tracking also allows us to investigate the time course of cross-language co-activation. By capturing both early (first fixation, gaze duration [GD]) and late (total reading time, regression probability) processing streams, we can distinguish how cross-language activation affects multiple stages of the reading process. Early measures capture initial, word-level processes at the lexical level, while later measures reflect context integration and full sentence processing (Rayner, 2009).

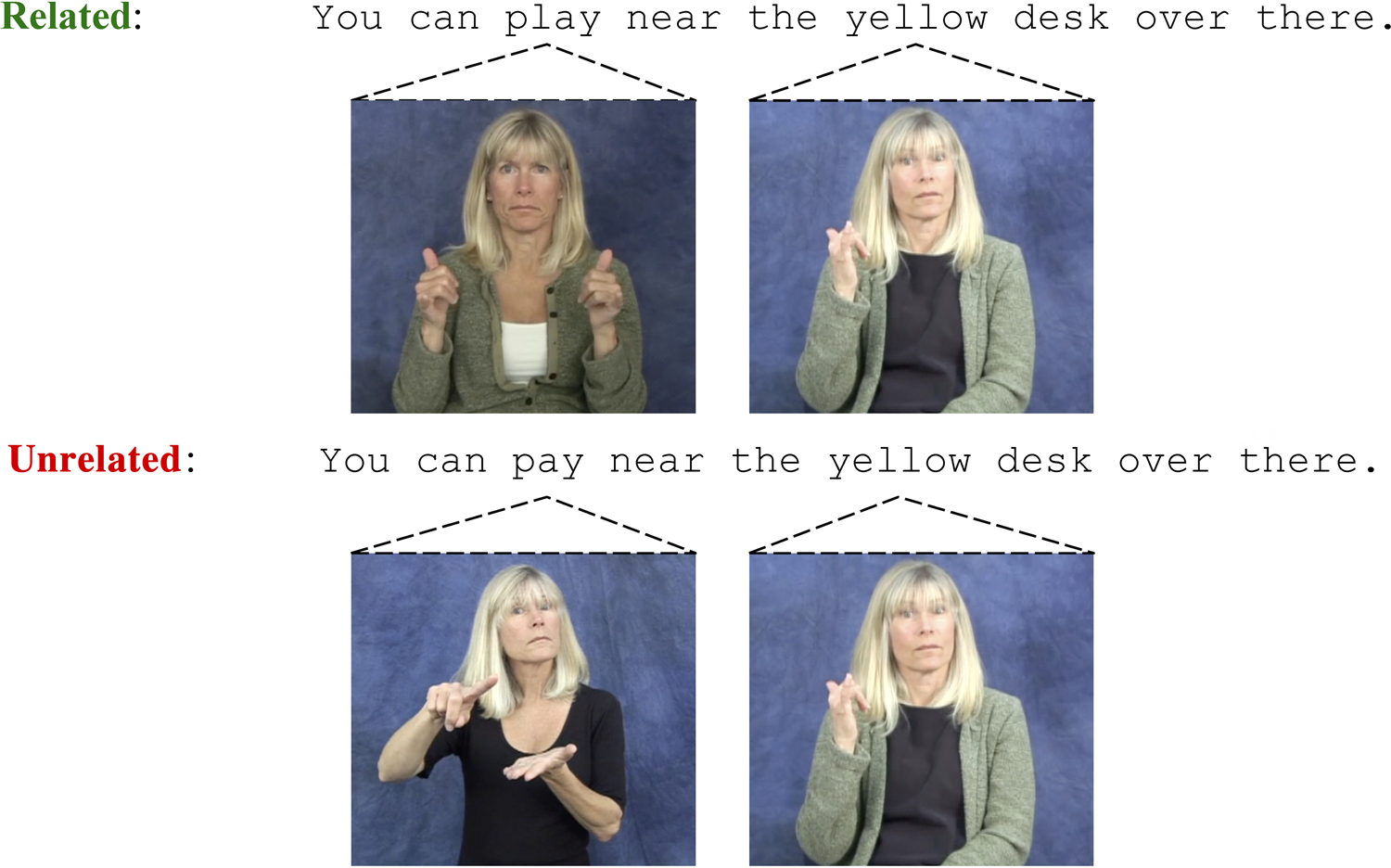

In the present study, we investigated whether cross-language activation of ASL occurs during natural reading of English sentences without task demands. English word pairs whose translations share ASL phonological parameters were embedded in sentence frames. To directly compare the influence of an ASL-related prime word to an ASL-unrelated prime word, additional sentences were created that were identical except that the prime word had an ASL translation that did not share any phonological parameters with the target word's translation (see Figure 2). Eye-tracking measures at the word level (first fixations, GDs, go-past time) and sentence level (total reading time, probability of regressions, skipping rates) were analyzed. FFD refers to the duration of a reader's fixation on a word only the first time they land on it (excluding later refixations). GD includes FFD and any additional refixations on the word before moving the eyes to any other word. Go-past time includes GD and any additional refixations after moving the eyes backward (regressing) but before moving forward past the word (Rayner, 1998; Rayner et al., 2006).
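The three word-level measures defined above can be made concrete with a short sketch. It assumes a simplified, hypothetical data format that is not part of the study: each fixation is a (word index, duration in ms) pair in temporal order, and the function name `word_level_measures` is illustrative only. The sketch also follows the common convention that go-past time includes time spent on earlier words during a regression, and it treats the target as not fixated in first pass if the eyes land to its right first; conventions on both points vary across labs.

```python
from typing import Dict, List, Optional, Tuple

Fixation = Tuple[int, int]  # (word index, duration in ms); hypothetical format


def word_level_measures(fixations: List[Fixation], target: int) -> Optional[Dict[str, int]]:
    """Compute FFD, GD and go-past time for one target word.

    Returns None if the target was not fixated during first pass
    (the eyes landed to its right first, or never landed on it).
    """
    # Find the first fixation on the target; give up if a later word comes first.
    first = None
    for i, (word, _) in enumerate(fixations):
        if word > target:
            return None
        if word == target:
            first = i
            break
    if first is None:
        return None

    # First fixation duration: only the first fixation on the target.
    ffd = fixations[first][1]

    # Gaze duration: consecutive fixations on the target before the eyes leave it.
    gd = 0
    i = first
    while i < len(fixations) and fixations[i][0] == target:
        gd += fixations[i][1]
        i += 1

    # Go-past time: everything from first landing until the eyes move
    # to the right of the target (assumed to include regressive fixations).
    go_past = 0
    j = first
    while j < len(fixations) and fixations[j][0] <= target:
        go_past += fixations[j][1]
        j += 1

    return {"ffd": ffd, "gd": gd, "go_past": go_past}


# Made-up fixation sequence; word 3 is the target.
example = [(1, 200), (2, 180), (3, 210), (3, 90), (1, 150), (3, 120), (4, 230)]
measures = word_level_measures(example, target=3)
```

In this made-up sequence the reader fixates the target twice, regresses to word 1, returns to the target, and then moves on, so the three measures diverge from one another.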

If co-activation of ASL phonology occurs during natural reading, we should observe a difference in eye movement patterns between sentences with ASL-related prime/target pairs and those with ASL-unrelated pairs. Previous research has found mixed evidence on whether co-activation facilitates or inhibits processing. If spreading activation from ASL sign translations facilitates reading processes, then we should observe shorter fixations on the target word, fewer regressions to the target and faster total reading times for sentences containing ASL-related pairs. On the other hand, if spreading activation from ASL interferes with reading processes, then longer target word fixations, more regressions and longer reading times are predicted for sentences with ASL-related prime words. Further, whether language co-activation effects are observed for word-level eye-tracking measures or sentence-level measures will provide insight into whether language co-activation impacts lexical access or sentence comprehension processes. Finally, in line with previous studies of ASL co-activation (Meade et al., 2017; Morford et al., 2014, 2019), we predict that less-skilled deaf readers will show a stronger ASL co-activation effect (measured as larger differences between the related and unrelated prime conditions).

2. Methods

2.1 Participants

We recruited 25 severely to profoundly deaf adults, but data from two participants were excluded due to chance performance on comprehension questions indicating that they were either not paying sufficient attention or did not understand the sentences. Twenty-three participants were therefore included in the final analysis (10 women; mean age = 35, SD = 9 years), all of whom were proficient signers of ASL. Eleven were native signers (born into a signing family) and 12 were exposed to ASL before age 8 (mean age of ASL acquisition = 1.4 years, SD = 2 years). All had normal or corrected-to-normal vision and none reported reading or cognitive disabilities.

All participants were naïve to the ASL phonological manipulation of the experiment. Prior to the experiment, all participants provided informed consent according to San Diego State University Institutional Review Board procedures.

2.2 Language assessments

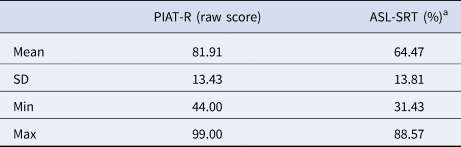

Participants' reading skill was measured using the Peabody Individual Achievement Test – Reading Comprehension – Revised (PIAT-R; Markwardt, 1989): participants read single sentences then choose a picture out of four possible options that best matches the content of the sentence. Participants' proficiency in ASL was measured using the ASL Sentence Repetition Task (ASL-SRT; 35 sentence version; Supalla et al., 2014): participants view sentences in ASL that increase in complexity and are asked to repeat the sentence verbatim. Reading and ASL scores are summarized in Table 1.

Table 1. Summary of language assessment scores

a For comparison, the average ASL-SRT score for our lab's database of 125 native signers is 63.7% accuracy.

2.3 Stimuli

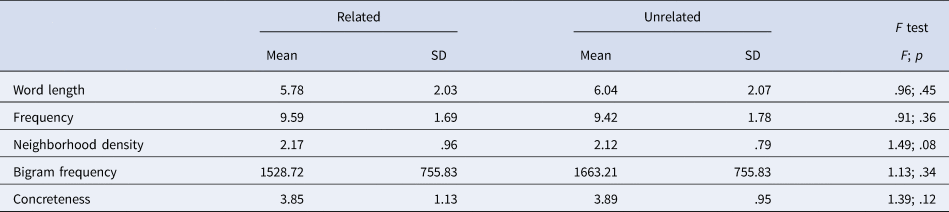

The prime–target stimuli consisted of 50 English word triplets made up of one target word and two prime words. One prime word was phonologically related to the target when translated into ASL (sharing at least two phonological parameters) and the other prime word was unrelated to the target. The related pairs varied in which two parameters they shared (handshape, location or movement), but most pairs (82%) shared at least location (place of articulation). The related pairs were selected from previously compiled lists of prime–target English words that had phonologically related ASL translations used by Meade et al. (2017). For each pair, an unrelated prime was chosen that did not share any ASL parameters with the target but shared a number of lexical characteristics with the related prime. Specifically, the unrelated prime words were matched to the related prime words with respect to first letter, part of speech, word length (±1 letter), frequency, English neighborhood density and concreteness using the English Lexicon Project database (Balota et al., 2007) and concreteness ratings from Brysbaert et al. (2014) (see Table 2 for a summary). Neither prime was semantically related to the target. Furthermore, none of the word primes were phonologically or orthographically similar to their targets in English.
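The relatedness criterion for a triplet can be sketched as a simple check: a related prime shares at least two of the three parameters with the target, and an unrelated prime shares none. The representation of a sign as a dictionary of parameter codes, the function names, and the codes themselves (`H1`, `L1`, and so on) are placeholders introduced here for illustration; they are not real ASL codings and not the coding scheme used in the study.

```python
from typing import Dict

PARAMETERS = ("handshape", "location", "movement")

Sign = Dict[str, str]  # parameter name -> placeholder code


def shared_parameters(sign_a: Sign, sign_b: Sign) -> int:
    """Count the formational parameters on which two signs have the same value."""
    return sum(1 for p in PARAMETERS if sign_a[p] == sign_b[p])


def is_related_prime(prime: Sign, target: Sign, minimum: int = 2) -> bool:
    """Related primes share at least `minimum` parameters with the target."""
    return shared_parameters(prime, target) >= minimum


def is_unrelated_prime(prime: Sign, target: Sign) -> bool:
    """Unrelated primes share no parameters with the target."""
    return shared_parameters(prime, target) == 0


# Placeholder codings for one hypothetical triplet.
target_sign = {"handshape": "H1", "location": "L1", "movement": "M1"}
related_sign = {"handshape": "H2", "location": "L1", "movement": "M1"}
unrelated_sign = {"handshape": "H3", "location": "L2", "movement": "M2"}

triplet_ok = is_related_prime(related_sign, target_sign) and is_unrelated_prime(
    unrelated_sign, target_sign
)
```

A prime that shares exactly one parameter with the target satisfies neither check, so it would not qualify for either condition under this reading of the criterion.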

Table 2. Descriptive statistics for the ASL-related and -unrelated word primes

Following compilation of the word triplets, one prime and its corresponding target were embedded into sentences, separated by 6–10 characters (mean = 7.8, SD = 1.1) (1.7–2.9 degrees of visual angle). For each triplet, two sentences were created: one using the related prime, and one using the unrelated prime in the same position in the sentence. The sentences were identical otherwise. See Figure 2 for an example sentence and word pairs.
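The correspondence between the character separation and the visual angle range can be checked with simple arithmetic, using the approximate value of 3.5 letters per degree reported in Section 2.5. The function name below is illustrative, and the conversion assumes the monospaced font makes every character the same width.

```python
LETTERS_PER_DEGREE = 3.5  # approximate value reported in Section 2.5


def chars_to_degrees(n_chars: float, letters_per_degree: float = LETTERS_PER_DEGREE) -> float:
    """Convert a distance in characters to degrees of visual angle."""
    return n_chars / letters_per_degree


# Minimum and maximum prime-target separation (6 and 10 characters).
separation_range = tuple(round(chars_to_degrees(n), 1) for n in (6, 10))
```

With these inputs the rounded range matches the 1.7–2.9 degrees stated in the text.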

Figure 2. Example of sentences containing ASL-related (top) and ASL-unrelated (bottom) word pairs.

Translation equivalents were deemed consistent if at least 75% of a separate group of 16 deaf signers produced the same sign for an English word (from Meade et al., 2017). For additional words not included in the Meade et al. (2017) experiment, three online dictionaries and databases (SigningSavvy.com, Lifeprint.com, ASL-LEX: https://asl-lex.org) were used to determine the “standard” (expected) English translation of the sign. An English translation was considered standard if it was consistent across the three online ASL dictionaries. Because ASL signs have regional and generational variants, translations of the signs by the actual participants were collected after they completed the eye-tracking study to ensure that they used the expected sign (see Section 2.5 for details).
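The 75% consistency criterion amounts to checking whether the most frequent translation reaches the threshold. The sketch below assumes each signer's response has already been coded as a label; the labels `VARIANT_A` and `VARIANT_B` and the function name are placeholders, not data from the norming group.

```python
from collections import Counter
from typing import List


def is_consistent(translations: List[str], threshold: float = 0.75) -> bool:
    """True if the most frequent translation was produced by at least
    `threshold` of the signers."""
    _, count = Counter(translations).most_common(1)[0]
    return count / len(translations) >= threshold


# Made-up responses from 16 signers: 12 of 16 is exactly 75%.
responses = ["VARIANT_A"] * 12 + ["VARIANT_B"] * 4
consistent = is_consistent(responses)
```

Because the criterion is "at least 75%", 12 of 16 matching responses is the smallest count that passes.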

The target words in the two sentences were normed for predictability to ensure that neither the related nor unrelated prime would allow participants to consistently predict the target word. Norming was done using an MTurk survey of 20 English speakers, who read the sentences (with both the related and unrelated primes) with the target word replaced by a blank and filled in the word they thought would best fit. Sentence frames were adjusted if more than 50% of respondents correctly predicted the target word (n = 4), resulting in 100 sentences that were not predictable in either the related or unrelated conditions (1.5% and .7% correctly predicted, respectively).
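The predictability criterion can be expressed as a cloze probability per sentence frame, with frames flagged for revision when more than half of the respondents supply the target. This is a minimal sketch under assumed conventions: responses are matched after trimming whitespace and lowercasing, and the function names and example responses are invented for illustration.

```python
from typing import List


def cloze_probability(responses: List[str], target: str) -> float:
    """Proportion of respondents who filled the blank with the target word."""
    normalized = [r.strip().lower() for r in responses]
    return normalized.count(target.strip().lower()) / len(normalized)


def needs_revision(responses: List[str], target: str, threshold: float = 0.5) -> bool:
    """Flag a sentence frame when more than `threshold` of respondents predict the target."""
    return cloze_probability(responses, target) > threshold


# Made-up responses from 20 respondents for a frame whose target is "paper".
predictable = ["paper"] * 11 + ["table"] * 9
borderline = ["Paper "] * 10 + ["table"] * 10

flag_predictable = needs_revision(predictable, "paper")
flag_borderline = needs_revision(borderline, "paper")
```

Exactly 50% correct predictions does not trigger revision here, since the stated rule is "more than 50%".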

We also controlled overall sentence plausibility using another MTurk survey (with 20 different participants) to ensure that neither type of prime would make the sentences more plausible (or implausible) than the other. Participants rated sentences for plausibility using a Likert 1–7 scale (1: very implausible, 7: very plausible). No significant effect of prime relatedness was found for plausibility (related: M = 5.02, SD = .92; unrelated: M = 4.46, SD = 1.06; F = .76, p = .17).

2.4 Apparatus

Eye movement data were collected using an Eyelink 1000+ in a desktop configuration (SR Research, Ontario, Canada) at 1000 Hz. Viewing was binocular, but only right eye movements were recorded.

2.5 Procedure

Participants were told that they would read sentences on a computer monitor and occasionally respond to yes/no comprehension questions. All instructions were given in ASL by either a fluent hearing signer or a deaf native signer. Using a chin and head rest to minimize movements, participants sat in front of the computer screen as they read sentences in 14-point black Courier New font on a light gray background. Sentences were presented on a 24″ LCD screen (resolution: 768 × 1024) 60 cm from the participants' eyes, providing about 3.5 letters/degree of visual angle. Before beginning the experiment, participants completed a standard three-point horizontal calibration and validation at a threshold of <0.3. Following successful calibration, participants completed 12 practice trials to familiarize themselves with the task; these practice trials did not include any of the experimental sentences. At the beginning of each trial, participants fixated on a black gaze box situated at the left edge of the screen, which triggered the sentence to appear. Participants were instructed to read the 100 experimental sentences silently at their normal speed. All participants saw all sentences. In order to maximize the distance between sentences with the same target word and reduce any repetition effects, the experiment was blocked by condition. Order of the conditions was counterbalanced such that half of the participants saw the ASL-related block of sentences first, and the other half saw the ASL-unrelated sentences first. To ensure they were reading for comprehension, participants also used a gamepad to answer yes/no questions about the preceding sentence that appeared after 20% of the trials. For example, the question that would have followed the sentence “You can play near the yellow desk over there” was “Was the desk green?” Questions were randomly distributed throughout the experiment, and the participant was provided feedback on their answer with either a green circle for a correct answer or a red circle for an incorrect answer.

After completing the eye-tracking study, participants performed a translation task in which they translated the English prime and target words into ASL to determine whether they produced the expected sign translations. If a participant provided an unexpected translation for the word that did not maintain the phonological overlap in ASL, the data for that trial (and for the corresponding trial in the unrelated condition) were excluded from analysis for that participant, resulting in 4.7% of trials being excluded. If the participant's alternate translation maintained the phonological overlap of the expected translation, the data were retained. Participants were also asked debriefing questions (i.e., “What did you notice about the words you translated?”; “What did you notice about the sentences you read?”) to determine whether they noticed the phonological manipulation. No participant reported being aware of the phonological overlap in the translations until it was pointed out to them.
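The exclusion rule removes trials pairwise: when a participant's translation breaks the phonological overlap for an item, both the related and the unrelated trial for that item are dropped for that participant. The sketch below assumes a hypothetical trial record with `participant`, `item` and `condition` fields; these field names, the function name and the example records are not taken from the study's data files.

```python
from typing import Dict, List, Set, Tuple

Trial = Dict[str, object]


def exclude_trials(trials: List[Trial], broken_overlap: Set[Tuple[str, int]]) -> List[Trial]:
    """Drop every trial (in either condition) whose (participant, item) pair
    was flagged because the participant's translation lost the ASL overlap."""
    return [t for t in trials if (t["participant"], t["item"]) not in broken_overlap]


# Made-up records: participant P01 gave an unexpected translation for item 7.
trials = [
    {"participant": "P01", "item": 7, "condition": "related"},
    {"participant": "P01", "item": 7, "condition": "unrelated"},
    {"participant": "P01", "item": 8, "condition": "related"},
    {"participant": "P02", "item": 7, "condition": "related"},
]
kept = exclude_trials(trials, broken_overlap={("P01", 7)})
```

Alternate translations that preserve the overlap are simply never added to the flagged set, so those trials are retained, as described above.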

2.6 Analysis

Continuous eye-tracking data (fixation durations, GDs, etc.) were analyzed using linear mixed-effects models (LMEs), with items and participants as random effects and prime condition (related or unrelated) as a fixed effect. Binomial data (skips, regressions, refixation rates and accuracy) were analyzed with generalized (logistic) LMEs (GLMEs). Models were fitted with the lmer (for LMEs) and glmer (for GLMEs) functions from the lme4 package (Bates et al., 2009) in the R statistical computing environment. Fixed effects were deemed reliable if |t| or |z| >1.96 (Baayen et al., 2008).
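As a reading aid, the model for a continuous measure can be written out explicitly. The text specifies only that participants and items were random effects and that prime condition was a fixed effect; the random-intercepts-only structure below is therefore an assumption, and the symbols are notational choices introduced here rather than taken from the source.

```latex
\[
y_{pi} = \beta_0 + \beta_1\,\mathrm{related}_{pi} + u_p + w_i + \varepsilon_{pi},
\qquad
u_p \sim \mathcal{N}(0, \sigma_u^2),\quad
w_i \sim \mathcal{N}(0, \sigma_w^2),\quad
\varepsilon_{pi} \sim \mathcal{N}(0, \sigma^2)
\]
\[
\text{reliable effect of prime condition if}\quad
\left| \hat{\beta}_1 / \mathrm{SE}(\hat{\beta}_1) \right| > 1.96
\]
```

Here $y_{pi}$ is the measure for participant $p$ on item $i$, and $\mathrm{related}_{pi}$ is 1 in the ASL-related condition and 0 otherwise. For the binomial measures, the same linear predictor would be placed on the logit scale and the ratio evaluated as a z value.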

3. Results

Participants answered the comprehension questions with relatively high accuracy (M = 80.4%; SD = 10), indicating that they were attending to and comprehending the text.

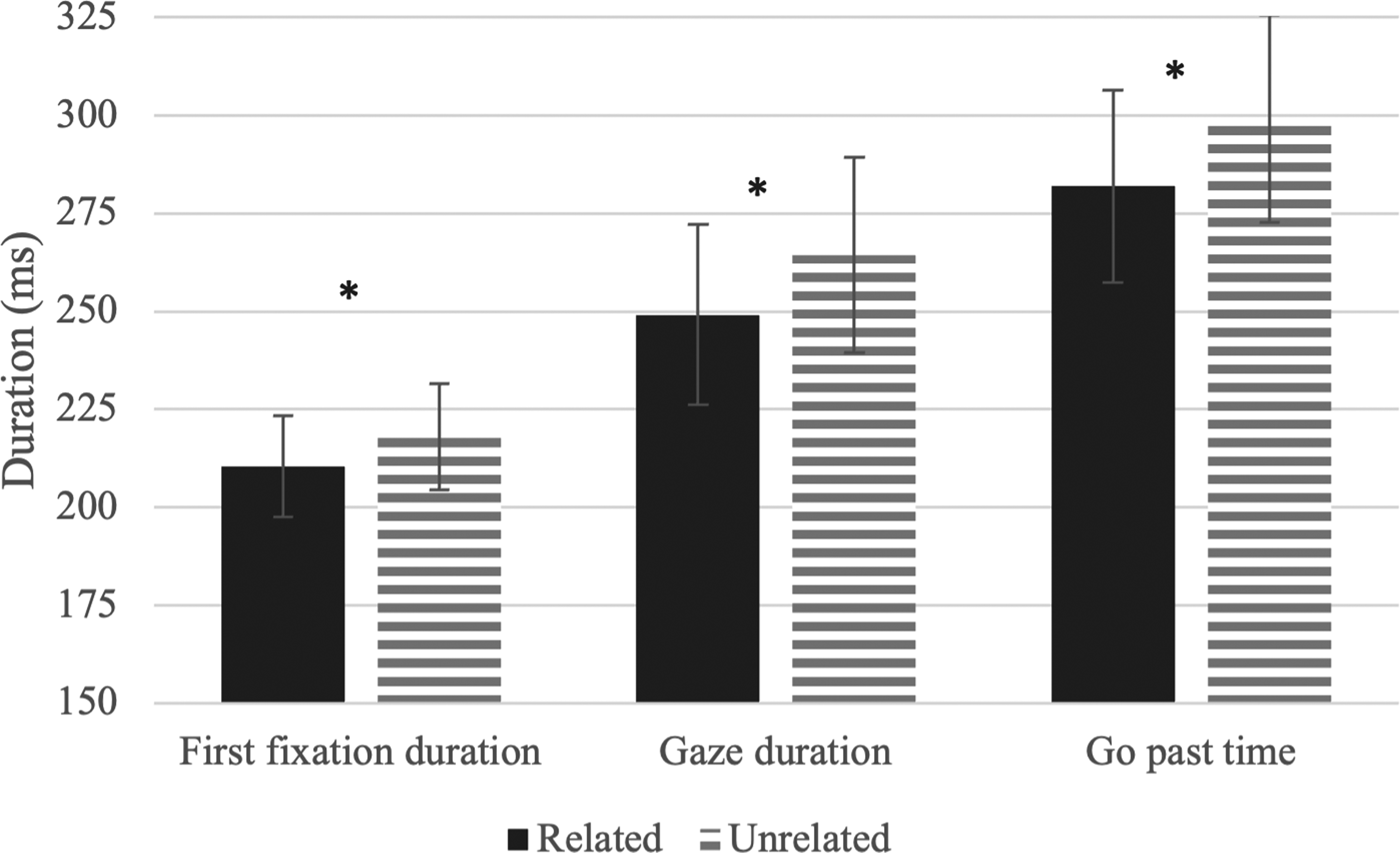

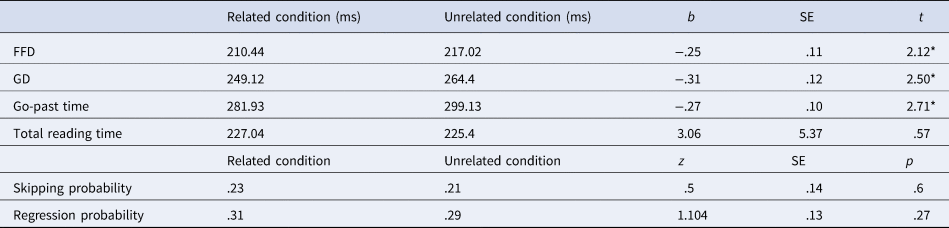

As illustrated in Figure 3, measures of early processing revealed effects of ASL co-activation.[Footnote 2] FFDs, GDs and go-past time for the target word were significantly shorter when the target was preceded by an ASL-related prime word than when it was preceded by an unrelated prime word (see Table 3 for descriptive statistics).

Figure 3. Main effect of prime condition on early processing measures. Error bars reflect 95% confidence interval. Asterisks indicate a significant difference (|t| > 1.96).

Table 3. Descriptive statistics for eye-tracking measures

There were no main effects of prime condition for measures of later processing; participants had similar regression probabilities (z = .27), skipping rates (z = .6) and total reading time (t = .57) on the target word in both conditions.

3.1 Correlations between ASL co-activation and reading skill

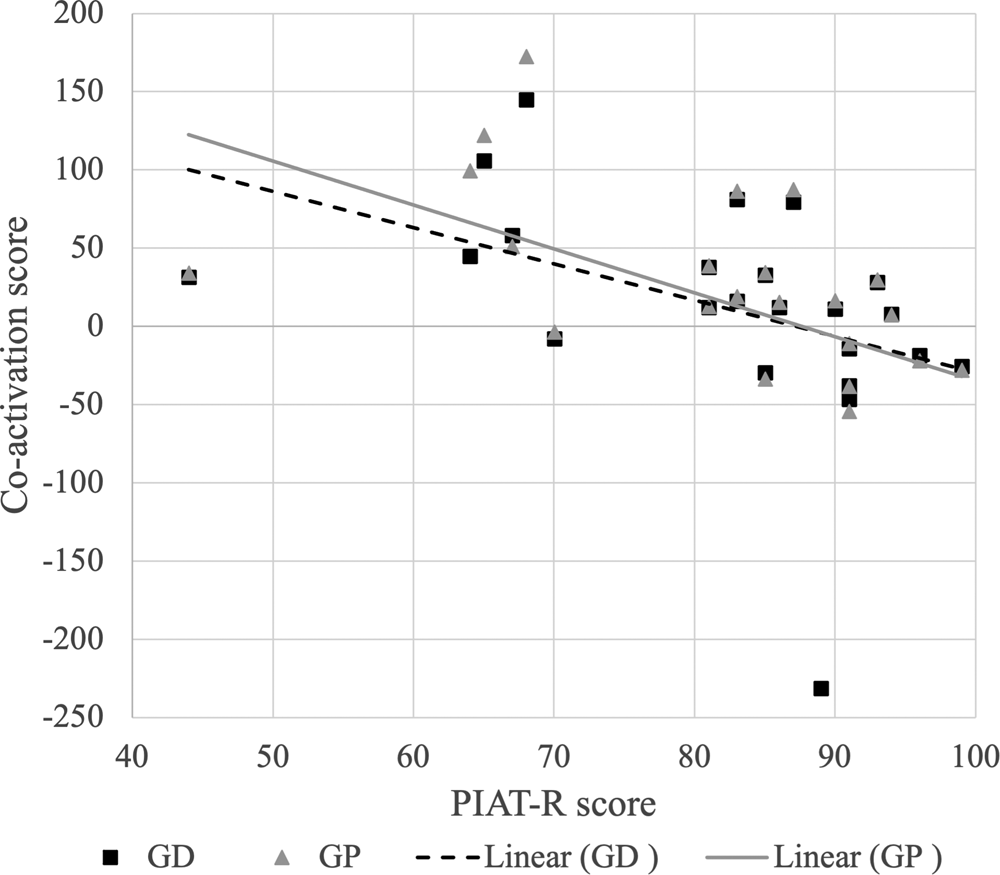

Because fixation durations are known to be affected by reading skill, we analyzed a measure of the difference between the conditions for each participant, rather than the fixation durations themselves. This method provides a baseline for each participant (i.e., their fixation durations in the unrelated condition),[Footnote 3] which is compared to their fixation durations in the related condition to determine whether they were affected by the ASL translations.

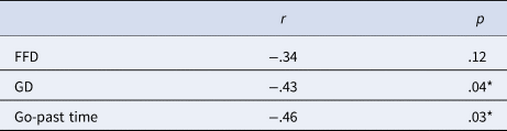

In this way a co-activation score was calculated for each participant by subtracting the average fixation duration in the related condition from the average fixation duration in the unrelated condition. Thus, a positive co-activation score reflects shorter fixations in the related condition and a negative score reflects shorter fixations in the unrelated condition. We calculated correlations between co-activation scores of the measures that showed a significant main effect and reading comprehension ability (PIAT-R raw scores; see Section 2). We conducted significance tests on each correlation coefficient. Co-activation scores for GD and go-past time were both significantly correlated with reading skill – poorer readers exhibited greater ASL co-activation (see Table 4; Figure 4).
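The co-activation score and its correlation with reading skill can be sketched as follows. The source does not state which significance test was applied to the correlation coefficients; the t statistic shown here (with n − 2 degrees of freedom) is the usual test for a Pearson correlation and is an assumption. All function names and numbers are illustrative, not data from the study.

```python
from math import sqrt
from statistics import mean
from typing import Sequence


def coactivation_score(unrelated_ms: Sequence[float], related_ms: Sequence[float]) -> float:
    """Mean fixation duration in the unrelated condition minus the mean in the
    related condition; positive values mean shorter fixations when related."""
    return mean(unrelated_ms) - mean(related_ms)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r: float, n: int) -> float:
    """t statistic for testing r against zero (assumed test; df = n - 2)."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)


# Made-up durations (ms) for one participant.
score = coactivation_score(unrelated_ms=[250, 270], related_ms=[230, 250])

# Made-up reading scores and co-activation scores for three participants.
r = pearson_r([1, 2, 3], [6, 4, 2])
```

A negative correlation between reading scores and co-activation scores corresponds to the pattern described in the text, in which poorer readers show larger co-activation effects.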

Table 4. Summary of r values between co-activation scores and PIAT-R scores

* p < .05.

Figure 4. Correlation between reading skill and ASL co-activation scores for GD and go-past times (GP).

3.2 Correlation between ASL co-activation and ASL skill

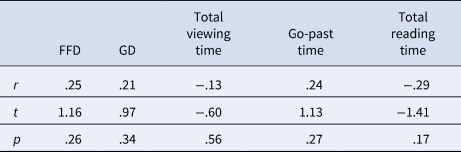

There were no significant correlations between ASL skill (as measured by the ASL-SRT) and co-activation scores for any of the eye-tracking measures (Table 5).

Table 5. Summary of non-significant correlations between ASL-SRT raw scores and co-activation

4. Discussion

The current study investigated the effect of automatic cross-language activation of ASL on English sentence reading for deaf readers using eye-tracking. Sentences were constructed that contained a target word and a prime word that either shared ASL phonological parameters (related) or did not share any parameters (unrelated) in their translations. Significant facilitation from the presence of an ASL phonological relationship was observed in early reading measures (FFDs, GDs and go-past time), but not in later reading measures (total reading time, regression probability). The degree of this facilitation was negatively correlated with reading skill such that better readers showed a smaller degree of facilitation in two of the early reading measures (GD and go-past time).

Previous research on cross-language activation produced varied results regarding whether co-activation facilitates or inhibits processing of the target language. In visual world paradigms, a phonological relationship in the words' translations draws participants' attention to incorrect distractor items (Giezen et al., 2015; Marian & Spivey, 2003). In semantic decision paradigms (Meade et al., 2017; Morford et al., 2011), a mismatch between the semantic relationship and phonological relationship (i.e., semantically unrelated but phonologically related, see Figure 1A) results in slower reaction times for semantic relatedness decisions. However, ERP results with the same task tell a different story; phonological relationships between word translations result in reduced N400 amplitudes elicited by the related pairs, indicating facilitation (Meade et al., 2017; Thierry & Wu, 2007). In the current study, phonological relationships in the inactive language facilitated early processing of a target word, resulting in shorter fixations during first-pass reading. This priming effect occurred even with several words intervening between the prime and target, unlike in the aforementioned ERP and behavioral studies which presented isolated word pairs and required an overt decision or task. We suggest that without the influence of a particular task, the automatic effects of cross-language activation become clearer. Deaf readers were able to access and process the target word more quickly when preceding words shared ASL phonology in their translations.

Sentence reading studies with unimodal bilinguals have shown that the effect of cross-language activation (e.g., cognate facilitation) can be reduced by constraining the semantic context (Elston-Güttler et al., 2005; Libben & Titone, 2009; Van Assche et al., 2012). By creating a context that biased the participant toward the word in the target language, the impact of automatic cross-language activation was diminished. In the current study, all sentences were non-constraining; cloze task norming ensured that target words were not predictable in either condition. Future research could manipulate semantic context to determine whether high-constraint sentences reduce effects of co-activation as they do for unimodal bilinguals. However, given that those experiments focused on cognate facilitation, it is unclear whether we would in fact observe a similar pattern without the possibility of a cognate relationship between English and ASL, i.e., when cross-language activation can only occur at the lexical-semantic level and not at the phonological/orthographic level. In addition, it is possible that the need to suppress activation of the non-target language is less for bimodal bilinguals due to a lack of form-level competition (see Emmorey et al., 2016; Meade et al., 2017). If so, robust language co-activation might be observed for ASL–English bilinguals even with highly constraining sentence contexts.

In the current study, co-activation effects were found only in early reading measures (FFDs, GDs and go-past times), indicating that the influence of ASL co-activation occurs during lexical access of the target English word. This result is consistent with previous findings that cross-language effects arise early in word recognition. For example, Duyck et al. (2007) concluded that early cross-language lexical interactions drove the cognate facilitation effects observed in early eye-tracking measures (FFDs, GDs) during sentence reading. Together, these results indicate that during natural reading, bilinguals' access to linguistic information in the non-target language likely influences lexical-level processing, rather than influencing whole-sentence semantic processing in the target language.

It is unlikely that deaf readers actively translated the English sentences into ASL as a reading strategy because no participant reported being aware of the translation manipulation until it was pointed out to them following the debrief translation task. In contrast, Meade et al. (2017) identified a subgroup of deaf bimodal bilinguals who reported being aware of the relationship between the ASL translations of the English words in the prime–target word pairs presented for semantic-relatedness decisions. Critically, the effects of ASL phonology were weaker for this subgroup than for the subgroup that was unaware of the manipulation. In the present study, participants may have been less likely to notice the relationship because the prime and target words were never presented as an isolated pair until the post-experiment translation task. Participants would therefore not be aware of which words were critical items while they were reading the sentences. Thus, we argue that the ASL co-activation effects observed here during natural sentence reading reflect automatic spreading activation, rather than an overt translation effect.

Finally, we found a significant correlation between reading skill and the degree of co-activation for GD and go-past time measures such that weaker readers (lower PIAT-R scores) demonstrated larger ASL co-activation effects. This result aligns with previous research reporting that deaf signers with weaker English skills showed a greater degree of ASL co-activation, measured by reaction times (Morford et al., 2014) and ERPs (Meade et al., 2017). This pattern is consistent with models of bilingualism that predict a stronger link to L1 translations for less-proficient bilinguals (Revised Hierarchical Model; Kroll & Stewart, 1994). ASL co-activation facilitated the processing of the individual English words, indicating that ASL co-activation is not necessarily detrimental for weaker readers and may even support reading. This finding is perhaps not surprising, given the strong positive relationship between ASL skill and reading skill. In studies of both adults and children, better signers tend to be better readers (Chamberlain & Mayberry, 2008; McQuarrie & Abbott, 2013; Sehyr & Emmorey, 2022).

In summary, our findings confirm the presence of automatic activation of ASL signs during English sentence reading for a relatively small sample of skilled deaf readers. We found that automatic activation of ASL phonology facilitated access to English words during natural reading that did not involve additional task demands. Facilitation was observed in early eye-tracking measures but not in later measures, indicating that co-activation occurs at lexical access and does not influence sentence-level comprehension processes. Furthermore, there was a significant correlation between reading skill and ASL co-activation. Less-skilled deaf readers showed a larger co-activation effect, which is consistent with models of bilingualism that predict a stronger influence from L1 for less-proficient bilinguals (Kroll & Stewart, 1994). While future research should confirm the findings with a larger sample size in order to strengthen the claims of the present study, these results provide support for the idea that ASL ability is connected to deaf adults' reading experience, and further illustrate the nature of cross-language activation for bimodal bilinguals.

Data availability statement

Stimuli, analysis scripts, and raw data are available on the Open Science Framework at the following link: https://osf.io/exfw3/

Acknowledgments

We extend our thanks to Cindy Farnady for her assistance with recruitment and data collection, and to Dr. Brittany Lee for her assistance with experiment and analysis programming.

Funding statement

This work was supported by grants from the National Science Foundation (BCS-2120546; BCS-1651372).

Competing interests

The authors have no competing interests to disclose.