As students transition from primary to secondary grades, they are expected to be no longer ‘learning to read’ but instead ‘reading to learn’ (Wanzek et al., 2010). Older students are required to read increasingly difficult texts to build domain-specific knowledge, and those who lack foundational reading skills are likely to experience considerable difficulty acquiring more sophisticated knowledge and deeper understanding (Vaughn et al., 2015). Students with limited reading skills are likely to experience a lack of success, may begin to disengage from school and may eventually drop out (Wanzek et al., 2010).

With one in five Australian adults demonstrating low literacy or numeracy skills, or both, it is evident that the effects of poor reading skills are not limited to young people in educational settings (OECD, 2017). Individuals who lack the foundational reading skills needed to cope equitably with life and work are more likely to be unemployed or to hold low-paying, unpleasant or insecure jobs (OECD, 2017). Furthermore, poor literacy is a significant contributor to inequality and increases the likelihood of poor physical and social-emotional health, workplace accidents, participation in crime and welfare dependency (e.g., Cree, Kay, & Steward, 2022; Georgiou & Parrila, 2022). As Castles et al. (2018) noted, reading is the basis for the acquisition of knowledge, cultural engagement, participation in a democracy and success in the workplace. The jobs for which we are preparing today’s adolescents require the 21st-century literacy skills of locating relevant information in a constant overflow of texts and messages from sources of variable credibility, and of interpreting, analysing and synthesising that information for use (OECD, 2021; Parrila & Georgiou, in press). None of this is possible without the foundational reading skills we expect students to master.

At the same time, we know that many students do not master those foundational skills. For example, results from the 2021 National Assessment Program – Literacy and Numeracy (NAPLAN) tests indicate that, of the 89.7% of Australian Year 9 students who participated, 8.6% scored below the national minimum standard in reading (Australian Curriculum, Assessment and Reporting Authority, n.d.-a). Students who have not met the national minimum standard have not achieved the learning outcomes expected for their year level (Australian Curriculum, Assessment and Reporting Authority, n.d.-b). Given the significant number of students who cannot read at the level that this standard sets as the minimum required for functional literacy, it is imperative that educators implement the most effective interventions available to remediate these difficulties.

Findings from international studies over the past several decades indicate that even when a strong general education is provided to students who struggle with reading, they are unlikely to receive appropriate special education services to address their reading difficulties (see Vaughn & Wanzek, 2014, for a review). In Australian schools, educators report low confidence in meeting the needs of struggling readers and have difficulty distinguishing between evidence-based and non-evidence-based practices for students with reading difficulties (Serry et al., 2022). Furthermore, Australian secondary teachers do not perceive that adequate supports and strategies are in place to meet the needs of struggling readers in their schools (Merga et al., 2021). Vaughn and Wanzek (2014) state that the majority of students with reading difficulties spend most of their learning time completing independent work that does not provide the feedback they require. These students also spend considerable time learning passively in large groups, with instruction that is not differentiated for their needs, and their opportunities to engage in explicit reading instruction are generally minimal. Overall, there is a strong indication that the quality of reading instruction for struggling readers in most classrooms is inadequate (Vaughn & Wanzek, 2014). As some patterns of reading failure in adolescents can be attributed to inadequate literacy instruction during the primary school years, de Haan (2021) stresses the urgency of providing evidence-based reading instruction for struggling secondary readers as early as possible.

In response to the need for evidence-based reading instruction, a number of research syntheses have summarised the findings of intervention studies for older students (e.g., Flynn et al., 2012; Scammacca et al., 2015; Solis et al., 2012; Steinle et al., 2022). A meta-analysis of 36 studies of reading interventions for struggling readers in Grades 4 to 12 indicates that such interventions can be effective for these students (Scammacca et al., 2015). The interventions targeted word study, fluency, vocabulary, reading comprehension or multiple components, and the analysis yielded a mean Hedges’s g of 0.49, indicating a moderate positive effect (Scammacca et al., 2015). A meta-analysis of 10 studies that provided reading interventions for struggling readers aged 9 to 15 years also suggests that intervention should not be neglected for older readers (Flynn et al., 2012). Moderate mean effect sizes were found on measures of word identification (g = 0.41), decoding (g = 0.43) and comprehension (g = 0.73), whereas the mean effect for fluency was small and negative (g = −0.29; Flynn et al., 2012). The synthesis by Solis et al. (2012) of reading comprehension interventions for students with a learning disability in Grades 6–8 revealed medium to large effect sizes on researcher-developed comprehension measures and medium effect sizes on standardised comprehension measures.
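For readers less familiar with the metric, Hedges’s g is a standardised mean difference: the difference between the treatment and control means divided by the pooled standard deviation, multiplied by a small-sample correction. The minimal sketch below assumes the common approximation J = 1 − 3/(4df − 1) with df = n_t + n_c − 2; the function name and the group statistics are hypothetical and are not drawn from any study discussed here.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardised mean difference (treatment minus control) with the
    approximate small-sample correction J = 1 - 3 / (4 * df - 1)."""
    df = n_t + n_c - 2
    # Pooled standard deviation across the two groups
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd  # uncorrected standardised difference
    j = 1 - 3 / (4 * df - 1)           # small-sample correction factor
    return j * d


# Hypothetical groups of 30 whose means differ by half a standard deviation
print(round(hedges_g(105, 15, 30, 97.5, 15, 30), 2))  # 0.49
```

A negative value, such as the fluency estimate reported by Flynn et al. (2012), means that the comparison group scored higher than the intervention group on that measure.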

The existing syntheses, however, have several shortcomings that limit their generalisability to the Australian context. First, although several syntheses have examined the effectiveness of reading interventions for students in Years 4–12, none has focused solely on students in the secondary school grades (Years 7–12). Because Years 4–6 are primary grades in Australia, syntheses that include these year groups do not reflect the context of Australian secondary schools. Second, the vast majority of studies included in the meta-analyses have been single-case studies with very few participants; larger group-comparison studies, which would produce more generalisable findings, are mostly absent. Finally, the reviews that have systematically examined the methodological quality of the included studies have, without exception, noted that most studies have methodological shortcomings that further compromise the generalisability of the findings (see, e.g., Steinle et al., 2022; Stewart & Austin, 2020). Given these shortcomings, the purpose of the current paper is to examine whether there are enough high-quality reading intervention studies for students in Years 7–12 to establish an evidence base for reading interventions in the secondary grades. We consider this step crucial before conducting any meta-analysis on this topic. Because the randomised controlled trial (RCT) is the gold standard design for intervention research, we limited the search to RCTs and then examined their methodological quality in more detail using the standards provided by the Council for Exceptional Children (CEC; 2014).

Method

Literature Search

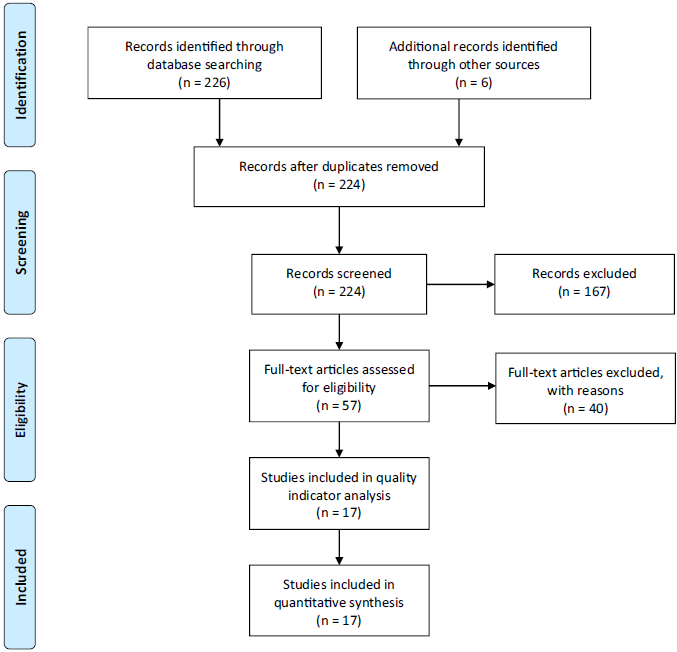

The ERIC and PsycINFO databases were searched to locate peer-reviewed studies published within the last 10 years (January 2012 to August 2022), using descriptors, or root forms of descriptors, that replicated those used by Scammacca et al. (2015). Descriptors of the populations of interest (reading difficult*, learning disab*, LD, mild handi*, mild disab*, reading disab*, at-risk, high-risk, reading delay*, learning delay*, struggling reader, dyslex*) were combined with each of the dependent variables (read*, comprehen*, vocabulary, fluen*, word, decod* and English Language Arts). Additionally, the abstracts of published research syntheses and meta-analyses related to reading interventions for secondary school students were reviewed to ensure that all pertinent studies were captured. Furthermore, a hand search was conducted of all articles published from 2012 through 2022 in the 11 major journals searched by Scammacca et al. (2015): Exceptional Children, Journal of Educational Psychology, Journal of Learning Disabilities, Literacy Research and Instruction, Journal of Literacy Research, Journal of Special Education, Learning Disabilities Research & Practice, Learning Disability Quarterly, Reading Research Quarterly, Remedial and Special Education, and Scientific Studies of Reading. This search yielded 226 studies. An ancestral search of the reference lists of the originally identified studies yielded an additional six studies.

Inclusion Criteria

After removing duplicate studies, 224 studies were identified in the literature search and their abstracts were examined against the following inclusion criteria:

1. The studies employed an RCT design.

2. Participants were in Years 7 to 12, or were otherwise older than 12 years and attending secondary school. Studies were excluded if they included participants in kindergarten to Year 6 and the data were not disaggregated by age or year group.

3. The studies examined the effect of an intervention targeting one or more of the following: reading fluency, reading comprehension, decoding or vocabulary, or a multiple-component intervention.

4. The studies reported on dependent measures that assessed reading skills, such as vocabulary, decoding, reading fluency and reading comprehension. Data from measures of other constructs, including attitudes, content acquisition, behaviour and motivation, were not included.

After applying these inclusion criteria to the abstracts of 224 studies, 167 studies were eliminated. Of these, 109 studies were excluded as they did not use an RCT design, 24 studies did not examine the effects of at least one of the specified reading skills, 18 studies did not report on measures that assessed reading skills, and 16 studies did not report on outcomes for students in Years 7–12. The remaining 57 full-text articles were assessed for eligibility, and a further 40 studies were excluded. Twenty-eight studies were excluded as they did not use an RCT design, nine studies reported data for kindergarten to Year 6 and did not disaggregate data by year group, and three studies did not report on measures that assessed reading skills. Figure 1 summarises the search, inclusion, and exclusion decisions.
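The screening counts above can be checked with simple arithmetic. This minimal sketch subtracts each reported exclusion category from the number of records entering that stage; the variable names and category labels are our own shorthand, not terms from the review.

```python
# Counts reported in the text (abstract screening, then full-text review)
identified_after_dedup = 224
abstract_exclusions = {
    "not an RCT": 109,
    "no targeted reading skill": 24,
    "no reading-skill outcome measure": 18,
    "no Years 7-12 outcomes": 16,
}
full_text_exclusions = {
    "not an RCT": 28,
    "K-6 data not disaggregated": 9,
    "no reading-skill outcome measure": 3,
}


def remaining(start, exclusions):
    """Return how many records survive after every exclusion reason is applied."""
    return start - sum(exclusions.values())


full_text_assessed = remaining(identified_after_dedup, abstract_exclusions)
included = remaining(full_text_assessed, full_text_exclusions)
print(full_text_assessed, included)  # 57 17
```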

Figure 1. Flow Diagram for the Search and Inclusion of Studies.

Quality Coding Procedures

The CEC Standards for Evidence-Based Practices in Special Education (2014) were used to evaluate the methodological quality of the studies included in this review. Although not all participants in the studies were identified as having a disability or special education needs, we used the CEC standards to follow the same process as two recent studies (Naveenkumar et al., 2022; Stewart & Austin, 2020). Each of the 17 studies in this review was evaluated against the standards, which comprise eight quality indicators (QIs), each consisting of one or more indicator elements.

A code sheet similar to that used by Naveenkumar et al. (2022) was employed to evaluate the studies against each of the eight QIs. The sheet consisted of two columns used to mark whether the study satisfactorily met each QI. For a QI to be met, all of its indicator elements had to be satisfactorily addressed. For example, the intervention agent QI was judged to be met only if both of its indicator elements (a description of the role of the intervention agent and a description of the agent’s training or qualifications) were present. Studies that met all QIs relevant to group-comparison designs (excluding indicators relevant only to single-subject research) were considered methodologically sound (CEC, 2014). The QI categories and their indicator elements from the CEC standards (2014) are described as follows:

- QI1. Context and setting. The first QI requires studies to describe the critical features of the context or setting in which the intervention takes place, for example, the type of school, curriculum or geographical location.

- QI2. Participants. The second QI requires studies to provide sufficient information on participant demographics, including gender, age and language status. It also requires studies to describe any disability or at-risk status of participants and the method used to determine that status.

- QI3. Intervention agent. The first element of this QI requires the study to describe the role of the intervention agent (e.g., teacher, paraprofessional or researcher). The second element requires the study to describe the qualifications or training of the intervention agent.

- QI4. Description of practice. The first element of this QI requires a detailed description of the intervention or practice, including its critical elements, procedures and dosage, as well as the actions of the intervention agent, such as prompts and physical behaviours. The second element requires a description of the materials used (e.g., manipulatives and worksheets) or citations of accessible sources that provide this information.

- QI5. Implementation fidelity. This QI requires studies to assess and report implementation fidelity related to adherence, to ensure that the critical aspects of the intervention were addressed, and fidelity related to dosage or exposure. Studies were also required to assess and report implementation fidelity periodically throughout the intervention and across each interventionist, setting and participant.

- QI6. Internal validity. This QI requires studies to address several indicator elements establishing that the independent variable is under the control of the researcher. The first element requires that the researcher controls and systematically manipulates the independent variable. The second requires a description of the control condition, such as the curriculum or an alternative intervention. The third requires that the control group has no access or extremely limited access to the treatment intervention. The fourth requires a clearly described assignment to groups, whether random or non-random. The fifth, sixth and seventh elements were not addressed in this review because they apply only to single-subject studies. The eighth element requires a low overall attrition rate across groups (less than 30%). The ninth and final element requires that differential attrition is low (e.g., less than 10%) or is controlled for by adjusting for non-completers’ earlier responses.

- QI7. Outcome measures/dependent variables. The first element of this QI requires that outcome measures are socially important, that is, linked to improved quality of life, an important learning outcome or both. The second element requires that the study defines and describes the measurement of the dependent variable. The third element requires that the study reports the effects of the intervention on all outcome measures, not just those for which a positive effect was found. The fourth element was not addressed in this review, as it is not relevant to the research designs reviewed. The fifth element requires that studies provide evidence of adequate reliability, and the sixth and final element requires that studies provide sufficient evidence of validity.

- QI8. Data analysis. The first element of this QI requires that appropriate data analysis techniques are used to compare changes in performance across two or more groups (e.g., t tests, ANOVAs/MANOVAs, ANCOVAs/MANCOVAs). The second element was not addressed in this review, as it applies only to single-subject studies. The third element requires that studies report one or more appropriate effect size statistics for all outcomes relevant to the review, regardless of statistical significance.
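The scoring rule described above (a QI counts as met only when every applicable element is addressed, and a study counts as methodologically sound only when every QI is met) can be sketched as follows. The per-QI element counts are our reading of the descriptions above after excluding single-subject-only elements; they sum to 23, which matches the number of criteria coded. All names in the code are hypothetical.

```python
# Hypothetical sketch of the "all elements, all QIs" scoring rule.
# Element counts are our reading of the QI descriptions, excluding
# elements that apply only to single-subject designs.
ELEMENTS_PER_QI = {
    "QI1": 1,  # context and setting
    "QI2": 2,  # demographics; disability/risk status and how it was determined
    "QI3": 2,  # role; training or qualifications
    "QI4": 2,  # procedures; materials
    "QI5": 3,  # adherence; dosage; assessed periodically and across agents/settings
    "QI6": 6,  # elements 1-4, 8 and 9
    "QI7": 5,  # elements 1-3, 5 and 6
    "QI8": 2,  # elements 1 and 3
}


def qi_met(elements):
    """A QI is met only if every one of its applicable elements is addressed."""
    return all(elements)


def study_is_sound(coding):
    """A study is methodologically sound only if every QI is met."""
    if set(coding) != set(ELEMENTS_PER_QI):
        raise ValueError("coding must cover exactly the eight QIs")
    for qi, elements in coding.items():
        if len(elements) != ELEMENTS_PER_QI[qi]:
            raise ValueError(f"{qi} expects {ELEMENTS_PER_QI[qi]} elements")
    return all(qi_met(elements) for elements in coding.values())


# Hypothetical study: every element is addressed except the final QI6
# element (low differential attrition), so QI6, and hence the study, fails.
coding = {qi: [True] * n for qi, n in ELEMENTS_PER_QI.items()}
coding["QI6"][-1] = False

print(sum(ELEMENTS_PER_QI.values()))                  # 23
print(qi_met(coding["QI6"]), study_is_sound(coding))  # False False
```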

Procedure

Two researchers (the first and second authors) coded all 17 studies on 23 criteria. Interrater agreement was 88.24%. The last author examined the disagreements and made the final decision.
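Assuming that agreement was computed as simple percent agreement over every coding decision (17 studies × 23 criteria = 391 decisions; the text does not state the formula), the reported 88.24% corresponds to 345 agreements. The sketch below illustrates that calculation with hypothetical coder vectors; the function name is our own.

```python
n_studies, n_criteria = 17, 23
total_decisions = n_studies * n_criteria  # 391 coding decisions


def percent_agreement(coder_a, coder_b):
    """Simple percent agreement between two coders' parallel judgements."""
    if len(coder_a) != len(coder_b):
        raise ValueError("coders must rate the same items")
    agreements = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * agreements / len(coder_a)


# Hypothetical codings: the coders agree on 345 of the 391 decisions.
coder_a = [True] * total_decisions
coder_b = [True] * 345 + [False] * (total_decisions - 345)
print(round(percent_agreement(coder_a, coder_b), 2))  # 88.24
```

Simple percent agreement does not correct for agreement expected by chance; a chance-corrected index such as Cohen’s kappa would require the full cross-tabulation of both coders’ decisions.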

Results

The methodological ratings for the 17 studies that met the inclusion criteria are presented in Table 1. Of these 17 studies, two met all QIs (Solis et al., 2018; Williams & Vaughn, 2020). All 17 studies met two QIs (context and setting, and intervention agent). The QIs for participants, description of practice and data analysis were each met by 94% of the studies. Internal validity was the least frequently met QI, with only 53% of the studies providing sufficient information.
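The percentages in this section follow from dividing the number of studies meeting each QI by 17 and rounding to the nearest whole percent. The sketch below reproduces them from the counts given in the QI subsections; the dictionary labels and function name are our own shorthand.

```python
N_STUDIES = 17

# Studies meeting each QI, as reported in the QI subsections
studies_meeting = {
    "QI1 Context and setting": 17,
    "QI2 Participants": 16,
    "QI3 Intervention agent": 17,
    "QI4 Description of practice": 16,
    "QI5 Implementation fidelity": 14,
    "QI6 Internal validity": 9,
    "QI7 Outcome measures": 13,
    "QI8 Data analysis": 16,
}


def percent_meeting(n, total=N_STUDIES):
    """Share of studies meeting a QI, rounded to a whole percent."""
    return round(100 * n / total)


for qi, n in studies_meeting.items():
    print(f"{qi}: {n}/{N_STUDIES} = {percent_meeting(n)}%")
```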

Table 1. Methodological Rigour by Quality Indicator (QI)

QI1. Context and Setting

All studies met this QI. Most studies (n = 13; 76%) were conducted in the United States, and the remaining four (24%) in the United Kingdom. Of the 17 studies, 11 were conducted in secondary schools, five in middle schools and one in a residential treatment centre for juvenile delinquents. Other school variables reported included the percentages of students receiving free or reduced-price lunch, special education services or English Language Learner (ELL) services. Studies that included students with a disability or ELLs were required to provide additional information about the school setting. Ten studies met this requirement, for example by reporting the percentage of the school population receiving special education services or by describing the type of program for ELLs or students with a disability (Bemboom & McMaster, 2013; Clarke et al., 2017; Gorard et al., 2014, 2015; O’Connor et al., 2017; Reynolds, 2021; Swanson et al., 2017; Vaughn et al., 2012, 2015; Williams & Vaughn, 2020).

QI2. Participants

Sixteen of the 17 studies met this QI. Of these, 15 involved poor or below-average readers, identified using state-mandated tests or standardised assessments (Bemboom & McMaster, 2013; Clarke et al., 2017; Denton et al., 2017; Gorard et al., 2014, 2015; Scammacca & Stillman, 2018; Schiller et al., 2012; Sibieta, 2016; Solís et al., 2015, 2018; Swanson et al., 2017; Vaughn et al., 2012, 2015; Warnick & Caldarella, 2016; Williams & Vaughn, 2020). O’Connor et al. (2017) included students who received special education services and reported the percentages of identified disabilities within this group. Reynolds (2021) was the only study that did not describe the disability or risk status of its participants.

QI3. Intervention Agent

All 17 studies met this QI, having provided sufficient information about the critical features of the intervention agent. Classroom teachers delivered the interventions in four studies (Gorard et al., 2015; Scammacca & Stillman, 2018; Schiller et al., 2012; Swanson et al., 2017). In five studies, intervention teachers were hired to deliver the interventions (Solís et al., 2015, 2018; Vaughn et al., 2012, 2015; Williams & Vaughn, 2020). Teacher assistants acted as the intervention agent in two studies (Clarke et al., 2017; Sibieta, 2016). Trained tutors implemented the intervention in Denton et al. (2017) and Reynolds (2021). A special education teacher delivered the intervention in one study (O’Connor et al., 2017). A range of staff members, including special education coordinators, teacher assistants, librarians and teachers, delivered the intervention in Gorard et al. (2014). The lead author was the intervention agent in Warnick and Caldarella (2016). Both teachers and students acted as intervention agents in the study by Bemboom and McMaster (2013).

QI4. Description of Practice

In total, 16 studies (94%) met this QI, having provided sufficient information about the intervention procedures and materials for the study to be readily replicated. A range of reading interventions was implemented in the included studies. Seven studies explored the effects of a multicomponent intervention, including word study strategy instruction, vocabulary instruction and comprehension strategy instruction (Clarke et al., 2017; Denton et al., 2017; O’Connor et al., 2017; Solís et al., 2015, 2018; Vaughn et al., 2015; Williams & Vaughn, 2020). Two studies examined the effects of systematic phonics instruction (Gorard et al., 2015; Warnick & Caldarella, 2016). Two studies examined the effects of an intervention that developed background knowledge and vocabulary and used text-based discussions (Scammacca & Stillman, 2018; Swanson et al., 2017). Bemboom and McMaster (2013) explored the effect of a peer-mediated instructional program in which students work in pairs to develop their reading fluency and comprehension. Gorard et al. (2014) administered a levelled-book reading intervention combined with a number of instructional strategies (prompting, praise, error correction and performance feedback). Reynolds (2021) provided scaffolded paraphrasing instruction while students read complex texts. Schiller et al. (2012) employed an intervention that explicitly taught reading comprehension, vocabulary and motivation strategies. Finally, Vaughn et al. (2012) implemented programs tailored to the reading needs of each participant, which included a range of phonics, comprehension, word reading, fluency and vocabulary interventions.

O’Connor et al. (2017) did not provide sufficient detail on how the readability of the chapter books was determined and was therefore judged not to have met this QI.

QI5. Implementation Fidelity

Fourteen studies (82%) met this QI. Most studies used direct observation of intervention sessions (Bemboom & McMaster, 2013; Denton et al., 2017; Gorard et al., 2014, 2015; O’Connor et al., 2017; Scammacca & Stillman, 2018; Schiller et al., 2012; Solís et al., 2015; Vaughn et al., 2012, 2015). Three studies used session recordings made by the intervention agents (Solis et al., 2018; Swanson et al., 2017; Williams & Vaughn, 2020). One study used self-report measures (Reynolds, 2021).

Three studies did not meet this QI. All of them (Clarke et al., 2017; Sibieta, 2016; Warnick & Caldarella, 2016) failed to report data on treatment fidelity and dosage.

QI6. Internal Validity

Nine of the 17 studies (53%) provided adequate information regarding internal validity to meet this QI (Clarke et al., 2017; Gorard et al., 2014, 2015; Reynolds, 2021; Schiller et al., 2012; Sibieta, 2016; Solis et al., 2018; Warnick & Caldarella, 2016; Williams & Vaughn, 2020).

The eight studies that did not meet this QI showed either high differential attrition or both high general and differential attrition. Four studies reported high differential attrition (O’Connor et al., 2017; Solís et al., 2015; Swanson et al., 2017; Vaughn et al., 2012), and the other four reported high general and differential attrition (Bemboom & McMaster, 2013; Denton et al., 2017; Scammacca & Stillman, 2018; Vaughn et al., 2015).
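To make the two attrition criteria concrete, the sketch below checks a hypothetical trial against the thresholds given in the QI6 description (overall attrition below 30%, differential attrition below 10%). It treats differential attrition as the absolute gap between the two groups’ attrition rates and ignores the CEC option of adjusting for non-completers’ earlier responses; both are simplifying assumptions, and the function names and figures are invented for illustration.

```python
def attrition_rate(randomised, analysed):
    """Proportion of randomised participants missing from the analysed sample."""
    return (randomised - analysed) / randomised


def attrition_check(rand_t, anal_t, rand_c, anal_c,
                    overall_max=0.30, differential_max=0.10):
    """Compare overall and differential attrition against fixed thresholds.

    Differential attrition is taken as the absolute difference between the
    treatment and control attrition rates (an assumption of this sketch).
    """
    overall = attrition_rate(rand_t + rand_c, anal_t + anal_c)
    differential = abs(attrition_rate(rand_t, anal_t) - attrition_rate(rand_c, anal_c))
    return {
        "overall": overall,
        "differential": differential,
        "overall_ok": overall < overall_max,
        "differential_ok": differential < differential_max,
    }


# Hypothetical trial: 20% attrition in treatment, 8% in control.
result = attrition_check(rand_t=100, anal_t=80, rand_c=100, anal_c=92)
print(result["overall_ok"], result["differential_ok"])  # True False
```

A trial can therefore pass the overall criterion comfortably (14% here) yet fail QI6 on differential attrition alone, which is the pattern reported for four of the eight studies above.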

QI7. Outcome Measures/Dependent Variables

Thirteen studies (76%) met this QI (Bemboom & McMaster, 2013; Clarke et al., 2017; Denton et al., 2017; O’Connor et al., 2017; Reynolds, 2021; Scammacca & Stillman, 2018; Solís et al., 2015, 2018; Swanson et al., 2017; Vaughn et al., 2012, 2015; Warnick & Caldarella, 2016; Williams & Vaughn, 2020).

Four studies did not report evidence of adequate reliability of the outcome measures (Gorard et al., 2014, 2015; Schiller et al., 2012; Sibieta, 2016).

QI8. Data Analysis

Sixteen studies (94%) met this QI. These studies employed techniques appropriate for comparing change across two or more groups, or justified any atypical procedures they used. Specifically, the techniques included ANOVAs (Denton et al., 2017; Swanson et al., 2017; Warnick & Caldarella, 2016), ANCOVAs (Clarke et al., 2017; O’Connor et al., 2017; Solís et al., 2015, 2018; Vaughn et al., 2012; Williams & Vaughn, 2020), regression analysis (Bemboom & McMaster, 2013; Gorard et al., 2014, 2015; Reynolds, 2021; Schiller et al., 2012), latent variable growth modelling (Vaughn et al., 2015) and t tests (Sibieta, 2016).

The remaining study (Scammacca & Stillman, 2018) did not report appropriate effect size statistics.
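Because the one study that missed QI8 did so on effect size reporting, it may help to show what an effect size statistic for a two-group comparison can look like. The Python sketch below computes Hedges' g, a standardised mean difference with a small-sample correction. It is one common choice, offered as an illustration under stated assumptions rather than as the statistic required by CEC (2014) or used by the reviewed studies; the function name `hedges_g` and the example scores are hypothetical.

```python
import math


def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Hedges' g for a treatment-versus-comparison contrast on one outcome."""
    df = n_t + n_c - 2
    # Pooled standard deviation: each group's variance weighted by its degrees of freedom
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd  # Cohen's d
    # Small-sample correction J = 1 - 3 / (4(n_t + n_c) - 9), rewritten in terms of df
    j = 1 - 3 / (4 * df - 1)
    return j * d


# Hypothetical post-test scores for two groups of 30 students each
g = hedges_g(mean_t=105, sd_t=15, n_t=30, mean_c=100, sd_c=15, n_c=30)
print(f"Hedges' g = {g:.2f}")
```

With equal standard deviations of 15, the raw difference of 5 points gives d = 0.33, and the correction factor for 58 degrees of freedom shrinks it only slightly, to g of about 0.33. The correction matters more for the small samples typical of intervention studies.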

Discussion

The purpose of this methodological review was to examine, using the standards provided by CEC (2014), whether there are enough high-quality reading intervention studies for students in Years 7–12 to establish an evidence base for reading interventions for students in secondary grades. Of the 17 studies reviewed, only two met all CEC (2014) QIs (Solís et al., 2018; Williams & Vaughn, 2020). This result is not entirely surprising given earlier reports on methodological shortcomings of reading intervention studies (see, e.g., Steinle et al., 2022; Stewart & Austin, 2020). It is nonetheless concerning for schools wanting to implement evidence-based interventions, as it suggests that sufficient high-quality evidence (Cook et al., 2015) is likely not yet available. We will return to the implications of our results at the end of the discussion.

Methodological Quality of Reading Intervention Studies for Secondary Students

All 17 studies included in this review provided sufficient evidence regarding the critical features of the context or setting (QI1) and of the intervention agent (QI3). In general, the external validity of the studies was high: we know who received the interventions and in what contexts, what the interventions were about, and who delivered them (typically professionals or paraprofessionals available in school contexts). This information is important for schools wanting to implement any of the described interventions with their own students.

Of the QIs not met by all studies, the most frequently missed was internal validity (QI6): eight (47%) studies failed to provide sufficient evidence that the independent variable caused the change in the dependent variables. The critical issue was attrition: all eight studies reported unacceptable levels of differential attrition, and four of them also reported high levels of overall attrition. Attrition is particularly problematic for intervention studies because they typically start with relatively few participants, making it difficult to adequately examine the impact of attrition on the final results. High attrition can also threaten external validity when it is not clear who left the study prematurely and why. Reading intervention research has a complex history of discontinued treatments and attrition, and the results of the current review suggest that this issue still requires more attention.
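Overall and differential attrition are easy to conflate, and a study can have acceptable overall attrition yet still lose far more students from one group than the other. The Python sketch below is a minimal illustration under stated assumptions: it computes overall attrition as the share of all randomised students missing from the analysis, and differential attrition as the absolute difference between the two groups' attrition rates. The cut-offs `MAX_OVERALL` and `MAX_DIFFERENTIAL`, like the function names, are hypothetical placeholders and are not quoted from CEC (2014); a reviewer would substitute the criteria actually applied.

```python
# Hypothetical cut-offs for illustration only (not quoted from any standard)
MAX_OVERALL = 0.30
MAX_DIFFERENTIAL = 0.10


def attrition_rates(randomised_t, analysed_t, randomised_c, analysed_c):
    """Return (overall, differential) attrition as proportions."""
    lost_t = randomised_t - analysed_t
    lost_c = randomised_c - analysed_c
    # Overall: share of all randomised students missing from the final analysis
    overall = (lost_t + lost_c) / (randomised_t + randomised_c)
    # Differential: absolute gap between the two groups' attrition rates
    differential = abs(lost_t / randomised_t - lost_c / randomised_c)
    return overall, differential


def attrition_acceptable(overall, differential,
                         max_overall=MAX_OVERALL, max_differential=MAX_DIFFERENTIAL):
    """True only if both rates fall at or below the (hypothetical) cut-offs."""
    return overall <= max_overall and differential <= max_differential


# Hypothetical study: 100 students randomised to each group; 90 and 75 analysed
overall, differential = attrition_rates(100, 90, 100, 75)
print(f"overall = {overall:.1%}, differential = {differential:.1%}, "
      f"acceptable = {attrition_acceptable(overall, differential)}")
```

In this hypothetical case overall attrition is a modest 17.5%, but the treatment group lost 10% of its students and the comparison group 25%, a differential of 15 percentage points. Under these illustrative cut-offs the study would fail on differential attrition alone.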

The second most frequently missed QI was QI7 (outcome measures/dependent variables): four studies (24%) did not demonstrate that their outcome measures were appropriate for gauging the effect of the intervention. Implementation fidelity (QI5) was not reported by three studies (18%); without this information, these studies could not establish the degree to which each intervention was administered as intended. We should note, however, that this percentage is slightly lower than the 25% reported in a recent systematic review of treatment fidelity in intervention studies for elementary students with or at risk for dyslexia (Dahl-Leonard et al., 2023).

One study did not report adequate information to identify the participants involved in the intervention (QI2). Sufficient information about participants is what allows findings to be generalised to other similar populations. Another study did not provide sufficient information describing the intervention (QI4); describing the critical features of each intervention ensures that it can be readily understood and replicated by others. A single study did not provide evidence that data analysis was conducted in an appropriate manner (QI8).

In general, while only two studies met all the QIs, on the positive side another 11 met all but one, and no study missed more than two. This result is undoubtedly partly due to our limiting the included studies to RCTs published within the last 10 years; in other words, these studies were typically carefully designed and reported, and the problems they encountered were mostly ones that may be difficult to anticipate and prepare for, such as differential attrition.

Limitations

Some limitations of this study must be noted. First, we used the CEC standards to evaluate the methodological quality of studies rather than approaches considered more rigorous in some methodological areas, such as the What Works Clearinghouse standards (Cook et al., 2015). Had the What Works Clearinghouse standards been applied in this review, even fewer studies would likely have qualified as rigorous. Second, although 11 international journals were hand searched, we did not hand search any Australian journals, so some Australian studies may have been overlooked. Third, as only RCTs were included in this review, studies that employed quasi-experimental or single-subject designs were excluded, which may have influenced the results. Fourth, by focusing on published studies, our review may be subject to publication bias. Additionally, some studies may have been conducted in accordance with the CEC (2014) standards but failed to report explicitly the information needed to verify this.

Finally, as a wide variety of reading interventions were explored in the reviewed studies, we acknowledge that the usefulness of this review for secondary schools’ literacy intervention decision-making is limited.

Implications

Students transitioning into secondary school are expected to read independently to acquire large amounts of content knowledge and to read teacher feedback. Students who lack the ability to comprehend written text are likely to experience failure unless effective remediation is provided. Given constraints such as budgets, staffing and time, school leaders must be judicious in selecting the interventions they implement for struggling readers in secondary schools. As the results of this review show, studies examining the effects of reading interventions for secondary students are still of mixed quality, and the rigour of future studies needs to improve if specific reading interventions are to be considered evidence-based practices. In the meantime, schools need to make choices, and the results of many of the studies included in this review are promising. However, because evidence of these interventions' effectiveness is scarce, teachers in a school that implements any of them need to collect evidence of its impact broadly and frequently. Only proper progress monitoring will establish whether a practice is effective when implemented in a particular school with its particular students.
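One common way to summarise frequent progress-monitoring data is the ordinary least squares slope of a student's scores across weeks, compared against a goal rate of growth. The Python sketch below is a hypothetical illustration only: the weekly scores, the unnamed brief reading measure they come from, and the goal of one point per week (`GOAL_PER_WEEK`) are invented for the example, and a slope is only one of several ways schools might summarise such data.

```python
def ols_slope(weeks, scores):
    """Ordinary least squares slope of scores regressed on week number."""
    n = len(weeks)
    if n < 2 or n != len(scores):
        raise ValueError("need at least two paired (week, score) observations")
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    # Covariance term and variance term of the least squares estimate
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    if sxx == 0:
        raise ValueError("weeks must not all be identical")
    return sxy / sxx


# Hypothetical data: eight weekly scores on a brief, repeated reading measure
weeks = list(range(1, 9))
scores = [62, 64, 63, 67, 68, 70, 69, 73]
GOAL_PER_WEEK = 1.0  # hypothetical goal rate of improvement

slope = ols_slope(weeks, scores)
on_track = slope >= GOAL_PER_WEEK
print(f"slope = {slope:.2f} points/week; on track: {on_track}")
```

For these invented scores the slope is 62/42, or about 1.48 points per week, which exceeds the hypothetical goal. Week-to-week fluctuations (such as the dips in weeks 3 and 7) are smoothed out, which is why a trend across several data points is more informative than any single comparison.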

Author note

Rauno Parrila is currently at the Australian Centre for the Advancement of Literacy at the Australian Catholic University.