Archaeologists have long debated best practices in data recording because, as a destructive process, excavation of in situ contexts can be done only once. Therefore, archaeological recording must allow excavating archaeologists to draw reliable inferences about the site and to facilitate the use of those observations by others for future research. It should clearly delineate between observations and interpretations, making it easy for others to understand and challenge previous researchers’ interpretations. In projects with multiple trenches, this delineation is particularly important so that site interpretations can be synthesized. One common way to ensure uniform data recording across an excavation is through clear written guidelines.

Written guidelines are defined here as any written instructions intended to clarify excavation methods, standards, and documentation so that the excavation team records data that meet project needs and expectations. Many excavation projects, especially field schools training new students, use written guidelines to explicitly define how the project creates excavation data. These guidelines often take the form of site manuals, which in some cases are adapted from established standards (e.g., the Museum of London's Archaeological Site Manual [Museum of London Archaeology Service [MOLAS] 1994]). They describe excavation processes and archaeological data recording, and they may include standard forms to document the kinds of data that should be recorded during excavation. They may also include exemplars of descriptive and interpretive frameworks for recording.

Written guidelines codify and document preferred or agreed-on practices for excavating, creating data, and handling data and, as such, cannot be considered independently of those practices. As a social science discipline that straddles the scientific method and humanistic inquiry, archaeology approaches data recording in two distinct ways. Some archaeologists emphasize standardized practices to create uniform data across excavations that are easier for others to use (Dever and Lance 1982; Spence 1993). In contrast, Berggren and Hodder (2003) argue that, historically, standardized recording reflects the hierarchical structures of many excavations and ultimately creates a disconnect between those excavating and the interpretation of their data. As a result, “analysis and interpretation become removed from the trowel's edge” (Berggren and Hodder 2003:425), and interpretation is concentrated in the hands of project directors alone. In this and related publications (Berggren et al. 2015; Hodder 2000), Berggren and Hodder examine how archaeological site manuals, created and disseminated by project directors, reflect an excavation's social hierarchies. Their post-processual critique of written guidelines favors more reflexive archaeological recording, producing datasets that emphasize descriptive interpretations during fieldwork. Although these rich descriptions are harder to compare, they offer a clearer window into the interpretive process and return interpretation to the hands of excavators.

These two approaches address separate issues regarding the usability of archaeological data: standardization addresses the potential to use observations for comparative research, whereas reflexive recording addresses the need to create, document, and question archaeological interpretations throughout the excavation process. Ideal recording should allow for both standardized data that can be compared and descriptive, reflexive interpretations that can be questioned by future researchers. Existing research, however, suggests that both approaches have inherent issues that affect their resulting archaeological datasets.

Methods and recording forms are often revised to meet the needs of individual excavations, regions, or cultural resource management (CRM) companies. Pavel (2010) analyzed various recording forms to evaluate how archaeologists structure data. Masur and coworkers (2014) similarly compared data recorded with forms to assess how archaeological data from different sites can be compared using a shared ontology. These studies found that both forms and site manuals developed in archaeology are site- or region-specific, and because of those specificities, the resulting datasets are difficult to compare.

In other instances, incomplete information limited comparability. Huvila and colleagues (Huvila, Börjesson, and Sköld 2022; Huvila, Sköld, and Börjesson 2021) found that archaeological field methods and operations are so conventional that they are routinely omitted from archaeological reports or referenced primarily through the tools used during excavation rather than its processes. This makes it difficult to compare data because a project's methods remain unknown. Iwona and Jean-Yves (2023) similarly argued that archaeological datasets and reports are often published without sufficient paradata (data describing the processes of archaeological data collection), leading to a significant loss of information on projects that memory alone cannot replace. For example, Sobotkova (2018) attempted to reuse data from archaeological reports for a large-scale, regional study of Bulgarian burial mounds but found that key information, such as mound locations and dimensions, was frequently missing or incomplete. Although these issues were more pervasive in data collected in the twentieth century, Sobotkova still found incomplete information for 20% of datasets collected after the year 2000.

Reflexive data recording is also not without its issues. Chadwick (2003) encourages reflexive archaeology but questions whether such recording at sites like Çatalhöyük results in greater interpretation. Watson (2019) argues there is a persistent disconnect between reflexive archaeology in theory versus practice, with few commercial archaeology projects engaged in recording that democratizes the interpretive process. In archaeological reports, project participants other than the project director are rarely mentioned; instead, their authorship of archaeological data is only noted in project databases and paper archives (Huvila et al. 2021). Consequently, even though multiple interpretations may exist in project data, they do not necessarily filter into a democratized interpretive framework in archaeological reports. Moreover, archaeological data can become difficult to reuse when the resulting archives (including excavation notebooks, publications, and databases) have multiple and conflicting interpretations, insufficient information about the production of digital data, and heterogeneous data (Börjesson et al. 2022). These examples demonstrate that many proponents of reflexive archaeology still identify its practice as too limited to document and encourage interpretation in archaeological fieldwork at the trowel's edge in a way that is accessible for future archaeologists.

All these issues speak to broader questions about whether existing archaeological data practices can be scaled for “big data” (Huggett 2020) and “open data” (Kansa and Kansa 2013; Lake 2012; Marwick et al. 2017). These issues are further compounded by a lack of resources and incentives to make data reusable. In their research into making born-digital archaeological field data more findable, accessible, interoperable, and reusable (FAIR), Ross and colleagues (2022) found that researchers, limited by time and resources, largely focused on features that helped them achieve their immediate analysis and publication needs, rather than those that would facilitate future reuse of their data by others.

Issues with reuse should be concerning for any archaeological project, given the increasing importance of creating reusable datasets. Archaeologists are more frequently reusing legacy data as a research focus (Garstki 2022; Kansa and Kansa 2021), a form of apprenticeship and professionalization (Kriesberg et al. 2013), and a means to support larger regional studies (Anderson et al. 2017). A growing number of public and private funding agencies, such as the National Science Foundation, National Endowment for the Arts, Institute of Museum and Library Services, and National Endowment for the Humanities, also expect data to be published and available for future reuse, requiring detailed data management plans as part of grant applications. Data are increasingly available to a wider array of stakeholders, leading to a call for archaeologists to follow the CARE (Collective benefit, Authority to control, Responsibility, and Ethics) principles when deciding how to make data reuse possible (Gupta et al. 2023).

Despite these advances, archaeological data reuse remains limited. This is true more broadly for disciplines on the “long tail of science,” that is, those focused on individual or team-based data collection that is local and specific (Wallis et al. 2013). Although funding agencies may mandate data sharing, barriers to reuse remain, including inaccessible, unstructured, or incomprehensible data (Borgman et al. 2019). Low rates of data reuse in archaeology and the high costs of making data reusable result from numerous social, technological, and environmental factors (Huggett 2018; Sobotkova 2018).

Written guidelines represent some of the earliest steps in the data life cycle when archaeologists envision their data structures and collection needs. Although making data reusable will require a variety of interventions, this article focuses on how improving written guidelines can create more usable datasets for a project team and future reusers. Despite ongoing scholarship on best practices in archaeological recording and the importance of data reuse in archaeology, existing research has not evaluated the ways in which written guidelines affect the usability of data created during fieldwork. Given the ubiquity of written guidelines for archaeological data recording, the site-specific needs of these guidelines, and their importance for training future archaeologists, it is especially critical to consider best practices for developing, applying, and revising them.

This article draws on data collected during the Secret Life of Data (SLO-data) project, including observations and interviews during archaeological fieldwork and interviews with archaeological data reusers. It describes ways to improve the usability of data at the point of creation through thoughtfully crafted written guidelines. By identifying current successes and limitations experienced when using written guidelines during excavation, the findings illustrate ways for archaeologists to leverage written guidelines to create more consistent, comparable, and comprehensible data.

RESEARCH METHODOLOGY

The SLO-data project had two major aims. The first was to examine data creation, management, and documentation practices of current archaeological excavations. The project team conducted observations and interviews at four excavation sites, identifying where data challenges were occurring, recommending changes to minimize the challenges, and capturing the outcome of the recommendations. The second aim was to identify how issues at the point of data creation affect reuse experiences downstream in the data life cycle. The project team interviewed 26 archaeologists who were identified as potential reusers of the data created by the four excavation teams (i.e., those interested in data from the same periods, regions, or specializations).

The SLO-data team selected four archaeological field schools with diverse locations, data practices, and teams to understand broader trends in the ways archaeologists document data during fieldwork. To maintain anonymity, the projects are referred to as Europe Project 1, Europe Project 2, the Africa Project, and the Americas Project. Additionally, this article uses one set of archaeological terms (e.g., trench, unit) for all projects to avoid regionally specific terminology.

Europe Project 1 was a codirected, short-term excavation of an archaeological site where previous teams had conducted research intermittently over the past century. The project had 40 team members, more than half of whom were new or returning students. One aim of the field school was to prepare students for jobs in CRM. Consequently, the project directors used written guidelines based on established standards (i.e., the Museum of London Archaeology Service's Archaeological Site Manual [MOLAS 1994]) to train field-school students.

Europe Project 2 was a long-standing excavation with more than 50 years of ongoing fieldwork. The project consisted of 60 team members, of whom 40 were students. Graduate students and experienced returning undergraduates were trench supervisors and reported to a field director. These trench supervisors used an apprenticeship model to teach new students. The project director did not provide formal written guidelines; instead, the trench supervisors relied on notebooks and site reports from prior years as a model. Additionally, one trench supervisor developed and shared an informal one-page reference sheet with other team members that detailed important information to include in notebooks.

The Africa Project was a new excavation in an area where archaeological research was still developing. Consequently, the project director and team members were still refining their research questions and methods. The project team consisted of 30 people, including nine field-school students. The project director provided an extensive manual that was modified from the MOLAS manual. Project members recorded data with tablets during the SLO-data team's first site visit, but due to technical problems, the project changed to paper recording the next year. Consequently, written guidelines shifted from how to record project data using specific software in the first season to how to fill out paper forms in the second season.

The Americas Project was a new excavation. The project director led a team of 23, 15 of whom were field-school students. The project director's previous excavations were not directly parallel to this site, and so there were no previous written guidelines to reference. Although the local government provided mandatory excavation forms, its needs differed from those of the project. To meet the needs of the project, trench supervisors used verbal instruction to help their teams record daily observations.

Excavation Project Observations and Interviews

Before visiting the excavations, the SLO-data team conducted semistructured interviews with each project director and other key project members to learn more about the project and their roles. Most of the SLO-data team's data collection occurred during the excavation seasons in separate two-week periods over two consecutive years. At each excavation, a SLO-data team member conducted nonparticipant observation, transcribed observations they made during fieldwork, and conducted semistructured and unstructured interviews with project staff. All interviews were audio recorded and transcribed. The interviews and observations covered topics such as data collection, recording, and management practices; tools and software; data standards and written guidelines; and data sharing.

To analyze the data, the SLO-data team developed a set of codes based on the interview and observation protocols and a sample of the transcripts. Coding results were assessed using Scott's pi (Scott 1955), a measure of interrater reliability that compares observed agreement between coders to the agreement expected by chance. Two members of the team completed three rounds of coding to reach a Scott's pi interrater reliability score of 0.81. In each round, both team members coded a transcript separately, calculated their agreement, and met to resolve discrepancies and revise the codebook. Once an acceptable level of agreement was reached, the two coders worked independently to code the remaining data in NVivo 12. They used the annotations feature in NVivo to flag coding questions and met periodically to discuss and resolve them. To develop findings and recommendations, the team selected for further analysis a subset of codes related to data practices (e.g., linking, transferring, updating, validating), workflows (satisfaction, problems, workarounds, changes, schedules), local and global standards, and naming and identifiers.
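Because Scott's pi underpins the reliability thresholds reported here, a small worked example may help readers who have not computed it. Scott's pi is π = (P_o − P_e) / (1 − P_e), where P_o is the proportion of items both coders labeled identically and P_e is the agreement expected by chance, obtained by pooling both coders' labels and summing the squared share of each category. The following is a minimal Python sketch, assuming each item (for example, a transcript passage) receives exactly one nominal code from each coder; transcript coding in NVivo can assign several codes to one passage, so this is a simplification. The code labels are hypothetical and are not SLO-data data.

```python
from collections import Counter


def scotts_pi(coder_a, coder_b):
    """Scott's pi for two coders who each assign one nominal code per item."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same, non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items both coders labeled identically.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: pool both coders' labels (2n in total) and sum the
    # squared proportion of each category.
    pooled = Counter(coder_a) + Counter(coder_b)
    p_e = sum((count / (2 * n)) ** 2 for count in pooled.values())
    if p_e == 1:
        raise ValueError("undefined when every label falls in a single category")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes assigned to ten passages by two coders.
coder_a = ["method", "method", "standard", "naming", "method",
           "standard", "naming", "method", "standard", "method"]
coder_b = ["method", "standard", "standard", "naming", "method",
           "standard", "naming", "method", "method", "method"]

print(round(scotts_pi(coder_a, coder_b), 2))  # 0.68
```

Here the coders agree on 8 of 10 passages (P_o = 0.8), but because "method" makes up half of all pooled labels, chance agreement is already 0.38, so π is about 0.68 rather than 0.8. This correction for chance is why Scott's pi is stricter than simple percent agreement.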

Recommendations for Excavations

After identifying key findings and recommendations for each excavation project, the SLO-data team met with each project director to present findings and recommendations and to receive feedback on how well the findings reflected project experiences and whether the recommendations were plausible solutions. The project directors decided which recommendations to implement prior to the SLO-data team's second year of data collection. The SLO-data team collected data on the results of implementing the recommendations during second-year observations.

Reuser Interviews

The SLO-data team audio recorded and transcribed semistructured interviews with 26 researchers who were unaffiliated with the four excavations. These individuals, with a variety of career stages and specialties, were identified by the SLO-data team as potential reusers of data created by the excavation teams. Interviews lasted approximately 45–60 minutes and covered topics related to their data collection, recording, and management practices; their last experience reusing archaeological data; aspirations for their future reuse experiences; and the influence reuse had on their data practices. To maintain anonymity, researchers’ names were removed from transcripts and replaced with a sequential identifier (e.g., R01).

For analysis, the SLO-data team created a set of codes for reuser interviews based on the data reuse literature, the interview protocol, and a review of five transcripts. Three members of the SLO-data team coded the transcripts over four rounds of coding. In the fourth round, the Scott's pi interrater reliability scores for the three pairs of coders were 0.65, 0.66, and 0.77. Given this range of pairwise scores, the coders paid particular attention to codes on which they disagreed, using the annotations feature in NVivo to raise coding questions and meeting periodically to discuss and resolve them.

For this study, the SLO-data team focused on excavation data related to how data recording practices were decided, documented, and circulated among team members, including findings from first- and second-year interviews and observations and first-year recommendations. The SLO-data team also analyzed codes related to reusers’ aspirations for their future reuse and the influence reuse had on their data practices. These codes reflect reuser responses to the following three questions:

(1) If you could request one thing from excavation teams that would make your reuse easier, what would it be?

(2) What would be your ideal way of accessing archaeological data and associated information?

(3) How has reusing data influenced your data practices (e.g., data collection, recording, management)?

The findings presented here focus on how reuser responses align, contradict, or augment the findings and recommendations from the excavation projects related to written guidelines.

RESULTS

The project directors had a wide range of approaches to written guidelines for their projects, including trench-specific guidelines provided verbally by each trench supervisor; project guidelines primarily provided through verbal instruction, reference sheets, and documentation from prior years; written project guidelines based on a local standard; and highly detailed, site-specific written guidelines. Regardless of the approach used, all projects experienced problems with the comparability, consistency, and interpretability of the recorded data. Findings showed three major areas where written guidelines could be created or improved to address these problems: (1) descriptions of excavation methods, (2) transitions between field seasons, and (3) site-specific naming practices. These findings were confirmed in interviews with archaeological data reusers. This section presents these findings along with recommendations for written guidelines that archaeologists can adopt to improve the quality and usability of excavation data.

Describing Excavation Methods for Comparable Data

Excavation Project Observations and Interviews

Although several projects developed written guidelines to make field methods uniform, the SLO-data team observed how evolving research questions and team feedback led to differences in excavation methods at three field projects. In some cases, feedback led to excavation methods changing within one trench and across trenches. These differences, however, were not captured in project documentation, which resulted in unexplained differences in excavation methods that led to inaccurate interpretations of excavation data.

The project director on the Americas Project did not initially provide written guidelines. Instead, each trench supervisor gave their team oral instructions for excavating and recording finds based on their research interests and preferred data practices. One trench supervisor focused on DNA, so their team wore gloves when handling human bones, although other teams did not. In another trench, a student was researching shells, so these were carefully sieved and collected only in that trench but not in others. There were also differences in what information was recorded about finds. One trench mapped both diagnostic and nondiagnostic pottery sherds and took elevations on each diagnostic sherd. Another only photographed diagnostic sherds and did not record nondiagnostic sherds at all because the large concentration of sherds they were excavating daily made higher-level recording unfeasible. These differences led to data that were less comparable across trenches.

The Africa Project also experienced variation in how finds were being excavated and recorded, even though printed manuals with site-specific guidelines were used during training and fieldwork. During a team meeting, a lithicist on the Africa Project noted abundant microliths in one trench compared to others, suggesting it could be a production area. Discussion revealed, however, that this trench team had collected all chert in their sieves, whereas other trench teams had only collected larger pieces. Thus, differences in the number of microliths across trenches may have instead resulted from different collection practices. Bones were also handled differently across trenches, with one trench team using the tip of the trowel to avoid handling fragments and another collecting fragments from a sieve with their bare hands. Notably, the zooarchaeologist who was working at the dig house was unaware of these differences because conversations about how to handle bones were only happening at the excavation site.

On Europe Project 2, trench teams were responsible for recording small finds (finds recorded individually with coordinates) and bulk finds (classes of finds collected in groups without individually recorded coordinates) into their notebooks. Guidelines were in the form of a reference sheet that directed what trench teams wrote in their daily logs and narratives. However, the reference sheet was not a formal, required document, and it was also incomplete, leading some to seek guidance in notebooks and site reports from prior years. Notebooks provided trench-specific and unit-specific guidance from one year to the next, whereas site reports described tools used, pottery types and fabrics, soil textures, and the like. In some cases, excavation priorities led trench supervisors to deviate from their trench's existing collection practices. For example, after discovering human bones among the bulk animal bones, one trench characterized every bone fragment as a small find to better understand the location of possible human bone fragments. Eventually, the abundance of bones forced the trench to return to recording them in bulk. This resulted in relatively more individually recorded small finds in one trench during one season, without any documentation noting a change in excavation practices. The field director also noticed a lack of comparability across trenches because trench supervisors were overburdened by the recording of small finds and had rushed to complete their notebooks at the end of the season.

Recommendations for Excavation Projects

Based on findings from the three excavation projects, the SLO-data team made multiple recommendations to improve the consistency and documentation of the project's excavation methods. A key recommendation for the Americas Project was to develop and implement project-wide written guidelines. To create such guidelines, the project director formed a team of trench supervisors, including bioarchaeologists, who could expand on methods for excavating human remains. The team created a 30-page manual that outlined excavation methods and recording requirements, including details on excavating human remains, such as the size of the mesh screen used to sift and the protective equipment necessary to avoid DNA contamination (i.e., gloves, face mask, and hairnet). According to the project director, the new manual had the added benefit of acting as “a pilot's takeoff checklist” for their upcoming field season. As the team developed written guidelines for their excavation methods and recording requirements, they had to reflect on the specific equipment needed for each trench. The project director allowed sampling strategies to diverge from the project's main research questions and to focus on individual interests (e.g., collecting shell). However, these similarities and differences across trenches were required to be recorded in forms and notebooks, which allowed the team to know when data and interpretations across trenches could be compared.
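Recording where collection methods diverge lets a team check whether two trenches can be compared before drawing conclusions from them, as in the microlith and shell examples above. The following is a minimal Python sketch, assuming a hypothetical `CollectionProtocol` record (sieve mesh size and whether all pieces or only larger ones were kept) stored per trench and find class; the names, counts, and protocols are invented for illustration and do not describe any project in this study. The hypothetical `count_ratio` function declines to compare counts when the recorded protocols differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CollectionProtocol:
    """Hypothetical record of how one find class was collected in one trench."""
    mesh_mm: float      # sieve mesh size in millimeters
    collect_all: bool   # True if every piece was kept, False if only larger pieces


def count_ratio(counts, protocols, find_class, trench_1, trench_2):
    """Return the ratio of find counts between two trenches, or None when the
    recorded collection protocols differ and the counts are not comparable."""
    if protocols[trench_1][find_class] != protocols[trench_2][find_class]:
        # A difference in counts could reflect collection practice rather than
        # past activity, so decline to compare instead of returning a ratio.
        return None
    return counts[trench_1][find_class] / counts[trench_2][find_class]


# Hypothetical protocols and counts, for illustration only.
protocols = {
    "Trench A": {"lithics": CollectionProtocol(mesh_mm=2.0, collect_all=True)},
    "Trench B": {"lithics": CollectionProtocol(mesh_mm=2.0, collect_all=False)},
    "Trench C": {"lithics": CollectionProtocol(mesh_mm=2.0, collect_all=True)},
}
counts = {
    "Trench A": {"lithics": 240},
    "Trench B": {"lithics": 60},
    "Trench C": {"lithics": 80},
}

print(count_ratio(counts, protocols, "lithics", "Trench A", "Trench B"))  # None
print(count_ratio(counts, protocols, "lithics", "Trench A", "Trench C"))  # 3.0
```

Returning `None` rather than a number is a deliberate choice in this sketch: it forces an analyst to consult the documented methods instead of silently comparing counts produced under different collection practices.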

The director of the Africa Project already had extensive project guidelines in place that were site-specific. Therefore, recommendations focused on encouraging more interactions between trench supervisors and specialists to facilitate discussion. One recommendation refocused team meetings from status updates to in-depth discussions about specific trenches and specialist data to elucidate differences across trenches. In addition, the project director wanted specialists and trench supervisors to join students during lectures because their post-excavation analysis relied on the extent to which trench teams excavated, handled, and recorded classes of artifacts similarly. These interactions aimed to extend trench teams’ training and review of excavation methods and documentation throughout the field season so that corrective actions could be taken to support specialists’ downstream work.

The key recommendation for Europe Project 2 was to make the transition from informal to formal written guidelines to be used by all trench teams. In consultation with the project director, the SLO-data team recommended a new data-entry system that integrated these guidelines. Trench teams would enter excavation data into project-specific digital forms. Trench supervisors worked with the SLO-data team to identify relevant elements from the reference sheet, appropriate prompts, and optimal data-entry workflows to ensure consistency. Based on their feedback and SLO-data team observation of formal and informal training sessions, forms included controlled vocabularies to streamline data entry and make recording more consistent. This avoided the need for written guidelines on excavation vocabulary by automatically restricting what data could be recorded.
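Europe Project 2's digital forms are not described here in detail, so the following is only a minimal Python sketch of how a controlled vocabulary can restrict what gets recorded. It assumes a hypothetical `FindEntry` record and hypothetical term lists in `VOCABULARIES`; a real data-entry system would more likely present these terms as drop-down menus. The hypothetical `validate` function rejects any value outside the allowed list, ignoring case and surrounding spaces.

```python
from dataclasses import dataclass

# Hypothetical controlled vocabularies; the terms are illustrative only.
VOCABULARIES = {
    "find_type": {"small find", "bulk find"},
    "material": {"pottery", "animal bone", "human bone", "lithic", "shell"},
    "soil_texture": {"clay", "silt", "sand", "loam"},
}


@dataclass
class FindEntry:
    """Hypothetical form entry; only the controlled fields are validated."""
    trench: str
    unit: str
    find_type: str
    material: str
    soil_texture: str


def validate(entry):
    """Return a list of problems; an empty list means every controlled field
    uses an allowed term (compared case-insensitively)."""
    problems = []
    for field, allowed in VOCABULARIES.items():
        value = getattr(entry, field)
        if value.strip().lower() not in allowed:
            problems.append(f"{field}: {value!r} is not one of {sorted(allowed)}")
    return problems


ok = FindEntry("Trench 1", "Unit 4", "small find", "Human bone", "silt")
bad = FindEntry("Trench 1", "Unit 4", "special find", "bone", "silt")

print(validate(ok))  # []
for problem in validate(bad):
    print(problem)
```

The second entry is flagged twice, once for the unrecognized find type and once for the ambiguous material "bone," which is the kind of free-text variation across trench teams that controlled vocabularies are meant to prevent.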

Reuser Interviews

Several reusers indicated that data could end up being useless without the kinds of clear and detailed descriptions of methods that the excavation projects began recording after improving their written guidelines. The biggest challenge faced by reusers was understanding whether or how they could compare values across datasets. R11 was accustomed to analyzing data that were not perfectly comparable by changing the level of resolution of the analysis. However, ambiguity in the meaning of a value when the description of methods was missing—for example, whether length measurements included both incomplete and complete finds—hampered R11's decision making around data reuse.

In some cases, reusers needed information that may have seemed obvious during excavation but was critically missing years later during reuse. R24 explained that many excavations did not describe in their reports methods that were commonly used at the time of excavation: even present-day reports often omitted descriptions of sieving practices, how strata were determined, whether specialists were involved, and methods for drawing plans. Both R24 and R19 attempted to address this by including exhaustive and detailed methods in their publications, but peer reviewers and publishers told them that they were too lengthy or unnecessary. R19 included them instead as appendices.

Reusers also emphasized the importance of documenting decision-making criteria in clear, detailed ways. R13 took photos while recording observations to make it easier for others to understand and use their scoring criteria. Similarly, R20 used a detailed textual description to enable students and team members to score criteria the same way every time. R14 noted factors that seemed self-explanatory, like how much of a bone needed to be present to be counted, but that required elaboration for their students. The reusers urged that even seemingly self-explanatory terms like “present” be defined for anyone accessing their database or using their recording method.

Learning from their own experiences using other people's data, some reusers developed strategies to ensure they collected and recorded data consistently to improve comparability across their projects. One strategy was to adapt standard methods as necessary by either translating any new method to a standard or recording values for both a standard method and their new method. Another strategy was to involve specialists when developing projects. R08 discussed the need for project directors and specialists to work together early in the project to identify ways specialists could guide the excavation team, such as explaining what data, excavation methods, and sampling strategies were needed and why. These practices would ensure that even when a specialist was not present during fieldwork, finds could be collected in a way that allowed data comparability within and outside that excavation.

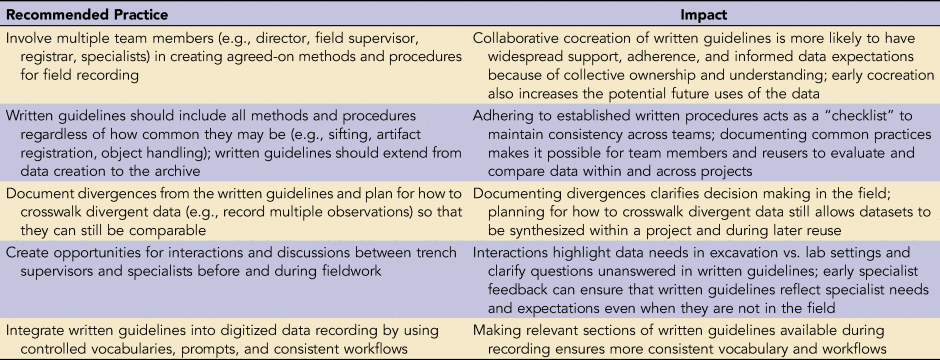

Based on excavation team and reuser experiences, excavations can adopt several practices to improve the development, implementation, and use of written guidelines for excavation methods (Table 1). These practices help excavation leaders consider how their data will be used by team members with different research interests, use consistent methods across trenches and team members, document divergent practices, and ensure all methods are recorded. Doing so makes data more comparable and interpretable and broadens their potential uses, improving usability both for team members and future reusers.

Table 1. Recommended Practices for Written Guidelines for Excavation Methods.

End-of-Season Documentation for Comprehensible Data

Excavation Project Observations and Interviews

In addition to issues of data comparability within projects, the SLO-data team identified cases where a lack of written guidelines for the end of the field season made data incomprehensible to new team members. In Europe Project 1 and the Africa Project, new trench supervisors reopened previously excavated trenches. Yet none of the new trench supervisors were able to fully reconcile previous documentation with what they were seeing during reexcavation, and they could not rely on memories of the previous season to fill gaps in documentation. Instead, they pieced together incomplete documentation to reconstruct what they thought had happened in the prior season and then wrote new documentation to support that reconstruction.

When two new trench supervisors joined Europe Project 1, they lacked the information they needed, such as which units were still active and where they were located, to determine where excavators had stopped working the previous season. This was due in part to the project's reliance on the written guidelines in the MOLAS manual, which was originally designed to support urban rescue excavations completed in a single field season. When units were first opened, the team recorded only a one-sentence description, deferring the unit sheet, plans, and photographs until the unit was fully exposed. Consequently, partially excavated units were described as “cannot be completed,” and their unit sheets were left mostly blank. Other unit sheets from the previous year were incomplete, with no indication of whether the units had been fully excavated. Additionally, some maps on unit sheets lacked meter markings, making it difficult to place units spatially. Without photographs or more detailed descriptions, the new trench supervisors were unsure how to identify the location or extent of units when reopening a trench. They also found discrepancies between unit sheets and the master plan, but because the project directors had not collected notebooks, the new supervisors could not consult the prior year's trench supervisor notebooks to reconcile these differences. To make sense of this missing and incorrect information, the new trench supervisors attempted to contact the previous supervisor, make their own master plan of the previous year's units, and correct contradictory data. These delays consumed nearly one-fifth of their field season.

Two new trench supervisors working on the Africa Project had similar experiences even though they had access to past trench supervisors’ notebooks. These new trench supervisors described working with the previous season's data as “puzzle-solving.” One issue was that the notebooks referenced units by number without any other description. Another challenge was insufficient documentation of previous trench supervisors’ decisions. One new trench supervisor found a unit that had been sectioned off, with a smaller, deeper excavation within it. The trench supervisor's notebook from the prior year did not explain why this was done, so the new team was left wondering whether it was a separate unit or a test pit. It was also difficult to reconcile GIS data from one year to the next because prior documentation did not include reference points with final elevations. The new trench supervisor was unsure whether discrepancies in elevations stemmed from the newly established datum or from the previous year's measurements. Consequently, elevations consistently differed between seasons, making it impossible to know the extent of partially excavated units. Only after reviewing notebooks and plans and making their own maps of excavated areas that brought relevant data together were the new trench supervisors able to develop descriptions of trenches and units. All this had to occur before they began excavating.

Recommendations for Excavations

The SLO-data team made several recommendations to improve how written guidelines could ensure a smoother transition between field seasons. The team recommended that the project directors for Europe Project 1 and the Africa Project seek feedback from new trench supervisors to establish written guidelines for the end of the field season that all trench supervisors could follow. The SLO-data team recommended that the guidelines outline what needs to be recorded at the end of the season, what supplementary information (e.g., notebooks, photos) needs to be shared and archived with other project data, and how this information could be accessed in the future.

The new trench supervisors on both projects mentioned wanting the same three things. The first was a unit list that included each unit's identifier followed by a one-sentence description and its status (e.g., active, completely excavated). This information would make it easier to navigate references to unit numbers and would also allow trench supervisors to quickly assess which units remain in the trench. They also wanted a final narrative summary of how the trench was left at the end of the season, including how it was backfilled, which units were still open, and any outstanding research questions. Lastly, they wanted access to notebooks from prior seasons so they could understand the rationale behind excavation decisions. Additionally, the new trench supervisors on the Africa Project recommended that end-of-season documentation include clear, consistent elevation data so trench supervisors could align elevation data across seasons.
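The unit list the new trench supervisors described is, in effect, a simple record structure. The Python sketch below is illustrative only: the field names, the two status values (taken from the examples above), and the sample units are hypothetical and are not drawn from either project. It shows how such a list lets a supervisor pull out the units still open at the start of a new season.

```python
from dataclasses import dataclass
from enum import Enum


class UnitStatus(Enum):
    # Status values taken from the examples in the text; a real project
    # would likely define more (hypothetical extension point).
    ACTIVE = "active"
    COMPLETELY_EXCAVATED = "completely excavated"


@dataclass(frozen=True)
class UnitListEntry:
    unit_id: str        # the project's unit identifier
    description: str    # one-sentence description
    status: UnitStatus  # state of the unit at the end of the season


def open_units(unit_list: list[UnitListEntry]) -> list[str]:
    """Return identifiers of units still to be excavated next season."""
    return [u.unit_id for u in unit_list if u.status is UnitStatus.ACTIVE]


# Hypothetical end-of-season unit list for one trench.
unit_list = [
    UnitListEntry("1001", "Compact clay floor surface.", UnitStatus.COMPLETELY_EXCAVATED),
    UnitListEntry("1002", "Ashy fill of pit cut 1003.", UnitStatus.ACTIVE),
    UnitListEntry("1003", "Cut of circular pit.", UnitStatus.ACTIVE),
]

print(open_units(unit_list))  # ['1002', '1003']
```

Restricting status to a fixed set of values, rather than free text, keeps the list sortable and avoids the ambiguity of phrases such as “cannot be completed.”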

Because these recommendations came at the end of the excavation season for both projects, the SLO-data team could not observe their implementation. However, the project directors agreed that establishing written guidelines for end-of-season documentation was important and planned to integrate the new trench supervisors’ feedback into their projects' written guidelines.

Reuser Interviews

Like new trench supervisors, reusers sought to reconstruct the site and the process of excavation. They needed sufficient documentation to make the data they were reusing comprehensible. They also wanted to be able to independently understand and evaluate interpretations of site units and to re-create an artifact's position, especially relative to other objects. However, the information they needed was either absent from project databases and older data records or not systematic enough to use for this purpose.

Reusers wanted excavations to provide photographs or final drawings to make it easier to determine how objects related to each other spatially. For R20, these images were necessary because they addressed “ephemeral questions that are lost after excavation.” Similarly, reusers wanted access to project notebooks because they preserved details about the site, the excavation process, and excavators’ interpretations that were only captured in narrative form. R21 pointed out that these notebooks ideally should be digitally preserved to make them more accessible. Reusers also wanted a comprehensive unit list with preliminary phasing so that they had basic parameters for understanding the nature of a given unit. This information would help them prioritize which units to study and to offer more informed interpretations of their findings.

When considering how reuse changed their own recording practices, reusers noted that they had added more time at the end of the season to complete their documentation. As R01 recalled, “I had seen other people have, at the end of the field season, they have like a thousand unlabeled photos [laughter].” Based on reuser practices, end-of-season instructions should also require that excavations provide the time and structure necessary for team members to complete documentation before leaving the field.

Excavation team and reuser experiences highlight several practices that projects can adopt to improve the development, implementation, and use of written guidelines for transitions between field seasons (Table 2). These practices enable more thorough and consistent documentation of units and improve the comprehensibility of the site and the project's data for both project team members and future reusers.

Table 2. Recommended Practices for Written Guidelines on Transitioning between Field Seasons.

Using Site-Specific Naming Practices to Better Link Data

Excavation Project Observations and Interviews

Archaeological data not only need to be comparable and comprehensible but also linked and interconnected within a project. This is most often accomplished through the use of identifiers: the unique names and numbers that projects use to identify trenches, units, and finds. However, across excavation projects, teams experienced difficulties creating and using identifiers. When these naming practices were not specified in written guidelines, team members used different identifiers, disagreed about them, and recorded them inconsistently. Some teams used complex identifiers that were hard to understand and difficult to record. In addition, identifiers were often designed with only one stage of the data life cycle in mind (e.g., recording), which led to problems at other stages, particularly when searching, accessing, and linking data in project databases.

The Americas Project faced several challenges related to naming practices. Because the project focused on bioarchaeology, one challenge was determining the identifiers used to label bone. The identifiers were designed to be descriptive enough to locate specimens and return them to their proper storage locations, but this made them too long to write on small bones and fragmentary elements. In addition, trench teams learned the naming practices they needed for notebooks, handwritten sheets, finds bags, and bag tags through verbal instruction from trench supervisors. Yet the supervisors did not always agree on basic elements of recording, leading to tension and disagreements during fieldwork. One supervisor named the “Site” after the hill they were on, but another named it after the entire set of hills in the region. Finds bags were supposed to be labeled in uppercase letters, but one trench team used a combination of uppercase and lowercase letters, which led to frustration when they had to relabel every bag. Trench supervisors also faced language barriers: most spoke English or Spanish but not both, which made it difficult to discuss and reconcile naming practices. Moreover, the time spent correcting documentation reduced time spent in the field.

Lastly, handwritten documentation was often freeform, leading to additional recording discrepancies. The order of information varied across documents and recorders. Some recorded category names (e.g., Name, Material, Site) to indicate the type of identifier that followed, whereas others did not. Some recorded their names in full; others used only first names or initials. They also recorded dates inconsistently, using both two- and four-digit years. These variations made it difficult to review the accuracy and completeness of documentation and to identify missing information.

Europe Project 2 also lacked written guidelines for naming practices. Team members learned how to label finds by referring to find tags from previous years. However, the numbering system for small finds was complex and confusing. The project followed a long-standing practice of restarting small find numbers at “1” in every trench each day. As one team member explained, “They'll have their [small] find number, but the problem is they keep renumbering the [small] finds every day. So you can't say, you know, T88, find number 5, ’cause every day we'll have a find number 5.” This meant the team needed to know both the trench number and the recording date to distinguish among several objects labeled “small find #5.” In addition, photos were labeled and associated with trenches and objects outside the project database, and these labels could be validated only at the end of the season, so labeling errors could go unchecked. One or two students were tasked with organizing and labeling all photos generated by the project based on information written on the whiteboard in the lab. At the end of the season, all the photos were uploaded to the database and associated with the units and objects they depicted.

The project directors for Europe Project 1 used the MOLAS manual's written guidelines to create and record identifiers for the site, units, elevations, plans, finds, and environmental samples. Even so, trench teams experienced problems. Workflow slowed because the MOLAS manual required that units and elevations be documented in a single master registry. Trench supervisors had to walk to the laboratory to obtain an identifier from the data manager for each new unit or elevation. To avoid waiting in line, some generated their own identifiers, which led to duplicates. Students also ran into recording problems. Per the MOLAS manual, some identifiers were marked with symbols (e.g., square brackets around unit numbers, triangles around sample numbers). Because the MOLAS manual was designed to support paper-based recording, this convention worked well on handwritten unit sheets, but it did not carry over into the database. Students were given verbal instructions for data entry but could not remember all the data transfer rules (e.g., omit square brackets when entering unit numbers into the database). Moreover, the database entry reference sheet did not mention data transfer rules, and the database did not return error messages when these rules were broken.

Recommendations for Excavations

The SLO-data team recommended that project directors include naming practices in their written guidelines with examples of their use throughout the data life cycle. In addition, project directors were advised to reduce the complexity of identifiers and streamline their creation process.

To meet the dual needs of trench teams recording identifiers on physical finds during fieldwork and future researchers locating those same finds in storage boxes, the SLO-data team recommended that the Americas Project adopt a shorter identifier consisting of the site code, unit number, and a sequential number. Such an identifier was more likely to fit on smaller elements while remaining descriptive enough for finds to be located and returned to their proper storage location. As a result, new naming conventions were put into practice when creating identifiers for bones, pottery, and finds bags. The SLO-data team also recommended developing written guidelines for naming practices so that everyone shared an understanding of what to record. Rather than using freeform recording on finds bags, the team recommended printing bag tags with category names in a fixed order that would be used across all documentation. During the next field season, the trench supervisors created printable bag tags and began placing them inside finds bags. Additionally, three recommendations were made to bridge language differences in the written guidelines:
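The shorter identifier recommended for the Americas Project combines three parts. As a minimal sketch, assuming a hyphen separator and a site code of two to four uppercase letters (both hypothetical choices, not the project's actual format), an identifier can be built and checked in Python and then split back into its parts when a find needs to be returned to storage:

```python
import re

# Hypothetical pattern: site code (2 to 4 uppercase letters), unit number,
# and sequential number, separated by hyphens. The separator and field
# widths are illustrative assumptions, not the project's convention.
ID_PATTERN = re.compile(r"^([A-Z]{2,4})-(\d+)-(\d+)$")


def make_id(site_code: str, unit: int, seq: int) -> str:
    """Build a short identifier from site code, unit number, and sequence."""
    identifier = f"{site_code}-{unit}-{seq}"
    if not ID_PATTERN.match(identifier):
        raise ValueError(f"malformed identifier: {identifier!r}")
    return identifier


def parse_id(identifier: str) -> tuple[str, int, int]:
    """Split an identifier into its parts, e.g., to find a storage box."""
    match = ID_PATTERN.match(identifier)
    if match is None:
        raise ValueError(f"malformed identifier: {identifier!r}")
    site_code, unit, seq = match.groups()
    return site_code, int(unit), int(seq)


print(make_id("AB", 12, 7))  # AB-12-7
print(parse_id("AB-12-7"))   # ('AB', 12, 7)
```

Because the pattern accepts only uppercase site codes, a call such as `make_id("ab", 12, 7)` raises an error instead of producing a label that would later need correcting, echoing the uppercase rule for finds bags described earlier.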

(1) Include a list of relevant archaeological terms in both English and Spanish and exemplar documentation to reference (e.g., completed notebook pages, bag tags).

(2) Appoint a field supervisor to evaluate and supervise fieldwork and documentation.

(3) Make the written guidelines available in English and Spanish along with the templates.

The project director reviewed the manual with team members and gave each a copy to use during the field season. As one trench supervisor explained, the guidelines reduced time spent resolving conflicts: “Well, what was easier . . . we had a much more structured protocol and paperwork . . . last year, it went by more slowly because there was a lot more confusion, so there was more time [spent] between . . . supervisors who had two different methodologies.” The new manual not only saved time during fieldwork but also, more importantly, reduced tension among team members and resolved potential disagreements before they started. The project director appointed a field supervisor and worked with her so that both English and Spanish speakers could get instruction when needed. The director and supervisor visited trenches to evaluate progress, answer questions, and do quality checks. They also reviewed documentation before it was digitized.

On Europe Project 2, the SLO-data team recommended that trench supervisors number small finds in a continuous sequence over the course of the field season. The SLO-data team also recommended designing a new data-entry system for trench supervisors. This recommendation was implemented, and the system's new documentation explained how to use the data-entry forms, why identifiers matter, and best practices for writing them. The forms included written instructions and required fields with limited choices for data entry, which streamlined and structured the process. Drop-down lists of prepopulated trench numbers reduced data-entry errors and the number of ambiguous locus and small find identifiers. Additionally, a new photography form meant that team members could label their photos automatically with the correct identifier and associate them with the areas and objects they depicted. The photographer wrote a detailed set of guidelines describing this workflow for future use. Students no longer had to be dedicated to photo labeling, which freed them to participate in other tasks.
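The difference between daily renumbering and a continuous sequence can be sketched in a few lines of Python. The class name and the trench label T90 are hypothetical. In this sketch each trench keeps a counter that is never reset during the season, so a trench number and a find number together are enough to identify a find, whereas under daily renumbering the recording date would also be needed.

```python
from collections import defaultdict
from itertools import count


class SmallFindRegister:
    """Issue small-find numbers that run continuously through a season.

    Each trench has its own counter, starting at 1 and never reset,
    so (trench, number) alone identifies a small find.
    """

    def __init__(self) -> None:
        # A trench seen for the first time gets a fresh counter starting at 1.
        self._counters = defaultdict(lambda: count(1))

    def next_number(self, trench: str) -> int:
        return next(self._counters[trench])


register = SmallFindRegister()
first_day = [register.next_number("T88") for _ in range(5)]
second_day = [register.next_number("T88") for _ in range(3)]
print(first_day)   # [1, 2, 3, 4, 5]
print(second_day)  # [6, 7, 8], not a second run starting at 1
print(register.next_number("T90"))  # 1
```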

To address the bottleneck in issuing and managing identifiers at Europe Project 1, the SLO-data team recommended that the project directors depart from MOLAS guidelines by (1) assigning batches of identifiers for units and levels to each trench and (2) giving trench supervisors the authority to mint and assign identifiers within their trench's batch. The project directors implemented this suggestion, which was expected to eliminate bottlenecks, minimize duplicate identifiers, and show the order of excavation within each trench. Because the MOLAS guidelines supported only paper-based recording, the SLO-data team also recommended on-screen error messages and additional written guidelines for data entry, including screenshots explaining what data to enter where, how to fix common data-entry mistakes, and answers to frequently asked questions. The project directors accepted the recommendation and revised their written guidelines to include on-screen error messages. These revised guidelines were used during the next field season and were expected to smooth students’ transitions between paper recording and database entry.
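Batch allocation and entry-time validation can both be sketched in Python. The sketch assumes, hypothetically, that each trench receives a consecutive block of integers of a fixed size and that units are written on paper as [1001] but stored in the database as the bare number 1001; the class, function names, and batch sizes are illustrative and are not taken from Europe Project 1.

```python
class IdentifierBatch:
    """A block of unit identifiers reserved for one trench (hypothetical).

    Directors allocate non-overlapping ranges in advance; the trench
    supervisor then mints identifiers locally, in order, without a trip
    to a central register.
    """

    def __init__(self, trench: str, start: int, end: int) -> None:
        self.trench = trench
        self.start, self.end = start, end  # inclusive range
        self._next = start

    def mint(self) -> int:
        if self._next > self.end:
            raise RuntimeError(f"batch for trench {self.trench} exhausted; request a new one")
        value = self._next
        self._next += 1
        return value


def allocate_batches(trenches: list[str], first: int, size: int) -> dict[str, IdentifierBatch]:
    """Give each trench a consecutive, non-overlapping block of `size` numbers."""
    batches = {}
    for i, trench in enumerate(trenches):
        start = first + i * size
        batches[trench] = IdentifierBatch(trench, start, start + size - 1)
    return batches


def validate_unit_entry(raw: str) -> int:
    """Check a typed unit number, raising the kind of message a form might show."""
    text = raw.strip()
    if text.startswith("[") or text.endswith("]"):
        raise ValueError("Enter the unit number without square brackets, e.g., 1001 not [1001].")
    if not text.isdigit():
        raise ValueError(f"Unit number must contain digits only, got {raw!r}.")
    return int(text)


batches = allocate_batches(["A", "B"], first=1000, size=100)
print(batches["A"].mint(), batches["A"].mint(), batches["B"].mint())  # 1000 1001 1100
print(validate_unit_entry("1001"))  # 1001
```

Because each supervisor mints numbers in order within a block that no other trench can use, duplicates cannot arise between trenches, and within a trench the sequence reflects the order in which units were opened.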

Reuser Interviews

Although none of the interviewed reusers specifically mentioned identifiers, several of the problems they encountered and the changes they made to their data practices related to using identifiers to link and integrate data and documentation. Context and specialist data were of particular concern. Many reusers wanted more specific, systematic, and better-integrated information about archaeological context. However, context data were often stored in separate tables and were consequently inaccessible. R23 emphasized the need to keep enough contextual information with artifact data so finds could be analyzed from those tables alone, even if that information was not necessary for the original analysis: “Even if that orphan table is all that anybody ever has 50 years from now, that there's enough information in the data to that table that somebody would know it's from this site, it's from this room, it's from this feature number, it's from this [level].”

Similarly, when archaeological context data were not thoroughly integrated into the database, it was difficult for reusers to sort or query the data and, consequently, to interpret them holistically. One reuser described the need for contextual data: “I feel that there is too much compartmentalization of our archaeological research in the sense that most sites divide, still, material by type. . . . But none of us know how it all fits together. And that defies the purpose of what we're doing as archaeologists.” This lack of intellectual integration is reflected in the lack of data integration within project datasets and databases. R05 summed up both the goal and the challenge: “It would be really great to have an integrated database where you've got the context list, and then you've got the specialist databases that all link into it using the context number. . . . But that is a bit of a chicken and egg situation because as a specialist you're asking them for that . . . and you've got to give your database at some point.” Specialists are particularly aware of the complicated nature of creating and integrating archaeological data. Planning for this process in advance can help smooth the integration of these complex and iterative data.

Unique identifiers are an essential part of integrating data in meaningful and understandable ways because they enable the tracking and disambiguation of finds and their associated contextual data. Several reusers were proponents of recording in relational databases and using value lists within those databases to facilitate consistent data entry, more easily link data together, and retain descriptions with the data. Doing so sped up their data entry and made it easier to share data. They also emphasized the need to keep data together and to keep supporting documentation (e.g., photographs, reports) with the data, so reusers could assess the data's content, scope, and relevance: “I don't want to have to go back to every one of 250+ publications, I want—if I've got something in the database, I want to understand it in the database” (R11). Reusers therefore suggested that both specialist and excavation data structures (whether databases or spreadsheets) need to better integrate contextual information with other project data. One way of accomplishing this is through clearer written guidelines that describe the project's approach to assigning identifiers that are unique within the project so data from across the project can be linked and archived.
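R05's integrated database, in which specialist tables link to the context list through the context number, can be sketched with Python's built-in sqlite3 module. The table names, columns, and sample rows below are hypothetical. A foreign key ties each faunal record to an existing context, and a join recovers the site, room, feature number, and level that R23 wanted to travel with artifact data.

```python
import sqlite3

# Hypothetical schema: one context list and one specialist table that
# links to it through the context number.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE context (
    context_no INTEGER PRIMARY KEY,
    site       TEXT NOT NULL,
    room       TEXT,
    feature_no TEXT,
    level      TEXT
);
CREATE TABLE faunal_find (
    find_id    TEXT PRIMARY KEY,
    context_no INTEGER NOT NULL REFERENCES context(context_no),
    element    TEXT
);
""")
conn.execute("INSERT INTO context VALUES (1002, 'Site AB', 'Room 3', 'F12', 'Level 2')")
conn.execute("INSERT INTO faunal_find VALUES ('AB-1002-1', 1002, 'phalanx')")

# The join attaches site, room, feature, and level to each find.
rows = conn.execute("""
    SELECT f.find_id, f.element, c.site, c.room, c.feature_no, c.level
    FROM faunal_find AS f JOIN context AS c USING (context_no)
""").fetchall()
print(rows)  # [('AB-1002-1', 'phalanx', 'Site AB', 'Room 3', 'F12', 'Level 2')]
```

With foreign keys enabled, inserting a find whose context number is not in the context list raises `sqlite3.IntegrityError`, catching unlinked records at entry time. For archiving, the result of the join could also be exported as a flat table so that context information stays with the finds even if the tables are later separated.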

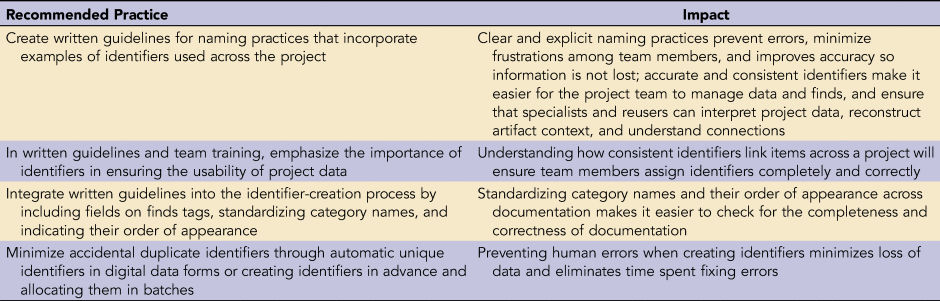

Based on excavation team and reuser experiences, excavation projects can adopt several practices to improve the development, implementation, and use of written guidelines for creating unique identifiers (Table 3). These will help streamline the process of creating identifiers, ensure that identifiers meet needs both in the field and in the database, and create identifiers that are accurate and complete so project data can be meaningfully integrated. This helps improve the clarity and interpretability of data both for project team members and future reusers.

Table 3. Recommended Practices for Written Guidelines on Naming Practices.

Limitations and Future Research

Although the findings and recommendations from this study are based on a rich variety of specialists and archaeological research projects in diverse locations, they cannot represent the full diversity of archaeological research. In particular, not all of the proposed best practices for written guidelines may apply to CRM. Even so, broader research has demonstrated that CRM projects face some of the same challenges. Ortman and Altschul (2023) argue that, given current disparities in data collection, CRM-based archaeological data need, and will increasingly need, more explicit methods and better data integration standards. Future research would do well to determine whether and how the findings and recommendations from this SLO-data study apply to CRM.

Because all the excavation sites were field schools, many of the improvements to written guidelines were designed for archaeologists in training rather than experts. This raises the question of whether and how written guidelines would differ for excavation projects composed of experts in archaeology and other areas (e.g., specialists, catalogers, photographers, illustrators). Although some findings show the influence experts can have in shaping written guidelines, project directors would do well to consider how to draw on team expertise to make existing written guidelines more explicit and to refine them.

Additionally, this study focused on data created during fieldwork; there were not many opportunities to observe how written guidelines affect data created in labs and post-fieldwork processing. This is particularly important because findings show that existing written guidelines rarely address the data life cycle beyond excavation. The recommendations and checklist consider some of the ways that integrating specialist feedback can be useful even if their data collection occurs after fieldwork; however, project directors should also consider how written guidelines can improve other aspects of the data life cycle such as storage and management.

Another limitation of this study is that most of the observed projects relied on paper-based recording. Increasingly, archaeologists are turning to digital solutions for data collection. These recommendations begin to address some of the ways digital data collection can incorporate written guidelines (e.g., controlled vocabularies, prompts, and established workflows), but further research is needed to evaluate how such built-in guidance affects born-digital records.

Finally, the SLO-data team observed projects over only two seasons, limiting opportunities to assess the implementation of many recommendations and their impacts on data usability. The recommendations integrated input from the project directors, who agreed that they would improve their data creation. Nevertheless, follow-up interviews would help clarify the longer-term adoption and impacts of the recommended changes.

DISCUSSION AND CONCLUSION

Written guidelines alone do not ensure the creation of datasets that are consistent, comparable, and comprehensible enough for an excavation team to use across seasons and personnel changes. Achieving these goals also requires communication and collaboration around the development, understanding, and application of written guidelines throughout the excavation process. The SLO-data team created a checklist of recommended practices and their implementation for each stage of an archaeological excavation to facilitate this collaborative approach (see Supplemental Text 1). Although project directors may implement these recommendations to create data that better meet their own needs, findings from interviews with reusers indicate that the same actions also make project data more reusable for others.

Treating written guidelines as living documents can help project directors ensure that their teams’ needs are being met and that changes from year to year are documented. Project directors' openness to change was critical for creating more comparable, comprehensible, and integrated data. For the Americas Project, this meant bringing together a team to create written guidelines that standardized excavation methods and documentation that had previously relied on varying verbal instructions. For Europe Project 2, it meant replacing informal guidelines passed down verbally each year with a data-entry system using formalized naming conventions (i.e., controlled vocabulary, identifiers) to reduce errors and enable linking among data and documentation. For the Africa Project, it meant supplementing written guidelines with conversations among students, trench supervisors, and specialists to create a mutual understanding of how the guidelines should be applied to ensure everyone's needs were met. For Europe Project 1, this meant altering an established standard for written guidelines to better meet the needs of the full excavation team.

Despite the debate between proponents of standardized and reflexive data practices, findings indicate that projects needed to integrate both when recording and archiving their excavations to create usable data. Within the excavation projects, standardized data practices ensured comparability and allowed the team to carry out its work consistently. Reflexive data, in contrast, allowed the team to better understand the excavation site and one another's practices within it. It was common for excavation team members to refer to prior years’ notebooks, including their own, to get a fuller picture of what had been done and how people were interpreting the site. Similarly, reusers wanted to be able to reconstruct the excavation contexts, the process of excavation, and artifact locations. They needed information that preserved details about the site, like visuals (e.g., photographs and final drawings) and narratives (e.g., notebooks), to contextualize and understand the data at hand, even when those data conformed to standard practices. Naming conventions, particularly identifiers, are critical for supporting these efforts because they link data and documentation as they move through the life cycle. Identifiers integrate the data physically and digitally so that archaeologists can connect data intellectually. Projects need to collect and archive both standardized and reflexive data to make their data usable for teams and reusers alike.

Regardless of where projects situate themselves on the continuum between standardized and reflexive practices, findings suggest that the usability of their data benefits from democratizing the entire process of data creation. When team members were not consulted about methods, or when methods were allowed to be tacit rather than explicit, practices varied in often unknown or unrecognized ways, reducing the comparability of data and preventing certain types of analyses. Cocreating and documenting procedures in advance and allowing for documented divergence that meets the needs of the team help ensure that everyone has what they need, is on the same page, and can achieve their research objectives. This documentation is also exactly what reusers need when evaluating, analyzing, and interpreting others’ data. Without these descriptions, reusers often did not know whether data were comparable and could not comprehend exactly what the data represented.

To cocreate written guidelines, ongoing communication about different data needs must involve multiple team members and consider the various settings in which project data are (or will be) used. When team members do not understand each other's needs, they cannot create and document data in ways that meet those needs. Surfacing the different needs and approaches of various team members helps project directors think more carefully about future uses of project data. A lack of communication among team members also created issues when guidelines were interpreted differently and used inconsistently. It was essential to provide both structured and unstructured opportunities for ongoing communication to bring to the surface and resolve conflicting practices and make changes when agreed-on practices were no longer serving project needs. Rather than thinking about democratization only as decentering project directors’ interpretations of a site, involving varied team members in planning, creating, implementing, and communicating data creation practices and the written guidelines surrounding these practices ensures a more genuine cocreation of knowledge.

Acknowledgments

We thank the article's anonymous reviewers for their constructive recommendations and comments. No permits were required for this work.

Funding Statement

The Secret Life of Data project was made possible by a grant from the National Endowment for the Humanities (PR-234235-16). The views, findings, conclusions, and recommendations expressed in this article do not necessarily represent those of the National Endowment for the Humanities.

Data Availability Statement

The findings we report here result from the analysis of qualitative data that our team collected from interviews and observations conducted during the SLO-Data Project. We used strict naming conventions to manage the interview and observation data, in accordance with the requirements of the project's Institutional Review Board. To the extent possible, in consultation with the Institutional Review Board, we will make the full results available through the California Digital Library Merritt repository after conclusion of the grant and after we have concluded analysis and prepared the data for sharing.

Competing Interests

The authors declare none.

Supplemental Material

For supplemental material accompanying this article, visit https://doi.org/10.1017/aap.2023.38.

Supplemental Text 1. Written Guidelines Checklist.