Nomenclature

- UA: unmanned aircraft
- DNNs: deep neural networks
- LSS: Low Slow Small
- CFAR: constant false alarm rate
- MTI: moving target indicator
- RCS: radar cross section
- MIMO: multi input multi output
- FMCW: frequency modulated continuous wave
- DTMB: digital terrestrial multimedia broadcast
- m-D: micro-Doppler
- STFT: short time Fourier transform
- SVM: support vector machine
- NBC: naive Bayes classifier
- FFT: fast Fourier transform
- SVD: singular value decomposition
- CVD: cadence velocity diagram
- EMD: empirical mode decomposition
- PCA: principal component analysis
- LTCI: long time coherent integration
- NUSP: non-uniform sampling order reduction
- RFT: Radon Fourier Transform
- CNNs: convolutional neural networks
- SNR: signal to noise ratio
- MLP: multi-layer perceptron
- YOLO: You Only Look Once
- MFCCs: Mel-frequency cepstral coefficients
- RDF: Radio Direction Finding
- TDOA: time difference of arrival

1.0 Introduction

At present, unmanned aircraft (UA) are widely used around the world, in fields including aerial photography, express transportation, emergency rescue, electric power inspection, agricultural plant protection, border monitoring, mapping, fire monitoring and environmental protection. However, the rapid popularisation of UA also brings serious security problems [Reference Chen, Wan and Li1]. In recent years, the media have reported dozens of public security incidents caused by UA intrusion, whose main targets are airports, prisons, public buildings and other sensitive places [Reference Jin and Shang2].

UA detection systems generally use radar as the core sensor, supplemented by photoelectric (visible and infrared), acoustic and radio detection sensors, with the aim of achieving all-round situational awareness when securing major events and defending important sites. When multiple sensors are deployed, both visible and infrared devices offer some classification ability as well as more accurate positioning and ranging. Visible-light cameras are usually cheap, while infrared cameras are expensive, but both are sensitive to environmental conditions. Acoustic sensors are less sensitive to the environment, but their limited detection range restricts their application. Radio detection is sensitive to complex electromagnetic environments and is ineffective against electromagnetically silent UA. Therefore, in view of its accurate positioning ability and large detection range, as well as its better target classification ability and environmental adaptability, radar has become the most common means of UA detection.

Since current multi-sensor UA detection systems cannot operate fully automatically, detected targets must be confirmed manually. For example, the early detection results from the radar might guide the operator to observe the direction of the target through the optical camera for confirmation [Reference Luo and Yang3]. In recent years, the demand for multi-source information fusion in various applications has been increasing, which makes data fusion technology increasingly important. The goal of data fusion is to make up for the weakness of a single sensor, so as to obtain more accurate detection results. On the other hand, artificial intelligence and deep neural networks (DNNs) have become very attractive data processing methods, which can find high-order abstract features that are difficult to obtain with typical feature extraction methods. Therefore, they are widely used in massive multi-source data processing, and have achieved good results in UA detection and classification.

This paper focuses on research results for UA detection and classification that use radar, visible light, infrared, acoustic sensors and radio detection as the data acquisition tools and deep learning as the main data analysis tool. In recent years, there has been a growing demand for multi-source information fusion in various applications, and data fusion technology has received wide attention [Reference Li, Zha and Zhang4]. The sensors selected in this paper perform well in typical monitoring systems and complement each other in multi-sensor information fusion schemes. Radar is generally considered reliable detection equipment, but in most cases it still has difficulty distinguishing small UA from birds [Reference Chen, Liu and Chen5]. Taking the airport clearance area as an example, birds and UA are the two main dangers that threaten flight safety during takeoff and landing, and the airport needs to take different countermeasures depending on which target is discovered. After a UA target is found, the airport will first issue an early warning, guide departing and arriving flights to avoid the target and coordinate with the airport and local public security for disposal. After a bird target is found, the airport will use a variety of bird repulsion equipment to drive it away from the dangerous area, or take avoidance measures based on a bird driving strategy. Therefore, it is necessary to identify both UA and birds. Because UA and bird targets come in a wide variety of sizes, shapes and motion characteristics, they exhibit different radar scattering and Doppler characteristics. Both are typical Low Slow Small (LSS) targets with low observability. At present, the detection methods for LSS targets mainly involve constant false alarm rate (CFAR), moving target indicator (MTI), moving target detection (MTD), coherent accumulation and feature detection. When existing technology is used to detect LSS targets, the complex environment, the many false targets, strong target mobility and short effective observation time lead to low detection probability, high clutter false alarm rates, low accumulation gain and unstable tracking. These problems make target detection and judgment very difficult, and make the detection of birds and UA a worldwide problem.

This paper summarises recent research progress in the detection and recognition of unmanned rotorcraft and bird targets based on multi-source sensors in complex scenes, and points out the development trend. The paper is organised as follows. Section 2 introduces radar detection technology for UA and bird targets and the relevant system applications. Section 3 focuses on the technical methods of UA radar target detection and classification. First, from the aspects of target characteristic cognition and feature extraction, echo modeling and micro-motion characteristic cognition methods are introduced to realise a fine feature description of the target. Next, methods of distinguishing UA from birds according to differences in motion trajectory and polarisation characteristics are discussed. Finally, the effective technical approaches and related achievements of intelligent target recognition combined with machine learning or deep learning are summarised. Section 4 introduces multi-source detection methods for non-cooperative UA targets, such as visible light, infrared, acoustic and radio detection, and discusses the target detection and classification methods based on these technical means. After comparing the detection ability and performance of the various sensors, Section 5 introduces several typical multi-sensor fusion detection systems and recommends a construction scheme for UA detection systems based on four types of sensors. Section 6 summarises the paper and outlines future research and development trends.

2.0 Radar technology

As the main means of air and sea target surveillance and early warning, radar is widely used in national defense and public security. Compared with other technologies, radar is effectively the only one that can achieve long-range detection from several to tens of kilometres, and it is almost unaffected by unfavourable light and weather conditions. However, traditional surveillance radar is generally designed to detect moving targets with relatively large radar cross section (RCS) and high speed, and is not suitable for detecting LSS targets such as low-flying UA with very small RCS and slow speed. In addition, UA and birds are very similar as radar targets, so reliable classification of the two is another problem. Therefore, avian radar requires special design to meet these demanding requirements [Reference Chen and Li6]. Deep learning-based schemes arrange the original data into a specific data structure, greatly reduce the workload of manual annotation through fusion processing and maintain the recognition performance of the system when little data and few annotations are available [Reference Li, Sun and Sun7].

2.1 UA detection system

The traditional surveillance radar usually uses a mechanically scanning antenna to search the whole airspace, so the dwell time on a single target is short. It can only obtain limited target data such as the azimuth, angle, radial velocity and RCS, so it is difficult to detect LSS targets. However, with the continuous development of radar technology in recent years, the ability to detect weak targets is steadily improving, which provides a new way to detect and recognise LSS targets, and new radar systems for weak targets such as UA have become a research focus [Reference Luo8].

Research institutions and enterprises such as the University of London, Warsaw University of Technology, Fraunhofer-Gesellschaft, the French National Aerospace Research Center, Robin Radar of the Netherlands, Aveillant Radar of the UK and Wuhan University have conducted a series of UA detection and identification research projects using advanced radar technologies including active phased array, multi input multi output (MIMO), frequency modulated continuous wave (FMCW), holographic and passive radar, and have developed preliminary engineering capability. Figure 1 shows the Robin phased array radar, Aveillant holographic radar and Fraunhofer-Gesellschaft LORA11 passive radar for UA detection.

Figure 1. Typical UA detection radar system.

Active radar requires frequency allocation and emits strong electromagnetic radiation. In some application scenarios, such as airports, strict electromagnetic access rules are in place. The management department is usually cautious about the use of active radar, and even scientific experiments need to go through a strict approval process. Passive radar, by contrast, has the advantages of low power consumption, concealment and good coverage. As an economical and safe means of non-cooperative target detection, and especially with the improvement of hardware performance and the growing availability of external illuminators such as communication and wireless network signals, it has attracted extensive attention and research in many countries in recent years. The radio wave propagation laboratory of Wuhan University has carried out a series of research projects on UA detection using passive radar [Reference Liu, Yi and Wan9–Reference Dan, Yi and Wan11]. It uses digital terrestrial multimedia broadcast (DTMB) signals to carry out UA detection experiments, and studies time-varying strong clutter suppression in complex urban environments. Experimental results show that after clutter suppression, the detection distance is more than 3 km for a typical DJI Phantom 3. Figure 2 shows an example of a UA target surveillance application based on DTMB signal passive radar.

Figure 2. UA target surveillance application based on DTMB signal passive radar [Reference Liu, Yi and Wan9].

2.2 Avian radar system

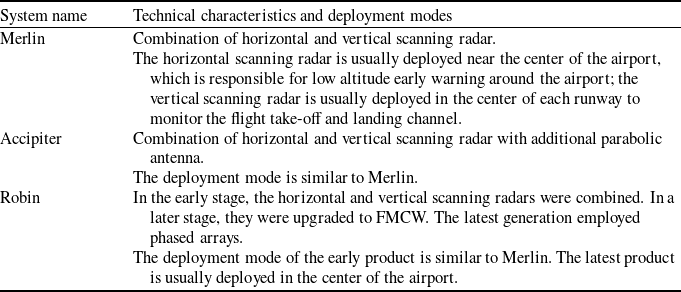

Since 2000, a number of avian radar systems have been developed, the most representative of which are Merlin radar from the United States, Accipiter radar from Canada and Robin radar from the Netherlands.

Merlin radar was the first avian radar in the world. It is equipped with two navigation radars of different wavebands, one being S-band horizontal scanning, the other being X-band vertical scanning, both of which adopt standard T-shaped waveguide array antennae [Reference Anderson12]. This dual-radar system has also become the most typical technical scheme for other avian radar systems. Figure 3(a) shows a typical Merlin radar system. For large birds or flocks, the detection range of the system can reach 4 to 6 nmi (7 to 10 km) horizontally and 15,000 feet (4,500 m) vertically. For small- and medium-sized birds, the detection range of the system can reach 2 to 3 nmi (4 to 6 km) horizontally and 7,500 feet (2,300 m) vertically.

Figure 3. Typical avian radar system.

Accipiter has also developed a similar avian radar system with horizontal and vertical scanning radars, which has been applied in some airports in the United States and Canada. In order to obtain 3D target information, some Accipiter radars replace the waveguide slot antenna with a parabolic antenna, and integrate the data processor, control system, GPS, mobile power supply and other auxiliary operation systems on a mobile trailer, as shown in Fig. 3(b). The X-band parabolic antenna used in the Accipiter radar is usually placed on top of the trailer. However, compared with the waveguide slot antenna, which has a larger beam width, the detection efficiency of this kind of antenna is lower. To make up for this deficiency, Accipiter has developed a variety of avian radar antennae, such as multi-beam parabolic antennae, dual axis scanning parabolic antennae and two parabolic antennae with different tilt angles, to improve the coverage of parabolic antennae in the vertical plane [Reference Weber, Nohara and Gauthreaux13, 14].

The first generation Robin avian radar, which appeared after 2010, continues the dual-radar scheme of S-band horizontal scanning radar and X-band vertical scanning radar adopted by Merlin [Reference Hoffmann, Ritchie and Fioranelli15]. The antenna of the S-band horizontal scanning radar is 3.6 m long. Its rotating speed reaches 45 rpm, which is the fastest among the existing avian radars. The S-band radar can detect large birds within a radial distance of 10 km and a vertical height of 2 km. The antenna of the X-band vertical scanning radar, which is 8 feet (2.4 m) long, can scan the airspace vertically and rotate at 24 rpm, detecting small birds within 2.5 km and large birds and flocks within 5 km. The information from the overlapped coverage area of the two radars can be associated and integrated to obtain three-dimensional target information in this area. In addition, Robin has developed a mechanically scanned FMCW radar, with an average output power of only 400 mW, to replace the X-band vertical scanning radar, as shown in Fig. 3(c). Unlike pulse radar with only one antenna, FMCW avian radar adopts two separate antennae. One antenna continuously transmits electromagnetic waves of varying frequency, and the other continuously receives the echoes. With three working modes of scanning, tracking and staring, the FMCW radar has more powerful bird detection ability and is especially suitable for professional ornithological research. The latest generation of Robin radar shown in Fig. 1(a) adopts X-band FMCW and phased array technologies, with an average output power of 20 W. It can obtain three-dimensional target information in the whole airspace within 15 km, and the target trajectory is updated every 1 s. It can also distinguish between flying birds and UA based on the micro-Doppler (m-D) feature.

Table 1 compares the technical characteristics and deployment modes of three typical avian radars. In the remainder of this section, the latest research results of UA detection and classification with radar will be introduced, including the traditional target feature-based classification method and the latest deep learning method.

Table 1. Comparison of typical avian radars

3.0 Radar target detection and recognition method

3.1 M-D feature extraction

The micro-motion of moving target components introduces modulation sidebands near the radar Doppler signal generated by the motion of the target body, resulting in Doppler spectrum broadening, which is called the m-D signal [Reference Zhang, Hu and Luo16]. The m-D feature is currently the most commonly used fine radar signal feature in automatic target classification, with applications such as ground moving target classification, ship detection, human gait recognition and human activity classification, and it has become a very popular research direction in UA radar detection in recent years [Reference Li, Huang and Yin17].

The internal motion of the target, including blade rotation of a rotor UA or helicopter, the turbine propulsion of a jet, the wing flapping of a bird and so on, can be statistically described by m-D features [Reference Ritchie, Fioranelli and Griffiths18]. The m-D features generated by the flapping of bird wings and the rotation of UA rotors are the main technical means of radar target recognition [Reference Chen19]. Figure 4 shows the simulation results of m-D characteristics of a bird and a rotor UA at X-band. It can be seen that the m-D of bird targets is generally concentrated in the low-frequency region with a long period, which is closely related to the flapping motion characteristics of different types of bird wings, while the m-D characteristics of UA targets show obvious periodic characteristics, significantly different from birds. The echo of a rotor UA is the superposition of the Doppler signals of the main body and the rotor components, so the m-D characteristics of UA with different types and numbers of rotors are also different.

Figure 4. Simulation results of m-D characteristics of flying birds and rotor UA [Reference Chen19].

The modeling of m-D characteristics and fine characteristic cognition of rotor UA are the preconditions for subsequent detection and target classification. The echo signal of the rotor UA is the superposition of the Doppler signal of the main body of the UA and the m-D signal of the rotor components, as shown in Fig. 5 [Reference Jahangir and Baker20].

Figure 5. m-D characteristics of four-rotor UA [Reference Jahangir and Baker20].

First, the main body motion and micro-motion of a UA target with micro-motion components are modeled, analysed and parameterised, and then the relationship among Doppler frequency, rotation rate, and the number and size of blades is derived [Reference Singh and Kim21]. For a multi-rotor UA, assuming that all rotor blades have the same RCS, normalised to 1, the target echo model based on the helicopter rotor model is as follows [Reference Song, Zhou, Wu and Ding22]:

\begin{align}{s_{\sum}}(t) &= \sum_{m = 1}^M L\,\textrm{exp}\!\left\{ - \textrm{j}\frac{4\pi}{\lambda}\left[ R_{0_m} + z_{0_m}\sin\beta_m \right] \right\} \cdot \sum_{k = 0}^{N - 1} \textrm{sinc}\left\{ \frac{4\pi}{\lambda}\frac{L}{2}\cos\beta_m \cos\!\left( \Omega_m t + \varphi_{0_m} + k2\pi/N \right) \right\} \nonumber\\&\quad \textrm{exp}\left\{ - \textrm{j}\Phi_{k,m}\!\left( t \right) \right\} \end{align}

where $M$ is the number of rotors, $N$ is the number of blades per rotor, $L$ is the blade length, ${R_{{0_m}}}$ is the distance from the radar to the centre of the $m$th rotor, ${z_{{0_m}}}$ is the height of the $m$th rotor blade, ${\beta _m}$ is the pitch angle from the radar to the $m$th rotor, ${\Omega _m}$ is the angular rotation frequency of the $m$th rotor in rad/s, and ${\varphi _{{0_m}}}$ is the initial rotation angle of the $m$th rotor.
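The multi-rotor echo model above can be evaluated numerically to see how the rotor parameters shape the signal. The following is a minimal sketch, not the cited authors' implementation. It assumes that the phase term $\Phi_{k,m}(t)$, which is not defined in the text, equals the argument of the sinc function (as in the standard helicopter rotor model). The function name `rotor_echo`, the dictionary layout of `rotors` and all numerical values are hypothetical.

```python
import numpy as np

def rotor_echo(t, wavelength, blade_len, rotors, n_blades):
    """Echo of a multi-rotor target following the form of the model above.

    rotors: list of dicts with keys R0, z0, beta, omega, phi0 (hypothetical layout).
    """
    k4 = 4.0 * np.pi / wavelength
    s = np.zeros_like(t, dtype=complex)
    for r in rotors:
        # Constant phase term set by the position of this rotor
        body = blade_len * np.exp(-1j * k4 * (r["R0"] + r["z0"] * np.sin(r["beta"])))
        blades = np.zeros_like(t, dtype=complex)
        for k in range(n_blades):
            # ASSUMPTION: Phi_{k,m}(t) is taken equal to the sinc argument
            phi = k4 * (blade_len / 2.0) * np.cos(r["beta"]) * np.cos(
                r["omega"] * t + r["phi0"] + 2.0 * np.pi * k / n_blades)
            # np.sinc(x) is sin(pi x)/(pi x), so divide by pi to get sin(x)/x
            blades += np.sinc(phi / np.pi) * np.exp(-1j * phi)
        s += body * blades
    return s

# Hypothetical quadcopter: 4 rotors, 2 blades each, X-band (3 cm wavelength)
fs = 20_000.0
t = np.arange(0.0, 0.1, 1.0 / fs)
quad = [dict(R0=500.0 + 0.2 * i, z0=0.0, beta=0.1,
             omega=2.0 * np.pi * 80.0, phi0=0.3 * i) for i in range(4)]
echo = rotor_echo(t, 0.03, 0.12, quad, 2)
```

Because the blades of one rotor are evenly spaced, the contribution of each rotor repeats every $2\pi/(N\Omega_m)$ seconds, which is the periodic blade-flash pattern visible in m-D spectrograms of rotor UA.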

The instantaneous Doppler frequency of the echo signal can be obtained from the time derivative of the phase function of the signal, and the equivalent instantaneous m-D frequency of the k th blade of the m th rotor is obtained as

It can be seen from the above formula that the m-D characteristic of a multi-rotor UA is composed of $M \times N$ sinusoidal curves and is affected by carrier frequency, number of rotors, rotor speed, number of blades, blade length, initial phase and pitch angle. Among them, the carrier frequency, blade length and pitch angle are only related to the amplitude of the m-D frequency, while the number of rotors, rotor speed, blade number and initial phase will affect the amplitude and phase of the m-D characteristic curve.
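The expression for the instantaneous m-D frequency is not reproduced in this copy of the text. As a hedged reconstruction only, suppose that the phase term $\Phi_{k,m}(t)$ of the echo model has the same form as the sinc argument, which is the usual choice in the helicopter rotor model but is an assumption here. Differentiating the phase $-\Phi_{k,m}(t)$ with respect to time and dividing by $2\pi$ then gives one sinusoid per blade:

\begin{align}
\Phi_{k,m}(t) &= \frac{4\pi}{\lambda}\frac{L}{2}\cos\beta_m \cos\!\left( \Omega_m t + \varphi_{0_m} + k2\pi/N \right) \quad \text{(assumed)} \nonumber\\
f_{k,m}(t) &= -\frac{1}{2\pi}\frac{\mathrm{d}\Phi_{k,m}(t)}{\mathrm{d}t} = \frac{L\,\Omega_m}{\lambda}\cos\beta_m \sin\!\left( \Omega_m t + \varphi_{0_m} + k2\pi/N \right) \nonumber
\end{align}

Under this assumption the amplitude $L\Omega_m\cos\beta_m/\lambda$ depends on the wavelength, blade length, pitch angle and rotor speed, while $\Omega_m$, $\varphi_{0_m}$ and $k2\pi/N$ set the period and phase of each curve; the overall sign depends on the phase convention. As an illustration with hypothetical values $L = 0.12$ m, $\lambda = 0.03$ m, $\Omega_m = 2\pi \times 80$ rad/s and $\beta_m = 0$, the amplitude is $0.12 \times 502.7 / 0.03 \approx 2.0$ kHz.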

Due to the influence of environmental noise, it is difficult to measure m-D characteristics in the field, which requires the radar to have a strong ability to accumulate target Doppler echo information. Figure 6 compares the field measurement and data processing results of the m-D characteristics of an owl and a DJI S900 UA. Since the wing width of an owl is large and its flapping frequency is significantly lower than the rotor speed of the UA, the m-D intensity of the UA is significantly weaker than that of the owl, while its m-D period is significantly shorter [Reference Rahman and Robertson23]. This obvious difference in m-D characteristics lays a theoretical foundation for classification and recognition algorithms for birds and UA that offer good discrimination and high reliability without prior information. At present, the main difficulties of using m-D features in avian radar to recognise and classify such LSS targets include weak echoes, low time-frequency resolution and the unclear relationship between m-D features and the micro-motion of target components.

Figure 6. Measurement results of m-D characteristics of flying birds and UA targets [Reference Rahman and Robertson23].

In Refs [Reference De Wit, Harmanny and Premel-Cabic24, Reference Harmanny, De Wit and Cabitc25], m-D features extracted with the short time Fourier transform (STFT) were used to classify UA. These works studied how to extract key features such as speed, tip speed, rotor diameter and rotor number from radar signals, so as to classify different rotor UA. Following a similar method, Molchanov et al. [Reference Molchanov, Egiazarian and Astola26] used the STFT to generate m-D features, extracted feature pairs from the correlation matrix of the m-D features and trained three classifiers, namely a linear and a nonlinear support vector machine (SVM) classifier and a naive Bayes classifier (NBC), to classify 10 types of rotorcraft and birds. Researchers at the Chinese Academy of Sciences [Reference Ma, Dong and Li27] proposed an m-D feature extraction algorithm based on instantaneous frequency estimation with a Gabor transform and the fast Fourier transform (FFT) to estimate the number of rotors, speed and blade length of UA.
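The STFT step shared by these works can be sketched with NumPy alone. The example below is an illustration under stated assumptions, not the processing chain of any cited reference: the function names, window length, hop size and the synthetic test signal (a tone whose frequency swings sinusoidally, standing in for a rotating scatterer) are all hypothetical.

```python
import numpy as np

def stft_spectrogram(x, fs, win_len=64, hop=16):
    """Magnitude spectrogram of a complex radar signal via a Hann-windowed STFT."""
    win = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop:i * hop + win_len] * win for i in range(n_frames)])
    # fftshift puts zero Doppler in the centre so both sidebands are visible
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(win_len, d=1.0 / fs))
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, np.abs(spec).T   # spectrogram shape: (n_freq, n_frames)

def doppler_ridge(freqs, spec):
    """Strongest Doppler bin in each frame, a crude instantaneous-frequency track."""
    return freqs[np.argmax(spec, axis=0)]

# Synthetic signal: instantaneous frequency f_dev * sin(2*pi*f_rot*t)
fs, f_dev, f_rot = 8000.0, 1000.0, 20.0
t = np.arange(0.0, 0.2, 1.0 / fs)
x = np.exp(-1j * (f_dev / f_rot) * np.cos(2.0 * np.pi * f_rot * t))
freqs, times, spec = stft_spectrogram(x, fs)
ridge = doppler_ridge(freqs, spec)
print("Doppler excursion (Hz):", ridge.min(), ridge.max())
```

The window length trades Doppler resolution against time resolution: a 64-sample window at 8 kHz gives 125 Hz bins over 8 ms, which is short enough here to follow a 20 Hz modulation. Features such as the peak Doppler excursion and the repetition period of the ridge are the kind of quantities from which tip speed and rotation rate are derived.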

De Wit et al. [Reference De Wit, Harmanny and Molchanov28] followed a signal processing flow similar to Ref. [Reference Molchanov, Egiazarian and Astola26] before applying singular value decomposition (SVD) to the spectra. To achieve fast classification, three main features were extracted: target speed, spectrum periodicity and spectrum width. Similarly, in Ref. [Reference Fuhrmann, Biallawons and Klare29], the authors used three common signal representations to generate m-D signatures, namely STFT, cepstrum and cadence velocity diagram (CVD), and then combined SVD feature extraction with an SVM classifier to classify measured data of fixed-wing and rotor UA and flying birds. Researchers at the Chinese Academy of Sciences [Reference Song, Zhou and Wu30] proposed a method of estimating the UA rotor rotation frequency based on joint autocorrelation-cepstrum analysis, which estimates the rotation frequency more effectively through weighted equalisation.
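A CVD and an SVD-based descriptor can be sketched as follows. This is a simplified illustration, assuming the CVD is taken as the magnitude of the FFT along the time axis of a spectrogram and that normalised leading singular values serve as features; the exact features used in the cited works may differ, and the function names and the synthetic spectrogram are hypothetical.

```python
import numpy as np

def cadence_velocity_diagram(spec, frame_rate):
    """CVD: magnitude of the FFT taken along the time axis of a spectrogram.

    spec: real array (n_doppler, n_frames); frame_rate: spectrogram frames per second.
    """
    centred = spec - spec.mean(axis=1, keepdims=True)   # drop the static component
    cvd = np.abs(np.fft.rfft(centred, axis=1))
    cadence = np.fft.rfftfreq(spec.shape[1], d=1.0 / frame_rate)
    return cadence, cvd

def svd_features(mat, n_keep=3):
    """Normalised leading singular values, a compact descriptor for a classifier."""
    s = np.linalg.svd(mat, compute_uv=False)
    return s[:n_keep] / s.sum()

# Synthetic spectrogram: every Doppler bin flashes at a 40 Hz cadence
frame_rate, n_frames = 400.0, 200
j = np.arange(n_frames)
spec = np.tile(1.0 + np.cos(2.0 * np.pi * 40.0 * j / frame_rate), (8, 1))
cadence, cvd = cadence_velocity_diagram(spec, frame_rate)
dominant = cadence[np.argmax(cvd.sum(axis=0))]
feats = svd_features(spec)
```

The dominant cadence indicates how often the m-D pattern repeats (for a rotor, a multiple of the rotation rate), while the singular value profile summarises how much of the spectrogram energy is captured by a few components.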

In order to use the phase spectrum in m-D feature extraction, Ren et al. [Reference Ren and Jiang31] proposed a robust signal representation, the two-dimensional regularised complex logarithm Fourier transform, together with an object-oriented dimension reduction technique, in which subspace reliability analysis was designed for the binary classification problem of distinguishing UA from bird targets. Another m-D label extraction algorithm was proposed in Ref. [Reference Oh, Guo and Wan32], where the authors used empirical mode decomposition (EMD) to classify UA automatically. Building on Ref. [Reference Oh, Guo and Wan32], Ma et al. [Reference Ma, Oh and Sun33] studied the feasibility of using six entropies of a group of intrinsic mode functions extracted by EMD for UA classification. They proposed fusing the extracted features into three kinds of entropies, and used the features obtained after signal downsampling and normalisation as the input of a nonlinear SVM classifier. Ref. [Reference Sun, Fu and Abeywickrama34] studied the problems of UA wing type and UA positioning. The authors treated positioning as a classification problem and expanded the number of categories according to a group of positions of each UA. Their method combined EMD and STFT to generate m-D features, which were analysed by principal component analysis (PCA), and studied UA target classification and location with nearest neighbor, random forest, NBC and SVM classifiers.

In addition to the typical single-antenna radar, some studies consider multi-antenna, multi-station and passive radar. In Ref. [Reference Fioranelli, Ritchie and Griffiths35], the authors proposed an NBC and a discriminant analysis classifier based on the Doppler and bandwidth centroid features of the m-D signal, with experiments on measured rotorcraft data that included flights with and without a potential payload. In a similar study, Hoffmann et al. [Reference Hoffmann, Ritchie and Fioranelli36] proposed a UA detection and tracking method for multi-station radar, which combines m-D features with a constant false alarm rate (CFAR) detector to improve UA detection and uses an extended Kalman filter for tracking.
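For readers unfamiliar with CFAR detection, a cell-averaging CFAR over a one-dimensional power profile can be sketched as below. This is the textbook cell-averaging variant under the assumption of exponentially distributed (square-law detected) noise; it is not claimed to be the detector used in the cited study, and the function name and parameter values are hypothetical.

```python
import numpy as np

def ca_cfar(power, n_train=8, n_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1D power profile; returns a boolean detection mask."""
    power = np.asarray(power, dtype=float)
    n_cells = 2 * n_train
    # Threshold multiplier for exponentially distributed noise power
    alpha = n_cells * (pfa ** (-1.0 / n_cells) - 1.0)
    hits = np.zeros(power.shape, dtype=bool)
    half = n_train + n_guard
    for i in range(half, len(power) - half):
        lead = power[i - half:i - n_guard]            # training cells before the guard band
        lag = power[i + n_guard + 1:i + half + 1]     # training cells after the guard band
        noise = (lead.sum() + lag.sum()) / n_cells    # local noise level estimate
        hits[i] = power[i] > alpha * noise
    return hits

# Flat unit-power background with one strong cell
profile = np.ones(100)
profile[50] = 100.0
mask = ca_cfar(profile)
```

The threshold adapts to the local noise estimate, so the false alarm probability stays roughly constant when the clutter level changes slowly across range or Doppler cells. Cells at the edges of the profile, where a full training window is not available, are left undetected in this sketch.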

In Ref. [Reference Zhang, Yang and Chen37], the authors used two radars operating in different bands to extract and fuse m-D features, so as to classify three kinds of rotorcraft. Researchers at Tsinghua University [Reference Zhang, Li and Huo38] proposed a UA recognition method based on micro-motion feature fusion with multi-angle radar observation. Researchers at Wuhan University [Reference Liu, Yi and Wan39] carried out m-D effect experiments on multi-rotor UA using multi-illuminator-based passive radar, which confirmed the technical feasibility of extracting micro-motion features of UA with passive radar.

In recent years, with the continuous development of phased array, holographic, MIMO and multi-static radar technology, the gain and velocity resolution of the target signal can be improved by prolonging the observation accumulation time, and the high-precision extraction and description of complex m-D features can be realised [Reference Singh and Kim40–Reference Rahman and Robertson42]. Traditional MTD methods usually use the combination of range migration compensation and Doppler migration compensation, but the algorithm is complex and cannot achieve long-term and fast coherent accumulation of target echoes [Reference Chen, Guan and Liu43]. The long-time coherent integration (LTCI) method determines the search range based on the multi-dimensional motion parameters of the target, and accumulates the observed values of the extracted target by selecting specific transformation parameters. This technique involves a large amount of calculation and is difficult to apply [Reference Chen, Guan and Chen44]. On the basis of LTCI, the target long-time coherent integration method based on non-uniform sampling order reduction and variable scale transformation (NUSP-LTCI) is adopted to reduce the high-order signal to first-order, which greatly reduces the amount of calculation and creates conditions for engineering application, whose algorithm flow is shown in Fig. 7(a) [Reference Chen, Guan and Wang45]. Figure 7(b-d) compares the low-altitude radar target coherent integration results of different algorithms, where the NUSP-LTCI algorithm has higher parameter estimation accuracy and stronger clutter suppression ability, compared with the traditional MTD algorithm and the classical Radon Fourier Transform (RFT) algorithm.
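The benefit of longer coherent accumulation can be illustrated with the conventional MTD operation, an FFT across pulses in one range cell. The sketch below shows only this baseline, for a constant-velocity target that stays in a single range and Doppler cell; it does not implement LTCI, NUSP-LTCI or RFT, which additionally compensate for migration across cells. The function name and all parameter values are hypothetical.

```python
import numpy as np

def slow_time_doppler(pulses, prf):
    """Coherently integrate echoes from one range cell with an FFT across pulses."""
    n = len(pulses)
    # Dividing by sqrt(n) keeps the noise power per Doppler bin at its per-pulse level
    spectrum = np.fft.fftshift(np.fft.fft(pulses)) / np.sqrt(n)
    freqs = np.fft.fftshift(np.fft.fftfreq(n, d=1.0 / prf))
    return freqs, np.abs(spectrum) ** 2

# Weak target (per-pulse SNR of -6 dB) in unit-power complex white noise
rng = np.random.default_rng(0)
n, prf, fd, amp = 512, 2000.0, 250.0, 0.5
k = np.arange(n)
noise = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2.0)
pulses = amp * np.exp(2j * np.pi * fd * k / prf) + noise
freqs, power = slow_time_doppler(pulses, prf)
peak_freq = freqs[np.argmax(power)]
```

With this scaling the target power in its Doppler bin grows in proportion to the number of pulses while the noise power per bin does not, and the Doppler bin width shrinks as prf/n. Both effects are lost once the target moves across range or Doppler cells during the accumulation time, which is the problem that migration-compensating methods address.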

Figure 7. Processing flow of NUSP-LTCI algorithm and comparison of low-altitude radar target coherent accumulation results [Reference Chen, Guan and Wang45].

3.2 Feature extraction of target echo and motion

Traditional surveillance radar usually uses mechanical scanning antennae to detect and track multiple targets, whose design purpose is to find new targets by constantly searching the space. Since this kind of radar is always searching in all directions, the time of focusing on a single target is usually very short, which makes it difficult to extract m-D features. Therefore, only the RCS and other echo signal features or motion features of the target can be used to classify detected targets [Reference Patel, Fioranelli and Anderson46].

Chen et al. proposed a motion model-based target classification method that uses traditional surveillance radar data to classify UA and birds [Reference Chen, Liu and Li47]. Based on Kalman filter tracking, a smoothing algorithm is used to enlarge the difference between the conversion frequency estimates of the bird and UA target models. The method rests on the assumption that birds have higher manoeuvrability. Figure 8 shows the flow of the algorithm and an example of target classification in a low-altitude clutter environment. First, multiple motion models are established to track the target trajectory; then the manoeuvrability characteristics of the target are extracted by estimating the conversion frequency of the target model; finally, bird and UA targets are classified. Figure 8(b) shows that the flight path of a UA target is relatively straight and stable, while the trajectory of a flying bird target is shorter and more variable. It should be noted that in some special cases a UA manoeuvres along a curve while a bird keeps flying in a straight line, which inevitably limits this method.
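The idea of using manoeuvrability as a discriminating feature can be illustrated with a much simpler stand-in than the multiple-model conversion frequency estimate described above: the spread of the turn rate along a track. The sketch below is this simplified substitute only, and the function name and the two synthetic tracks are hypothetical.

```python
import numpy as np

def turn_rate_std(xy, dt):
    """Standard deviation of the turn rate (rad/s) along a 2D track.

    xy: array (n, 2) of positions sampled at a fixed interval dt (seconds).
    """
    v = np.diff(np.asarray(xy, dtype=float), axis=0)        # displacement per scan
    heading = np.unwrap(np.arctan2(v[:, 1], v[:, 0]))       # continuous heading angle
    return float(np.std(np.diff(heading) / dt))

# Straight track versus weaving track, one position per second
t = np.arange(0.0, 20.0, 1.0)
straight = np.column_stack([t, 2.0 * t])
weaving = np.column_stack([t, np.sin(t)])
m_straight = turn_rate_std(straight, 1.0)
m_weaving = turn_rate_std(weaving, 1.0)
```

A straight, steady track yields a value near zero, and a weaving track yields a clearly larger one. As noted above, a feature of this kind fails when a UA flies a curved path or a bird flies straight, so it would normally be combined with other features.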

Figure 8. Classification of flying birds and UA based on flight trajectory characteristics [Reference Chen, Liu and Li47].

Messina and Pinelli [Reference Messina and Pinelli48] studied the problem of UA classification using two-dimensional surveillance radar data. The method was divided into two steps: first, UA and bird targets were separated, and then rotary-wing and fixed-wing UA were distinguished. A feature set was built from the target RCS, signal-to-noise ratio, tracking trajectory and speed information, SVM was selected as the classifier, and a subset of 50 features was found to achieve high classification accuracy.

Polarisation feature is another effective method to distinguish birds and UA. Figure 9(a) shows the periodic echo maps of a DJI UA under HH, HV and VV polarisation conditions, where the HH polarisation has the strongest fluctuation characteristics. Figure 9(b) shows the classification results for four types of targets (two types of UA, gliding bird and wing-flapping bird) with different polarisation parameters and sampling intervals [Reference Torvik, Olsen and Griffiths49].

Figure 9. Examples of target scattering characteristics and classification results based on polarisation characteristics [Reference Torvik, Olsen and Griffiths49].

3.3 UA target detection and classification based on deep learning

In recent years, deep learning methods have been successfully applied in audio and video data processing, but their application to radar data has only just started. Typical deep learning schemes need a large amount of labelled real data, which is easy to obtain for audio and video, whereas labelled radar data are still very limited. Despite these difficulties, work combining radar data with deep learning has increased in recent years.

In Ref. [Reference Kim, Kang and Park50], convolutional neural networks (CNNs) were first used to directly learn m-D characteristic spectra and classify UA. The spectra of UA were measured and processed by GoogLeNet, and two UA were tested in indoor and outdoor environments. Mendis et al. [Reference Mendis, Randeny and Wei51] developed a UA classification algorithm based on deep learning for an S-band radar. They tested three UA types, two rotary-wing and one fixed-wing, and used the spectral correlation function (SCF) to identify the modulation components.

Wang et al. [Reference Wang, Tang and Liao52] proposed a range Doppler spectrum target detection algorithm based on CNNs, which was compared with the traditional CFAR detection method, achieving satisfactory results. The structure of the network was an eight-layer CNN trained with different range Doppler fixed windows under multiple signal to noise ratio (SNR) conditions. The detection problem was treated as the classification task of target and clutter, in which the fixed size window slid on the whole range Doppler matrix to check all units.
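The sliding-window formulation can be sketched as follows. The trained CNN of Ref. 52 is replaced here by a deliberately simple stand-in rule (`centre_peak_classifier`), and all names, the window size and the ratio are illustrative assumptions; the sketch only shows how a fixed-size window visits every cell of the range Doppler matrix.

```python
from typing import Callable, List, Tuple

Matrix = List[List[float]]


def sliding_window_detect(rd: Matrix, win: int,
                          is_target: Callable[[Matrix], bool]) -> List[Tuple[int, int]]:
    """Slide a win x win window (win odd) over a range-Doppler matrix.

    Returns the (range, Doppler) index of the centre cell of every window
    that the classifier labels as target.
    """
    half = win // 2
    hits = []
    for r in range(len(rd) - win + 1):
        for d in range(len(rd[0]) - win + 1):
            patch = [row[d:d + win] for row in rd[r:r + win]]
            if is_target(patch):
                hits.append((r + half, d + half))
    return hits


def centre_peak_classifier(patch: Matrix, ratio: float = 4.0) -> bool:
    """Stand-in for a trained CNN: the centre cell must exceed `ratio`
    times the mean of all other cells in the patch."""
    half = len(patch) // 2
    others = [v for i, row in enumerate(patch) for j, v in enumerate(row)
              if (i, j) != (half, half)]
    return patch[half][half] > ratio * (sum(others) / len(others))


# Usage: a flat background with one strong cell at range 3, Doppler 4.
rd_matrix = [[1.0] * 7 for _ in range(7)]
rd_matrix[3][4] = 50.0
detections = sliding_window_detect(rd_matrix, 3, centre_peak_classifier)
```

In a learned detector, `is_target` would be the forward pass of the network on each patch; the surrounding loop stays the same.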

In Ref. [Reference Samaras, Diamantidou and Ataloglou53], the range profile derived from the range Doppler matrix and manually selected features were used as the input of DNNs to classify UA. Regev et al. [Reference Regev, Yoffe and Wulich54] developed a multi-layer perceptron (MLP) neural network classifier and parameter estimator, which can determine the number of propellers and blades of a UA and thus support more varied micro-motion analysis. This method was the first attempt to input the received complex signal directly into the learning network. The network architecture consisted of five independent branches, which received in-phase and quadrature (IQ), time, frequency and absolute data. Two MLP classifiers first analysed the characteristics of the propellers and then the number of blades, and their outputs were fed into the estimation algorithm. Simulation results showed that the classification accuracy was closely related to SNR.

Habermann et al. [Reference Habermann, Dranka and Caceres55] studied the classification of UA and helicopters using point cloud features from radar. Based on the geometric differences between the point clouds, 44 features were extracted. A neural network trained on artificial data was used to solve two classification problems: the classification of seven types of helicopters and the classification of three types of rotor UA.

Mohajerin et al. [Reference Mohajerin, Histon and Dizaji56] proposed a binary classification method, which used the data captured by surveillance radar to distinguish the trajectories of UA and birds. Twenty features based on motion, velocity and RCS were used. The manually extracted features were combined with an MLP classifier, achieving high classification accuracy.

Most UA detection and classification methods based on m-D features are listed in Table 2, where the recognition rate of all methods is more than 90%, and some of them are close to or reach 100%. Unfortunately, because only part of the literature used the same data set, it is difficult to compare the performance of all methods directly.

Table 2. Summary of radar-based UA classification results in the existing literature

4.0 Other auxiliary detection technologies

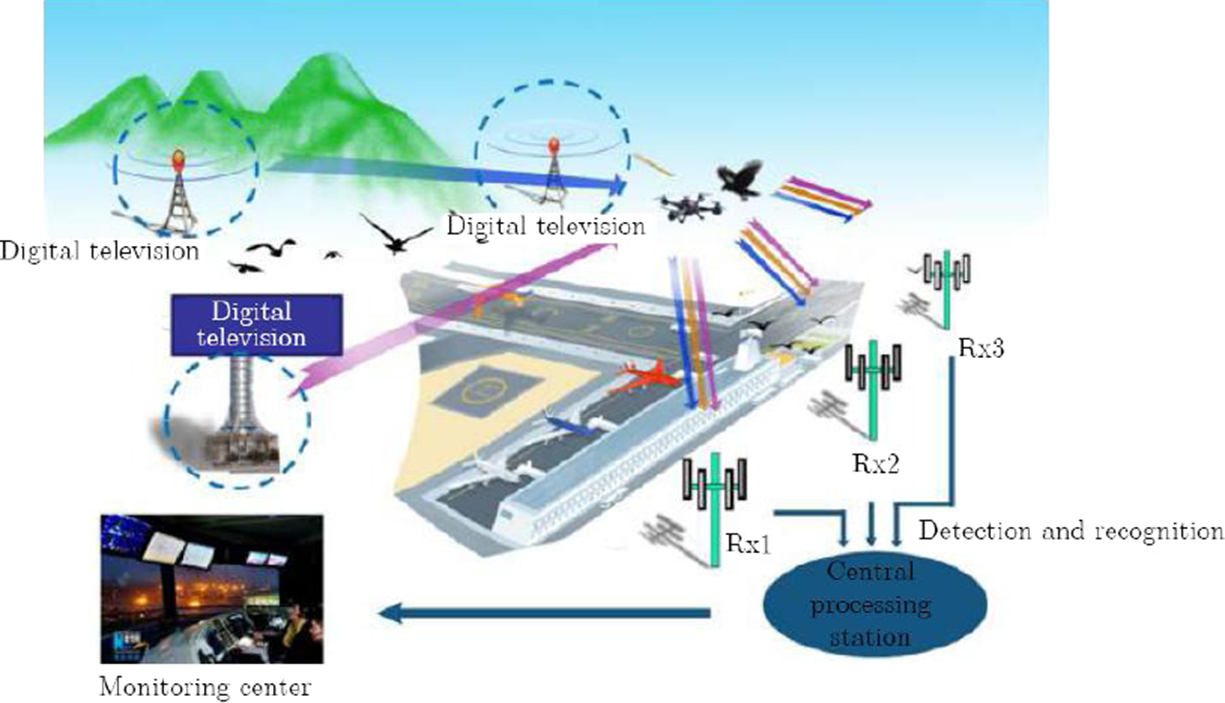

At present, some advanced low-altitude surveillance radars have the ability of classification and recognition of UA, birds and other targets. The development of m-D feature extraction, deep learning and other technologies further promotes progress in this field. At the same time, much experience shows that in engineering applications, the UA detection technology with radar as the main body, supplemented by photoelectric, acoustic, radio detection and other multi-source sensor fusion has become the mainstream, as shown in Fig. 10. In this section, the technical characteristics of various auxiliary sensors and the data processing methods based on deep learning are discussed.

Figure 10. Multi-sensor detection technology of UA.

4.1 Visible light

Compared with radar, the target image information obtained by visible light detection equipment is more abundant, providing advantages in target recognition. However, a single visible light camera usually cannot measure target range, so the target distance must be obtained through multi-station cross positioning or additional laser ranging equipment. Optical cameras are also affected by environmental factors, and their detection distance is much shorter than that of radar, which makes them difficult to use alone. Therefore, visible light cameras are usually used only as supplementary confirmation equipment for radar. Figure 10(a) shows a typical photoelectric system, which can obtain visible and infrared images of the target at the same time. Figure 11 shows an example of the images of UA and bird targets captured by a standard visible-light camera, with a detection range of about 2 km.

Figure 11. Visible image.

With the development of neural network and deep learning algorithms, optical images have become one of the most valuable information sources in UA detection. Since deep learning was successfully applied to image classification on the ImageNet dataset in 2012, research on deep learning has developed continuously [Reference Krizhevsky, Sutskever and Hinton57]. Most UA target recognition research using DNNs adopts the general target detection structure, and uses powerful DNNs as the classification model. In such research, DNNs are usually trained in advance on general data sets such as ImageNet, and then fine-tuned with UA data to improve the recognition performance by adjusting the parameters.

Saqib et al. [Reference Saqib, Khan and Sharma58] tested the Faster-RCNN model for UA detection. They used VGG-16 [Reference Simonyan and Zisserman59], ZF-net [Reference Zeiler and Fergus60] and other models to carry out experiments in the detection scheme, and showed that VGG-16 performed best. In addition, they trained birds as a separate category, which effectively reduced the false alarm rate. Recent studies have proposed adding a preprocessing module before the detector to improve detection. In one study, a U-net was inserted in front of the detector; this network calculated the motion across consecutive frames and generated a frame likely to contain the UA [Reference Craye and Ardjoune61]. In other work, a super-resolution module was added in front of the detector to improve the detection of UA targets that occupy few pixels in the image because of the long detection distance [Reference Vasileios Magoulianitis, Anastasios Dimou and Daras62].

Aker and Kalkan [Reference Aker and Kalkan63] used a YOLO (You Only Look Once) [Reference Redmon, Divvala, Girshick and Farhadi64] detector to detect UA targets quickly and accurately. In addition, they extracted UA targets from public images, pasted them onto natural images with various complex backgrounds, and thus constructed a new artificial data set for training UA deep learning models at different scales and against different backgrounds, which addressed the scarcity of labelled public UA data.

Rozantsev et al. [Reference Rozantsev, Lepetit and Fua65] established a detection framework based on spatiotemporal cubes of video data at different scales. This method used boosted trees and CNNs for classification. The experimental results showed that temporal information played an important role in the detection of small targets such as UA, and that CNNs performed better in terms of precision and recall.

Gökçe et al. [Reference Gökçe, Üçoluk and Sahin66] proposed a UA detection method for an optical camera based on gradient histogram features and cascade classification. The cascade evaluated increasingly complex features at successive stages, and a candidate was declared a target only if it passed all stages of the classifier. In addition, the target distance was estimated by support vector regression.

4.2 Infrared

Compared with visible light cameras, the main advantage of infrared cameras is that they are not affected by light or weather conditions and can still operate normally even in complete darkness. Generally, the resolution of infrared camera imaging is low, and the cost is high. Therefore, they have mainly been used in military applications, although their cost is steadily declining as the technology progresses. Figure 12 shows image data of a UA and a bird target taken by a typical infrared camera at a distance of 2 km.

Figure 12. Infrared image.

In UA detection systems for the security of prisons and other facilities, infrared cameras are generally placed on top of buildings or monitoring towers. In most multi-sensor systems, infrared cameras are combined with visible light cameras as auxiliary equipment for radar, but there are some public reports of using infrared sensors alone to detect, track or classify UA. Like visible light cameras, a single infrared camera cannot measure the distance to the target, so it cannot locate the target on its own. Anthony-Thomas et al. [Reference Anthony Thomas, Antoine Cotinat and Gilber67] proposed a method of UA target location using the images from multiple infrared cameras.

At present, many UA detection systems integrated with infrared sensors have realised a UA target recognition function based on infrared images. The target recognition algorithm usually draws lessons from the existing deep learning model, but there is little literature on the topic. The rest of this section will review some published results of the application of infrared vision in other target detection, tracking and classification tasks, in order to provide reference for the application scenarios of UA target recognition.

Liu et al. [Reference Liu, Zhang and Wang68] designed and evaluated different pedestrian target detection solutions based on Faster-RCNN architecture and multispectral input data. They started from different branches of each input data, and established a VGG-16 basic network at each branch, exploring the feature fusion technologies at low, medium and high levels of the network.

Cao et al. [Reference Cao, Guan and Huang69] adjusted the general pedestrian detector to the multispectral domain and used the complementary data captured by visible light and infrared sensors, which not only improved the pedestrian detection performance, but also generated additional training samples without manual labeling. Pedestrian detection was realised by the proposed network, while unsupervised automatic labeling was based on a new iterative method to mark moving targets from calibrated visible and infrared data.

John et al. [Reference John, Mita and Liu70] used CNNs to classify candidate pedestrian targets. Firstly, the infrared image was segmented by fuzzy clustering, and the candidate pedestrians were located. The candidate pedestrians were screened according to the human body posture characteristics and the second central moment ellipse. Then the cropped image block around each candidate object was adjusted to a fixed size and input into eight-layer CNNs for binary classification. Finally, the training was carried out based on the sample data set.

Ulrich et al. [Reference Ulrich, Hess and Abdulatif71] used a dual-stream neural network to detect human targets by fusing infrared images with m-D radar data. Firstly, the Viola-Jones framework [Reference Viola and Jones72] was used to detect human targets in infrared images, and then the calculated sensor distance was used to associate infrared and radar targets. Finally, the information obtained by each sensor was fused in the feature layer of a single joint classifier.

Liu et al. [Reference Liu, Lu and He73] redesigned the CNN training framework for infrared target tracking based on relative entropy theory, and verified its performance on two public infrared data sets. In this method, multiple weak trackers were constructed from the features extracted by different convolution layers pre-trained on ImageNet, and the response maps of the weak trackers were fused into a strong estimate of the target position.

4.3 Acoustic

Acoustic technology uses the distinctive “audio fingerprint” generated by UA in flight to detect and identify them. As a passive technology that does not interfere with other detectors, it has become an important part of multi-sensor fusion detection of UA, and is a useful supplement to radar, photoelectric and other detection technologies.

Based on acoustic detection technology, research institutions and companies in South Korea, France, Hungary, Germany, the Netherlands and the United States have developed UA detection systems and proposed related solutions. Figure 13 shows SkySentry, an acoustic system developed by Microflown AVISA in the Netherlands, which can accurately locate small UA in urban environments [74]. The core technology of SkySentry is an acoustic multi-mission sensor with an acoustic vector measurement function, which can track and locate small low-altitude UA when a sensor array is placed in a given area. The capability is based on ground-based or vehicle-based sensor nodes that can be used stand-alone or networked. Depending on the deployment of the sensor nodes, a perimeter can be sealed off against incoming intruder UA or a complete area can be covered.

Figure 13. SkySentry system example.

A general acoustic detection system consists of three main modules: detection, feature extraction and classification. The detection module captures the original audio signal of the target in a noisy environment; the feature extraction module automatically extracts features from the original signal as the input of the classifier; the classification module assigns the extracted features to the corresponding classes. The ability of deep learning networks to extract distinctive features from raw data makes them highly suitable for acoustic detection. Lee et al. [Reference Lee, Pham and Largman75] produced one of the earliest results on unsupervised learning of audio data using convolutional deep belief networks, in which the features learned by the network corresponded one-to-one to the phonemes in the speech data. Piczak [Reference Piczak76] tested a simple CNN architecture on ambient audio data and obtained accuracy close to that of the most advanced classifiers. Lane et al. [Reference Lane, Georgiev and Qendro77] created a mobile application that performs very accurate speaker diarisation and emotion recognition using deep learning. Recently, Wilkinson et al. [Reference Wilkinson, Ellison and Nykaza78] performed unsupervised separation of environmental noise sources by adding artificial Gaussian noise to pre-labelled signals and using an autoencoder for clustering. However, the background noise in environmental signals is usually non-Gaussian, which makes this method suitable only for specific data sets.

In UA detection, Park et al. [Reference Park, Shin and Kim79] proposed a method of fusing radar and acoustic sensors to realise the detection, tracking and identification of rotor UA based on feed-forward neural networks. Liu et al. [Reference Liu, Wei and Chen80] used Mel-frequency cepstral coefficients (MFCCs) and an SVM classifier to detect UA. Kim et al. [Reference Kim, Park and Ahn81] introduced a microphone-based real-time UA detection and monitoring system, which used nearest neighbour and graph learning algorithms to learn the characteristics of FFT spectra. Kim and Kim [Reference Kim and Kim82] expanded on their own work, and improved the classification accuracy of the system from 83% to 86% by using artificial neural networks. Jeon et al. [Reference Jeon, Shin and Lee83] proposed a binary classification model that used audio data to detect UA; they used the F-score as the performance measure, compared CNNs with a Gaussian mixture model and a recurrent neural network, and synthesised training data by mixing the original UA audio with different background noise. In addition, many research institutions have used improved MFCC feature extraction methods to raise UA target recognition performance in different ways [Reference Ma, Zhang and Bao84, Reference Wang, An and Ou85].
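For reference, the F-score used as a performance measure in such binary UA detectors combines precision and recall into a single number. The following sketch uses the standard definition; the function name and the example counts are illustrative and do not come from Ref. 83.

```python
def f_score(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """F-beta score from true positives, false positives and false negatives.

    beta = 1 gives the usual F1 score, the harmonic mean of precision and recall.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Usage with made-up counts: 8 UA clips detected, 2 false alarms, 2 misses.
example_f1 = f_score(tp=8, fp=2, fn=2)
```

Unlike plain accuracy, the F-score ignores true negatives, which is useful when UA audio clips are much rarer than background clips.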

4.4 Radio detection

Radio detection uses radio frequency scanning technology to monitor, analyse and measure the data transmission signals, image transmission signals and satellite navigation signals of civil UA in real time. Firstly, the spectrum characteristics of UA data and image transmission signals are extracted, and a UA feature database is established to realise the discovery of UA; then, UA positioning, tracking and recognition are realised by using Radio Direction Finding (RDF) [Reference Zhu, Tu and Li86] or time difference of arrival (TDOA) positioning [Reference Wang, Zhou and Xu87].

In RDF, a 3D array antenna is usually used to receive the electromagnetic signal transmitted by UA in real time, and the received signal is analysed by spectrum analyser and monitoring software. At the same time, the spectrum analyser and monitoring software collect and analyse the frequency, bandwidth, modulation mode, symbol rate and other data of the signal, and compare them with the established UA signal feature library to identify the signal features. Figure 14 shows the target azimuth and frequency data of UA obtained by a typical radio detection system.

Figure 14. UA target data obtained by radio detection system.
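The comparison of measured signal parameters with a UA signal feature library can be sketched as a tolerance-based lookup. Everything in the example is hypothetical: the `Signature` fields, the tolerances and the two library entries (`link_A`, `link_B`) are placeholders chosen for illustration, not values of any real UA or system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Signature:
    label: str
    centre_mhz: float
    bandwidth_mhz: float


def match_signature(centre_mhz: float, bandwidth_mhz: float,
                    library: List[Signature],
                    freq_tol_mhz: float = 5.0,
                    bw_tol_mhz: float = 2.0) -> Optional[str]:
    """Return the label of the closest library entry within both tolerances,
    or None if no entry matches."""
    best, best_cost = None, float("inf")
    for sig in library:
        df = abs(centre_mhz - sig.centre_mhz)
        db = abs(bandwidth_mhz - sig.bandwidth_mhz)
        if df <= freq_tol_mhz and db <= bw_tol_mhz:
            # Normalised distance, so both parameters count equally.
            cost = df / freq_tol_mhz + db / bw_tol_mhz
            if cost < best_cost:
                best, best_cost = sig.label, cost
    return best


# Hypothetical library entries (placeholder values only).
LIBRARY = [
    Signature("link_A", centre_mhz=2440.0, bandwidth_mhz=10.0),
    Signature("link_B", centre_mhz=5800.0, bandwidth_mhz=20.0),
]
```

With this library, `match_signature(2442.0, 9.5, LIBRARY)` returns `"link_A"`, while a signal far from every entry returns `None`. A practical system would also compare the modulation mode and symbol rate mentioned above.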

The biggest advantage of radio detection systems is that they do not emit electromagnetic waves and belong to green passive technology. At present, a large number of companies and scientific research institutions have developed relevant systems using radio detection technology, and some of them have been widely used in the security of airports, prisons, stadiums, public buildings and major events. However, the biggest problem with radio monitoring technology is that it may not be able to detect “silent” UA that turn off data transmission and image transmission signals.

TDOA is a method of positioning by using time difference, which determines the location of a signal source by comparing the time difference of signals arriving at multiple monitoring stations. TDOA positioning systems have the characteristics of low cost and high accuracy, but usually need to place more than three stations, whose equipment includes antenna, receiver and time synchronisation module. Figure 2(c) shows typical TDOA station equipment and Figure 15 shows the UA target data obtained by a typical TDOA system.

Figure 15. UA target data obtained by TDOA system.
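The TDOA principle can be illustrated with a small two-dimensional sketch: the source is placed at the grid point whose predicted arrival-time differences best match the measured ones. The brute-force grid search, the station layout and all names are assumptions made for illustration; operational systems typically use closed-form or iterative least-squares solvers.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
C = 299_792_458.0  # propagation speed of radio waves in m/s


def tdoas(source: Point, stations: List[Point]) -> List[float]:
    """Arrival-time differences (s) of each station relative to station 0."""
    t = [math.dist(source, s) / C for s in stations]
    return [ti - t[0] for ti in t[1:]]


def locate(measured: List[float], stations: List[Point],
           extent: float = 100.0, step: float = 1.0) -> Point:
    """Search a square grid [-extent, extent]^2 for the point whose predicted
    TDOAs have the smallest squared error with respect to the measurements."""
    best, best_err = (0.0, 0.0), float("inf")
    n = int(2 * extent / step) + 1
    for i in range(n):
        for j in range(n):
            p = (-extent + i * step, -extent + j * step)
            err = sum((a - b) ** 2 for a, b in zip(tdoas(p, stations), measured))
            if err < best_err:
                best, best_err = p, err
    return best


# Usage: four hypothetical stations at the corners of a 100 m square.
stations = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0), (100.0, 100.0)]
true_source = (30.0, 40.0)
estimate = locate(tdoas(true_source, stations), stations)
```

Each time difference constrains the source to a hyperbola; the grid search simply finds where the hyperbolas from all station pairs come closest to intersecting. Since a 1 m range error corresponds to about 3.3 ns, the time synchronisation module mentioned above is critical.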

Radio signal recognition is the core technology of radio detection systems, and is essentially the same as the recognition technology for radar, visible light and infrared signals. It applies fixed rules or machine learning classifiers to key features extracted from the signals. In recent years, deep learning has been widely applied in the field of artificial intelligence. In 2016, O’Shea et al. took the lead in applying a deep learning algorithm to radio signal recognition, using a CNN framework to automatically recognise 11 kinds of modulation signals [Reference O’Shea, Corgan and Clancy88]. Compared with traditional machine learning algorithms, the recognition rate was greatly improved. Since then, many results on radio signal recognition based on deep learning have been reported. Karra et al. first distinguished analog modulation from digital modulation, and then identified the specific modulation type and order by using a cascade of CNNs [Reference Karra, Kuzdeba and Pertersen89]. West and O’Shea [Reference West and O’Shea90] further optimised the network structure by combining the CNN with the timing characteristics of the signal. On the basis of the model proposed in Ref. [Reference O’Shea, Corgan and Clancy88], Arumugam et al. [Reference Arumugam, Kadampot and Tahmasbi91] used cyclostationary characteristic parameters as the input of CNNs, which improved the recognition results at the expense of greater computational complexity. Schmidt et al. [Reference Schmidt, Block and Meier92] identified three kinds of radio communication standard signals by using FFT results as the input to CNNs.

5.0 Multi-sensor system performance

The purpose of data fusion based on multiple sensors is to combine the data from different modes to produce an effect that cannot be achieved by a single sensor. In recent years, the application of artificial intelligence and deep neural networks in multi-source data processing has been widely pursued, providing a possible solution for complex multi-modal learning problems [Reference Baltrušaitis, Ahuja and Morency93].

5.1 Performance analysis

The performance and working characteristics of various types of sensors are compared in Table 3. This section further summarises and analyses their advantages and disadvantages, potential limitations and development prospects.

Table 3. Comparison of main performances of various UA detection sensors

Radar can effectively detect potential UA and track multiple targets in the whole airspace. Radar systems that can detect small UA usually work in X-band, but the cost of such systems is usually high, and they are accompanied by a certain false alarm rate. Except for passive radars, most radars may cause frequency interference, so their deployment needs to be approved by the local radio regulator. Radar-based UA target detection and tracking is mainly realised by classical radar signal processing techniques such as CFAR, Doppler processing and hypothesis testing. At present, the most commonly used radar signal feature in UA classification is the m-D feature, which is mainly affected by the number of rotor blades, incidence angle range, pulse repetition frequency and radar irradiation time [Reference Molchanov, Egiazarian and Astola26]. In fact, most of the current work is carried out under the ideal condition of close range [Reference Molchanov, Egiazarian and Astola26, Reference Oh, Guo and Wan32, Reference Ma, Oh and Sun33], and rarely on the original radar data, because the echo data of small UA targets is difficult to obtain under strong noise conditions in the field. In addition, traditional surveillance radar uses mechanically scanned antennae, which dwell on a single target only briefly and cannot produce m-D features; such radar can rely only on other information sources such as the trajectory characteristics [Reference Chen, Liu and Li47], RCS [Reference Messina and Pinelli48] and polarisation characteristics [Reference Torvik, Olsen and Griffiths49] of the target. However, compared with m-D methods, this kind of research is relatively rare and the scope of application is limited. Trajectory classification based on deep learning has been successfully applied to general motion models, and may be transferred to UA trajectory classification as a potential research direction [Reference Endo, Toda, Nishida and Ikedo94].
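As a reminder of what the classical CFAR step does, the following is a minimal one-dimensional cell-averaging CFAR sketch. The guard and training sizes and the fixed `scale` factor are illustrative assumptions; in practice the scale is derived from the desired false alarm probability and the noise statistics.

```python
from typing import List


def ca_cfar(power: List[float], guard: int = 1, train: int = 4,
            scale: float = 5.0) -> List[int]:
    """Cell-averaging CFAR over a 1-D power profile.

    For each cell under test, the noise level is the mean of `train` cells
    on each side, skipping `guard` cells next to the cell under test.
    Cells too close to the edges for a full window are not tested.
    """
    hits = []
    margin = guard + train
    for i in range(margin, len(power) - margin):
        lead = power[i - margin:i - guard]          # training cells before
        lag = power[i + guard + 1:i + margin + 1]   # training cells after
        noise = (sum(lead) + sum(lag)) / (2 * train)
        if power[i] > scale * noise:
            hits.append(i)
    return hits


# Usage: flat noise floor with a single strong return in cell 10.
profile = [1.0] * 20
profile[10] = 20.0
cfar_hits = ca_cfar(profile)
```

Because the threshold follows the local noise estimate, the false alarm rate stays roughly constant when the clutter level changes, which is the property that learned detectors are usually benchmarked against.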

Optical technology can image UA targets in the field of view in visible or infrared light. There are public visible light image data sets for UA detection and classification [95, 96]. The common deep learning architectures for visible light image processing include Faster-RCNN [Reference Saqib, Khan and Sharma58], VGG-16 [Reference Simonyan and Zisserman59] and YOLO [Reference Redmon, Divvala, Girshick and Farhadi64], whose performance is far superior to that of traditional computer vision methods based on hand-crafted features [Reference Gökçe, Üçoluk and Sahin66]. Note that it is not enough to transfer existing target detection algorithms directly to UA image data. These methods need to be adapted to the characteristics of UA target detection, so as to avoid false alarms while detecting small targets. Although existing UA detection systems are generally equipped with infrared cameras, such commercial cameras are unsuitable for detecting small UA because of their low resolution, and the high price of high-resolution infrared equipment may be the direct reason for the small number of research papers published on the topic. In fact, the processing of infrared images is similar to that of visible images. With the decreasing cost of high-resolution infrared equipment, combined with deep learning algorithms, the research prospects of UA target detection and classification using infrared data are promising.

Acoustic sensors are light and easy to install. They can be used in mountainous areas or highly urbanised areas where other devices are not suitable. At the same time, passive acoustic sensors do not interfere with surrounding communications, and their power consumption is very low. However, in noisy environments, the detection range of a single acoustic sensor is less than 150 m [Reference Jeon, Shin and Lee83]. Research on multichannel audio shows that the detection performance can be significantly improved by using the most advanced beam-forming algorithms and by fusing with other sensors such as optical cameras. In this context, the establishment of a public UA audio signal database is of great significance for promoting research and development in this field. However, labelling such data sets is prone to human error, which misleads the machine learning algorithm. Therefore, in the field of environmental audio monitoring, unsupervised deep learning algorithms have attracted great attention in recent years, and their application to UA target detection and recognition is worth pursuing [Reference Lee, Pham and Largman75].

Radio detection technology is a traditional tool of radio spectrum management, and has become an important technical means of UA detection and identification. Compared with radar, photoelectric, acoustic and other technical means, the biggest advantage of radio detection technology is that it can detect the position of the UA operator, and its biggest problem is that it may fail for “silent” UA without communication links. In addition, a complex electromagnetic environment can interfere with radio detection, affecting its detection range and performance. Radio signal recognition technology based on deep learning can adapt to the complex electromagnetic environment and ensure a certain recognition rate under conditions of low signal to noise ratio (SNR), which represents the development direction of current radio detection technology [Reference Yuan, Wang and Zheng97]. At the same time, we note that TDOA technology has been successfully applied in UA detection and positioning systems based on various sensors such as radio detection, MIMO radar and microphone arrays.

5.2 Some existing cases

In recent years, for UA target detection, recognition and tracking systems based on multi-sensor fusion, scientific research institutions and related enterprises have put forward a variety of construction schemes and data fusion methods.

Shanghai Jiao Tong University [Reference Zhang and Hu98] proposed a low-altitude target monitoring and tracking method using radar and visible light. This method uses radar as the main tracker and a visible light camera as the detector to track the target through an interactive multiple model, and realises the monitoring and tracking simulation of rotor UA and other low-altitude targets.

Park et al. [Reference Park, Shin and Kim79] proposed a small UA detection system combining radar and acoustic sensors. The system uses low-cost radar to scan the area of interest and an acoustic sensor array to confirm the target. It uses a pre-trained deep learning framework composed of three MLP classifiers, which vote on whether a UA is present. The cost of the system is low, but the detection distance is only about 50 m.
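The voting step can be pictured with a very small sketch. The strict-majority rule shown here is an assumption; Ref. 79 is only described above as using three MLP classifiers that vote, and the exact rule may differ.

```python
from typing import Sequence


def majority_vote(votes: Sequence[bool]) -> bool:
    """Return True if more than half of the classifiers report a UA."""
    return sum(votes) * 2 > len(votes)


# Usage: two of three hypothetical classifiers report a UA.
ua_present = majority_vote([True, True, False])
```

With an odd number of classifiers a strict majority never ties, which is one practical reason for using three voters.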

Liu et al. [Reference Liu, Wei and Chen80] proposed a solution for joint detection using a camera array and acoustic sensors. The system is composed of 30 cameras, three microphones, eight workstation nodes and several network devices. The UA image and audio data are recognised by an SVM classifier. For a UA target at a height of 100 m and a horizontal distance of 200 m, high detection accuracy is achieved.

Jovanoska et al. [Reference Jovanoska, Brötje and Koch99] built a UA detection, tracking and positioning system based on bearings-only multi-sensor data fusion, and adopted a centralised data fusion method based on multiple hypothesis tracker. The system first distinguishes the target and false alarm from different sensors, identifies the targets and estimates the contribution of each single sensor to the detection. It then uses a fusion algorithm to predict the target position and modify the target location by fusing the measurement results of different sensor systems.
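The geometric core of bearings-only positioning can be sketched as the intersection of two bearing lines. This is not the multiple hypothesis tracker of Ref. 99; it only shows why at least two angle-only sensors at different sites are needed to obtain a position. The function name and the angle convention (radians, counter-clockwise from the x axis) are assumptions.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def triangulate(p1: Point, bearing1: float,
                p2: Point, bearing2: float) -> Optional[Point]:
    """Intersect the bearing lines of two sensors located at p1 and p2.

    Returns None when the bearings are (nearly) parallel, in which case
    no unique intersection exists.
    """
    d1 = (math.cos(bearing1), math.sin(bearing1))
    d2 = (math.cos(bearing2), math.sin(bearing2))
    denom = d1[0] * d2[1] - d1[1] * d2[0]  # 2-D cross product d1 x d2
    if abs(denom) < 1e-9:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    t = (dx * d2[1] - dy * d2[0]) / denom  # signed distance along line 1
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])


# Usage: two hypothetical sensors 100 m apart observing a target at (50, 50).
fix = triangulate((0.0, 0.0), math.pi / 4, (100.0, 0.0), 3 * math.pi / 4)
```

With noisy bearings from more than two sensors the lines no longer meet in one point, which is where a least-squares or tracking-based fusion method becomes necessary.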

Hengy et al. [Reference Hengy, Laurenzis, Schertzer, Hommes, Kloeppel, Shoykhetbrod, Geibig, Johannes, Rassy and Christnacher100] proposed a UA detection, positioning and classification system based on optical, acoustic and radar multi-sensor fusion. The system uses the multiple signal classification (MUSIC) method to realise acoustic array positioning, uses radar to improve the detection rate and reduce the false alarm rate, and combines infrared and visible images so that the optical sensors can detect UA targets more easily and quickly under clutter, smoke or complex background conditions.

Laurenzis et al. [Reference Laurenzis, Hengy, Hammer, Hommes, Johannes, Giovanneschi, Rassy, Bacher, Schertzer and Poyet101] studied the detection and tracking of multiple UA in an urban environment. The system consists of a distributed sensor network with static and mobile nodes, including optical, acoustic, lidar, microwave radar and other sensors. The experimental results show that the average error between the UA positioning results of the system and the real data is about 6m.

5.3 Suggestions on system construction

Because each kind of sensor has its own advantages and disadvantages, it is almost impossible to provide the required situation awareness for UA detection and identification with a single sensor. However, if the various technologies are combined so that they complement one another, an effective solution becomes possible. Figure 16 proposes a construction scheme for a UA detection system, which realises all-round situation awareness in a robust way by fusing the information of four types of sensors.

Figure 16. Proposal of UA surveillance system.

A long-range radar is placed in the centre of the sensor coverage area. Radar is a reliable early warning method, and holographic radar is the preferred choice because it captures fine target features in all directions at a high data rate, which provides the data needed to extract target m-D features. To further correct the range and azimuth information of the detected target, multiple panoramic infrared cameras are placed at the edge of the coverage area, where they complement and cross-validate one another to reduce the false alarm rate. Because infrared cameras are sensitive to bad weather, a visible-light camera with rotation and zoom functions is placed near the radar to further improve the recognition ability. To mitigate occlusion by ground objects, microphone arrays or TDOA stations can be distributed around the protected area as a complementary and alternative solution. The TDOA array also complements the other sensors and helps trace the UA operator. A deep learning network for UA detection and recognition can be trained for each sensor on that sensor's recorded data. Finally, the warning signals and features generated by each single-source deep learning network are fed into a multi-sensor fusion deep learning network to improve recognition of the invading UA. Because multi-sensor data are heterogeneous, the deep learning method must construct a joint representation that exploits the internal relationships among the data in order to handle the diversity of data representations. Jointly interpreting signals from different sensors aggregates knowledge that no single sensor provides, which is the advantage of this multi-sensor fusion deep learning scheme.
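The final fusion step can be illustrated with a much simpler rule than a learned fusion network. The sketch below is a stand-in, not the proposed scheme: it combines per-sensor UA probabilities in log-odds space under the assumption that the sensors are conditionally independent (a naive Bayes style rule). The function name `fuse_detections`, the sensor names, the shared prior and the reliability weights are all hypothetical.

```python
import math


def fuse_detections(probabilities, prior=0.5, weights=None):
    """Fuse per-sensor UA probabilities in log-odds space (illustrative only).

    probabilities : dict mapping sensor name -> P(UA | that sensor's data)
    prior         : assumed prior P(UA) shared by all single-sensor models
    weights       : optional dict of per-sensor reliability weights
                    (a missing entry defaults to 1.0)
    Sensors absent from `probabilities` (e.g. occluded or offline) are
    simply skipped, so the rule degrades gracefully.
    """
    def logit(p):
        p = min(max(p, 1e-6), 1.0 - 1e-6)  # clamp to avoid infinite log-odds
        return math.log(p / (1.0 - p))

    prior_logit = logit(prior)
    total = prior_logit
    for name, p in probabilities.items():
        w = 1.0 if weights is None else weights.get(name, 1.0)
        # Each sensor contributes its evidence relative to the prior.
        total += w * (logit(p) - prior_logit)

    return 1.0 / (1.0 + math.exp(-total))
```

For example, `fuse_detections({"radar": 0.8, "infrared": 0.7})` yields a fused probability higher than either input, because two moderately confident and (assumed) independent sensors reinforce each other. A learned fusion network replaces the fixed independence assumption with a joint representation, which matters when sensor errors are correlated, for instance when radar and cameras are degraded by the same weather.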

6.0 Conclusions and prospects

In this paper, research on UA detection and recognition methods based on radar, photoelectric, acoustic and radio detection sensors, and on multi-sensor information fusion algorithms, is reviewed. The m-D feature reflects the micro-motion of the target body and its components, and shows promising detection and classification ability. Photoelectric detection is still an important means of UA target detection and recognition. In the UA detection mission, the deployment cost of microphone arrays is low, which helps to form a complementary and robust system framework when they are combined with other sensors. The radio detection and positioning technology represented by TDOA can locate the UA operator, which plays an irreplaceable role in multi-sensor UA detection. In addition, the application of deep learning may lead to major breakthroughs in this field in the next few years.

With the rapid development of multi-source sensor performance and signal processing and fusion technology, the development trend of UA detection and recognition is mainly reflected in the following aspects:

(a) Progress in radar hardware provides a solid foundation for refined radar target detection and intelligent information processing. At the same time, new radar systems such as digital array systems, external emitter radar, holographic radar, MIMO radar, software radar and intelligent radar expand the available signal dimensions and provide a flexible software framework for refined radar target detection and for the integrated processing of detection and recognition. In addition, in complex environments, the comprehensive use of information from photoelectric, acoustic, radio and other multi-source sensors to make up for the limitations of radar can further improve recognition efficiency and accuracy.

(b) The fusion of signal and data features is an effective way to improve the accuracy of target classification. The long-time observation mode of staring radar makes it possible to extract high-resolution m-D features and to obtain the fine signal features of UA and birds. The m-D features of UA and bird targets are clearly different, which lays a foundation for classification and recognition. In addition, the motion trajectories of UA and birds are distinguishable to a certain degree, and mature target tracking algorithms approximate the real motion state of the target by establishing a variety of motion models. Therefore, the fusion of signal and data features of UA and birds can widen their separation in feature space and improve the probability of correct classification.

(c) Deep learning networks provide a new means for intelligent recognition of UA and bird targets. By constructing multilayer convolutional neural networks, deep learning can discover the complex structure in high-dimensional data, and its strong feature expression ability and high classification and recognition accuracy have been verified in the fields of image recognition and speech recognition. When UA and bird signals are classified using only the m-D features obtained from a time-frequency map, the feature space is limited and the accuracy is low. Therefore, multi-feature, multi-channel CNN fusion processing can be adopted: the echo sequence diagram, m-D time-frequency diagram, range-Doppler diagram, transform domain diagram and motion trajectory diagram of the target are fed into multi-channel CNNs, so as to make better use of CNNs for feature learning and target recognition.
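As a rough illustration of the multi-channel input described in (c), the sketch below stacks several 2-D feature maps of one target into a single channels-first array that a multi-channel CNN could consume. It covers only the input preparation, not the network. The function name `stack_feature_maps`, the map names, the per-channel min-max scaling and the requirement that all maps are already resampled to a common size are assumptions for illustration.

```python
def stack_feature_maps(feature_maps):
    """Stack named 2-D feature maps into a C x H x W nested list (illustrative).

    feature_maps : dict mapping a map name (e.g. "md_spectrogram",
                   "range_doppler") to a rectangular list of rows of floats.
                   All maps are assumed to share the same height and width.
    Returns (names, stacked): the channel order, sorted by name so that it
    is reproducible, and the stacked maps. Each channel is min-max scaled
    to [0, 1] independently so that no diagram dominates through its
    numeric range.
    """
    if not feature_maps:
        raise ValueError("at least one feature map is required")

    shapes = {(len(m), len(m[0])) for m in feature_maps.values()}
    if len(shapes) != 1:
        raise ValueError("all feature maps must share one height and width")

    names = sorted(feature_maps)
    stacked = []
    for name in names:
        grid = feature_maps[name]
        flat = [v for row in grid for v in row]
        lo, hi = min(flat), max(flat)
        span = hi - lo
        # A constant map carries no contrast; represent it as all zeros.
        stacked.append(
            [[(v - lo) / span if span else 0.0 for v in row] for row in grid]
        )
    return names, stacked
```

Whether the diagrams should share one network as stacked channels, as here, or pass through separate CNN branches whose features are fused later is a design choice that this sketch does not settle.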

Acknowledgements

The research work is jointly funded by the National Natural Science Foundation of China (NSFC) and Civil Aviation Administration of China (CAAC) (U2133216, U1933135), and also supported by BeiHang Zhuo Bai Program (ZG216S2182).

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/aer.2022.50