1 Introduction

Consider the symbolic dynamical system $(\mathcal {A}^{\mathbb {N}},\mathscr {F},\mu ,\theta )$, in which $\mathcal A$ is a finite alphabet, $\theta $ is the left-shift map and $\mu $ is a shift-invariant probability measure, that is, $\mu \circ \theta ^{-1}=\mu $. We are interested in the statistical properties of the return time $R_n(x)$, the first time the orbit of x comes back to the nth cylinder $[x_0^{n-1}]=[x_0,\ldots ,x_{n-1}]$ (that is, the set of all $y\in \mathcal {A}^{\mathbb {N}}$ coinciding with x on the first n symbols).

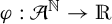

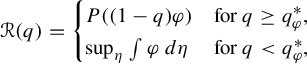

The main contribution of this paper is the calculation of the return-time $L^q$-spectrum (or cumulant generating function) in the class of equilibrium states (a subclass of shift-invariant ergodic measures; see §2.1). More specifically, consider a potential $\varphi $ having summable variation (this includes Hölder continuous potentials, for which the variation decreases exponentially fast). Our main result, Theorem 3.1, states that if its unique equilibrium state, denoted by $\mu _\varphi $, is not of maximal entropy, then

$$ \begin{align*} \lim_{n\to\infty}\frac{1}{n}\log\int R_n^q\,d\mu_\varphi= \begin{cases} P((1-q)\varphi) & \text{if } q\geq q^*_\varphi,\\ \sup_{\eta}\int\varphi\,d\eta & \text{if } q<q^*_\varphi, \end{cases} \end{align*} $$

where $P(\cdot )$ is the topological pressure, the supremum is taken over shift-invariant probability measures, and $q_\varphi ^*\in ]-1,0[$ is the unique solution of the equation

$$ \begin{align*} P((1-q)\varphi)=\sup_{\eta}\int\varphi\,d\eta. \end{align*} $$

We also prove that when $\varphi $ is a potential corresponding to the measure of maximal entropy, then $q^*_\varphi =-1$ and the return-time $L^q$-spectrum is piecewise linear (Theorem 3.2). In this case, and only in this case, the return-time spectrum coincides with the waiting-time $L^q$-spectrum that was previously studied in [Reference Chazottes and Ugalde7] (see §2.2 for definitions). It is fair to say that the expressions of these two spectra are unexpected, and that it is surprising that they coincide only if $\mu _\varphi $ is the measure of maximal entropy.

Below we list some implications of this result and explain how it relates to the literature.

1.1 The ansatz $R_n(x)\longleftrightarrow 1/\mu _\varphi ([x_0^{n-1}])$

A remarkable result [Reference Ornstein and Weiss15, Reference Shields18] is that, for any ergodic measure $\mu $,

$$ \begin{align*} \lim_{n\to\infty}\frac{1}{n}\log R_n(x)=h(\mu)\quad\text{for } \mu\text{-almost every } x, \end{align*} $$

where $h(\mu )=-\lim _n ({1}/{n}) \sum _{a_0^{n-1}\in \mathcal {A}^n} \mu ([a_0^{n-1}]) \log \mu ([a_0^{n-1}])$ is the entropy of $\mu $. Compare this result with the Shannon–McMillan–Breiman theorem, which says that

$$ \begin{align*} \lim_{n\to\infty}-\frac{1}{n}\log \mu([x_0^{n-1}])=h(\mu)\quad\text{for } \mu\text{-almost every } x. \end{align*} $$

Hence, using return times, we do not need to know $\mu $ to estimate the entropy, but only to assume that we observe a typical output $x=x_0,x_1,\ldots $ of the process. In particular, combining the two previous pointwise convergences, we can write $R_n(x) \asymp 1/\mu ([x_0^{n-1}])$ for $\mu $-almost every x. This yields the natural ansatz

when integrating with respect to $\mu _\varphi $. However, it is a consequence of our main result that this ansatz is not correct for the $L^q$-spectra. Indeed, for the class of equilibrium states we consider (see §2.2),

which means that the $L^q$-spectrum of the measure and the return-time $L^q$-spectrum are different when $q<q^*_\varphi $.

1.2 Fluctuations of return times

When

![]() $\mu _\varphi $

is the equilibrium state of a potential

$\mu _\varphi $

is the equilibrium state of a potential

![]() $\varphi $

of summable variation, there is a uniform control of the measure of cylinders, in the sense that

$\varphi $

of summable variation, there is a uniform control of the measure of cylinders, in the sense that

![]() $\log \mu _\varphi ([x_0^{n-1}])=\sum _{i=0}^{n-1} \varphi (x_i^{\infty })\pm \text {Const}$

, where the constant is independent of x and n. Moreover,

$\log \mu _\varphi ([x_0^{n-1}])=\sum _{i=0}^{n-1} \varphi (x_i^{\infty })\pm \text {Const}$

, where the constant is independent of x and n. Moreover,

![]() $h(\mu _\varphi )=-\int \varphi \,d\mu _\varphi $

, so it is tempting to think that the fluctuations of

$h(\mu _\varphi )=-\int \varphi \,d\mu _\varphi $

, so it is tempting to think that the fluctuations of

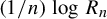

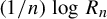

![]() $({1}/{n})\log R_n(x)$

should be the same as that of

$({1}/{n})\log R_n(x)$

should be the same as that of

![]() $-({1}/{n})\sum _{i=0}^{n-1} \varphi (x_i^{\infty })$

, in the sense of the central limit and large deviation asymptotics. Indeed, when

$-({1}/{n})\sum _{i=0}^{n-1} \varphi (x_i^{\infty })$

, in the sense of the central limit and large deviation asymptotics. Indeed, when

![]() $\varphi $

is Hölder continuous, it was proved in [Reference Collet, Galves and Schmitt8] that

$\varphi $

is Hölder continuous, it was proved in [Reference Collet, Galves and Schmitt8] that

![]() $\sqrt {n}(\log R_n/n-h(\mu ))$

converges in law to a Gaussian random variable

$\sqrt {n}(\log R_n/n-h(\mu ))$

converges in law to a Gaussian random variable

![]() $\mathcal {N}(0,\sigma ^2)$

, where

$\mathcal {N}(0,\sigma ^2)$

, where

![]() $\sigma ^2$

is the asymptotic variance of

$\sigma ^2$

is the asymptotic variance of

![]() $(({1}/{n})\sum _{i=0}^{n-1} \varphi (X_i^{\infty }))$

Footnote

3

. This was extended to potentials with summable variation in [Reference Chazottes and Ugalde7]. In plain words,

$(({1}/{n})\sum _{i=0}^{n-1} \varphi (X_i^{\infty }))$

Footnote

3

. This was extended to potentials with summable variation in [Reference Chazottes and Ugalde7]. In plain words,

![]() $(({1}/{n})\log R_n(x))$

has the same central limit asymptotics as

$(({1}/{n})\log R_n(x))$

has the same central limit asymptotics as

![]() $(({1}/{n})\sum _{i=0}^{n-1} \varphi (x_i^{\infty }))$

Footnote

4

.

$(({1}/{n})\sum _{i=0}^{n-1} \varphi (x_i^{\infty }))$

Footnote

4

.

In [Reference Collet, Galves and Schmitt8], large deviation asymptotics of $(({1}/{n})\log R_n(x))$ were also considered when $\varphi $ is Hölder continuous. It is proved therein that, on a sufficiently small (non-explicit) interval around $h(\mu _\varphi )$, the so-called rate function coincides with the rate function of $(-({1}/{n})\sum _{i=0}^{n-1} \varphi (x_i^{\infty }))$. The latter is known to be the Legendre transform of $q\mapsto P((1-q)\varphi )$. Using the Legendre transform of the return-time $L^q$-spectrum, a direct consequence of our main result (see Theorem 3.3) is that, when $\varphi $ has summable variation, the coincidence of the rate functions holds on a much larger (and explicit, depending on $q^*_\varphi $) interval around $h(\mu _\varphi )$. In other words, we extend the large deviation result of [Reference Collet, Galves and Schmitt8] in two ways: we deal with more general potentials, and we obtain a much larger interval of values on which the large deviation asymptotics are known.
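As an illustration of the Legendre-transform relation, here is a minimal numerical sketch for the simplest equilibrium state, a Bernoulli measure with weights $p$, whose normalized potential is $\varphi(x)=\log p_{x_0}$, so that $P(\beta\varphi)=\log\sum_a p_a^\beta$. In this case the log-moment generating function of $-\sum_{i<n}\varphi(x_i^\infty)$ is exactly $n\log\sum_a p_a^{1-q}=nP((1-q)\varphi)$. The weights, the function names and the $q$-grid are illustrative assumptions; the grid supremum only approximates the Legendre transform, and only for values $s$ that are slopes of $q\mapsto P((1-q)\varphi)$ inside the grid.

```python
import math


def pressure_bernoulli(beta, p):
    """P(beta * phi) for the normalized Bernoulli potential phi(x) = log p[x_0]."""
    return math.log(sum(pa ** beta for pa in p))


def cgf(q, p):
    """q -> P((1 - q) phi): limiting log-moment generating function of -(1/n) S_n phi."""
    return pressure_bernoulli(1.0 - q, p)


def rate_function(s, p, q_min=-20.0, q_max=20.0, steps=40001):
    """Grid approximation of the Legendre transform I(s) = sup_q [q s - cgf(q)]."""
    best = -math.inf
    for i in range(steps):
        q = q_min + (q_max - q_min) * i / (steps - 1)
        best = max(best, q * s - cgf(q, p))
    return best


p = [0.3, 0.7]                           # hypothetical Bernoulli weights
h = -sum(pa * math.log(pa) for pa in p)  # entropy h(mu_phi) = -int phi d mu_phi
```

The approximate rate function vanishes at $s=h(\mu_\varphi)$ (the supremum is attained at $q=0$) and is positive at nearby values such as $s=h(\mu_\varphi)+0.1$.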

Notice that a similar result was deduced in [Reference Chazottes and Ugalde7] for the waiting time, based on the Legendre transform of the waiting-time $L^q$-spectrum. However, this strategy cannot yield the complete rate functions of $(({1}/{n})\log R_n)$ and $(({1}/{n})\log W_n)$, because the corresponding $L^q$-spectra fail to be differentiable. Obtaining a complete description of the large deviation asymptotics for $(({1}/{n})\log R_n)$ and $(({1}/{n})\log W_n)$ is an open question to date.

1.3 Relation to the return-time dimensions

Consider a general ergodic dynamical system $(M,T,\mu )$ and replace cylinders by (Euclidean) balls in the above return-time $L^q$-spectrum, that is, consider the function $q\mapsto \int \tau _{B(x,\varepsilon )}^q(x) \,d\mu (x)$, where $\tau _{B(x,\varepsilon )}(x)$ is the first time the orbit of x under T comes back to the ball $B(x,\varepsilon )$ of center x and radius $\varepsilon $. The idea is to introduce return-time dimensions $D_\tau (q)$ by postulating that $\int \tau _{B(x,\varepsilon )}^q(x) \,d\mu (x)\approx \varepsilon ^{D_\tau (q)}$, as $\varepsilon \downarrow 0$. This was done in [Reference Hadyn, Luevano, Mantica and Vaienti11] (with a different ‘normalization’ in q) and compared numerically with the classical spectrum of generalized dimensions $D_\mu (q)$, defined in a similar way with $\mu (B(x,\varepsilon ))^{-1}$ instead of $\tau _{B(x,\varepsilon )}(x)$ (geometric counterpart of the ansatz (1)). They studied an iterated function system in dimension one and numerically observed that return-time dimensions and generalized dimensions do not coincide. This can be understood with analytical arguments. For recent progress, more references and new perspectives, see [Reference Caby, Faranda, Mantica, Vaienti and Yiou6]. Working with (Euclidean) balls in dynamical systems whose phase space M has dimension higher than one is more natural than working with cylinders, but it is much more difficult. It is an interesting open problem to obtain an analog of our main result even for uniformly hyperbolic systems.

1.4 Further recent literature

We now come back to large deviations for return times and comment on other results related to ours, besides [Reference Collet, Galves and Schmitt8]. In [Reference Jain and Bansal12], the authors obtain the following result. For a $\phi $-mixing process with an exponentially decaying rate, and satisfying a property called ‘exponential rates for entropy’, there exists an implicit positive function I such that $I(0)=0$ and

where h is the entropy of the process. In the same vein, [Reference Coutinho, Rousseau and Saussol9] considered the case of (geometric) balls in smooth dynamical systems.

1.5 A few words about the proof of the main theorem

For $q>0$, an important ingredient of the proof is an approximation of the distribution of $R_n(x)\mu _\varphi ([x_0^{n-1}])$ by an exponential law, with a precise error term, recently proved in [Reference Abadi, Amorim and Gallo1]. Using this result, the computation of the return-time $L^q$-spectrum for $q>0$ is straightforward. The range $q<0$ is much more delicate. To get upper and lower bounds for $\log \int R_n^q \,d\mu _{\varphi }$, we have to partition $\mathcal {A}^{\mathbb {N}}$ into all cylinders of length n; in particular, we cannot restrict attention to cylinders which are ‘typical’ for $\mu _\varphi $. A crucial role is played by orbits which come back to their cylinder of length n after fewer than n iterations of the shift. Such orbits are closely related to periodic orbits. Roughly speaking, two terms compete in the ‘$(1/n)\log $ limit’. The first one is

$$ \begin{align} \sum_{a_0^{n-1}} \mu_\varphi([a_0^{n-1}]\cap \{T_{[a_0^{n-1}]}=\tau([a_0^{n-1}])\}), \end{align} $$

where $T_{[a_0^{n-1}]}(x)$ is the first time that the orbit of x enters $[a_0^{n-1}]$, and $\tau ([a_0^{n-1}])$ is the smallest first return time among all $y\in [a_0^{n-1}]$. The second term is

$$ \begin{align} \sum_{a_0^{n-1}} \mu_\varphi([a_0^{n-1}])^{1-q}. \end{align} $$

Depending on the value of $q<0$, when we take the logarithm and then divide by n, the first term (2) will beat the second one in the limit $n\to \infty $, or vice versa. Since the second term (3) behaves like ${e}^{nP((1-q)\varphi )}$, and since we prove that the first one behaves like ${e}^{n \sup _\eta \int \varphi \,d\eta }$, this indicates why the critical value $q^*_\varphi $ shows up. The asymptotic behavior of the first term (2) is rather delicate to analyze (see Proposition 4.2), and is an important ingredient of the present paper.
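The quantity $\tau([a_0^{n-1}])$ appearing in (2) depends only on the word: a point $y\in[a_0^{n-1}]$ can come back to the cylinder at a time $k<n$ exactly when $a_k^{n-1}=a_0^{n-1-k}$, and time $n$ is always achievable, so $\tau([a_0^{n-1}])$ is the smallest period of the word $a_0^{n-1}$. The following minimal Python sketch computes it with the Knuth–Morris–Pratt failure function (our choice of method; the function names are hypothetical) and cross-checks it against a brute-force search.

```python
def prefix_function(word):
    """KMP failure function: pi[i] = length of the longest proper border of word[:i+1]."""
    pi = [0] * len(word)
    k = 0
    for i in range(1, len(word)):
        while k > 0 and word[i] != word[k]:
            k = pi[k - 1]
        if word[i] == word[k]:
            k += 1
        pi[i] = k
    return pi


def shortest_return(word):
    """tau([word]): smallest k in {1, ..., n} with word[i + k] == word[i] for 0 <= i < n - k."""
    if not word:
        raise ValueError("empty word")
    return len(word) - prefix_function(word)[-1]


def shortest_return_brute(word):
    """Same quantity by direct search over shifts; k = n always works (empty overlap)."""
    n = len(word)
    return next(k for k in range(1, n + 1) if word[k:] == word[:n - k])
```

For example, `shortest_return("abaab")` is 3, while an unbordered word such as `"abc"` has $\tau=n=3$.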

1.6 Organisation of the paper

The framework and the basic definitions are given in §2. In §2.1, we collect basic facts about equilibrium states and topological pressure. In §2.2, we define $L^q$-spectra for measures, return times and waiting times. In §3, we give our main results and two simple examples in which all the involved quantities can be explicitly computed. The proofs are given in §4.

2 Setting and basic definitions

2.1 Shift space and equilibrium states

2.1.1 Notation and framework

For any sequence $(a_k)_{k\geq 0}$ where $a_k\in \mathcal {A}$, we denote the partial sequence (‘string’) $(a_i,a_{i+1},\ldots ,a_j)$ by $a_i^j$, for $i<j$. (By convention, $a_i^i:=a_i$.) In particular, $a_i^\infty $ denotes the sequence $(a_k)_{k \geq i}$.

We consider the space $\mathcal {A}^{\mathbb {N}}$ of infinite sequences $x=(x_0,x_1,\ldots )$, where $x_i\in \mathcal {A}$, $i\in \mathbb {N}:=\{0,1,\ldots \}$. Endowed with the product topology, $\mathcal {A}^{\mathbb {N}}$ is a compact space. The cylinder sets $[a_i^j]=\{x \in \mathcal {A}^{\mathbb {N}}: x_i^j=a_i^j\}$, $i,j\in \mathbb {N}$, generate the Borel $\sigma $-algebra $\mathscr {F}$. Now define the shift $\theta :\mathcal {A}^{\mathbb {N}}\to \mathcal {A}^{\mathbb {N}}$ by $(\theta x)_i=x_{i+1}$, $i\in \mathbb {N}$. Let $\mu $ be a shift-invariant probability measure on $\mathscr {F}$, that is, $\mu (B)=\mu (\theta ^{-1}B)$ for each cylinder B. We then consider the stationary process $(X_k)_{k\geq 0}$ on the probability space $(\mathcal {A}^{\mathbb {N}},\mathscr {F},\mu )$, where $X_n(x)=x_n$, $n\in \mathbb {N}$. We will use the shorthand notation $X_i^j$ for $(X_i,X_{i+1},\ldots ,X_j)$, where $i<j$. As usual, $\mathscr {F}_i^j$ is the $\sigma $-algebra generated by $X_i^j$, where $0\leq i\leq j\leq \infty $. We denote by $\mathscr {M}_\theta (\mathcal {A}^{\mathbb {N}})$ the set of shift-invariant probability measures. This is a compact set in the weak topology.

2.1.2 Equilibrium states and topological pressure

We refer to [Reference Bowen4, Reference Walters22] for details on the material of this section. We consider potentials of the form $\beta \varphi $, where $\beta \in \mathbb {R}$ and $\varphi :\mathcal {A}^{\mathbb {N}}\to \mathbb {R}$ is of summable variation, that is,

$$ \begin{align*} \sum_{k\geq 1}\operatorname{var}_k(\varphi)<\infty, \end{align*} $$

where

$$ \begin{align*} \operatorname{var}_k(\varphi):=\sup\{|\varphi(x)-\varphi(y)|: x,y\in\mathcal{A}^{\mathbb{N}},\ x_0^{k-1}=y_0^{k-1}\}. \end{align*} $$

Obviously, $\beta \varphi $ is of summable variation for each $\beta $, and it has a unique equilibrium state denoted by $\mu _{\beta \varphi }$. This means that it is the unique shift-invariant measure such that

$$ \begin{align} \sup_{\eta\in \mathscr{M}_\theta(\mathcal{A}^{\mathbb{N}})}\bigg\{h(\eta)+\int \beta\varphi \,d\eta\bigg\}=h(\mu_{\beta\varphi})+ \int \beta\varphi \,d\mu_{\beta\varphi} =P(\beta\varphi), \end{align} $$

where $P(\beta \varphi )$ is the topological pressure of $\beta \varphi $.

For convenience, we ‘normalize’ $\varphi $ as explained in [Reference Walters22, Corollary 3.3], which implies, in particular, that

$$ \begin{align*} \sum_{a\in\mathcal{A}}{e}^{\varphi(ax)}=1\quad\text{for all } x\in\mathcal{A}^{\mathbb{N}},\qquad\text{and}\qquad P(\varphi)=0. \end{align*} $$

This gives the same equilibrium state $\mu _\varphi $. (Since $\sum _{a\in \mathcal {A}}{e}^{\varphi (ax)}=1$ for all $x\in \mathcal {A}^{\mathbb {N}}$, we have $\varphi <0$.)

The maximal entropy is $\log |\mathcal {A}|$ and, because $P(\varphi )=0$, the measure of maximal entropy is the equilibrium state of the potentials of the form $u-u\circ \theta -\log |\mathcal {A}|$ for some continuous function $u:\mathcal {A}^{\mathbb {N}}\to \mathbb {R}$.

We will use the following property, often referred to as the ‘Gibbs property’. There exists a constant $C=C_\varphi \geq 1$ such that, for any $n\geq 1$, any cylinder $[a_0^{n-1}]$ and any $x\in [a_0^{n-1}]$,

$$ \begin{align} C^{-1}\leq \frac{\mu_\varphi([a_0^{n-1}])}{\exp(\sum_{k=0}^{n-1} \varphi(x_k^\infty))}\leq C. \end{align} $$

See [Reference Parry and Pollicott16], where one can easily adapt the proof of their Proposition 3.2 to generalize their Corollary 3.2.1 to get (5) with $C=\exp (\sum _{k\geq 1}\operatorname {var}_k(\varphi ))$. We will also often use the following direct consequence of (5). For $g\ge 0$, $m,n\ge 1$ and $a_0^{m-1}\in \mathcal {A}^{m},b_0^{n-1}\in \mathcal {A}^n$, we have

$$ \begin{align} C^{-3} \leq \frac{\mu_\varphi([a_0^{m-1}]\cap \theta^{-m-g}[b_0^{n-1}])}{\mu_\varphi([a_0^{m-1}])\, \mu_\varphi([b_0^{n-1}])}\leq C^3=:D. \end{align} $$

For completeness, the proof is given in an appendix.
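To make (5) concrete, the following sketch checks it numerically for a two-state Markov measure, which is the equilibrium state of the normalized potential $\varphi(x)=\log(\pi(x_0)P(x_0,x_1)/\pi(x_1))$, where $P$ is a transition matrix with positive entries and $\pi$ its stationary vector. The matrix is a hypothetical example and the helper names are ours. Since this $\varphi$ depends only on $x_0,x_1$, we have $\operatorname{var}_k(\varphi)=0$ for $k\geq 2$, so the constant $C$ above reduces to $\exp(\operatorname{var}_1(\varphi))$.

```python
import itertools
import math

P = [[0.9, 0.1], [0.5, 0.5]]  # hypothetical transition matrix (positive entries)
pi = [5 / 6, 1 / 6]           # its stationary vector: pi P = pi


def phi(x0, x1):
    """Normalized potential phi(x) = log(pi(x0) P(x0, x1) / pi(x1))."""
    return math.log(pi[x0] * P[x0][x1] / pi[x1])


def cylinder_measure(word):
    """Markov measure of [a_0^{n-1}] = pi(a_0) * prod_k P(a_k, a_{k+1})."""
    m = pi[word[0]]
    for a, b in zip(word, word[1:]):
        m *= P[a][b]
    return m


def birkhoff_sum(word, next_symbol):
    """sum_{k<n} phi(x_k^infty) for any x in [word] with x_n = next_symbol."""
    x = list(word) + [next_symbol]
    return sum(phi(x[k], x[k + 1]) for k in range(len(word)))


# var_1(phi): largest oscillation of phi when x_0 is fixed; var_k(phi) = 0 for k >= 2.
var1 = max(abs(phi(a, b) - phi(a, c)) for a in range(2) for b in range(2) for c in range(2))
C = math.exp(var1)

# Ratios mu([a_0^{n-1}]) / exp(S_n phi(x)) over all words of length 1..8 and all choices of x_n.
ratios = [cylinder_measure(w) / math.exp(birkhoff_sum(w, s))
          for n in range(1, 9)
          for w in itertools.product(range(2), repeat=n)
          for s in range(2)]
```

Here every ratio equals $\pi(x_n)/P(a_{n-1},x_n)$, so it stays in $[C^{-1},C]$ uniformly in $n$, as (5) requires.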

For the topological pressure of $\beta \varphi $, we have the formula

$$ \begin{align} P(\beta\varphi)=\lim_{n} \frac{1}{n}\log \sum_{a_0^{n-1}} {e}^{\beta \sup\{\sum_{k=0}^{n-1} \varphi(a_k^{n-1}x_n^\infty): x_n^\infty\in \mathcal{A}^{\mathbb{N}}\}}\!. \end{align} $$

One can easily check that $P(\psi +u-u\circ \theta +c)=P(\psi )+c$ for any continuous potential $\psi $, any continuous $u:\mathcal {A}^{\mathbb {N}}\to \mathbb {R}$ and any $c\in \mathbb {R}$. The map $\beta \mapsto P(\beta \varphi )$ is convex and continuously differentiable with

$$ \begin{align*} \frac{d}{d\beta}P(\beta\varphi)=\int\varphi\,d\mu_{\beta\varphi}. \end{align*} $$

It is strictly decreasing since $\varphi <0$. Moreover, it is strictly convex if and only if $\mu _\varphi $ is not the measure of maximal entropy, that is, the equilibrium state for a potential of the form $u-u\circ \theta -\log |\mathcal {A}|$, where $u:\mathcal {A}^{\mathbb {N}}\to \mathbb {R}$ is continuous. We refer to [Reference Takens and Verbitski21] for a proof of these facts.
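The pressure formula (7) can be evaluated without enumerating all $|\mathcal{A}|^n$ words when $\varphi$ depends on finitely many coordinates. The sketch below assumes a hypothetical two-state Markov example with normalized potential $\varphi(x)=\log(\pi(x_0)P(x_0,x_1)/\pi(x_1))$; it computes the right-hand side of (7) at finite $n$ by a log-domain dynamic program and compares it with the logarithm of the spectral radius of the matrix $(P(a,b)^\beta)_{a,b}$. The two agree in the limit because $\varphi$ differs from $\log P(x_0,x_1)$ by a coboundary, which does not change the pressure. The block also lets one check the normalization $\sum_a e^{\varphi(ax)}=1$ and $\varphi<0$. All names are ours.

```python
import math

P = [[0.9, 0.1], [0.5, 0.5]]  # hypothetical two-state chain with positive entries


def stationary_2x2(P):
    """Stationary vector of a two-state chain: pi_0 = P[1][0] / (P[0][1] + P[1][0])."""
    a, b = P[0][1], P[1][0]
    return [b / (a + b), a / (a + b)]


pi = stationary_2x2(P)


def phi(x0, x1):
    """Normalized potential phi(x) = log(pi(x0) P(x0, x1) / pi(x1)); it only sees x_0, x_1."""
    return math.log(pi[x0] * P[x0][x1] / pi[x1])


def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def pressure_via_formula_7(beta, n):
    """Right-hand side of (7) at finite n, via a dynamic program over words.

    The Birkhoff sum telescopes to
    log pi(a_0) + sum_k log P(a_k, a_{k+1}) + log P(a_{n-1}, x_n) - log pi(x_n),
    so the sup over x_n^infinity only involves the symbol x_n.
    """
    states = range(len(P))
    log_v = [beta * math.log(pi[b]) for b in states]  # words of length 1 ending in b
    for _ in range(n - 1):
        log_v = [logsumexp([log_v[b] + beta * math.log(P[b][c]) for b in states])
                 for c in states]
    tail = [max(math.log(P[b][c]) - math.log(pi[c]) for c in states) for b in states]
    return logsumexp([log_v[b] + beta * tail[b] for b in states]) / n


def pressure_closed_form(beta):
    """log of the spectral radius of the positive 2x2 matrix (P(a, b) ** beta)."""
    (a, b), (c, d) = [[entry ** beta for entry in row] for row in P]
    return math.log((a + d + math.sqrt((a - d) ** 2 + 4 * b * c)) / 2)
```

With $\beta=1$ the closed form returns $0$, consistent with $P(\varphi)=0$, and the finite-$n$ value from (7) differs from the limit by $O(1/n)$.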

2.2 Hitting times, recurrence times and related $L^q$-spectra

2.2.1 Hitting and recurrence times

Given $x\in \mathcal {A}^{\mathbb {N}}$ and $a_0^{n-1}\in \mathcal A^n$, the (first) hitting time of x to $[a_0^{n-1}]$ is

$$ \begin{align*} T_{[a_0^{n-1}]}(x):=\inf\{k\geq 1: x_k^{k+n-1}=a_0^{n-1}\}, \end{align*} $$

that is, the first time that the pattern $a_0^{n-1}$ appears in x. The (first) return time is defined by

$$ \begin{align*} R_n(x):=\inf\{k\geq 1: x_k^{k+n-1}=x_0^{n-1}\}, \end{align*} $$

that is, the first time that the first n symbols reappear in x. Finally, given $x,y\in \mathcal {A}^{\mathbb {N}}$, we define the waiting time

$$ \begin{align*} W_n(x,y):=\inf\{k\geq 1: y_k^{k+n-1}=x_0^{n-1}\}, \end{align*} $$

which is the first time that the first n symbols of x appear in y.
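The three times above are easy to compute on a finite prefix of a sequence. The sketch below assumes the convention $k\geq 1$ for all three times (the return time must exclude $k=0$; for the hitting and waiting times this is our assumption) and returns `None` when the pattern does not occur within the available prefix. The function names are hypothetical.

```python
def hitting_time(x, pattern):
    """First k >= 1 with x[k:k+n] == pattern (assumed convention), or None if the
    pattern does not occur at such a k within the finite prefix x."""
    n = len(pattern)
    for k in range(1, len(x) - n + 1):
        if x[k:k + n] == pattern:
            return k
    return None


def return_time(x, n):
    """R_n(x): first k >= 1 at which the first n symbols of x reappear."""
    return hitting_time(x, x[:n])


def waiting_time(x, y, n):
    """W_n(x, y): first k >= 1 at which the first n symbols of x appear in y (assumed k >= 1)."""
    return hitting_time(y, x[:n])
```

For instance, with `x = "abcabd"`, `return_time(x, 2)` is 3, whereas `return_time(x, 3)` is `None` because `"abc"` does not reappear in this prefix.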

2.2.2 $L^q$-spectra

Consider a sequence $(U_n)_{n\ge 1}$ of positive measurable functions on some probability space $(\mathcal {A}^{\mathbb {N}},\mathscr {F},\mu )$, where $\mu $ is shift-invariant, and define, for each $q\in \mathbb {R}$ and $n\in \mathbb {N}^*$, the quantities

and

Definition 2.1. ($L^q$-spectrum of $(U_n)_{n\ge 1}$) When the two quantities above coincide for all $q\in \mathbb {R}$, this defines the $L^q$-spectrum of $(U_n)_{n\ge 1}$.

We will be mainly interested in three sequences of functions, which are, for $n\ge 1$,

Corresponding to (8), we naturally associate the functions

where, for the third one, we mean that in (8) we integrate with respect to $\mu \otimes \mu $: in other words, x and y are drawn independently according to the same law $\mu $. Finally, according to Definition 2.1, when the limits exist, we let

be the $L^q$-spectrum of the measure, the return-time $L^q$-spectrum and the waiting-time $L^q$-spectrum, respectively.
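Assuming the sequence associated with the measure is $U_n(x)=\mu([x_0^{n-1}])^{-1}$, its moments are finite sums: $\int \mu([x_0^{n-1}])^{-q}\,d\mu(x)=\sum_{a_0^{n-1}}\mu([a_0^{n-1}])^{1-q}$. For a Bernoulli measure with weights $p$ (an assumed example, with normalized potential $\varphi(x)=\log p_{x_0}$), this sum factorizes, so $(1/n)\log$ of it equals $\log\sum_a p_a^{1-q}=P((1-q)\varphi)$ for every $n$. The brute-force sketch below, with hypothetical names, checks this by enumerating all words.

```python
import itertools
import math


def lq_measure_finite_n(q, p, n):
    """(1/n) log sum over words a_0^{n-1} of mu([a_0^{n-1}])**(1 - q) for a Bernoulli measure."""
    total = 0.0
    for word in itertools.product(range(len(p)), repeat=n):
        m = math.prod(p[a] for a in word)  # mu([a_0^{n-1}]) = p[a_0] * ... * p[a_{n-1}]
        total += m ** (1.0 - q)
    return math.log(total) / n


def pressure_bernoulli(beta, p):
    """P(beta * phi) = log sum_a p_a**beta for phi(x) = log p[x_0]."""
    return math.log(sum(pa ** beta for pa in p))
```

For equilibrium states that are not Bernoulli, the two sides differ at finite $n$ by a term of order $1/n$ coming from the Gibbs constant in (5), and agree only in the limit.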

The existence of these spectra is not known in general. Trivially,

, and

. It is easy to see that

for ergodic measures (this follows from Kač’s lemma).

In this paper, we are interested in the particular case where $\mu =\mu _\varphi $ is an equilibrium state of a potential $\varphi $ of summable variation. In this setting, it is easy to see (this follows from (5) and (7)) that the $L^q$-spectrum of the measure exists and that, for all $q\in \mathbb {R}$,

$$ \begin{align*} \lim_{n\to\infty}\frac{1}{n}\log\int \mu_\varphi([x_0^{n-1}])^{-q}\,d\mu_\varphi(x)=P((1-q)\varphi). \end{align*} $$

On the other hand, as mentioned in the introduction, [Reference Chazottes and Ugalde7] proved, in the same setting, that

One of the main objectives of the present paper is to compute  (and, in particular, to show that it exists).

3 Main results

3.1 Two preparatory results

We start with two propositions about the critical value of q below which, as we will prove, the return-time $L^q$-spectrum differs from the $L^q$-spectrum of $\mu _\varphi $.

Proposition 3.1. Let $\varphi $ be a potential of summable variation. Then the equation

$$ \begin{align} P((1-q)\varphi)=\sup_{\eta\,\in\mathscr{M}_\theta(\mathcal{A}^{\mathbb{N}})} \int \varphi \,d\eta \tag{11} \end{align} $$

has a unique solution $q^*_\varphi \in [-1,0[$. Moreover, $q_\varphi ^*=-1$ if and only if $\varphi =u-u\circ \theta -\log |\mathcal {A}|$ for some continuous function $u:\mathcal{A}^{\mathbb{N}}\to\mathbb{R}$.

See §4.1 for the proof.

The following (non-positive) quantity naturally shows up in the proof of the main theorem. Given a probability measure $\nu $, let

$$ \begin{align*} \gamma_\nu^+:=\lim_n\frac{1}{n}\log\max_{a_0^{n-1}}\nu([a_0^{n-1}]) \end{align*} $$

whenever the limit exists. In fact, we have the following variational formula for $\gamma _{\mu _\varphi }^+$.

Proposition 3.2. Let $\varphi $ be a potential of summable variation. Then $\gamma _\varphi ^+:=\gamma ^+_{\mu _\varphi }$ exists and

$$ \begin{align} \gamma_\varphi^+=\sup_{\eta\,\in\mathscr{M}_\theta(\mathcal{A}^{\mathbb{N}})} \int \varphi \,d\eta. \end{align} $$

The proof is given in §4.2.

3.2 Main results

We can now state our main results.

Theorem 3.1. (Return-time $L^q$-spectrum)

Let $\varphi $ be a potential of summable variation. Assume that $\varphi $ is not of the form $u-u\circ \theta -\log |\mathcal {A}|$ for some continuous function $u:\mathcal{A}^{\mathbb{N}}\to\mathbb{R}$ (i.e., $\mu _\varphi $ is not the measure of maximal entropy). Then the return-time $L^q$-spectrum exists, and we have

where $q_\varphi ^*$ is given in Proposition 3.1.

In view of (9) and (12), the previous formula can be rewritten as

In other words, the return-time $L^q$-spectrum coincides with the $L^q$-spectrum of the equilibrium state only for $q\geq q_\varphi ^*$.

We treat the measure of maximal entropy separately because, for that measure, the return-time and waiting-time spectra coincide.

In view of the waiting-time $L^q$-spectrum given in (10), which was computed by [Reference Chazottes and Ugalde7], we see that, if $\varphi $ is not of the form $u-u\circ \theta -\log |\mathcal {A}|$, then  in the interval $]-\infty ,q_\varphi ^*[\,\supsetneq\, ]-\infty , -1[$. The fact that $P(2\varphi )<\sup _{\eta \in \mathscr {M}_\theta (\mathcal {A}^{\mathbb {N}})} \int \varphi \,d\eta $ follows from the proof of Proposition 3.1, in which we prove that $q_\varphi ^*>-1$ in that case.

Figure 1 illustrates Theorem 3.1.

Figure 1 Illustration of Theorem 3.1. Plot of  when $\mu =m^{\mathbb {N}}$ (product measure), with m the Bernoulli distribution on $\mathcal A=\{0,1\}$ with parameter $p= 1/3$. This corresponds to a potential $\varphi $ which is locally constant on the cylinders $[0]$ and $[1]$, and which therefore fulfils the conditions of the theorem (see §3.4). For a general potential of summable variation which is not of the form $u-u\circ \theta -\log |\mathcal {A}|$, the above graphs have the same shapes.

We now consider the case where $\mu _\varphi $ is the measure of maximal entropy.

Theorem 3.2. (Coincidence of the return-time and waiting-time $L^q$-spectra)

The return-time $L^q$-spectrum coincides with the waiting-time $L^q$-spectrum if and only if $\varphi =u-u\circ \theta -\log |\mathcal {A}|$ for some continuous function $u:\mathcal{A}^{\mathbb{N}}\to\mathbb{R}$. In that case,

3.3 Consequences on large deviation asymptotics

Let $\varphi $ be a potential of summable variation and assume that it is not of the form $u-u\circ \theta -\log |\mathcal {A}|$ for some continuous function u. Let

We define the function

by

where $q(v)$ is the unique real number $q\in\, ]q^*_\varphi ,+\infty [$ such that . It is easy to check that . (This is because  is strictly convex, by the assumption we made on $\varphi $, and strictly increasing.) Notice that, since , we have $h(\mu _\varphi )\in\, ]v^*_\varphi ,v^+_\varphi [$ and, in that interval,  is strictly convex and vanishes only at $v=h(\mu _\varphi )$.

We have the following result.

Theorem 3.3. Let $\varphi $ be a potential of summable variation and assume that it is not of the form $u-u\circ \theta -\log |\mathcal {A}|$ for some continuous function u. Then, for all $v\in [h(\mu _\varphi ),v^+_\varphi [$,

For all $v\in [v^*_\varphi ,h(\mu _\varphi )[$,

Proof. We apply a theorem from [Reference Plachky and Steinebach17], a variant of the classical Gärtner–Ellis theorem [Reference Dembo and Zeitouni10], which roughly says that the rate function is the Legendre transform of the cumulant generating function (here, the return-time $L^q$-spectrum) on the interval where the latter is continuously differentiable. The return-time $L^q$-spectrum is not differentiable at $q=q_\varphi ^*$, since  and . Hence, we apply the large deviation theorem from [Reference Plachky and Steinebach17] for $q\in\, ]q^*_\varphi ,+\infty [$ to prove the theorem.
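For the Bernoulli example of §3.4.1 ($p_1=1/3$), the rate function can be evaluated numerically. The following Python sketch assumes the usual Legendre form $I(v)=q(v)\,v-P((1-q(v))\varphi)$, with $q(v)>q^*_\varphi$ solving $\frac{d}{dq}P((1-q)\varphi)=v$, and takes $v^*_\varphi$ to be that derivative at $q^*_\varphi$ (as suggested by Remark 3.1). These formulas and all names in the code are our assumptions. It uses $P(\beta\varphi)=\log(p_1^\beta+(1-p_1)^\beta)$ and $\frac{d}{dq}P((1-q)\varphi)=-\int\varphi\,d\mu_{(1-q)\varphi}$.

```python
import math

# Bernoulli example of Section 3.4.1: phi(0) = log(1 - p1), phi(1) = log(p1).
P1 = 1 / 3
LOGS = (math.log(1 - P1), math.log(P1))
ENTROPY = -sum(math.exp(l) * l for l in LOGS)  # h(mu_phi)
GAMMA_PLUS = max(LOGS)                          # sup_eta int phi d(eta)


def pressure(beta):
    """P(beta*phi) = log(sum_a m(a)**beta), in log-sum-exp form."""
    a, b = beta * LOGS[0], beta * LOGS[1]
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def spectrum_derivative(q):
    """d/dq P((1-q)phi) = -int phi d(mu_{(1-q)phi})."""
    s = 1 - q
    weights = [math.exp(s * l - pressure(s)) for l in LOGS]  # Gibbs weights
    return -sum(w * l for w, l in zip(weights, LOGS))


def bisect_increasing(f, lo, hi, iters=200):
    """Zero of an increasing function f with f(lo) < 0 <= f(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# q* solves P((1-q)phi) = gamma^+ on [-1, 0] (Proposition 3.1).
Q_STAR = bisect_increasing(lambda q: pressure(1 - q) - GAMMA_PLUS, -1.0, 0.0)
V_STAR = spectrum_derivative(Q_STAR)


def rate(v, q_max=200.0):
    """Assumed Legendre form I(v) = q(v) v - P((1-q(v))phi), with q(v) > q*."""
    qv = bisect_increasing(lambda q: spectrum_derivative(q) - v, Q_STAR, q_max)
    return qv * v - pressure(1 - qv)
```

With these definitions one finds $v^*_\varphi<h(\mu_\varphi)$, while the derivative tends to $-\min\varphi=\log 3$ as $q\to+\infty$, and the computed $I$ is positive away from $v=h(\mu_\varphi)$ and vanishes there, in line with the discussion preceding Theorem 3.3.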

Remark 3.1. Theorem 3.3 says nothing about the asymptotic behaviour of ${\mu _\varphi ((1/n)\log R_n < v)}$ when $v\leq v^*_\varphi $. Notice that the situation is similar for the large deviation rate function of waiting times; the only difference is that we take $-1$ in place of $q^*_\varphi $ and, therefore, $-\int \varphi \,d \mu _{2\varphi }$ in place of $v^*_\varphi $. We believe that there exists a non-trivial rate function describing the large deviation asymptotics for these values of v, for both return and waiting times, but this has to be proved using another method.

3.4 Some explicit examples

3.4.1 Independent random variables

The return-time and hitting-time spectra are non-trivial even when $\mu $ is a product measure, that is, even for a sequence of independent random variables taking values in $\mathcal {A}$. Take, for instance, $\mathcal {A}=\{0,1\}$ and let $\mu =m^{\mathbb {N}}$, where m is a Bernoulli measure on $\mathcal {A}$ with parameter $p_1\neq \tfrac 12$. This corresponds to a potential $\varphi $ which is locally constant on the cylinders $[0]$ and $[1]$. We can identify it with a function from $\mathcal {A}$ to $\mathbb{R}$ such that $\varphi (0)=\log (1-p_1)$ and $\varphi (1)=\log p_1$. For concreteness, let us take $p_1=\tfrac 13$. Then it is easy to verify that

$$ \begin{align*} P(2\varphi)=\log\big(p_1^2+(1-p_1)^2\big)=\log\tfrac{5}{9} \end{align*} $$

and

$$ \begin{align*} \gamma^+_\varphi=\log\max\{p_1,1-p_1\}=\log\tfrac{2}{3}, \end{align*} $$

and hence $P(2\varphi )<\gamma ^+_\varphi $, as expected. Solving equation (11) numerically gives

So, in this case, Theorem 3.1 reads

We refer to Figure 1, where this spectrum is plotted together with  and .

Remark 3.2. One can check that, as $p_1\to \tfrac 12$,  and $\lim \nolimits _{p_1\to {1/2}} q^*_\varphi =-1$, as expected.
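The numerical solution of equation (11) in this Bernoulli example, and the limit in Remark 3.2, can be reproduced with a short bisection. The sketch below uses $P(\beta\varphi)=\log(p_1^\beta+(1-p_1)^\beta)$ and $\gamma^+_\varphi=\log\max\{p_1,1-p_1\}$; bisection on $[-1,0]$ is justified because $q\mapsto P((1-q)\varphi)$ is increasing and, by Proposition 3.1, the root lies in $[-1,0[$. The function names are ours.

```python
import math


def pressure(beta, p1):
    """P(beta*phi) for the Bernoulli potential phi(0)=log(1-p1), phi(1)=log(p1),
    i.e. log(p1**beta + (1-p1)**beta), computed in log-sum-exp form."""
    a, b = beta * math.log(1 - p1), beta * math.log(p1)
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def gamma_plus(p1):
    """gamma^+_phi = log max{p1, 1 - p1}."""
    return math.log(max(p1, 1 - p1))


def q_star(p1, tol=1e-12):
    """Bisection for the unique root in [-1, 0[ of P((1-q)phi) = gamma^+,
    i.e. equation (11); q -> P((1-q)phi) is increasing since phi < 0."""
    lo, hi = -1.0, 0.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if pressure(1 - mid, p1) < gamma_plus(p1):
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for p1 in (1 / 3, 0.45, 0.49, 0.499):
        print(p1, q_star(p1))
```

For $p_1=1/3$ the root is close to $-0.673$, and it moves towards $-1$ as $p_1$ approaches $\tfrac12$, consistent with Remark 3.2.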

3.4.2 Markov chains

If a potential $\varphi $ depends only on the first two symbols, that is, $\varphi (x)=\varphi (x_0,x_1)$, then the corresponding process is a Markov chain. For Markov chains on $\mathcal {A}=\{1,\ldots ,K\}$ with transition matrix $(Q(a,b))_{a,b\in \mathcal {A}}$, a well-known result [Reference Szpankowski19, for instance] states that

$$ \begin{align} \gamma^+_\varphi=\max_{1\le \ell\le K}\max_{a_1^{\ell}\in \mathcal{C}_{\ell}}\frac{1}{\ell}\log\prod_{i=1}^{\ell}Q(a_{i},a_{i+1}), \end{align} $$


where $\mathcal {C}_{\ell }$ is the set of cycles of length $\ell$ of distinct symbols of $\mathcal {A}$, with the convention that $a_{\ell+1}=a_1$ (circuits). On the other hand, it is well known [Reference Szpankowski19] that

where $\lambda _{\ell }$ is the largest eigenvalue of the matrix $((Q(a,b))^\ell )_{a,b\in \mathcal {A}}$ (entrywise powers). This means that, in principle, everything is explicit in the Markov case. In practice, however, the calculations become intractable even for some innocent-looking examples. Let us restrict to binary Markov chains ($\mathcal {A}=\{0,1\}$), which are reversible. In this case, (13) simplifies to

(See, for instance, [Reference Kamath and Verdú14].) If we further assume symmetry, that is, $Q(1,1)=Q(0,0)$, then we obtain

and $\gamma ^+_\varphi =\max \{\log Q(0,0),\log Q(0,1)\}$. If we want to go beyond the symmetric case, the explicit expression of  gets cumbersome. As an illustration, consider the case $Q(0,0)=0.2$ and $Q(1,1)=0.6$. Then

From (14) we easily obtain $\gamma _\varphi ^+=\log (0.6)$. The solution of equation (11) can be found numerically: $q^*_\varphi \approx -0.870750$.
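The two numbers above can be reproduced with a short Python sketch. It evaluates $\gamma^+_\varphi$ through the cycle formula (13) for $K=2$ (the loops at $0$ and $1$, and the 2-cycle $0\to1\to0$), and the pressure $P(\beta\varphi)$ as the logarithm of the largest eigenvalue of the entrywise power $(Q(a,b)^\beta)_{a,b}$. Taking $\varphi(x)=\log Q(x_0,x_1)$ as the potential of the chain is our assumption here. Equation (11) is then solved by bisection on $[-1,0]$. Function names are ours.

```python
import math

# Binary Markov chain of the example: Q(0,0) = 0.2, Q(1,1) = 0.6.
Q = [[0.2, 0.8],
     [0.4, 0.6]]


def gamma_plus_binary(Q):
    """Cycle formula (13) for K = 2: best of the loops 0->0, 1->1 and of the
    2-cycle 0->1->0 (averaged over its length)."""
    return max(math.log(Q[0][0]), math.log(Q[1][1]),
               0.5 * math.log(Q[0][1] * Q[1][0]))


def pressure_binary(beta, Q):
    """P(beta*phi) for phi(x) = log Q(x_0, x_1): log of the largest eigenvalue
    of the entrywise power (Q(a,b)**beta)_{a,b} (closed form for 2x2)."""
    a, b = Q[0][0] ** beta, Q[0][1] ** beta
    c, d = Q[1][0] ** beta, Q[1][1] ** beta
    lam = 0.5 * (a + d + math.sqrt((a - d) ** 2 + 4 * b * c))
    return math.log(lam)


def q_star(Q, tol=1e-12):
    """Bisection for equation (11): P((1-q)phi) = gamma^+, root in [-1, 0[."""
    g = gamma_plus_binary(Q)
    lo, hi = -1.0, 0.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if pressure_binary(1 - mid, Q) < g:  # still left of the root
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(math.exp(gamma_plus_binary(Q)))  # 0.6 up to rounding, i.e. gamma^+ = log(0.6)
print(q_star(Q))                       # about -0.87075
```

For $K>2$ the closed-form eigenvalue would have to be replaced by a general eigenvalue routine, and the cycle formula by an enumeration of the cycles in $\mathcal C_\ell$.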

4 Proofs

4.1 Proof of Proposition 3.1

Recall that

$$ \begin{align*} \mathcal M_\varphi(q)=P((1-q)\varphi)\quad\text{and}\quad \gamma_\varphi^+=\sup_{\eta\in\mathscr{M}_\theta(\mathcal{A}^{\mathbb{N}})} \int \varphi \,d\eta. \end{align*} $$


It follows easily from the basic properties of $\beta \mapsto P(\beta \varphi )$ listed above that the map $q\mapsto \mathcal M_\varphi (q)$ is a bijection from $\mathbb{R}$ to $\mathbb{R}$, since it is a strictly increasing $C^1$ function which tends to $\pm\infty$ as $q\to\pm\infty$ (recall that $\varphi<0$). This implies that the equation $\mathcal M_\varphi (q)=\gamma _\varphi ^+$ has a unique solution $q_\varphi ^*$, which is necessarily strictly negative, since $\gamma _\varphi ^+<0$ (because $\varphi <0$) and $\mathcal M_\varphi (q)< 0$ if and only if $q< 0$ (since $P(\varphi )=0$).

We now prove that $q_\varphi ^*\geq -1$. We use the variational principle (4) twice, first for $2\varphi $ and then for $\varphi $, to get

$$ \begin{align*} \mathcal M_\varphi(-1) &=P(2\varphi)=h(\mu_{2\varphi})+2\int \varphi \,d\mu_{2\varphi}\\ & = h(\mu_{2\varphi})+\int \varphi \,d\mu_{2\varphi}+\int \varphi \,d\mu_{2\varphi}\\ & \leq P(\varphi) +\int \varphi \,d\mu_{2\varphi}=\int \varphi \,d\mu_{2\varphi}\quad\text{(since } P(\varphi)=0\text{)}\\ & \leq\gamma_\varphi^+. \end{align*} $$


Hence, $q_\varphi ^*\geq -1$, since $q\mapsto \mathcal M_\varphi (q)$ is increasing. Notice that $\mathcal M_\varphi $ is a bijection between $[-1,0]$ and $[P(2\varphi ),0]$, and that $\gamma _\varphi ^+\in [P(2\varphi ),0]$.

It remains to analyze the ‘critical case’, that is, $q_\varphi ^*=-1$.

If $\varphi =u-u\circ \theta -\log |\mathcal {A}|$, where $u:\mathcal{A}^{\mathbb{N}}\to\mathbb{R}$ is continuous, then $\mathcal M_\varphi (q)=P((1-q)\varphi)=\log|\mathcal A|-(1-q)\log|\mathcal A|=q\log|\mathcal A|$ (adding a coboundary does not change the pressure) and $\gamma^+_\varphi=-\log|\mathcal A|$. Hence the equation $\mathcal M_\varphi (q)=\gamma _\varphi ^+$ boils down to $q\log |\mathcal {A}|=-\log |\mathcal {A}|$, and $q_\varphi ^*=-1$.

We now prove the converse. It is convenient to introduce the auxiliary function

$$ \begin{align*} q\mapsto -\frac{P((1+q)\varphi)}{q}. \end{align*} $$

We collect its basic properties in the following lemma, the proof of which is given at the end of this section.

Lemma 4.1. The map $q\mapsto -P((1+q)\varphi)/q$ has a continuous extension at $0$, where it takes the value $h(\mu _\varphi )$. It is $C^1$ and decreasing on $(0,+\infty )$, and it converges to $-\gamma^+_\varphi$ as $q\to+\infty$. Moreover, its derivative at $q=1$ is equal to $h(\mu_{2\varphi})+\int\varphi\,d\mu_{2\varphi}$.

The condition $q_\varphi ^*=-1$ is equivalent to $\mathcal M_\varphi (-1)=\gamma _\varphi ^+$, which, in turn, is equivalent to $-P(2\varphi)=-\gamma^+_\varphi$, that is, to the auxiliary function taking the value $-\gamma^+_\varphi$ at $q=1$. But, since the auxiliary function decreases to $-\gamma _\varphi ^+$, it must then be equal to $-\gamma^+_\varphi$ for all $q\geq 1$, and hence its right derivative at $1$ is equal to $0$. Since it is differentiable, its left derivative at $1$ is also equal to $0$, so that its derivative at $q=1$ vanishes. But, by the last statement of the lemma, this means that $h(\mu _{2\varphi })+\int \varphi \,d\mu _{2\varphi }=0$, which is possible if and only if $\mu _{2\varphi }=\mu _\varphi $, by the variational principle (since $h(\eta )+\int \varphi \,d\eta =0$ if and only if $\eta =\mu _\varphi $). In turn, this equality holds if and only if there exist a continuous function $u:\mathcal{A}^{\mathbb{N}}\to\mathbb{R}$ and a constant $c\in\mathbb{R}$ such that $2\varphi =\varphi + u-u\circ \theta +c$, which is equivalent to

$$ \begin{align*} \varphi=u-u\circ\theta+c. \end{align*} $$

Since $P(\varphi )=0$, one must have $c=-\log |\mathcal {A}|$.

The proof of the proposition is complete.

Proof of Lemma 4.1

Since

$$ \begin{align*} \frac{\,d}{\,d q} P(\varphi+q\varphi)\bigg|_{q=0}=\int \varphi \,d\mu_\varphi, \end{align*} $$


we can use l’Hospital’s rule (recall that $P(\varphi)=0$) to conclude that

$$ \begin{align*} \lim_{q\to 0}-\frac{P((1+q)\varphi)}{q}=-\int\varphi\,d\mu_\varphi=h(\mu_\varphi), \end{align*} $$

where we used the variational principle for $\varphi $. Hence, we can extend the auxiliary function at $0$ (and denote the continuous extension by the same symbol). Then, since the pressure function is $C^1$, we have, for $q>0$ and using the variational principle twice, that

$$ \begin{align*} \frac{d}{dq}\bigg(-\frac{P((1+q)\varphi)}{q}\bigg) &=\frac{P((1+q)\varphi)-q\int\varphi\,d\mu_{(1+q)\varphi}}{q^2}\\ &=\frac{h(\mu_{(1+q)\varphi})+\int\varphi\,d\mu_{(1+q)\varphi}}{q^2}\leq\frac{P(\varphi)}{q^2}=0. \end{align*} $$

Hence, the auxiliary function is $C^1$ and decreases on $(0,+\infty )$. Taking $q=1$ gives the last statement of the lemma. Finally, we prove that it converges to $-\gamma^+_\varphi$ as $q\to+\infty$. By an obvious change of variable and a change of sign, it is equivalent to prove that

$$ \begin{align} \lim_{q\to+\infty}\frac{P(q\varphi)}{q}=\gamma_\varphi^+. \tag{15} \end{align} $$

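By the variational principle applied to $q\varphi $,

$$ \begin{align*} P(q\varphi)\geq h(\eta)+q\int\varphi\,d\eta \end{align*} $$

for any shift-invariant probability measure $\eta $. Therefore, for any $q>0$, we get

$$ \begin{align*} \frac{P(q\varphi)}{q}\geq\frac{h(\eta)}{q}+\int\varphi\,d\eta\geq\int\varphi\,d\eta, \end{align*} $$

and hence

$$ \begin{align*} \liminf_{q\to+\infty}\frac{P(q\varphi)}{q}\geq\int\varphi\,d\eta. \end{align*} $$

Taking $\eta $ to be a maximizing measure for $\varphi $, we obtain

$$ \begin{align} \liminf_{q\to+\infty}\frac{P(q\varphi)}{q}\geq\gamma_\varphi^+. \tag{16} \end{align} $$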

(By compactness of $\mathscr {M}_\theta (\mathcal {A}^{\mathbb {N}})$, there exists at least one shift-invariant measure maximizing $\eta\mapsto\int \varphi \,d\eta $.) We now use (7). For any $q>0$, we have the trivial bound

$$ \begin{align*} \frac{1}{n}\log \sum_{a_0^{n-1}} {e}^{q\sup\{\sum_{k=0}^{n-1} \varphi(a_k^{n-1}x_n^\infty): x_n^\infty\in \mathcal{A}^{\mathbb{N}}\}} \leq q\, \frac{1}{n} {\sup_y \sum_{k=0}^{n-1}\varphi(y_k^\infty)} + \log|\mathcal{A}|. \end{align*} $$


Hence, by taking the limit $n\to \infty $ on both sides and using (19) (see the next subsection), we have, for any $q>0$,

$$ \begin{align*} \frac{P(q\varphi)}{q}\leq\gamma_\varphi^++\frac{\log|\mathcal{A}|}{q}, \end{align*} $$

and hence

$$ \begin{align*} \limsup_{q\to+\infty}\frac{P(q\varphi)}{q} \leq \gamma_\varphi^+. \end{align*} $$


Combining this inequality with (16) gives (15). The proof of the lemma is complete.
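Lemma 4.1 can be checked numerically on the Bernoulli potential of §3.4.1 with $p_1=1/3$, for which $P(\beta\varphi)=\log(p_1^\beta+(1-p_1)^\beta)$. The Python sketch below evaluates the auxiliary function, taken to be $q\mapsto -P((1+q)\varphi)/q$, on a geometric grid of positive $q$. It verifies that the function decreases from (nearly) $h(\mu_\varphi)$ towards $-\gamma^+_\varphi=\log\tfrac32$, and that a finite-difference derivative at $q=1$ matches $h(\mu_{2\varphi})+\int\varphi\,d\mu_{2\varphi}$. The grid and all names are illustrative choices.

```python
import math

# Bernoulli example of Section 3.4.1 (p1 = 1/3): phi(0)=log(2/3), phi(1)=log(1/3).
P1 = 1 / 3
LOGS = (math.log(1 - P1), math.log(P1))
GAMMA_PLUS = max(LOGS)                          # sup_eta int phi d(eta)
ENTROPY = -sum(math.exp(l) * l for l in LOGS)   # h(mu_phi)


def pressure(beta):
    """P(beta*phi) = log(sum_a m(a)**beta), in log-sum-exp form."""
    a, b = beta * LOGS[0], beta * LOGS[1]
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def aux(q):
    """Auxiliary map q -> -P((1+q)phi)/q, for q > 0."""
    return -pressure(1 + q) / q


def derivative_at_one():
    """h(mu_{2phi}) + int phi d(mu_{2phi}), the claimed value of aux'(1)."""
    w = [math.exp(2 * l - pressure(2)) for l in LOGS]  # weights of mu_{2phi}
    entropy = -sum(wi * math.log(wi) for wi in w)
    return entropy + sum(wi * l for wi, l in zip(w, LOGS))


grid = [0.001 * 1.5 ** k for k in range(40)]   # q from 1e-3 up to about 7e3
values = [aux(q) for q in grid]
```

Here $\mu_{2\varphi}$ is again a product measure, with weights proportional to $m(a)^2$, which is why its entropy and $\int\varphi\,d\mu_{2\varphi}$ are explicit.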

4.2 Proof of Proposition 3.2

For each $n\geq 1$, let

$$ \begin{align*} \gamma_{\varphi,n}^+=\frac{1}{n} \max_{a_0^{n-1}} \log \mu_\varphi([a_0^{n-1}])\quad\text{and}\quad s_n(\varphi)=\max_y \sum_{k=0}^{n-1}\varphi(y_k^\infty). \end{align*} $$


(We can put a maximum instead of a supremum in the definition of $s_n(\varphi )$ since, by compactness of $\mathcal {A}^{\mathbb {N}}$, the supremum of the continuous function $x\mapsto \sum _{k=0}^{n-1}\varphi (x_k^\infty )$ is attained at some y.) Fix $n\geq 1$. We have

$$ \begin{align*} s_n(\varphi) =\max_{a_0^{n-1}}\max_{y:y_0^{n-1}=a_0^{n-1}} \sum_{k=0}^{n-1}\varphi(y_k^\infty) =\max_{a_0^{n-1}}\max_{y_{n}^\infty} \sum_{k=0}^{n-1}\varphi(a_k^{n-1} y_{n}^\infty). \end{align*} $$


Since $\mathcal {A}^{\mathbb {N}}$ is compact and $\varphi $ is continuous, for each n there exists a point $z^{(n)}\in \mathcal {A}^{\mathbb {N}}$ such that

$$ \begin{align} s_n(\varphi)=\max_{a_0^{n-1}}\sum_{k=0}^{n-1}\varphi(a_k^{n-1} (z^{(n)})_{n}^\infty). \end{align} $$


Now, using (5), we get

$$ \begin{align} \bigg| \gamma_{\varphi,n}^+- \frac{1}{n}\max_{a_0^{n-1}}\sum_{k=0}^{n-1} \varphi(a_{k}^{n-1} x_{n}^\infty)\bigg| \leq \frac{C}{n} \end{align} $$


for any choice of $x_{n}^\infty \in \mathcal {A}^{\mathbb {N}}$, so we can take $x_{n}^\infty =(z^{(n)})_{n}^\infty $. By using (18) and (17), we thus obtain

$$ \begin{align*} \bigg| \gamma_{\varphi,n}^+- \frac{s_n(\varphi)}{n}\bigg| \leq \frac{C}{n},\; n\geq 1. \end{align*} $$


Now, one can check that $(s_n(\varphi ))_n$ is a subadditive sequence such that $\inf _m m^{-1} s_m(\varphi ) \geq -\|\varphi \|_\infty $. Hence, by Fekete’s lemma (see, for example, [Reference Szpankowski20]), $\lim _n n^{-1}s_n(\varphi )$ exists, so the limit of $(\gamma _{\varphi ,n}^+)_{n\ge 1}$ also exists and coincides with $\lim _n n^{-1}s_n(\varphi )$. We now use the fact that

$$ \begin{align} \lim_n \frac{s_n(\varphi)}{n}=\sup_{\eta\in\mathscr{M}_\theta(\mathcal{A}^{\mathbb{N}})} \int \varphi \,d \eta. \end{align} $$


The proof is found in [Reference Jenkinson13, Proposition 2.1]. This finishes the proof of Proposition 3.2.
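The limit (19) can be watched numerically for the binary Markov example of §3.4.2, taking $\varphi(x)=\log Q(x_0,x_1)$ (our assumption for the potential of that chain). Because $\varphi$ then depends on two symbols only, $s_n(\varphi)$ is the maximal weight of a path of length $n$ in the transition graph. It can therefore be computed by a max-plus (Viterbi-type) recursion, and $s_n(\varphi)/n$ should approach $\gamma^+_\varphi=\log 0.6$. This is a sketch; the names are ours.

```python
import math

# Potential phi(x) = log Q(x_0, x_1) of the binary Markov example of Section 3.4.2.
Q = [[0.2, 0.8],
     [0.4, 0.6]]
PHI = [[math.log(Q[a][b]) for b in range(2)] for a in range(2)]


def s_n(n):
    """s_n(phi) = max over y of sum_{k<n} phi(y_k, y_{k+1}), computed by a
    max-plus recursion over the last symbol of the path."""
    best = [0.0, 0.0]  # best[b]: max weight of a path of the current length ending at b
    for _ in range(n):
        best = [max(best[a] + PHI[a][b] for a in range(2)) for b in range(2)]
    return max(best)


for n in (1, 10, 100, 1000):
    print(n, s_n(n) / n)
```

In this example the optimal path enters state $1$ through the transition $0\to1$ and then stays there, so $s_n(\varphi)=\log 0.8+(n-1)\log 0.6$ and $|s_n(\varphi)/n-\gamma^+_\varphi|$ decays like $1/n$, in line with the $C/n$ bound above.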

4.3 Auxiliary results concerning recurrence times

In this subsection, we state some auxiliary results on recurrence times which will be used in the proofs of the main theorems.

4.3.1 Exponential approximation of return-time distribution

The following result of [Reference Abadi, Amorim and Gallo1] will be important in the proof of Theorem 3.1 for $q>0$.

We recall that a measure $\mu $ enjoys the $\psi $-mixing property if the sequence $(\psi (\ell ))_{\ell \geq 1}$ decreases to zero, where

$$ \begin{align*} \psi(\ell):=\sup_{j\geq 1}\sup_{B\in \mathscr{F}_0^j,\, B'\in \mathscr{F}_{j+\ell}^\infty} \bigg| \frac{\mu(B\cap B')}{\mu(B)\mu(B')}-1\bigg|. \end{align*} $$


Theorem 4.1. (Exponential approximation under $\psi $-mixing)

Let $(X_k)_{k\geq 0}$ be a process distributed according to a $\psi $-mixing measure $\mu $. There exist constants $C,C'>0$ such that, for any $x\in \mathcal {A}^{\mathbb {N}}$, $n\geq 1$ and $t\ge \tau (x_0^{n-1})$,

$$ \begin{align} &|\,\mu_{x_0^{n-1}}(T_{x_0^{n-1}}> t)-\zeta_\mu(x_0^{n-1}){e}^{-\zeta_\mu(x_0^{n-1})\mu([x_0^{n-1}])(t-\tau(x_0^{n-1}))}|\nonumber\\ &\quad\le \begin{cases} C\epsilon_n & \text{if } t\le \dfrac{1}{2\mu([x_0^{n-1}])},\\[3pt] C\epsilon_n\mu([x_0^{n-1}])\,t{e}^{-(\zeta_\mu(x_0^{n-1})-C'\!\epsilon_n)\mu([x_0^{n-1}])t} & \text{if } t> \dfrac{1}{2\mu([x_0^{n-1}])}, \end{cases} \end{align} $$


where $(\epsilon _n)_n$ is a sequence of positive real numbers converging to $0$, and where $\tau (x_0^{n-1})$ and $\zeta _\mu (x_0^{n-1})$ are defined in (22) and (23), respectively.

In [Reference Abadi, Amorim and Gallo1], this is Theorem 1, statement 2, combined with Remark 2. A consequence of $\psi $-mixing is that there exist $c_1,c_2>0$ such that $\mu ([x_0^{n-1}])\leq c_1 {e}^{-c_2 n}$ for all x and n. For $\mu=\mu_\varphi$, this also follows from (5), since $\varphi <0$.

Remark 4.1. A previous version of the present paper relied on an exponential approximation of the return-time distribution given in [Reference Abadi and Vergne3], but the error term stated there turned out to be wrong for $t\le 1/(2\mu ([x_0^{n-1}]))$. This mistake was fixed in [Reference Abadi, Amorim and Gallo1].

Equilibrium states with potentials of summable variation are $\psi $-mixing.

Proposition 4.1. Let $\varphi $ be a potential of summable variation. Then its equilibrium state $\mu _\varphi $ is $\psi $-mixing.

Proof. The proof follows easily from (6) with $i=0$. First, notice that this double inequality obviously holds for any $F\in \mathscr F_0^{m-1}$ in place of $a_0^{m-1}\in \mathcal {A}^{m}$. Moreover, by the monotone class theorem, it also holds for any $G\in \mathscr F$ in place of $b_0^{n-1}\in \mathcal {A}^n$, and we obtain that, for any $m\ge 1$, $F\in \mathscr F_0^{m-1}$ and $G\in \mathscr F$,

$$ \begin{align} C^{-3} \leq \frac{\mu_\varphi(F\cap \theta^{-m}G)}{\mu_\varphi(F)\,\mu_\varphi(G)}\leq C^3. \end{align} $$


We now apply Theorem 4.1(2) in [Reference Bradley5] to conclude the proof.
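To make the $\psi $-mixing property concrete, here is a small numerical sketch for a hypothetical two-state Markov measure with strictly positive transition matrix $P$ (such a measure is the equilibrium state of the locally constant potential $\log P(x_0,x_1)$, which has summable variation). In the standard sense, $\psi $-mixing asks that $\sup |\mu(F\cap\theta^{-(m+g)}G)/(\mu(F)\mu(G))-1|$ tends to $0$ as the gap $g$ grows. For cylinders $F=[a_0^{m-1}]$ and $G=[b_0^{k-1}]$ the Markov property gives the ratio $P^{g+1}(a_{m-1},b_0)/\pi(b_0)$, where $\pi$ is the stationary vector, so the supremum over cylinders reduces to $\max_{i,j}|P^{g+1}(i,j)/\pi(j)-1|$. The matrix below and the function names are illustrative assumptions, not objects from the paper.

```python
def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def stationary_2x2(P):
    """Stationary distribution of a 2x2 stochastic matrix with P[0][1] + P[1][0] > 0."""
    s = P[0][1] + P[1][0]
    return [P[1][0] / s, P[0][1] / s]


def psi_cylinders(P, gap):
    """sup over cylinders F = [a_0^{m-1}], G = [b_0^{k-1}] of
    |mu(F ∩ θ^{-(m+gap)} G) / (mu(F) mu(G)) - 1|, which for a Markov
    measure equals max_{i,j} |P^{gap+1}(i, j) / pi(j) - 1|."""
    pi = stationary_2x2(P)
    Q = P
    for _ in range(gap):
        Q = mat_mul(Q, P)  # after the loop, Q = P^(gap + 1)
    return max(abs(Q[i][j] / pi[j] - 1) for i in range(2) for j in range(2))


P = [[0.9, 0.1],
     [0.4, 0.6]]  # hypothetical strictly positive transition matrix
for g in range(6):
    print(g, psi_cylinders(P, g))
```

For this particular matrix, $\pi=(0.8,0.2)$ and the second eigenvalue is $0.5$, so the coefficient decays geometrically as $2\cdot 2^{-g}$; at gap $0$ the ratio stays in $[0.5,3]$, a bounded two-sided estimate of the same kind as the one displayed in the proof.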

Remark 4.2. Let us mention that, although the $\psi $-mixing property, per se, is not studied in [Reference Walters22], it is a consequence of what is actually proved in the proof of Theorem 3.2 therein.

4.3.2 First possible return time and potential well

For the proof of the main theorem in the case $q<0$, we will need to consider the short recurrence properties of the measures. The smallest possible return time in a cylinder $[a_0^{n-1}]$, also called its period, will play a particularly important role. It is defined by
$$ \begin{align} \tau(a_0^{n-1}) := \min\{k\in\{1,\ldots,n\}:\ a_k^{n-1}=a_0^{n-1-k}\}, \end{align} $$

with the convention that the condition holds trivially for $k=n$ (both sides are then empty words).

One can check that $\tau (a_0^{n-1})=\inf \{k\geq 1: [a_0^{n-1}]\cap \theta ^{-k}[a_0^{n-1}]\neq \emptyset \}$. Observe that $\tau (a_0^{n-1})\leq n$ for all $n\geq 1$.
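In the full shift, for $k\le n$ the set $[a_0^{n-1}]\cap \theta ^{-k}[a_0^{n-1}]$ is nonempty exactly when the word overlaps itself with shift $k$, that is, $a_k^{n-1}=a_0^{n-1-k}$, and it is always nonempty for $k\ge n$. The Python sketch below, an illustration only that assumes the full shift on a small alphabet and encodes words as strings, computes $\tau$ from this overlap criterion and cross-checks it against a brute-force search for a point in the intersection. The function names are hypothetical.

```python
from itertools import product


def period(a):
    """tau(a) via self-overlap: smallest k in {1, ..., n} with a[k:] == a[:n-k].
    For k = n both sides are empty, so the minimum exists and tau(a) <= n."""
    n = len(a)
    return next(k for k in range(1, n + 1) if a[k:] == a[:n - k])


def period_by_intersection(a, alphabet):
    """Brute-force inf{k >= 1 : [a] ∩ θ^{-k}[a] ≠ ∅} in the full shift.
    For k <= n the intersection is nonempty iff some word w of length n + k
    starts with a and satisfies w[k:k+n] == a."""
    n = len(a)
    for k in range(1, n + 1):
        for tail in product(alphabet, repeat=k):
            w = a + "".join(tail)  # a finite word in the cylinder [a]
            if w[k:k + n] == a:    # ... whose shift by k is again in [a]
                return k
    raise AssertionError("unreachable: k = n always works in the full shift")


for a in ["aaa", "abab", "abaab", "abc"]:
    print(a, period(a), period_by_intersection(a, "abc"))
```

The overlap test is what one would use in practice; the brute-force version only serves to check it against the set-theoretic characterization.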

Let $\mu $ be a probability measure and assume that it has complete grammar, that is, it gives positive measure to all cylinders. We denote by $\mu _{a_0^{n-1}}(\cdot ):=\mu ([a_0^{n-1}]\cap \cdot )/\mu ([a_0^{n-1}])$ the measure conditioned on $[a_0^{n-1}]$. For any $a_0^{n-1}\in \mathcal {A}^n$, define

$$ \begin{align} \zeta_\mu(a_0^{n-1}) & := \mu_{a_0^{n-1}}( T_{a_0^{n-1}}\neq \tau(a_0^{n-1}))\\ \nonumber & =\mu_{a_0^{n-1}}( T_{a_0^{n-1}}> \tau(a_0^{n-1})). \end{align} $$


This quantity was called the potential well in [Reference Abadi, Amorim and Gallo1, Reference Abadi, Cardeño and Gallo2]; it appears as an additional scaling factor in exponential approximations of the distributions of hitting and return times (see, for instance, the next subsection).
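For a Bernoulli (product) measure with marginal $p$, assumed here only for illustration (it is the equilibrium state of the locally constant potential $\varphi(x)=\log p(x_0)$), the potential well has a closed form. Take $T_{a_0^{n-1}}(x)=\inf\{k\ge 1:\theta^k x\in[a_0^{n-1}]\}$ (assumed to match the hitting time used in this section). Given $x\in[a_0^{n-1}]$, the first $n-\tau$ coordinates of $\theta^{\tau}x$ already agree with $a_0^{n-1}$ by definition of the period, so $\{T=\tau\}$ only requires $x_n^{n+\tau-1}=a_{n-\tau}^{n-1}$. By independence, $\zeta_\mu(a_0^{n-1})=1-\prod_{j=n-\tau}^{n-1}p(a_j)\ge 1-\max_a p(a)$, which is bounded away from $0$. The sketch below checks the closed form against a direct sum over the $\tau$ symbols following $a_0^{n-1}$; the marginal and the function names are hypothetical.

```python
from itertools import product
from math import isclose, prod


def period(a):
    """Smallest k in {1, ..., n} with a[k:] == a[:n-k]; k = n always qualifies."""
    n = len(a)
    return next(k for k in range(1, n + 1) if a[k:] == a[:n - k])


def zeta_bernoulli(a, p):
    """Closed form: 1 - product of p over the last tau symbols of a."""
    n, tau = len(a), period(a)
    return 1 - prod(p[s] for s in a[n - tau:])


def zeta_brute_force(a, p):
    """mu_a(T_a != tau), summing over the tau symbols that follow a."""
    n, tau = len(a), period(a)
    hit = 0.0  # accumulates mu_a(T_a = tau)
    for tail in product(p.keys(), repeat=tau):
        w = a + "".join(tail)    # x_0^{n+tau-1} with x_0^{n-1} = a
        if w[tau:tau + n] == a:  # theta^tau x lies in [a]
            hit += prod(p[s] for s in tail)
    return 1 - hit


p = {"0": 0.7, "1": 0.3}  # hypothetical Bernoulli marginal
for a in ["000", "0101", "011", "0010010"]:
    z = zeta_bernoulli(a, p)
    print(a, period(a), z, isclose(z, zeta_brute_force(a, p)))
```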

Remark 4.3. For $t<\mu ([a_0^{n-1}])\,\tau (a_0^{n-1})$,

$$ \begin{align*} \mu_{a_0^{n-1}}\bigg(T_{a_0^{n-1}}\le \frac{t}{\mu([a_0^{n-1}])}\bigg)=0 \end{align*} $$


since, by definition, $\mu _{a_0^{n-1}}(T_{a_0^{n-1}}< \tau (a_0^{n-1}) )=0$ (and hence the rightmost equality in (23)).
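Remark 4.3, the identity (23) and the exponential approximation recalled above from [Reference Abadi, Amorim and Gallo1] can be inspected numerically on a simple example. The sketch below assumes, purely for illustration, a Bernoulli measure on $\{0,1\}$ with a hypothetical marginal $p$, and takes $T_{a}(x)$ to be the first $k\ge 1$ with $\theta^k x\in[a]$. It computes the exact law of $T_a$ under $\mu_a$ by running the Knuth-Morris-Pratt pattern automaton of the word $a$ (a standard string-matching tool swapped in here, not a method of the paper), started from the state reached after reading $a$. It checks that $\mu_a(T_a>t)=1$ for $t<\tau(a)$ and that $\mu_a(T_a>\tau(a))=\zeta_\mu(a)$, and reports the largest gap to $\zeta_\mu(a)\,e^{-\zeta_\mu(a)\mu([a])(t-\tau(a))}$. All names are hypothetical.

```python
from math import exp, isclose, prod


def prefix_function(a):
    """KMP failure function: fail[i] is the length of the longest proper
    border (prefix that is also a suffix) of a[:i + 1]."""
    fail = [0] * len(a)
    k = 0
    for i in range(1, len(a)):
        while k > 0 and a[i] != a[k]:
            k = fail[k - 1]
        if a[i] == a[k]:
            k += 1
        fail[i] = k
    return fail


def survival(a, p, t_max):
    """Exact mu_a(T_a > t) for t = 0, ..., t_max under the Bernoulli
    measure with marginal p (a dict symbol -> probability)."""
    n, fail = len(a), prefix_function(a)

    def step(q, c):
        # q = length of the longest prefix of a that is a suffix of the
        # symbols read so far (always q < n here).
        while q > 0 and a[q] != c:
            q = fail[q - 1]
        return q + 1 if a[q] == c else q

    # Given x_0^{n-1} = a, the automaton restarts from the longest border
    # of a; by independence the next symbols are i.i.d. with law p.
    dist = {fail[-1]: 1.0}
    out = [1.0]  # mu_a(T_a > 0) = 1
    for _ in range(t_max):
        new = {}
        for q, mass in dist.items():
            for c, pc in p.items():
                r = step(q, c)
                if r < n:  # r == n would be a new occurrence, i.e. a return
                    new[r] = new.get(r, 0.0) + mass * pc
        dist = new
        out.append(sum(dist.values()))
    return out


a = "0010010"             # hypothetical cylinder word
p = {"0": 0.7, "1": 0.3}  # hypothetical Bernoulli marginal
n = len(a)
tau = n - prefix_function(a)[-1]  # smallest period = n - longest border
mu_a = prod(p[s] for s in a)
zeta = 1 - prod(p[s] for s in a[n - tau:])
S = survival(a, p, int(5 / mu_a))

gap = max(abs(S[t] - zeta * exp(-zeta * mu_a * (t - tau)))
          for t in range(tau, len(S)))
print("tau =", tau, "zeta =", zeta)
print("mu_a(T_a > tau) equals zeta:", isclose(S[tau], zeta))
print("max gap to the exponential approximation:", gap)
```

Since the theorem recalled above only guarantees an error of order $\epsilon_n$, the printed gap should be read as indicative of that bound for this one word, not as a sharp constant.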

As already mentioned, equilibrium states with potentials of summable variation are $\psi $-mixing (see Proposition 4.1). Since, moreover, they have complete grammar, they satisfy the conditions of Theorem 2 of [Reference Abadi, Amorim and Gallo1]. This result states that the potential well is bounded away from $0$, that is,

in which