Introduction

CBT competence assessments involve making judgements about the quality or skilfulness of therapists’ performance and abilities in delivering cognitive behaviour therapy (CBT). This article provides a framework designed to support trainers, assessors, supervisors and therapists when making decisions about selecting and implementing effective strategies for assessing CBT competence. It focuses on how competence assessments can be used to facilitate the acquisition, development and evaluation of CBT knowledge and skills, both within training settings and in supporting continued professional development within clinical practice. The framework centres on five central questions of assessing CBT competence: why, what, when, who and how competence is assessed. The evidence base is used to explore each of these key questions and make recommendations (see Fig. 1 for an overview of key guidelines).

Figure 1. Recommendations to guide effective and useful strategies for assessing CBT competence.

Why assess CBT competence?

There are several reasons why the assessment of CBT competence is a critical component in maintaining ongoing delivery of high-quality CBT. First, effective assessment of CBT competence provides a means of monitoring standards of practice, thus ensuring treatment provision continues to be delivered in line with current best practice guidelines and in a way that is optimally effective for clients (Kazantzis, 2003). Second, competence assessments facilitate evaluation of the training of CBT therapists and ensure newly qualified therapists have not only acquired the necessary knowledge and skills but can also apply these in clinical practice (Decker et al., 2011). Third, competence assessments play a vital role in supporting acquisition and development of the knowledge and skills required to effectively deliver CBT by offering targeted, structured and focused feedback, promoting self-reflection, and guiding future learning (Bennett-Levy, 2006; Laireiter and Willutzki, 2003; McManus et al., 2010a). These issues have been highlighted by the United Kingdom’s (UK) initiative to Improve Access to Psychological Therapies (IAPT) (Clark, 2018), which has necessitated the large-scale training of psychological therapists to deliver evidence-based interventions in routine care. Such initiatives have relied heavily on assessment of competence in CBT to inform and evaluate the training of therapists as well as their subsequent provision of evidence-based interventions.

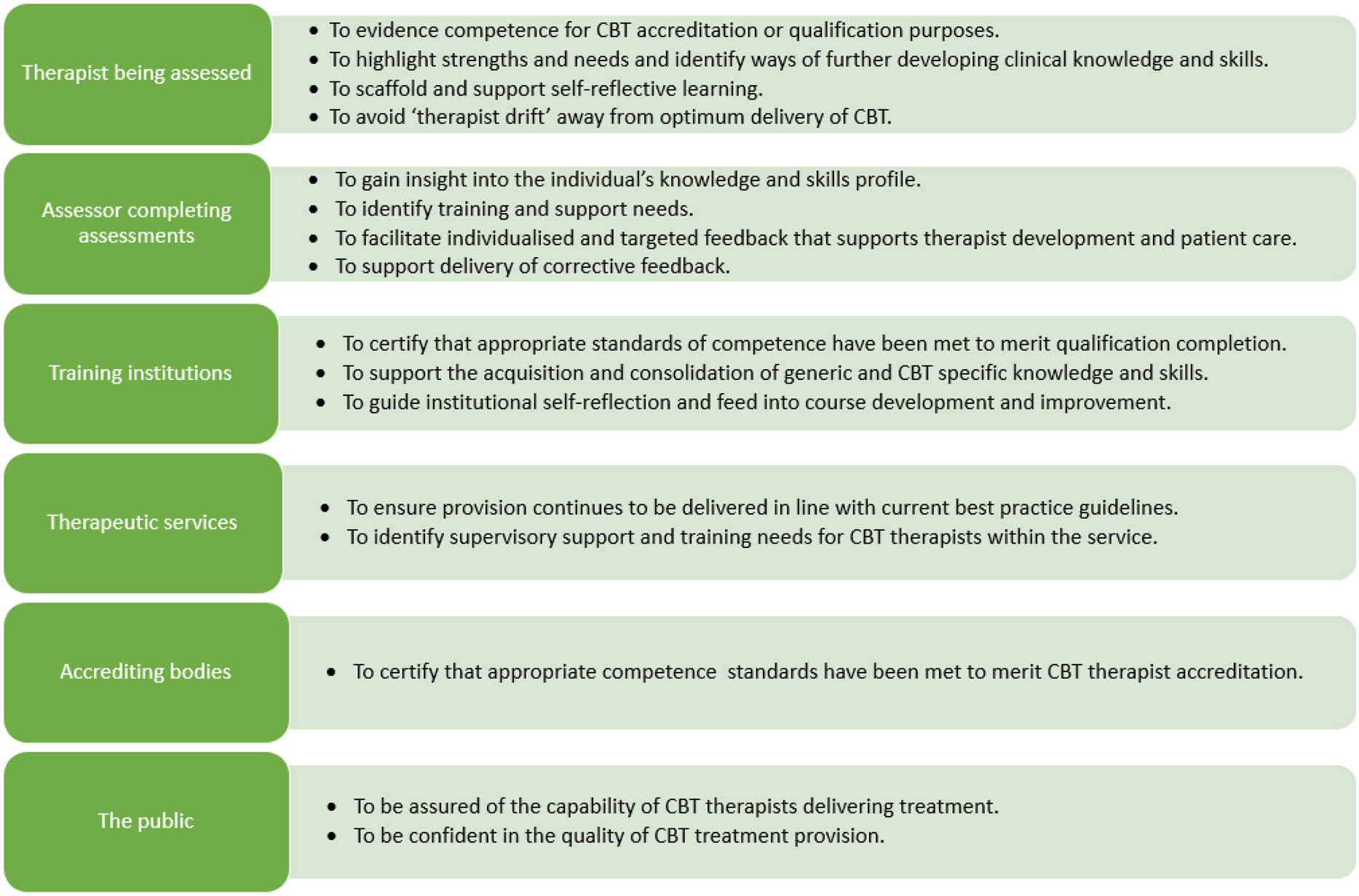

Assessments of CBT competence can serve a range of individual, institutional and societal purposes (see Fig. 2). Careful consideration of the purpose of an assessment of competence is an essential first step in deciding what, when, who and how to assess CBT competence. A helpful distinction is between formative and summative functions. Assessments are considered summative when used to establish if a therapist has reached a specified standard of competence (Harlen and Deakin Crick, 2003). This is important when certifying therapists’ completion of qualifications, granting accreditation, and monitoring quality of practice. The primary goal of formative assessments, however, is to facilitate learning by using structured feedback, which helps those being assessed recognise their strengths and needs, and identify ways of improving practice (Sadler, 1998). Formative assessment plays an important role not only in training but also in continued professional development and supervision. While formative and summative functions of assessments often overlap, identifying a primary focus as formative or summative will influence decisions about the optimal assessment strategy in a given circumstance. Where the assessment is summative, it is most important to provide a robust assessment, often made on the basis of a range of validated assessment tools that provide overall numerical ratings across all key areas of competence (Epstein, 2007). Where the primary purpose is formative, priority can be given to assessments providing in-depth and individually targeted feedback that encourages self-reflection and guides strategies for further development (Juwah et al., 2004; Nicol and Macfarlane-Dick, 2006). Whilst many summative assessment methods also offer opportunities for formative feedback, some formative assessment methods may not be suitable to meet summative requirements (Muse and McManus, 2013).

Figure 2. An overview of key individual, institutional and societal purposes of conducting CBT competence assessments.

Key stakeholders’ perspectives of the purpose of the assessment must also be considered. Although often aligned, it is important to be aware of, respond to, and manage discrepancies between key stakeholders’ goals for the assessment of competence when they arise. For example, trainees understandably prioritise optimising their chances of passing anxiety-provoking summative assessments (Van der Vleuten et al., 2010). Consequently, there may be a temptation to self-select their ‘best’ therapy sessions for review, to strategically and narrowly focus on learning and delivering the knowledge and skills being assessed by the measures of competence, or to attend only to negative feedback or the overall numeric feedback grade awarded. Anticipating such conflicts of interest allows for management strategies to be put in place to support formative development. This may include developing non-judgemental learning environments in which trainees are well-prepared for, understand, and are actively engaged in the process of how, why and when they will be assessed; ensuring feedback is delivered in a non-threatening, engaging manner within the context of a supportive supervisory or training relationship; clearly highlighting the most important ‘take home’ messages; ensuring feedback is strengths-based; allowing time and space for trainees to digest and respond to both positive and corrective feedback; and implementing formative as well as summative evaluations.

What is CBT competence?

A critical issue when assessing CBT competence is for all parties involved to agree a clear working definition of what is understood by the term CBT competence. This is not necessarily straightforward, as CBT competence is a complex construct involving multiple distinct aspects of knowledge and skill. For example, it needs to be decided whether to include protocol-specific elements, or non-CBT-specific general therapeutic skills. Broadly speaking, competent delivery of CBT can be defined as the degree to which a therapist demonstrates the knowledge and skills necessary to appropriately deliver CBT interventions in line with the current evidence base for treatment of an individual client’s presenting problem and with sensitivity to the patient’s assets, needs and values (Barber et al., 2007; Kaslow, 2004). However, the broad range of treatment approaches under the CBT umbrella and constantly evolving nature of CBT means that there is a lack of agreement about what constitutes effective CBT and therefore what is considered competent CBT practice. Roth and Pilling (2007) presented a framework of competences required to deliver effective CBT. While this framework has not been empirically investigated, it is reflective of expert opinion at that time and offers the most comprehensive overview of CBT competence available, identifying over 50 inter-related competencies.

The first group of Roth and Pilling’s (2007) competences are generic competences applicable across psychological therapies, including aspects of generic therapeutic knowledge (e.g. of mental health problems, ethical guidelines, etc.) and skills (e.g. ability to foster therapeutic alliance, warmth, empathy, listening skills, etc.). Four further aspects of competence relate to the knowledge and skills specific to the domain of CBT. Basic CBT competences are those used in most CBT interventions (e.g. knowledge of CBT principles, ability to explain CBT rationale, etc.). Specific behavioural and cognitive techniques relate to the use of specific techniques employed in most interventions (e.g. exposure techniques, working with safety-seeking behaviours, etc.). Problem-specific CBT skills are those used to deliver treatment packages for a particular problem presentation (e.g. understanding the role of hypervigilance in anxiety disorders, behavioural experiments to modify catastrophic misinterpretation). Finally, metacompetences are those needed to flexibly apply, adapt and pace CBT according to individual client needs (e.g. capacity to select and apply most appropriate CBT method, managing obstacles, etc.). Although typically not evaluated within CBT competence assessments, this set of knowledge and skills also sits within a broader framework of professional competences, ranging from effective use of supervision to research engagement and self-reflection (Barber et al., 2007; Kaslow, 2004). These key areas of knowledge and skills required to competently deliver CBT are outlined in Fig. 3.

Figure 3. A framework of clinical knowledge and skills required to competently deliver CBT drawn from Barber et al. (2007), Kaslow (2004), and Roth and Pilling (2007).

Examining the full range of CBT competences and using this to specify a working definition of CBT competence allows a decision to be made about which aspects of CBT competence should be assessed. Given the broad range of competences required to deliver CBT and the variety of ways of working within the CBT framework, assessments typically focus on a specified subset of generic and CBT-specific knowledge or skill. Given that it is essential that the methods used to assess competence are well aligned with the competences being evaluated, this decision directly influences decisions about how competence will be assessed. This may differ depending on the context and situation. For example, within a CBT training setting it may be important to implement a broad multi-method assessment strategy that assesses the breadth of a therapist’s generic and CBT-specific knowledge and skills. Conversely, developmental feedback within routine CBT supervision may focus on a specific skill or on the ability to deliver a specific treatment strategy. Regardless of the decision made, it is important that those completing the assessment, those being assessed, and those reviewing assessment outcomes are aware of, and understand, what aspects of competence are being examined and why.

When should CBT competence be assessed?

The ‘when’ of CBT competence assessment is important both within and outside of formal training settings. CBT competence assessments are firmly embedded within formal postgraduate CBT training settings in the UK, where multi-source assessments are submitted at several points over the course of training. These practice portfolios usually include assessment of several recorded therapy sessions using standardised rating scales, alongside written case reports and essays (Liness et al., 2019; McManus et al., 2010b). This allows evaluation of the direct and indirect effects of training efforts across key areas of Kirkpatrick’s (1967) training evaluation model: acquisition of knowledge and skill, behaviour in practice settings, and client outcomes (Decker et al., 2011). Within training contexts, assessments serve to establish whether trainee therapists meet a sufficient standard of CBT competence against specified benchmarks and learning outcomes. Training courses must therefore outline and gain institutional agreement as to when and how CBT competence is assessed. This relatively prescribed approach is necessary to ensure a robust and consistent strategy for assessing the acquisition and implementation of general therapeutic and CBT-specific knowledge and skills across training and practice settings (Decker et al., 2011; Muse and McManus, 2013). Yet it is important to remember that this close monitoring and regular feedback on performance is also designed to support the development and consolidation of therapeutic competences. Receiving feedback on in-session performance using standardised rating scales provides useful corrective feedback to trainees, leading to further improvements in competence (Weck et al., 2017a; Weck et al., 2021). Thus, for those completing or receiving assessments within training settings, the key issue may be in understanding the reasons why this strategy has been selected and identifying ways to make use of the feedback within these frameworks to support trainees’ personal learning goals.

Outside of training settings, ongoing monitoring of competence is not only necessary in the early skills-acquisition phase but is also essential for both novice and experienced therapists to maintain knowledge and skills and prevent ‘therapist drift’ away from optimum delivery of evidence-based CBT treatment practices (Waller and Turner, 2016). Evidence suggests that engaging in such regular deliberate practice can lead to better client outcomes in psychotherapy (Chow et al., 2015). Furthermore, therapeutic regulatory bodies typically use CBT competence assessments to provide evidence of competent practice for accreditation purposes. For example, within the UK and Ireland the British Association of Behavioural and Cognitive Psychotherapies (BABCP) provides CBT practitioner accreditation, which is maintained through periodic re-accreditation. Criteria for ongoing re-accreditation include engagement in ongoing supervision involving regular formal assessment of skill viewed in vivo or via session recordings using standardised rating scales (British Association of Behavioural and Cognitive Psychotherapies, 2012). However, using competence assessments within supervision offers much more than a formal annual proof of competence for accreditation purposes. Good practice guidelines recommend that formative competence assessments should be embedded within routine CBT supervision practices to support life-long competence development. In particular, standardised competence assessments based on direct observation of therapists’ skills within treatment sessions or role-plays provide structured, specific, accessible and accurate supervisor feedback, which is useful for supporting supervisee self-reflection and planning for further competence development (Milne, 2009; Padesky, 1996). Worryingly, supervision frequently relies on ‘talking about’ therapy within therapist-selected cases, rather than observation of therapist skill during supervisor-selected treatment sessions or role-plays (Ladany et al., 1996; Townend et al., 2002; Weck et al., 2017b). Pragmatic and flexible use of competence assessments can support these methods to be routinely embedded within supervision. For example, the ‘I-spy’ technique can be used within supervision to focus on developing a specific micro skill, such as agenda setting or reflective summaries (Gonsalvez et al., 2016). This technique involves reviewing segments of a recorded treatment session within which the specific skill is demonstrated to identify opportunities for alternative ways of responding. Using single items from standardised rating scales can provide useful insight into both parties’ perspective on the supervisee’s performance, as well as providing a framework to support identification of potentially more skilful responses.

Who is best placed to assess CBT competence?

After relevant decisions have been made regarding what to assess and when, the next question is who should carry out the assessment. Research suggests that CBT therapists are not accurate when assessing their own competence. Evidence about the direction of self-assessment biases is mixed, with most research reporting over-estimation of performance compared with independent assessors (Brosan et al., 2008; Hogue et al., 2015; Parker and Waller, 2015; Rozek et al., 2018; Walfish et al., 2012) and one study reporting an under-estimation (McManus et al., 2012). Thus it is not recommended that self-assessments be used as a formal summative measure of therapist competence (Muse and McManus, 2013). However, self-monitoring can still have a useful role in developing and maintaining CBT competences. Self-assessment is a core aspect of reflective practice that allows therapists to manage their own learning and facilitates identification of professional development needs. The ability to accurately self-assess competence is also a meta-competence that can be improved with training and supervisory feedback (Beale et al., 2020; Brosan et al., 2008; Loades and Myles, 2016). Self-assessing competence by rating performance within therapy or role-play sessions may be a useful self-development strategy, especially when used in combination with objective assessor or supervisory ratings. The collaborative comparative process may support therapists’ ability to both ‘reflect-on’ and ‘reflect-in’ action, facilitate more accurate self-awareness of competence, and allow assessors to tailor feedback depending on individual therapists’ self-confidence.

There has been a move towards competency-based CBT supervision paradigms that include regular assessment of supervisee competence. However, research suggests that supervisors’ ratings are compromised by leniency errors and halo effects (Gonsalvez and Crowe, 2014), resulting in supervisors assessing CBT competence more positively than independent judges (Dennhag et al., 2012; Peavy et al., 2014). This ‘positivity bias’ calls into question the ability of supervisors to accurately complete high-stakes summative assessments (Muse and McManus, 2013). Independent assessors may be less influenced by demand characteristics such as a pressure to award a ‘pass’, relationship dynamics, or the halo effect caused by information beyond assessment material (e.g. prior competence, ability in other domains). Yet the factors that increase supervisory biases may also mean that supervisors are well placed to offer global insight into a supervisee’s ability across a range of competences over time, and to provide formative feedback on these. For example, supervisors have access to a greater wealth of contextual information about the treatment context, client’s history, supervisee’s work across time and different clients, supervisee’s professional interactions, and supervisee’s developmental stage. This allows supervisors to tailor feedback to support ongoing, individualised, developmentally appropriate and scaffolded learning strategies within the context of a supervisory alliance. Peer feedback delivered within the context of group or peer supervision may also be considered as a strategy for formative assessment of CBT competence. Although potentially less accurate and reliable, peer assessment may be less threatening and can equip therapists with the skills to both provide constructive feedback to others and to self-assess their own skills.

An ideal approach to assessment of CBT competence would be to triangulate across assessments completed by supervisors, therapists and independent assessors (Muse and McManus, 2013). However, the time and financial cost of this strategy means it may not be possible, and for some purposes not necessary. Decisions about who should complete assessments will therefore need to appraise these pros and cons and make a pragmatic decision informed by the assessment purpose and cost. Strategies to reduce potential biases should also be considered. For example, where supervisory assessments are used, reliability checks could be completed by independent assessors for a subset of core competences at key points in a therapist’s training or practice. Active awareness of possible biases, engagement in reflexive practice whereby the potential impact of personal beliefs, attitudes and assumptions on assessment is acknowledged and examined, and supervision of supervision may help to overcome biases.
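As a rough illustration of the reliability check described above, the following Python sketch compares a supervisor’s ratings with an independent assessor’s ratings of the same sessions. It is a minimal sketch under stated assumptions rather than a validated procedure: the function name `rating_bias`, the rating values and the choice of summary statistics (mean signed difference and mean absolute difference) are hypothetical and are not drawn from the studies cited.

```python
from statistics import mean


def rating_bias(supervisor, independent):
    """Summarise disagreement between paired competence ratings.

    Both arguments are lists of numeric ratings for the same sessions,
    in the same order (hypothetical data, e.g. total scale scores).
    """
    if not supervisor or len(supervisor) != len(independent):
        raise ValueError("ratings must be non-empty and paired")
    # Positive differences mean the supervisor rated higher (leniency).
    diffs = [s - i for s, i in zip(supervisor, independent)]
    return {
        "mean_difference": mean(diffs),
        "mean_absolute_difference": mean(abs(d) for d in diffs),
    }


# Illustrative, made-up ratings for four sessions.
result = rating_bias([4, 5, 3, 4], [3, 4, 3, 4])
print(result)
```

A consistently positive mean difference would be in keeping with the ‘positivity bias’ discussed above, although a small sample of sessions can only flag a possible pattern for discussion, not establish one.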

Whether supervisors, independent assessors, peers, or self-ratings are used, it is also necessary to provide appropriate training in how to use the competence measures employed. Training should, as a minimum, involve developing familiarity with rating guidelines and completing practice ratings to standardise ratings (Milne, 2009). However, individual, peer or group ‘supervision of assessment’ may also be beneficial in supporting assessors to complete high-quality assessments (Milne, 2009). Supervision of supervision frameworks have been suggested to support supervisor development (Kennerley, 2019; Milne, 2009) and these approaches may also be applicable within broader peer and independent assessor contexts. Training and supervision for assessors not only enhances the inter-rater reliability of assessments (Kühne et al., 2020), but also supports effective delivery and receipt of feedback. Research suggests that therapist competence cannot be reliably rated by trained novices (Weck et al., 2011) and novices may also be less skilled in providing appropriate formative feedback. Yet it is unclear what expertise is necessary to assess competence, with findings showing mixed evidence about whether accuracy of assessments increases with assessor competence (Brosan et al., 2008; Caron et al., 2020; Hogue et al., 2015; McManus et al., 2012). As a minimum, it is recommended that assessor or supervisory based assessments are carried out by therapists who have themselves received formal training or accreditation as a CBT therapist and who have significant experience in CBT practice.

How should CBT competence be assessed?

A broad range of methods can be used to assess CBT competence, with different methods assessing distinct aspects of CBT competence and serving different functions (Muse and McManus, 2013). The recommended ‘gold standard’ approach that is typically employed within CBT training courses is to use a multi-source, multi-informant, multi-method practice portfolio to provide a comprehensive and rounded assessment of therapists’ CBT knowledge and skills (Decker et al., 2011; Muse and McManus, 2013). Ensuring that trainees have reached proficiency across a range of core areas of knowledge and skills is central to high-stakes summative assessments that are used to make an overall judgement of CBT competence for qualification or accreditation purposes. However, such a robust, time-consuming and costly method is not always practical, or necessarily needed or useful in routine professional development, where individual methods may be judiciously selected and applied to develop skills and strengthen supervision. Thus, how competence should be assessed will depend on a variety of contextual factors that influence the nature and purpose of the assessment. For example, assessments may be used to give formative feedback within a training setting, to evaluate the impact of training efforts, as evidence for accreditation purposes, to promote self-reflection, to deliver broad feedback within supervision, or to support development of a specific skill within supervision. It is, therefore, necessary to explore the toolbox of methods available, to be aware of the relative strengths and weaknesses of these methods, and to select the right tool(s) for the job.

Miller’s (1990) hierarchy of clinical skill has been used to categorise the different methods of assessing CBT competence into four hierarchical levels (Muse and McManus, 2013; see Fig. 4). This framework identifies which key aspects of competence are assessed by the different methods available. Table 1 outlines each of these methods, provides examples of key tools that can be used, identifies key strengths and challenges of the method, and makes recommendations for appropriate contexts in which to use them.

Figure 4. A framework for CBT therapist competence measures as aligned to Miller’s (1990) clinical skills hierarchy (Muse and McManus, 2013).

Table 1. An overview of available assessment methods for assessing CBT competence drawn from Muse and McManus (2013)

* This does not provide an exhaustive list of tools available to measure competence but identifies some of the most commonly used tools available in assessing competence in the delivery of individual CBT.

The foundation of CBT competence is a sound understanding of the scientific, theoretical and contextual basis of CBT (‘knows’) and the ability to use this knowledge to inform when, how and why CBT interventions should be implemented (‘knows how’). These foundational aspects of competence can be assessed relatively easily, quickly, inexpensively and reliably using multiple-choice questions, essays, case reports and short-answer clinical vignettes. The upper levels in the hierarchy focus on assessing higher-order skills necessary to draw on and apply this knowledge in clinical situations. Level 3 refers to demonstration of skills within carefully constructed artificial clinical simulations (‘shows how’) and can be assessed using observational scales to rate performance within standardised role-plays. This approach offers the potential for scalable, standardised assessment of a range of clinical skills across varied client presentations and complexities. The highest level is the ability to use these skills within real clinical practice settings (‘does’ independently in practice). This can be assessed using client surveys or outcomes, supervisory assessments, and ratings of treatment sessions (self and assessor ratings). Client outcomes and satisfaction may be problematic as they are not direct measures of CBT competence and are influenced by other factors (e.g. client responsiveness to treatment, quality of the therapeutic relationship). Supervisory assessments offer broad and global insight into therapist competence over time and across situations but are also influenced by a number of biases. Self and assessor ratings of treatment sessions are therefore the most commonly used method for assessing skill in practice.
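The mapping between Miller’s levels and assessment methods described above can be summarised as a simple lookup, sketched here in Python. This is only an illustrative restatement of the paragraph: the names `MILLER_LEVELS` and `methods_for` are hypothetical, and because the text groups the two foundational levels together, assigning the same methods to both ‘knows’ and ‘knows how’ is an assumption.

```python
# Foundational methods named in the text; shared by the two lowest
# levels here because the text does not separate them (assumption).
KNOWLEDGE_METHODS = [
    "multiple-choice questions",
    "essays",
    "case reports",
    "short-answer clinical vignettes",
]

# Level number -> (label, assessment methods), lowest level first.
MILLER_LEVELS = {
    1: ("knows", KNOWLEDGE_METHODS),
    2: ("knows how", KNOWLEDGE_METHODS),
    3: ("shows how", ["observational ratings of standardised role-plays"]),
    4: ("does", [
        "client surveys or outcomes",
        "supervisory assessments",
        "self and assessor ratings of treatment sessions",
    ]),
}


def methods_for(label):
    """Return the assessment methods listed for a level label."""
    for name, methods in MILLER_LEVELS.values():
        if name == label:
            return list(methods)
    raise KeyError(f"unknown level: {label}")


print(methods_for("shows how"))
```

A structure of this kind might help a training team check that a planned assessment strategy samples each level it intends to cover.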

Several different scales have been developed for assessing CBT skills within routine and role-play treatment sessions. These can be broadly categorised as ‘transdiagnostic’ or ‘disorder-specific’. Transdiagnostic scales are designed to assess competences that underpin most CBT interventions, whilst disorder-specific scales assess the competence with which CBT treatment packages for a specific problem presentation are delivered. Disorder-specific scales may be beneficial within highly specialised training and treatment settings but have less applicability within broader training or practice settings in which therapists work across a range of protocols and approaches. These rating scales are generally designed to apply across any ‘active’ session utilising CBT intervention strategies. This means sessions focusing on other essential CBT activities such as assessment, formulation or relapse management often fall outside the remit of standardised measures. As CBT expands and develops, new measures are also being created to assess competence in these new ways of working (e.g. third-wave interventions, low-intensity interventions etc.).

Caution must be exercised when making judgements about clinical competence using any single scale, as it is unlikely that any scale will provide a full and robust measure of CBT competence that is suitable across all settings, contexts, and for all purposes. Nonetheless, the Cognitive Therapy Scale-Revised (CTS-R; Blackburn et al., 2001) has been widely used as the benchmark for assessing clinical skill across research, training courses and clinical services for more than two decades. However, this was a pragmatically developed tool and there is a lack of empirical evidence supporting this position; thus, the CTS-R has been criticised for poor validity, reliability and usability (Muse and McManus, 2013; Rayson et al., 2021). Furthermore, the degree to which any scale provides a valid and reliable measure of CBT competence will be heavily influenced by the way the scale is implemented (e.g. number of assessors, assessor training, moderation, which sessions are selected, etc.) (Muse and McManus, 2013; Roth et al., 2019). Thus it is important to avoid over-reliance on any single measure of CBT competence, to carefully consider not only which scale to use but how to implement it, and to be realistic about what conclusions can be drawn about a therapist’s competence as a result of this assessment.

Theoretical models can be helpful in considering how to optimise learning from CBT competence assessments. Although it has received criticism, Kolb’s experiential learning model (Kolb et al., 2001) provides a useful framework to guide therapists through key learning phases and can be easily embedded within CBT training, supervision and professional development practices. Kolb’s model involves four components that interact within a continuous ‘learning cycle’: experience (engaging in an activity), observation (reflection on the experience), abstract conceptualisation (identifying learning), and active experimentation (putting learning into practice). Optimal use of assessments is made when a strategy for delivering and receiving feedback from CBT competence assessments is aligned with this continual process of action and reflection. Within Miller’s (1990) hierarchical framework, the highest and most complex levels of competence to assess are the application of clinical skills in clinical simulations (such as role-plays) and real clinical practice settings. A widely used approach for assessing clinical skills in training and professional development settings is to use observational rating scales such as the CTS-R (Blackburn et al., 2001) or the Assessment of Core CBT Skills (ACCS; Muse et al., 2017) to rate performance. The discussion below outlines ways both therapists completing competence ratings (referred to as ‘assessors’) and therapists receiving ratings (referred to as ‘therapists’) can use feedback from observational rating scales to support the cyclical learning process (see Fig. 5 for an overview).

Figure 5. Using the experiential learning model (Kolb et al., 2001) to embed skills-based CBT competence assessments within a continual process of action and reflection.

The first phase of learning is engaging in an activity, in this case a therapeutic encounter within a treatment session or role-play scenario. Experience is a central component of the learning process, with initial engagement in the activity providing the therapist with concrete experience of their actions and an evaluation of the subsequent consequences (Kolb et al., 2001). The therapist and assessor both need to be able to view the session. ‘Live’ in vivo viewing allows for a richer and more authentic insight. However, session recordings are more practical and can also be revisited. Visual recordings are optimal because they provide rich contextual information (e.g. non-verbal behaviour), followed by audio recordings, with transcripts of a real session being useful but less so than recordings. Therapists may be reluctant to record routine therapy sessions due to feeling self-conscious or fearing negative client reactions. An ‘audit-based’ approach involving routine session recording normalises the process as well as providing a pool of recordings. This allows purposeful selection of recordings to demonstrate competence or seek feedback on skills in working within a particular problem area or using a specific CBT model or intervention. This also enables supervisors to reduce therapist selection biases by periodically reviewing supervisee caseloads and reviewing recordings from those not brought to supervision (Padesky, 1996). Adherence to ethical and legal guidelines is also supported by using standardised procedures to guide clients’ informed consent and storage of recordings. The use of role-plays circumvents these practical issues but does require careful development and adept portrayal of appropriate, relevant, contextualised and realistic scenarios. Additional contextual information is often necessary to enable assessors to make informed ratings.
This may include stage of therapy, nature of presenting problem, formulation, client goals, session agenda, outcome data, and relevant homework or in-session materials (e.g. questionnaires, diaries, thought records, etc.). Weck et al.’s (2014) findings suggested that ratings can reliably be made based on the middle third of a session, which would reduce time demands. However, it is more challenging to capture or reliably rate specific aspects of competence from session segments (Weck et al., 2014), which undermines the use of segments for summative purposes or for providing overall performance feedback. It has been suggested that as many as three clients per therapist and four sessions per client are needed to achieve suitable reliability (Dennhag et al., 2012). Collecting multiple assessors’ ratings of the same session can also increase reliability (Vallis et al., 1986) and reduce halo effects (Streiner and Norman, 2003). Thus the ideal approach to summative assessment would be for multiple assessors to rate a number of sessions, drawn across different clients or role-play scenarios. As this approach is prohibitively resource intensive, competence judgements within training and accreditation contexts are often based on three or four full sessions per therapist.

Phase 2 of Kolb’s learning cycle involves purposeful and reflective observation of what happened within the CBT treatment or role-play session. Observational CBT competence rating scales completed by assessors and therapists (i.e. self-ratings) can support this reflective process. The assessment strategy should outline which scale is most suitable for the given purpose and context. Both parties need training in how to use the scale and need to establish how the scale is being used. For example, is the scale being used to provide a broad overview of competences or to focus in on a specific skill? It is also important to be clear about what is considered good performance. This will vary according to the developmental level of the therapist and should be tailored to the individual therapist’s learning goals as well as any specified benchmarks. Whichever scale is being used, the following general guidelines can be helpful when using observational rating scales:

- Refer to item descriptors to anchor ratings on each item.
- Make notes whilst viewing the session but wait until the full session recording has been viewed to provide numerical ratings and finalise feedback.
- Score each item on the scale independently to avoid relying on an overall global impression.
- Client progress should not influence the rating provided.
- Refer back to rating manuals to support consistency and prevent assessor drift.

The third phase refers to drawing on reflective observations to form abstract concepts and general principles (Kolb et al., 2001). This involves making sense of and learning from feedback obtained through self and assessor ratings completed using observational rating scales. Giving and receiving feedback can be uncomfortable, especially when feedback is corrective or summative. However, assessors can implement strategies to support this process (James, 2015; Gonsalvez and Crowe, 2015; Kennerley, 2019). Such strategies may include giving a written or verbal feedback summary alongside numerical scale ratings to provide valuable formative feedback. Rating scales can be used to inform this feedback summary by providing a ‘competence profile’ highlighting areas of strength and those where improvement is needed. As well as identifying opportunities for skills development, it is important to recognise and highlight strengths to ensure these are maintained, enhanced and reinforced. The feedback should be specific, giving concrete examples drawn from the rating scale and session material. The summary should also identify key ‘take home’ messages. This is especially important for novice therapists, who may feel overwhelmed and find it hard to identify which issues are most important. The assessment purpose, context and assessor–therapist alliance will also impact how feedback will be received and should therefore shape the content and tone. For example, a novice trainee completing a summative assessment may feel nervous and less confident in their abilities. Here the assessor–therapist alliance might be used to create a setting where the trainee feels safe enough to hear and engage with the feedback. Then, the key feedback focus may be to encourage motivation and self-esteem.
There is a danger that therapists passively ‘receive’ feedback ‘delivered’ by an assessor. Yet feedback should be a collaborative and dynamic endeavour involving Socratic methods of learning (Padesky, 1996; Kennerley, 2019; Kennerley and Padesky, 2023). The more active and involved in the process the therapist can be, the more they can take control of and reflect on their own learning progress (Nicol and Macfarlane-Dick, 2006). Therapist engagement with the learning experience is likely to be enhanced by incorporating therapist preferences about what they would like feedback on, when, and in what format. For example, some may prefer to receive written feedback prior to supervision to allow time to digest this, others may prefer to explore feedback within supervision; some choose to hear critical statements first, others prefer to learn what has been done well before hearing what might be improved. Completing self-assessments allows therapists to identify their own strengths, deficits and needs before receiving external feedback. This also allows comparisons between assessor and therapist ratings, therefore identifying discrepancies in self-view of competence and offering more perspectives for reflection.
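
One way to make the comparison between self-ratings and assessor ratings concrete is to tabulate item-level differences. The following is a minimal sketch in Python, not a validated scoring procedure: the function name, the item names, the example scores and the discrepancy threshold of 2 points are all hypothetical and chosen only for illustration.

```python
# Hypothetical illustration: compare a therapist's self-ratings with an
# assessor's ratings on the same observational scale, item by item.
# Item names, scores and the threshold are assumptions, not published values.

def rating_discrepancies(self_ratings, assessor_ratings, threshold=2):
    """Return items where self- and assessor ratings differ by >= threshold.

    Each result is (item, self_score, assessor_score, difference), where
    difference = self_score - assessor_score (positive means the therapist
    rated themselves higher than the assessor did).
    """
    # Only items rated by both parties can be compared.
    shared_items = [item for item in self_ratings if item in assessor_ratings]
    flagged = []
    for item in shared_items:
        diff = self_ratings[item] - assessor_ratings[item]
        if abs(diff) >= threshold:
            flagged.append((item, self_ratings[item], assessor_ratings[item], diff))
    # Largest absolute discrepancies first, as prompts for reflective discussion.
    return sorted(flagged, key=lambda row: abs(row[3]), reverse=True)


self_ratings = {"agenda setting": 4, "guided discovery": 5, "homework": 2}
assessor_ratings = {"agenda setting": 4, "guided discovery": 2, "homework": 4}

for item, own, other, diff in rating_discrepancies(self_ratings, assessor_ratings):
    print(f"{item}: self={own}, assessor={other}, difference={diff:+d}")
```

Items flagged in this way are intended as prompts for collaborative discussion in supervision rather than as judgements about which rating is correct.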

The final phase is a future planning process, namely, how to put learning into practice and apply the abstracted principles across contexts (Kolb et al., 2001). This stage is vital for generalisation and application of learning within future therapeutic activities, which can themselves be reflected upon. It can be helpful for therapists to develop a specific learning action plan to support this. Feedback from rating scales can identify which areas of knowledge and skill the therapist wishes or needs to develop, thus shaping individualised learning goals. The plan also needs to outline how these can be achieved. Assessors can facilitate this by including ‘feedforward’: constructive guidance about ways competence could be further developed. This might include corrective feedback, such as offering suggestions of alternative, more skilful ways of working. It can also identify specific strategies for supporting development of declarative knowledge (e.g. suggested reading, sharing case studies, etc.) and procedural skills (e.g. experiential training, role-plays in supervision, further clinical experience, etc.). Supervision is essential in supporting competence development (Rakovshik et al., 2016; Watkins, 2011) and may be especially helpful in facilitating this final phase of learning. Well-structured supervision offers a secure environment within which therapists can sensitively explore their understandings of, and reactions to, feedback. Supervision also supports movement away from an information transmission model of delivering feedback. Instead, dialogue can be used to collaboratively co-construct meaning, thus supporting the therapist in understanding and internalising feedback (Nicol and Macfarlane-Dick, 2006).
Through a mutual process of discovery, therapist and supervisor can create and implement a developmentally appropriate learning action plan that will ultimately enhance client care. Although it may be preferable from a developmental perspective for rating feedback to be provided by the supervisor, ratings completed by independent assessors can be usefully explored. Feedback can also be provided by peer-supervisors, as it is not always possible or necessary for the supervisor to have an ‘expert’ role. Viewing clips from session recordings may further illustrate feedback and aid discussion. Supervision also provides opportunity for modelling particular skills and for experiential activities (e.g. role-play, ‘chair work’). This can help consolidate learning, prime for more fluent performance, and enhance procedural knowledge about how to implement new skills (Bennett-Levy, 2006).

Conclusions

Much progress has been made in defining the range of competences that constitutes ‘CBT competence’. This has led to examination and evaluation of different ways of assessing, monitoring and enhancing therapists’ competences. Although the assessment of CBT competence is a complex and challenging issue, it remains a vital component in ensuring the ongoing delivery of high-quality CBT. Thus there is a need to encourage a shift in culture, whereby the allocation of resources to the assessment of CBT competence is more clearly recognised and prioritised. There is a particular need for CBT competence monitoring to be more routinely embedded within clinical practice to facilitate continued professional development and self-reflection and to maximise the efficacy of supervision. A range of different methods can be used to assess therapists’ generic and CBT-specific knowledge and skills, all of which have inherent advantages and disadvantages. There is a need to continue to build upon and improve the reliability, validity and feasibility of current assessment methods as well as exploring innovative methods of assessing competence. Due to the multi-faceted nature of CBT competence and the limitations of existing methods of assessing competence, a standardised ‘one size fits all’ approach cannot be taken when determining how best to assess CBT competence. Instead, a pragmatic assessment strategy needs to be specified and implemented according to the specific context and purpose of the assessment. This must consider what aspects of competence to assess, which method or combination of methods to use, and how these methods will be implemented (i.e. when and by whom). This should also include a strategy for delivering and receiving feedback to enhance formative functions and optimise learning.
Formative feedback is important in supporting acquisition and consolidation of competence for trainee or newly qualified therapists and is also essential for more experienced therapists to support lifelong learning and reduce therapist drift. Future development in the area may consider how best to support those assessing competence – that is, how we assess, develop and monitor skill in assessing competence.

Key practice points

(1) There is a need for a culture shift in which ongoing and career-long assessment of CBT competence is supported, encouraged and valued. It is particularly important to consider pragmatic and flexible ways to routinely embed assessments based on direct observation of skills (in sessions or role-plays) into routine supervision or audit practices to support lifelong learning.

(2) Collaboration is key. Therapists should be firmly situated at the heart of CBT competence assessed by others. Approaching assessment as a collaborative venture whereby the therapist being assessed is actively engaged in the process promotes independent learning and fosters self-reflection.

(3) It is important to strengthen the formative function of CBT competence assessments in order to optimise learning. Experiential learning models and effective communication models can be used as frameworks to guide effective delivery and receipt of feedback and support therapists along their individual learning journey.

(4) Caution must be exercised when making judgements about CBT competence based on limited evidence, especially for summative purposes. A multi-source, multi-informant, multi-method practice portfolio provides the most comprehensive and robust assessment of CBT competence.

(5) There is not a ‘one size fits all’ approach to assessing CBT competence. Instead, careful consideration needs to be given to which assessment methods to use and how and when they should be implemented given the specific context and purpose.

Data availability statement

No new data were generated for this publication.

Acknowledgements

None.

Author contributions

Kate Muse: Conceptualization (lead), Project administration (lead), Resources (lead), Writing – original draft (lead); Helen Kennerley: Conceptualization (supporting), Resources (supporting), Writing – review & editing (equal); Freda McManus: Conceptualization (supporting), Resources (supporting), Writing – review & editing (equal).

Financial support

This research received no specific grant from any funding agency, commercial or not-for-profit sectors.

Conflicts of interest

None.

Ethical standards

The authors have abided by the Ethical Principles of Psychologists and Code of Conduct as set out by the BABCP and BPS.
