1. Introduction

The study of argumentation, that is, the linguistic and rhetorical strategies used to justify or refute a standpoint, with the aim of securing agreement in views (van Eemeren, Jackson, and Jacobs Reference van Eemeren, Jackson and Jacobs2015), dates back to ancient times, with Aristotle being often acknowledged as one of the seminal scholars devoted to this intrinsically human activity. A vast amount of work on the study of argument and argumentation processes has been conducted over the centuries, with a recent treatment of both classical and modern backgrounds appearing in van Eemeren et al. (Reference van Eemeren, Garssen, Krabbe, Snoeck Henkemans, Verheij and Wagemans2014). Besides its usefulness in fields such as philosophy, linguistics, and the law, argumentation has also gained attention in computer science, with a long tradition in artificial intelligence research. Within this field, argumentation has been studied in knowledge representation and reasoning (including non-monotonic or defeasible reasoning Nute Reference Nute1994; Pollock Reference Pollock1995) and also as a means to develop sophisticated interactions (e.g., in expert systems Ye and Johnson Reference Ye and Johnson1995, or to perform automated negotiation in multi-agent systems Rahwan et al. Reference Rahwan, Ramchurn, Jennings, Mcburney, Parsons and Sonenberg2003). Several studies have been developed on abstract argumentation frameworks, including the seminal work by Dung (Reference Dung1995). More recently, argumentation has been explored in the context of computational linguistics and natural language processing (NLP) (Lippi and Torroni Reference Lippi and Torroni2016; Moens Reference Moens2018; Lawrence and Reed Reference Lawrence and Reed2019).

Several approaches to model argumentation have been proposed, with different degrees of expressiveness. An often-used distinction in the study of argumentation considers two perspectives (O’Keefe Reference O’Keefe1977; Reed and Walton Reference Reed and Walton2003): argument as product and argument as process. The former is more focused on studying how arguments are internally structured, while the latter looks at how arguments are used in the context of dialogical interactions (regardless of whether the interlocutor is explicitly present or not). Analyzing argument structure amounts to looking at its logic and deals with issues related to the reasoning steps employed and their validity. Studying arguments as ingredients of an argumentation process borrows a dialogical (in fact dialectical) conceptualization, including both verbal (e.g., live debates) and written communication (e.g., manifestos or argumentative essays). Modeling such a process entails considering the persuasive nature of argumentation, or at least its influencing character (Huber and Snider Reference Huber and Snider2006). Even though this distinction between product and process is not clear-cut, most of the proposed theoretical argumentation models can be seen as relying mostly on one of these perspectives. For instance, Freeman’s standard approach (Freeman Reference Freeman2011) (after the work of Thomas Reference Thomas1986) focuses on the relations between argument components (premises and claims) and thus primarily studies argument as product.Footnote a At the other end of the spectrum, models that take into account argumentative interactions, for example, by anticipating possible attacks (such as in Toulmin’s model Toulmin Reference Toulmin1958) or by explicitly handling dialogues (such as in Inference Anchoring Theory Reed and Budzynska Reference Reed and Budzynska2011) clearly approach arguments as process. Several other models have been proposed and framed within a different taxonomy (Bentahar et al. Reference Bentahar, Moulin and Bélanger2010): monological, dialogical, and rhetorical models of argumentation. We review the most relevant proposals in Section 2.

Within artificial intelligence, the use of computational models of natural argument is particularly relevant in the flourishing area of argument mining (Stede and Schneider Reference Stede and Schneider2018), which aims at automatically extracting argument structures from natural language text. A combination of NLP techniques and machine learning (ML) methods has been used for this purpose. Although approaches to unsupervised language representations and knowledge transfer between different NLP tasks are on the agenda, argument mining is an extremely demanding task in terms of semantics. As such, any successful approach will have to rely on annotated data (Pustejovsky and Stubbs Reference Pustejovsky and Stubbs2012) to apply supervised learning methods. In fact, several argument annotation projects have been put in place, following different argumentation models, focusing on different text genres, and having different aims. We will analyze the most relevant projects in Section 3. As in all of NLP tasks, most available corpora are written in English.

This is, in effect, the main research question we address: How have theoretical argumentation models been used in corpus annotation? While doing so, we critically explore, in Section 4, issues of compatibility between the output of argument annotation projects, also in light of current trends in the field of NLP and machine learning.

Thus, this article makes the following contributions. Firstly, we provide an updated account of existing argumentation models and highlight those that can be or have already been explored in corpus annotation. Secondly, we present a survey on existing argument annotation projects, taking the identified argumentation models as a starting point. Finally, we discuss the challenges and prospects of combining existing argument-annotated corpora. We end this survey by prospectively looking at the usefulness of argument-annotated corpora in several foreseeable applications, some of which are already being explored.

Despite the existence of somewhat recent surveys in this domain (namely, Lippi and Torroni Reference Lippi and Torroni2016; Budzynska and Villata Reference Budzynska and Villata2018; Cabrio and Villata Reference Cabrio and Villata2018; Lawrence and Reed Reference Lawrence and Reed2019; Schaefer and Stede Reference Schaefer and Stede2021), most of them are task-oriented, focus more on argument mining techniques, or do not put in perspective the usage of theoretical argumentation models for building annotated corpora. On the contrary, our starting point is to review argumentation models and to look at how they have been used and adapted with the aim of analyzing argumentative discourse and ultimately producing annotated corpora for various tasks.

2. Argumentation models

Given the wide study of argumentation in different fields—such as philosophy, linguistics, the law, and artificial intelligence—unsurprisingly several distinct models have been proposed to handle argumentation from different perspectives. In the classical Aristotelian theory, persuasion can be argumentatively explored in three dimensions: logos, pathos, and ethos. Logos is concerned with the construction of logical arguments, pathos focuses on appealing to the emotions of the target audience, and ethos explores the credibility of the arguer or of an entity mentioned in the discourse. Similarly, philosophers have long established a distinction between logical, dialectical, and rhetorical argumentation perspectives (Blair Reference Blair2003). A different, albeit similar, taxonomy has been put forward by Bentahar et al. (Reference Bentahar, Moulin and Bélanger2010), who proposed a classification into monological, dialogical, and rhetorical argumentation models. The similarity comes from the fact that monological models focus on the links between argument components, for example, how conclusions are drawn from premises, thereby providing an account for logical connections. Moreover, the dialectical/dialogical distinction (Wegerif Reference Wegerif2008) is rather subtle. In essence, it focuses on the contents of the expressed views in an interaction: while dialectics assumes the presence of opposing or conflicting views that are in need of settlement, dialogical interactions are not necessarily argumentative. In any case, they share this spirit in light of the proposed taxonomy. The rhetorical perspective focuses on the nature of argumentation as a means of persuasion and thus takes into account the audience’s perception of the employed arguments. Instead of looking primarily at the structure of arguments, rhetorical models are more concerned with how a discourse’s target audience is convinced or affected by it.

After discussing, in Section 2.1, the nature of argumentative text, in Section 2.2 we present existing argumentation models, with a bias towards those that can be or have been explored in corpus annotation. This will provide the needed grounds to better appreciate the annotation projects that we will later discuss.

2.1. Argumentative text

Argumentation can take various forms, either spoken, written, or graphical; it can even be multi-modal by taking on a combination of these. In argumentation, it is implicitly assumed that someone, a so-called reasonable critic (van Eemeren Reference van Eemeren2001), will take in the expressed arguments and possibly be convinced by them. Yet, the immediate presence of an interlocutor is what distinguishes a dialogue from a monologue, the latter being one of the most prolific forms of persuasive argumentation (Reed and Long Reference Reed and Long1997). Going beyond dyadic relationships, a complex multi-party discussion has been framed into the notion of a polylogue (Kerbrat-Orecchioni Reference Kerbrat-Orecchioni2004; Lewiński and Aakhus 2014).

Regardless of the immediate availability of the interlocutor of an argumentation exercise, different kinds of argumentative texts exist, whose differences we explore by connecting argumentative discourse with text genres.

2.1.1. Variations in argumentative content

A genre is a communicative event characterized by a set of communicative purposes (Bhatia Reference Bhatia1993) and extra-textual elements (Biber Reference Biber1988), whose external form and use situations determine how each text is perceived, categorized, and used by the members of a community (Swales Reference Swales1990). Texts of the argumentative type can take the form, for example, of essays, debates, opinion articles, or research articles: they are all argumentative in the sense that they aim to persuade the other party (Hargie, Dickson, and Tourish Reference Hargie, Dickson and Tourish2004), but take different communicative functions and hence are of different genres. Consequently, depending on the particular genre, they can be more or less argumentatively structured.

Explicit argumentative texts (such as persuasive essays) are highly structured and usually include a thesis-antithesis-synthesis type of structure (Schnitker and Emmons Reference Schnitker and Emmons2013) while employing linguistic argumentative devices. Other less structured genres (such as opinion articles) tend to express arguments in a less orderly manner: argumentation tends to be more subtle and resorts to enthymemes, in which an argument includes implicit and omitted elements. Furthermore, argumentative (discourse) connectors (van Eemeren, Houtlosser, and Snoeck Henkemans Reference van Eemeren, Houtlosser and Snoeck Henkemans2007; Das and Taboada Reference Das and Taboada2013, Reference Das and Taboada2018) are often avoided, and the logical reasoning is left to the reader to establish. When argumentative discourse markers or connectors are prevalent (such as in legal texts or persuasive essays), identifying arguments becomes a comparatively easier task; conversely, when such linguistic clues are omitted or mostly absent (such as in user-generated content or in opinion articles, considered a free and fluid text genre), a careful interpretation of the text content is needed to identify argumentative reasoning steps.

It is thus pertinent to analyze different text genres in terms of argumentative content. This is a crucial aspect that should be considered when implementing an argument annotation project.

2.1.2. Argumentation and text genres

Argumentation in debates (O’Neill, Laycock, and Scales Reference O’Neill, Laycock and Scales1925) has a long tradition in human societies and is particularly impactful in public visibility settings, such as election debates (Haddadan, Cabrio, and Villata Reference Haddadan, Cabrio and Villata2018; Visser et al. Reference Visser, Konat, Duthie, Koszowy, Budzynska and Reed2020) or policy regulations (Lewiński and Mohammed Reference Lewiński and Mohammed2019). Debates are a (typically oral) form of interaction where participants express their views on a common topic. They enable two or more participants to respond to previously expressed arguments, for example, by attacking them or providing alternative points of view, either on the fly or in a turn-based fashion. Debates are usually highly argumentative in nature, and, in most cases, each participant aims to convince the audience that their standpoint prevails. Exploring argumentative strategies in debates is no longer exclusive to humans: as a recent demonstration (Aharonov and Slonim Reference Aharonov and Slonim2019) has shown that machines too can use them, although perhaps not with the competence and versatility with which humans can employ such strategies. This raises new challenges and concerns about the ethical usage of machines to conduct debates.

Persuasive essays and legal texts (especially court decisions) are among the most argumentative text genres. Essays (Burstein et al. Reference Burstein, Kukich, Wolff, Lu and Chodorow1998) and legal texts (both in case Wyner et al. Reference Wyner, Mochales-Palau, Moens and Milward2010 and civil law do Carmo Reference do Carmo2012 documents) are typically well-structured in terms of discourse and make use of explicit markers and connectors that point to the presence of argumentative reasoning structures. The field of legal reasoning is actively exploring ways to automate the handling of legal documents through computational means (Rissland Reference Rissland1988; Eliot Reference Eliot2021).

Speeches and manifestos, in particular those with a political bias (Menini et al. Reference Menini, Cabrio, Tonelli and Villata2018, Reference Menini, Nanni, Ponzetto and Tonelli2017), may involve content as structured as essays. However, some speeches include a mix of informational and persuasive elements, and thus, argumentative structures may not be as prevalent as in persuasive essays or legal texts.

Scientific articles (Gilbert Reference Gilbert1976; Lauscher, Glavaš, and Eckert Reference Lauscher, Glavaš and Eckert2018a) should have, at least in principle, a markedly scientific writing style, including sections such as introduction, related work, proposal, experimental evaluation, discussion, or conclusions. Their argumentative structure often follows a predictable pattern (Teufel Reference Teufel1998; Wagemans Reference Wagemans2016b), which can be exploited to look for arguments in specific sections.

News editorials and opinion articles (Bal and Saint Dizier Reference Bal and Saint Dizier2010) typically have a loose structure while at the same time including relevant argumentative material. In these texts, however, argumentation structures do not necessarily abide by standard reasoning steps and often include figures of speech such as metaphors, irony, or satire. This more or less free writing style makes it harder to clearly identify arguments in text, since the lack of explicit markers hinders the task of telling descriptive from argumentative content.

Review articles such as product reviews often include a mix of description and comparative evaluation. As such, the employed argument schemes are expected to be rather focused (Wyner et al. Reference Wyner, Schneider, Atkinson and Bench-Capon2012). Finally, certain kinds of user-generated content, including opinions, customer feedback, complaints, comments (Park and Cardie Reference Park and Cardie2014), and social media posts (Goudas et al. Reference Goudas, Louizos, Petasis and Karkaletsis2014; Schaefer and Stede Reference Schaefer and Stede2021), often raise a number of challenges, related to poorly substantiated claims or careless writing. This kind of content is also often short, lacking enough context to allow for a deep understanding of the author’s arguments, when available. Hence, it is a more difficult genre to process from an argumentation point of view.

The diversity of argumentative content across different text genres must be considered both when drafting annotation guidelines and when developing argument mining models. More specifically, analyzing texts in terms of argumentative content may justify the adoption of particular argumentation models. We next survey such models, while in Section 3 we analyze annotation efforts that have been carried out, targeting different text genres.

2.2. Theoretical models of argumentation

According to van Eemeren et al., the general objective of argumentation theory is to provide adequate instruments for analyzing, evaluating, and producing argumentative discourse (van Eemeren et al. Reference van Eemeren, Garssen, Krabbe, Snoeck Henkemans, Verheij and Wagemans2014, p. 12). There are now several attempts to provide such instruments, with varying degrees of complexity. In this section, we review some of the most relevant ones. We highlight those that can be or have been used in corpus annotation. We will contrast those with a few others that are useful for more qualitative argument analysis.

Arguably, the most basic model that can be used for argumentation is propositional logic (Govier Reference Govier2010, ch. 8). In fact, deductive argumentation (Besnard and Hunter Reference Besnard and Hunter2001) can be represented through propositions and propositional logic connectives (and, or, not, entailment). However, classical (propositional) logic is not appropriate to deal with conflicting information (Besnard and Hunter Reference Besnard and Hunter2008, pp. 16-17), which is prevalent in argumentative reasoning.

2.2.1. Toulmin’s model

One of the most influential argumentation models of the

![]() $20{\rm th}$

century is Toulmin’s (Toulmin Reference Toulmin1958). The model is composed of six argument components (as illustrated in Figure 1), which together articulate a theoretically sound and well-formed argument: a claim, whose strength is marked by a qualifier, is supported by certain assumptions in the form of data (grounds); this support is based on a warrant (a general and often implicit, commonsense rule whose applicability is explainable by a backing), unless certain circumstances, stated in the form of a rebuttal, occur. An example is included in Figure 2.

$20{\rm th}$

century is Toulmin’s (Toulmin Reference Toulmin1958). The model is composed of six argument components (as illustrated in Figure 1), which together articulate a theoretically sound and well-formed argument: a claim, whose strength is marked by a qualifier, is supported by certain assumptions in the form of data (grounds); this support is based on a warrant (a general and often implicit, commonsense rule whose applicability is explainable by a backing), unless certain circumstances, stated in the form of a rebuttal, occur. An example is included in Figure 2.

Figure 1. Toulmin model, adapted from Toulmin (Reference Toulmin1958). D = Data, Q = Qualifier, C = Claim, W = Warrant, B = Backing, R = Rebuttal.

Figure 2. An instantiation of Toulmin’s model, adapted from Toulmin (Reference Toulmin1958).

Despite its apparent comprehensiveness, Toulmin’s model has been criticized for its lack of applicability to real-life arguments. Freeman (Reference Freeman1991) and Simosi (Reference Simosi2003) agree that the model is more adequate for analyzing arguments as process (in a dialectical setting) than as product. In fact, the model takes for granted that certain elements, such as data, warrant, or backing, are put in place in response to critical questions raised by a challenger. However, this is seldom the case in everyday arguments. Furthermore, according to Freeman, the distinction between these elements is not clear, making it hard to identify them when analyzing argumentative texts.

2.2.2. Freeman’s model

By building upon the criticisms of Toulmin’s model, and starting from Thomas’ work (Thomas Reference Thomas1986) (dubbed as the standard approach), Freeman (Reference Freeman1991; Reference Freeman2011) proposes to represent argument as product using diagrams that include as main elements simply premises and conclusions. These fit together by forming various structures (illustrated in Figure 3): divergent, where a premise is given to support two distinct conclusions; serial, where a chain of premise, intermediate conclusion, and final conclusion is used; linked, where two premises are needed to support, together, a conclusion; and convergent, where a conclusion is independently supported by two different premises. These structures can be combined to obtain argument structures of arbitrary complexity.

Figure 3. Argument structures, adapted from Freeman (Reference Freeman2011).

According to Freeman, the notion of warrant and backing in Toulmin’s model is something that is typically not explicit in an argument as product but rather elicited by a challenger. Given its interpretation as an inference rule, a warrant fits nicely as an element of modus ponens and is thus representable as a premise in a linked structure. In his proposal of an extended standard approach, Freeman introduces a reinterpretation of the qualifier, which he renames modality. Instead of qualifying the conclusion, a modality indicates how strongly the combination of the premises supports the conclusion. The example (from Freeman Reference Freeman2011, p. 18) “All humans are mortal. Socrates is a human. So, necessarily, Socrates is mortal” includes a linked argument structure with two premises, where “necessarily” plays the role of the modality—it does not qualify the conclusion, but rather the strength of the argumentative reasoning step. Another example (adapted from Freeman Reference Freeman2011, p. 18) is “That die came up one in the previous 100 tosses. Therefore, it is probable that it will come up one on the next toss,” where “probable” is the modality. Diagrammatically, Freeman suggests representing modalities as labeled nodes that are interposed between premises and conclusion (see Figure 4a).

Figure 4. Extended standard approach, adapted from Freeman (Reference Freeman2011).

To refine Toulmin’s notion of rebuttal, Freeman borrows Pollock’s distinction between rebutting and undercutting defeaters (Pollock Reference Pollock1995) (i.e., countermoves). While a rebutting defeater may present evidence that the conclusion is false, an undercutting defeater questions the reliability of the employed inferential step from premises to conclusion. Freeman suggests drawing both kinds of defeaters similarly to Toulmin’s approach, connecting them to the modality. In Figure 4b, however, we distinguish the cases for undercutting and rebutting defeaters in a way that more clearly illustrates their different targets, which is in line with Pollock’s approach (Freeman found this distinction in notation to be unnecessarily complicated). Finally, Freeman introduces counters to defeaters (both rebutting and undercutting), which thus indirectly support the conclusion.

2.2.3. Walton’s argumentation schemes

To harness the diversity of argumentation used in practice, several taxonomies have been developed over the years (Kienpointner Reference Kienpointner1986, Reference Kienpointner1992; Pollock Reference Pollock1995; Walton Reference Walton1996; Katzav and Reed Reference Katzav and Reed2004; Lumer Reference Lumer2011). These so-called argumentation schemes (Macagno, Walton, and Reed Reference Macagno, Walton and Reed2017) vary in depth and coverage and attempt to capture stereotypical patterns of inference occurring in argumentation practice. They thus depart from logic-based deductive forms of reasoning, focusing instead on how humans express themselves in natural language.

The concept of argumentation scheme is so prevalent that many other theoretical models of argumentation encompass their own schemes, such as different functions of warrant in Toulmin’s model (Toulmin Reference Toulmin1958), or argument classifications in the New Rhetoric (Perelman and Olbrechts-Tyteca Reference Perelman and Olbrechts-Tyteca1969). Of the existing taxonomies, however, Walton’s argumentation schemes (Walton Reference Walton1996) are arguably one of the most well-known and widely used, even though it has gone through several variations (Walton, Reed, and Macagno Reference Walton, Reed and Macagno2008; Walton and Macagno Reference Walton and Macagno2015).

While other more elaborated argumentation theories define arguments functionally, a theory focusing on argumentation schemes empirically collects and classifies arguments as used in actual practice, distinguishing them by their contents (following mostly a bottom-up approach). Aggregating arguments according to their similarities gives rise to elaborate taxonomies, containing more than sixty different schemes (Walton et al. Reference Walton, Reed and Macagno2008), from which one may identify the main categories. Walton et al. (Reference Walton, Reed and Macagno2008) identify three— reasoning arguments, source-based arguments, and arguments applying rules to cases— while Walton and Macagno (Reference Walton and Macagno2015) divide the first of these main categories into discovery arguments and practical reasoning. Figure 5 shows some examples of argument schemes.

Figure 5. Argument scheme examples, adapted from Macagno et al. (Reference Macagno, Walton and Reed2017).

Besides its own pattern of premises and conclusion, in Walton’s approach, each argumentation scheme encompasses a critical perspective on the strength of an argument through a set of critical questions. These represent the defeasibility conditions of the argument or the weaknesses it may suffer from and may be used to search for attack clues. Examples of critical questions regarding the argument scheme from practical reasoning illustrated in Figure 5b include (Macagno et al. Reference Macagno, Walton and Reed2017):

-

CQ1 What other goals do I have that might conflict with G?

-

CQ2 What alternative actions to A would also bring about G?

-

CQ3 Among A and these alternative actions, which is arguably the most efficient?

-

CQ4 What grounds are there for arguing that it is practically possible for me to do A?

-

CQ5 What consequences of doing A should also be taken into account?

Critical questions may be relied upon to build a strong (and virtually unbeatable) argument or used by an attacker to refute the argument—which grants the model a dialogical flavor.

2.2.4. Argumentum model of topics

One alternative proposal for argument schemes, focusing on the inferential steps of argumentation, has been proposed by Rigotti and Greco under the name of Argumentum Model of Topics (AMT) (Rigotti and Greco Morasso Reference Rigotti and Greco Morasso2010; Rigotti and Greco Reference Rigotti and Greco2019). This model distinguishes between two components of argument schemes: the procedural component and the material component.

The procedural component focuses on the semantic-ontological structure generating the inferential connection that is the basis for the argument’s logical form. Within it, three levels are identified: the locus concerns the ontological relation on which argumentative reasoning is based; each locus gives rise to inferential connections called maxims; finally, maxims activate logical forms, such as modus ponens or modus tollens. An example provided by Rigotti and Greco Morasso (Reference Rigotti and Greco Morasso2010, pp. 499-500) goes as follows: “(1) It is true of this evening (our national holiday) that there will be traffic jams. (2) Because the fact that there were traffic jams was true for New Year’s Eve. (3) And the national holiday is comparable to New Year’s Eve.” Here, the semantic-ontological relation is one of locus from analogy by comparing the “national holiday” to “New Year’s Eve.” The inferential connection is that if something (“traffic jams”) has been the case for a circumstance (“New Year’s Eve”) of the same functional genus as X (“national holiday”), it may be the case for X as well. Finally, the logical form employed is modus ponens, when instantiating the inferential connection with “traffic jams,” “New Year’s Eve” and “national holiday.”

The material component provides applicability of the inferential connection to an actual argument by exploring both implicit and explicit premises. For the above example, the fact that “the national holiday is comparable to New Year’s Eve” requires an effectual backing coming from an outside material starting point shared by the interlocutors. AMT claims to make it possible to explain and reconstruct the inferential mechanism employed in argumentative discourse, deemed to be often complex or implicit.

In terms of argument schemes (or loci), AMT proposes a taxonomy based on three domains (Rigotti and Greco Morasso Reference Rigotti and Greco Morasso2009; Musi and Aakhus Reference Musi and Aakhus2018). The syntagmatic/intrinsic domain concerns things that are referred to in the standpoint and aspects ontologically linked to it, including arguments from definition, from extensional implications, from causes, from implications and concomitances, and from correlates. The paradigmatic/extrinsic domain refers to arguments based on paradigmatic relations, including those from opposition, analogy, alternatives, or termination. Finally, middle/complex loci lie in the intersection between intrinsic and extrinsic ones and include arguments from authority, from promising and warning, from conjugates, and from derivates.

2.2.5. Inference Anchoring Theory

Specifically exploring the dialogical nature of argumentation, Reed and Budzynska propose the Inference Anchoring Theory (IAT) (Budzynska and Reed Reference Budzynska and Reed2011; Reed and Budzynska Reference Reed and Budzynska2011) as a means to offer an explanation of argumentative conduct in terms of the anchoring of reasoning structures in persuasive dialogical interactions. In this sense, IAT provides a bridge between the reasoning (logical) and the communicative (dialogical) dimensions of argumentation.

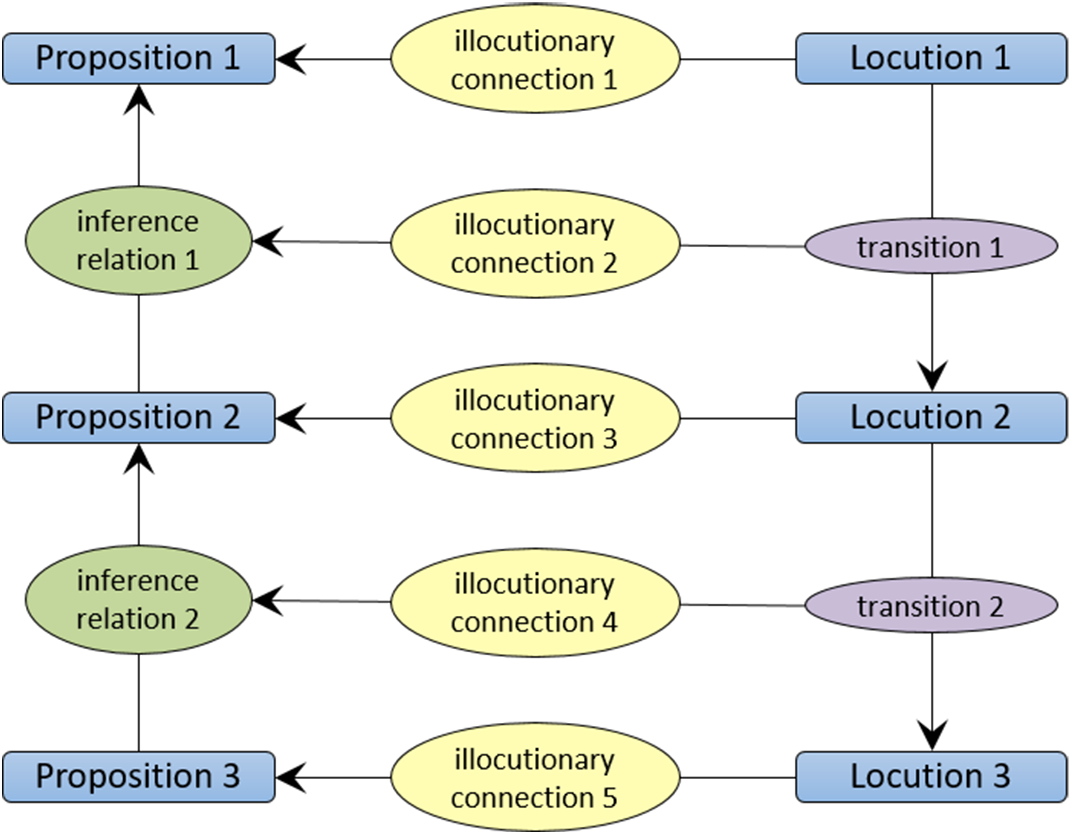

IAT relies on the fact that the logical links between dialogical utterances are governed by dialogue rules (transitions) expressing how sequences of utterances can be composed (Budzynska et al. Reference Budzynska, Janier, Reed, Saint-Dizier, Stede and Yakorska2014). These transitions act as “anchors” to the underlying reasoning steps employed in the dialogue. The propositions and relations that together form the argumentative reasoning are anchored, by means of illocutionary connections, in the locutions and transitions that constitute the dialogue. IAT includes locutions (dialogical units) and transitions between them; illocutions connect the communicative and the reasoning dimensions, particularly by connecting locutions to propositions; propositional relations recover the reasoning that is anchored in the discourse. Different relations between propositions can be used, distinguishing not only between inference, conflict, or rephrase, but also encompassing argumentation schemes.

Figure 6 shows the general structure of IAT usage: the flow of the dialogue is depicted, on the right side of the figure, through (usually chronologically ordered) transitions; propositions and reasoning steps are shown on the left side anchored in illocutions in the discourse. Examples of illocutions (or illocutionary connections) include asserting, questioning, challenging, conceding, (dis)agreeing, restating, or arguing; some of them are illustrated in the example shown in Figure 7.

Figure 6. IAT model.

Figure 7. An instantiation of the IAT model, adapted from Budzynska et al. (Reference Budzynska, Janier, Reed, Saint-Dizier, Stede and Yakorska2014): an assertion p is challenged and then justified using q, resulting in the inference relation

![]() $q \implies p$

.

$q \implies p$

.

2.2.6. Periodic Table of Aarguments

The wide variety of taxonomies of argumentation schemes hinders their adoption in different fields, including artificial intelligence (Katzav and Reed Reference Katzav and Reed2004). Wagemans (Reference Wagemans2016a) points out that existing theories are not based on a formal ordering principle, making them harder to use. Because they often follow a bottom-up approach, no theoretical rationale is provided regarding the number of categories identified.

To circumvent these problems, Wagemans proposes a Periodic Table of Arguments (PTA) (Wagemans Reference Wagemans2016a), which orthogonally classifies arguments in three dimensions. The first dimension approaches the propositional content of both premises and standpoints (conclusions) to distinguish between predicate and subject arguments: if both premise and standpoint share the same subject, we are in the presence of a predicate argument, as the arguer aims to increase the acceptability of the standpoint by exploiting a relationship (through a common subject) between predicates; conversely, if the argument elements share the same predicate, we have a subject argument, as the arguer exploits a relationship (through a common predicate) between subjects. The second dimension identifies second-order, as opposed to first-order, arguments. The former is the case when someone else’s standpoint—an assertion—is used to reconstruct another standpoint, as happens in the scheme argument from expert opinion. First-order arguments do not rely on such kind of assertion but work rather on propositions (Wagemans Reference Wagemans2019). Finally, the third dimension details the types of propositions included in both premise and standpoint, and their combinations. A proposition of policy (P) expresses a specific policy that should be carried out. A proposition of value (V) expresses a judgment towards an arbitrary subject. A proposition of fact (F) denotes an assertive observation about the subject. The argument is characterized by the pairs of types of propositions employed.

When combined, these three dimensions give rise to 36 different argument types (some of which are shown in Figure 8), which can be represented as an acronym capturing the classification of the argument in each dimension. For instance, 1 pre VF stands for a first-order (1) predicate (pre) argument connecting a proposition of fact premise to a proposition of value conclusion. An example would be “unauthorized copying is not a form of theft, since it does not deprive the owner of use” (Wagemans Reference Wagemans2016a). As another example, “you should take his medicine, because it will prevent you from getting ill” (Wagemans Reference Wagemans2016a) is a first-order predicate argument combining a proposition of fact premise with a proposition of policy conclusion—1 pre PF.

Figure 8. Periodic table of arguments, adapted from Wagemans (Reference Wagemans2016a).

2.2.7. Other models

The argumentation models listed so far have been used in corpora annotation projects, which will be discussed in Section 3. A few other models are worth mentioning, given their importance in the analysis of argumentation practice. These models have been used for qualitatively analyzing arguments and for building argumentation support systems.

The New Rhetoric (Perelman and Olbrechts-Tyteca Reference Perelman and Olbrechts-Tyteca1958, Reference Perelman and Olbrechts-Tyteca1969) was proposed by Perelman and Olbrechts-Tyteca as an effort to recover the Aristotelian tradition of argumentation and is focused on rhetoric as a means of persuasion. In this sense, the targeted audience is a central issue in this model, as argumentation strategies are used to convince or persuade others. The New Rhetoric focuses on the analysis of rational arguments in “non-analytic thinking” (i.e., reasoning based on discursive means with the aim of persuasion)—it does not attempt to prescribe ways to practice rational argumentation, but rather to describe various kinds of argumentation that can be successful in practice. Perelman and Olbrechts-Tyteca define the new rhetoric as “the study of the discursive techniques allowing us to induce or to increase the mind’s adherence to the theses presented for its assent” (van Eemeren et al. Reference van Eemeren, Garssen, Krabbe, Snoeck Henkemans, Verheij and Wagemans2014, p. 262). For such techniques to be successful, the first step is to understand who is the audience of the discourse. Differently from formal logic-based approaches, argumentation is considered sound if it is effective in convincing the target audience. Perelman and Olbrechts-Tyteca describe a taxonomy of argument schemes where premises are divided into two classes: those accepted by a universal audience (e.g., facts) and those that deal with preferences. The latter are thus subjective in nature and can be used effectively if they match the preferences (values and value hierarchies) of the audience. The schemes are grouped into two distinct processes: association (connecting elements conceived as separate by the audience) and dissociation (splitting something seen as a whole into separate elements).

Developed by van Eemeren and Grootendorst, pragma-dialectics (van Eemeren and Grootendorst Reference van Eemeren and Grootendorst2004; van Eemeren Reference van Eemeren2018) is an argumentation theory that aims to analyze and evaluate argumentation in actual practice. Instead of focusing on argument as product or as process, pragma-dialectics looks at argumentation as a discursive activity, that is, as a complex speech act (van Eemeren and Grootendorst Reference van Eemeren and Grootendorst1984) with specific communicative goals. According to pragma-dialectical theory, argumentation is part of an explicit or implicit dialogue, in which one participant aims at convincing the other on the acceptability of their standpoint. When the dialogue between the writer and prospective readers is implicit, the former must elaborate by foreseeing any doubts or criticisms that the latter may have or bring about. The kind of analysis proposed by pragma-dialectics is based on a dialog model, on which a qualitative review is employed, for instance, to detect fallacious arguments (van Eemeren and Grootendorst Reference van Eemeren and Grootendorst1987; Visser, Budzynska, and Reed Reference Visser, Budzynska and Reed2017). In terms of argument structure, pragma-dialectics distinguishes between multiple, subordinatively compound, and coordinatively compound argumentation. As a way of comparison, these resemble, respectively, the convergent, linked, and serial structures in the Freeman model (Freeman Reference Freeman1991, Reference Freeman2011).

After discussing the most relevant theoretical models of argumentation, in the next section we focus on the usage of such models in argument annotation projects.

3. Argument annotation

The increase in research on argument mining has brought a need to build annotated corpora that provide enough data for supervised machine learning approaches to succeed in this domain. Several annotation projects have been undertaken, based on different argumentation models, such as those described in Section 2.2.

We address the topic of conducting argument annotation projects in Section 3.1. Then, we survey some of the most relevant projects that closely follow a specific argumentation model in Section 3.2. In Section 3.3, we discuss other annotation projects which do not explicitly adopt any of such models.

3.1. Annotation effort

Any manual corpus annotation task is very time-consuming and requires an appropriate level of expertise (although some annotation projects rely exclusively on crowdsourcing-based approaches Snow et al. Reference Snow, O’Connor, Jurafsky and Ng2008; Rodrigues, Pereira, and Ribeiro Reference Rodrigues, Pereira and Ribeiro2013; Nguyen et al. Reference Nguyen, Duong, Nguyen, Weidlich, Aberer, Yin and Zhou2017; Habernal and Gurevych Reference Habernal and Gurevych2016b; Stab et al. Reference Stab, Miller, Schiller, Rai and Gurevych2018). When dealing with argumentation, this requirement is considerably more critical, given the intricate process of detecting arguments in written text. The implicit or explicit nature of arguments is largely determined by the genre of the text, as discussed in Section 2.1. Some text genres are therefore more susceptible to different human interpretations, due to their free structure and writing style; depending on the genre of the target corpus, identifying arguments properly can be subjective and context-dependent, often reliant on background and world knowledge (Lauscher et al. Reference Lauscher, Wachsmuth, Gurevych and Glavaš2022). The demanding nature of an argument annotation project is determined by two interdependent main axes: (i) the nature or genre of the target corpus and (ii) the argumentation theory employed. Typically, argumentatively rich and explicit text sources are less difficult to annotate. In this kind of text, arguments are often expressed by employing strong discourse markers, thus making it easier to interpret and extract the reasoning adopted by the author. This explains why most annotation projects rely on such kinds of sources, including persuasive essays (Stab and Gurevych Reference Stab and Gurevych2014; Musi, Ghosh, and Muresan Reference Musi, Ghosh and Muresan2016) or legal texts (Mochales and Ieven Reference Mochales and Ieven2009; Zhang, Nulty, and Lillis Reference Zhang, Nulty and Lillis2022).

The argumentation model to adopt is largely related to the respective text genre. When deciding on a specific argumentation model to follow, there is often a trade-off between expressiveness and annotation complexity. Some models, while providing a rich set of building blocks that may be useful to identify and fully characterize arguments within the text, are more demanding for the annotator. This entails the need for a more elaborated set of annotation guidelines, together with careful training. Furthermore, harder annotation tasks that make use of complex annotation schemes bring two main concerns. On the one hand, there is an aggravated need for expert annotators, caused by increasing cognitive demands. On the other hand, high inter-annotator agreement is less likely to occur when using larger annotation schemes (Bayerl and Paul Reference Bayerl and Paul2011), due to annotator bias (Lumley and McNamara Reference Lumley and McNamara1995). Such personal bias is worsened when using a larger set of annotators, as it may translate into different interpretations of annotation guidelines. For these reasons, annotation projects often fall back to a shallow approach, which in some cases allows for exploring crowdsourcing (Habernal and Gurevych Reference Habernal and Gurevych2016b; Nguyen et al. Reference Nguyen, Duong, Nguyen, Weidlich, Aberer, Yin and Zhou2017; Stab et al. Reference Stab, Miller, Schiller, Rai and Gurevych2018).

3.2. Annotation projects grounded in argumentation models

The study of argumentation from a philosophical and linguistic perspective has given rise to a number of studies on its use in practice. Encouraged by the application of NLP in the analysis of written arguments and also by a flourishing research interest in argument mining (Stede and Schneider Reference Stede and Schneider2018), a number of projects have been conducted to build linguistic resources annotated with arguments—argument corpora. There is considerable variability in the theoretical grounding of the existing argument annotation projects. While some of them try to employ a specific argumentation model, in many cases the model is simplified to accommodate the characteristics of the corpus or the project aims. However, building a corpus on the basis of a short-term project’s goal may limit its potential use in future research. As was argued by Reed et al. (Reference Reed, Palau, Rowe and Moens2008), one of the key roles that a corpus can play is providing a foundation for multiple projects.

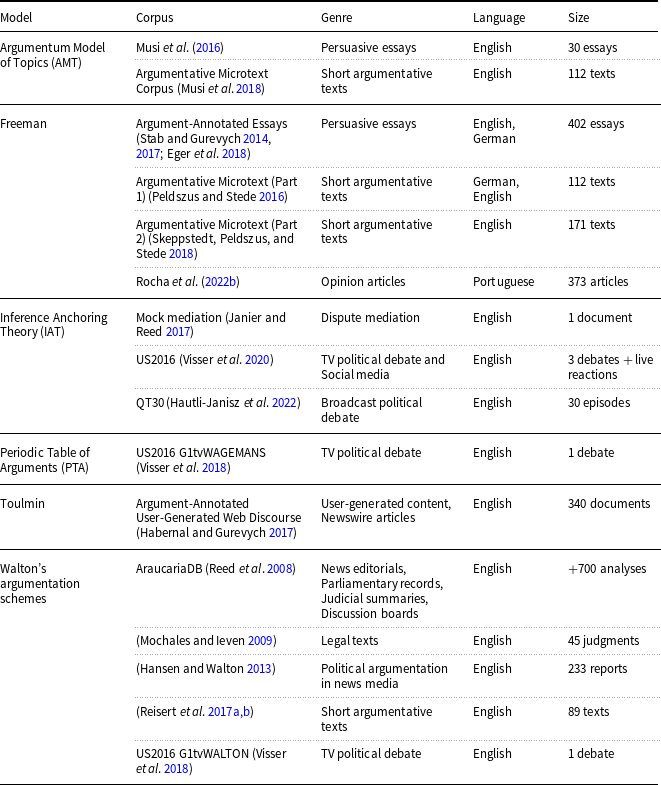

Table 1 shows a collection of argument annotation efforts that have produced corpora by following, to a significant extent, a theoretical model of argumentation.Footnote b To collect this list, we searched for published works in LREC,Footnote c in top-tier NLP conferences (including *ACL, EMNLP, and COLING), in the Argument Mining workshop series (Al-Khatib, Hou, and Stede Reference Al-Khatib, Hou and Stede2021), and also those cited in available surveys (Lippi and Torroni Reference Lippi and Torroni2016; Lawrence and Reed Reference Lawrence and Reed2019). Furthermore, we favored those for which the produced corpus has been made publicly available.

In several cases, simplifications or adaptations are made to the underlying theoretical argumentation model. We now discuss these annotation projects in further detail.

Table 1. Annotated corpora following argumentation models

3.2.1. Toulmin annotations

An attempt to use Toulmin’s model to annotate arguments has been made by Habernal and Gurevych (Reference Habernal and Gurevych2017). This project targeted a dataset of English user-generated content and newswire articles about six controversial topics in education: homeschooling, public versus private schools, redshirting, prayer in schools, single-sex education, and mainstreaming. In total, 340 documents have been annotated.Footnote d

The authors proposed a modified Toulmin model, which includes some of the original elements— claim, backing, rebuttal —and adds premise and refutation. These changes are based on a number of observations: the lack of explicit mentions to cogency degrees in the analyzed texts, which hinders the detection of the qualifier; the fact that warrants are often implicit and fail to be found in practice; the need to consider rebuttal attacks, dubbed refutations, which are used to ensure the consistency of the argument’s position; the equivalence between grounds and premises, the latter being used in several works in argument mining; the reinterpretation of backing as additional, non-essential evidence of support (often a stated fact) to the whole argument, as opposed to a support to the warrant, which is left out. Furthermore, claims are allowed to be implicit, and annotators have been asked to put forward the stance of the author in such cases. In this new setting, refutation looks similar to Freeman’s notion of counters to countermoves applied to the warrant of the argument, that is, counter rebutting/undercutting defeaters (Freeman Reference Freeman2011, pp. 24-25). An example of application of this modified Toulmin model is shown in Figure 9.

Figure 9. Modified Toulmin model example, adapted from Habernal and Gurevych (Reference Habernal and Gurevych2017).

The authors report a moderate inter-annotator agreement. Unsurprisingly, they have found that a source of disagreement between annotators was the easy confusion between refutations and premises, as they both function as support for the claim. The (modified) Toulmin model has been found to be better suited for short persuasive documents, and more problematic in the longer ones explored in the project. This is because, in many cases, the discourse presented in such documents has been found to be more rhetoric than argumentative – argument components are not employed with specific argumentative functions in mind in the logos dimension (the one focused on by the Toulmin model).

3.2.2. Freeman annotations

Given its more simple nature based on premises and conclusions with different kinds of support and attack relations, Freeman’s argumentation model has been used in some annotation projects, although in many cases without exploiting its full potential. Peldszus and Stede (Reference Peldszus and Stede2016) conducted a controlled text generation experiment of 112 short argumentative texts, followed by their annotation—the Argumentative Microtext Corpus.Footnote e Each text was triggered by a specific question, and it should be a short, self-contained sequence of about five sentences providing a standpoint and justification. All texts were originally written in German and have been professionally translated into English. After validating the developed annotation guidelines, the final markup of argumentation structures in the full corpus was done by one expert annotator. The annotation scheme, further detailed in Peldszus and Stede (Reference Peldszus and Stede2013), includes linked and convergent arguments, as well as two types of attacks: undercuts and rebuttals. Furthermore, the special case of support by example is considered.

Skeppstedt et al. (Reference Skeppstedt, Peldszus and Stede2018) have extended this original work, exploring crowdsourcing as a means to increase the size of the short text corpus. Each participant was asked to write a 5-sentence long argumentative text on a particular topic, in English, while making its stance clear through a claim statement and providing at least one argument for the opposite view. The resulting 171 texts have then been annotated (in a non-crowdsourced way) following the same guidelines. An example is shown in Figure 10. The main difficulties reported in the annotation process include implicit claims, restatements, direct versus indirect supports, the distinction between argument support and mere causal connections, implicit annotator evaluations, and the existence of non-argumentative text units.

Figure 10. Freeman example annotation in the Argumentative Microtext Corpus, adapted from Skeppstedt et al. (Reference Skeppstedt, Peldszus and Stede2018). Rounded elements support the central claim (e1), while the boxed element critically questions it; arrow-headed connections are supports, the circle-headed is a rebuttal, and the square-headed is an undercut.

Figure 11. Freeman example annotation in the Argument-Annotated Essays Corpus, adapted from Stab and Gurevych (Reference Stab and Gurevych2017).

Stab and Gurevych (Reference Stab and Gurevych2014) worked on a particularly rich argumentative text genre—persuasive essays — to create an annotated corpus of 90 documentsFootnote f where the argumentative structure of each document includes a major claim, claims, and premises. A major claim expresses the author’s standpoint concerning the topic and is assumed to be expressed in the first paragraph (introduction) and possibly reinstated in the last one (conclusion). Each argument is composed of a claim and at least one premise. Claims are labeled with a stance attribute (for or against the major claim). Two kinds of directed relations between premises and claims are included: support and attack. Both relations can hold between a premise and another premise and between a premise and a claim. An example is shown in Figure 11.

Some simplifications to the Freeman model have been introduced by Stab and Gurevych based on the analysis of the argumentative essay corpus contents. The annotation process did not consider the linked versus convergent structures distinction, namely because it has been considered ambiguous (Freeman Reference Freeman2011, p. 91). Also, divergent arguments have been excluded from consideration to model the argumentation used in the essays with tree structures. The authors report inter-annotator agreements for argument component annotations, for the stance attribute, and argumentative relations. The most significant source of confusion among annotators is the distinction between claims and premises, originated by the fact that chains of reasoning can be established (serial structures Freeman Reference Freeman2011).

Stab and Gurevych (Reference Stab and Gurevych2017) have extended this work, this time creating an annotated corpus of 402 English documents,Footnote g with similar inter-annotator analysis. Eger et al. (Reference Eger, Daxenberger, Stab and Gurevych2018) provide a fully parallel human-translated English-German version of this corpus and machine-translate the corpus into Spanish, French, and Chinese. In both cases, argumentation annotations are kept, either manually or automatically projected from the original annotated corpus.

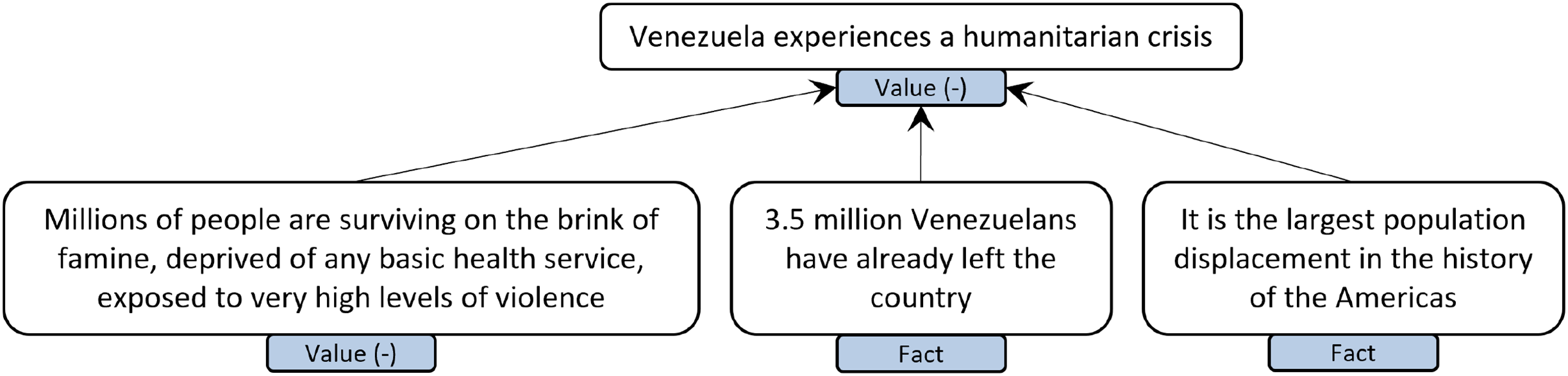

Rocha et al. (Reference Rocha, Trigo, Lopes Cardoso, Sousa-Silva, Carvalho, Martins and Won2022b) have made some inroads into applying Freeman’s model to a less structured argumentative genre—opinion articles as published in a Portuguese newspaper. In their approach, each of a set of 373 articles has been annotated considering the full Freeman model, including several kinds of argument structures (linked, convergent, divergent, and serial) as well as two kinds of relations (support and attack). As an additional annotation layer, each annotated proposition (seen as a premise or a conclusion) was classified into one of fact, policy, or value (in this case distinguishing between positive, negative, and neutral), closely following part of the Periodic Table of Arguments schema. An example annotation is shown in Figure 12.

Figure 12. Freeman example annotation in the opinion articles corpus, adapted (and translated) from Rocha et al. (Reference Rocha, Trigo, Lopes Cardoso, Sousa-Silva, Carvalho, Martins and Won2022b).

Given the expected difficulty in annotating arguments in this kind of text, they opted to have three independent annotations per document. Arguments are assumed to be contained in a single paragraph. The authors carry out an extensive inter-annotator agreement analysis, in which they have found that token-level agreement on argumentative units is challenging. However, for the argumentative units in which there is agreement, higher-level component analysis (such as types and roles of propositions, and macro-structure of arguments) can obtain considerable agreement. Based on this corpus, they have explored the recent trend into perspectivist approaches to NLP (Basile et al. Reference Basile, Fell, Fornaciari, Hovy, Paun, Plank, Poesio and Uma2021), by considering different ways of aggregating annotations (Rocha et al. Reference Rocha, Leite, Trigo, Cardoso, Sousa-Silva, Carvalho, Martins and Won2022a).

3.2.3. Walton’s argumentation schemes annotations

Walton’s argumentation schemes have been used, to a different extent, in several argumentation studies. In terms of annotated corpora, some projects are worth being mentioned. One of the first efforts in analyzing and annotating argumentative structures in text is part of the Araucaria project (Rowe and Reed Reference Rowe and Reed2008). The AraucariaDB corpusFootnote h comprises a set of argumentative examples extracted from diverse sources and geographical regions. The source material, in English, includes newspaper editorials, parliamentary records, judicial summaries, and discussion boards.

Work on the corpus started in 2003. Reed et al. (2008) provide a concise description of the development principles behind the construction of this corpus and discuss potential uses of this kind of language resources. Araucaria is based on different Walton argumentative scheme sets. Reed et al. concede that argument annotation is a subjective task, and for that reason, no careful inter-coder agreement analysis has been carried out on Araucaria.

Mochales and Ieven (Reference Mochales and Ieven2009) explore legal cases from the European Court of Human Rights (ECHR), in order to analyze how judges of this court present their arguments. In this kind of legal case, an applicant presents a complaint about the violation of a specific article of the European Convention on Human Rights. A corpus of 45 judgments and decisions written in English has been annotated. The documents follow a very well-defined structure, and the part where the court’s argumentation is expressed is clearly identifiable.

The authors have characterized arguments by distinguishing discourse relations as per pragma-dialectics (multiple, coordinative, and subordinative relations) and further classify arguments following Walton’s argumentation schemes. Starting from Walton (Reference Walton1996), but with a mixture of scheme types and subtypes, Mochales and Ieven proposed a list of 26 schemes, of which 6 have been found to be more frequent: argument from analogy, from established rule, from consequences, from sign, from precedent, and from example, in decreasing order of occurrences. A simplified version of the corpus, including premises, conclusions, and their relations, is available as the ECHR Corpus (Poudyal et al. Reference Poudyal, Savelka, Ieven, Moens, Goncalves and Quaresma2020).Footnote i

The most particular cause of disagreement between annotators was the fact that the ECHR corpus, or more specifically the document sections that were targeted, include “reported arguments”, where the court revises the arguments employed by both the plaintiff and the defendant. Some annotators regarded this as argumentation, while others did not. Furthermore, disagreement on distinguishing arguments from facts has also been observed, an issue related to the fact that annotators had diverse legal backgrounds, bringing different conceptions of law. Another major source of disagreement is related to argument structure. Given the predominance of the complexly structured argument from established rule scheme in the ECHR corpus (losing only to argument from analogy), considerable disagreement has been observed due to premises/conclusions being identified as conclusions/premises, and with subordinative structures being mistakenly identified as coordinative. Finally, distinguishing between sub-classes of argumentation schemes has been found to be much harder than keeping with more general classes.

Hansen and Walton (Reference Hansen and Walton2013) have studied political public interventions in the context of the 2011 Ontario provincial election. Their data source is indirect: they analyze the politicians’ arguments as reported in four Canadian newspapers, which they have monitored during the election period, in order to collect reported arguments that could be attributed to a candidate. In total, they have collected 256 distinct argument events. They initially followed a subset of 14 argumentation schemes, taken from Walton (Reference Walton2006, pp. 132-137). Hansen and Walton point out that they do not assume that either politicians or reporters have any knowledge of argument schemes, which are seen as conceptual tools that interest analysts, not necessarily argument makers. After finding that some of the arguments analyzed did not fit any of the schemes in the list and that a couple of schemes were not applied to any arguments, the authors changed the list to one with 21 schemes, where 9 new schemes were added.

Starting with the Argumentative Microtext Corpus, (Peldszus and Stede Reference Peldszus and Stede2016), Reisert et al. (Reference Reisert, Inoue, Okazaki and Inui2017a,b) have applied an additional annotation layer based on Walton’s argumentation schemes (Walton et al. Reference Walton, Reed and Macagno2008). A total of 89 texts from that corpus were annotated with several rhetorical patterns, covering argumentative relations of support, rebuttal, and undercut. Such patterns have been created mainly for the argument from consequence scheme.

Visser et al. (Reference Visser, Lawrence, Wagemans and Reed2018) analyze TV political debates on the US 2016 presidential election. This dataset had already been annotated with IAT (to be discussed later), but in this work, the authors have followed two additional theories to annotate the inference relations identified: Walton’s argumentation schemes and Wagemans’ Periodic Table of Arguments. In terms of the former, the authors make use of an extensive list of 60 schemes (the main schemes from Walton et al. Reference Walton, Reed and Macagno2008), to annotate the first general election debate between Hilary Clinton (Democrat) and Donald Trump (Republican).Footnote j Two annotators were employed, who used a classification decision tree prepared by the authors—an intuitive means of distinguishing between the schemes, taken to be mutually exclusive. An example is shown in Figure 13. In practice, only 39 different schemes have been identified, with a predominance of argument from example. Visser et al. also point out that one of the most highlighted academic schemes—argument from expert opinion —is quite rare, which is illustrative of the discrepancies between a scholarly take on argument schemes and their practical prevalence. As expected, some classes of argument schemes turned out to be particularly difficult to distinguish, such as practical reasoning and argument from values. Only 14 of the original 505 inference nodes have been marked with a default inference label, meaning that the annotators did not manage to frame them within one of the 60 schemes. Substantial agreement between annotators is reported.

Figure 13. Walton’s argumentation scheme example annotation in the US 2016 presidential election Corpus, adapted from Visser et al. (Reference Visser, Lawrence, Wagemans and Reed2018).

3.2.4. Argumentum Model of Topics annotations

The Argumentum Model of Topics has been comparatively less used in practice (also due to the fact that it is a much more recent proposal). Here we briefly cover two works by Musi et al. on applying AMT to Argument-Annotated Essays (Musi et al. Reference Musi, Ghosh and Muresan2016)Footnote k and to the Argumentative Microtext Corpus (Musi et al. Reference Musi, Stede, Kriese, Muresan and Rocci2018).Footnote l

Musi et al. (Reference Musi, Ghosh and Muresan2016) developed a pilot annotation study of 30 persuasive essays. The authors hypothesize that AMT has the potential to enhance the recognition of argument schemes, by arguing that it offers, unlike other theoretical models, a taxonomic hierarchy based on distinctive and mutually exclusive criteria. Such criteria appeal to semantic properties that are part of premises and claims, rather than the logical forms of arguments. However, annotations following AMT require reconstruction of implicit premises: common ground knowledge, inferential rules, or intermediary claims.

Working on top of the Argument-Annotated Essays corpus (Stab and Gurevych Reference Stab and Gurevych2014), the authors base their annotation project on first identifying the argument scheme, from either intrinsic (definitional, mereological, causal), extrinsic (analogy, opposition, practical evaluation, alternatives), or complex (authority) relations. For that, a set of identification questions and linguistic clues was provided. Only support relations were considered. Annotators were then asked to identify the inferential rule at work (for which representative rules for each argument scheme were provided). This annotation exercise has come to a slight agreement outcome, with a considerable degree of confusion between the employed schemes.

Following the previous work and using an updated set of guidelines, Musi et al. (Reference Musi, Stede, Kriese, Muresan and Rocci2018) applied AMT to the Argumentative Microtext Corpus (Peldszus and Stede Reference Peldszus and Stede2016), by annotating its 112 short texts. When doing so, both support and rebut relations were considered, again making use of the eight middle-level schemes of AMT and the associated inference rules. The annotation process has achieved only fair agreement, again due to confusion between some of the employed schemes (most notably argument from practical evaluation and the default “no argument”).

3.2.5. Inference Anchoring Theory annotations

The more elaborate nature of Inference Anchoring Theory, whose full power is harnessed when analyzing dialogical interactions, has been explored in recent annotation efforts. Janier and Reed (Reference Janier and Reed2017) focus on dispute mediation discourse. The aim of dispute mediation is to help conflicting parties in finding a way to solve their dispute by resorting to a mediator third party. Given the usually confidential nature of such disputes, Janier and Reed resort to a 45-page transcript document of a mock mediation session provided by Dundee’s Early Dispute Resolution service.Footnote m According to the authors, the transcription is taken to be realistic and useful for the purpose of studying how dialogues unfold in the particular context of dispute mediation—how mediators suggest arguments and deal with impasses, how the arguments exchanged between conflicting parties form a reasonable discussion, and so on. Janier and Reed annotate the document using IAT schemes,Footnote n thereby exposing the “shape of the discussion,” that is to say, the argumentative structure of the dialog. They then analyze whether mediation-specific argumentative moves can be easily detected and how they configure mediation tactics and strategies.

Visser et al. (Reference Visser, Konat, Duthie, Koszowy, Budzynska and Reed2020) describe a publicly available corpus of argumentatively annotated debate, which makes use of a detailed approach to argument analysis, using IAT. They analyze TV political debates on the US 2016 presidential election, together with reactions to the debates on social media. More specifically, the authors have focused on the first Republican and the first Democrat primary debates and on the first general election debate between the candidates of both parties (Donald Trump and Hillary Clinton). An example annotation is shown in Figure 14. Besides analyzing the debates themselves, Visser et al. have also collected online live (i.e., contemporaneous) reactions on Reddit. By annotating inter-textual correspondence, they provide an unusually rich corpus.Footnote o The inclusion, in their study, of this social media debate thread brings greater diversity in language use, given the variability of participants’ backgrounds and even nationalities. Still, Visser et al. observe that Reddit discussions also contain well-structured argumentative content.

Figure 14. IAT example annotation in the US 2016 presidential election Corpus, adapted from Visser et al. (Reference Visser, Konat, Duthie, Koszowy, Budzynska and Reed2020).

To validate the annotation process and compute inter-annotator agreement, approximately 10% of the corpus (word count) was annotated by two independent annotators. When combined and normalized by overall corpus word count, the annotations of the various sub-corpora obtained substantial agreement. When analyzing each annotation sub-task (including segmentation, transitions, illocutionary connections, and propositional relations), the authors observed that the annotation of illocutionary connections was more challenging than the other sub-tasks, due to the closeness between certain types of connections. They also observed higher agreement for the Reddit subcorpus than for the TV debates. Reasons for this include the shorter dialogue turns and the explicit response structure between posts in the thread, making it easier to identify discourse transitions and relations. In terms of propositional relations, those of inference have been found to be predominant in both the TV debates and Reddit sub-corpora. Moreover, conflict relations were more frequent in the Reddit discussion, while rephrases and reformulations were more identified in the TV debates.

Intertextual correspondence explored the fact that some Reddit participants draw conclusions based on arguments presented in the TV debates or show disagreement by first rephrasing what the candidates have said. This annotation layer has created new transitions, illocutionary connections, and propositional relations between the two genres of sub-corpora. For obvious reasons, in the explored scenario Reddit participants could react to the actual candidates’ utterances in the debate, but not vice versa. Visser et al. observe that rephrase relations are the most common, indicating the expected practice that Reddit contributors often restate what the candidates have said during the debate, in order to support their own online arguments.

More recently, Hautli-Janisz et al. (Reference Hautli-Janisz, Kikteva, Siskou, Gorska, Becker and Reed2022) have explored using IAT in broadcast political debate, focusing on 30 episodes of BBC’s “Question Time” from 2020 and 2021. The authors claim that the obtained corpus, QT30,Footnote p is the largest corpus of broadcast debate and report moderate agreement for the annotations. While they make use of nine illocutionary connections, they have found that more than half of those annotated correspond to assertions (which is typical in dialogical argumentation). Hautli-Janisz et al. also distinguish between three roles in debates: moderator, panel, and audience. They analyze whether patterns of conflict or support differ between roles and verify that most attacks are observed between panel members (as expected) while a much lesser number of supports between different speakers is obtained.

3.2.6. Periodic Table of Arguments annotations

We are aware of a single annotation project making use of the Periodic Table of Arguments model. As mentioned earlier, Visser et al. (Reference Visser, Lawrence, Wagemans and Reed2018) analyze TV political debates on the US 2016 presidential election. They do so by extending the original IAT-based inference relation annotations with Walton’s argumentation schemes and with Wagemans’ model, focusing on the first general election debate between Hilary Clinton and Donald Trump. The Periodic Table of Arguments model has been applied to its full extent, focusing on (i) inference relations so as to classify them as first-order or second-order, and as predicate or subject arguments, and (ii) the corresponding information nodes (sources and targets of relations), classifying them as propositions of fact, value, or policy. An example is shown in Figure 15.

Figure 15. PTA example annotation in the US 2016 presidential election Corpus, adapted from Visser et al. (Reference Visser, Lawrence, Wagemans and Reed2018).

Based on a sample of approximately 10% of the corpus annotated by two different annotators, the annotation process revealed substantial to almost perfect agreement, combining the three partial classifications (the lowest partial agreement concerns the distinction between first and second-order arguments and is attributed to its unbalanced nature, with an overwhelming predominance of first-order arguments). From the generated corpus,Footnote q Visser et al. obtain a final coding of each argument with one of the 36 possible types in the Periodic Table of Arguments. Failure to classify an argument in one of the partial classification tasks has led to a default inference fallback, which turned out to represent a significant number of cases (approximately 17%, much more than that observed with Walton’s argumentation schemes for the same corpus, as reported in Section 3.2.3). This is, however, not surprising since the argument schemes result directly from the classification of both inference relations and the intervening information nodes—they are not chosen by the annotator from a list, as with Walton’s argumentation schemes.

3.3. Other annotation efforts

It is worth noting that most annotation projects focus on the logos dimension of the classical Aristotelian distinction, or at least do not explicitly consider the pathos and ethos dimensions. Exceptions to this include Duthie et al. (2016), who explore the ethos dimension, Habernal and Gurevych (Reference Habernal and Gurevych2017), who consider the pathos alongside the logos dimension, and Hidey et al. (Reference Hidey, Musi, Hwang, Muresan and McKeown2017), who consider annotating premises with one of the three dimensions of logos, pathos, and ethos. Several other argument annotation projects have been carried out without a clear adherence to one of the argumentation models introduced in Section 2. We refer to some of those works here.

Aharoni et al. (Reference Aharoni, Polnarov, Lavee, Hershcovich, Levy, Rinott, Gutfreund and Slonim2014) annotate 586 Wikipedia articles on 33 controversial topics, following a simple claim-evidence structure. For each document, a context-dependent claim is identified, together with context-dependent evidence, which are further classified into one of study, expert, or anecdotal. The corpus includes a total of 2683 argument elements, including 1392 claims and 1291 evidences.

In the domain of political debates, Haddadan, Cabrio, and Villata (Reference Haddadan, Cabrio and Villata2019) perform a manual argument mining effort targeting 39 political debates from 50 years of US presidential campaign debates. The output is a corpus of 29k argument components, labeled as premises and claims, but without any relation links between them. The authors observe that the corpus contains a higher number of claims as compared to premises, which is explained by the fact that political candidates often put forward arguments without providing premises for their claims.

Focusing on news editorials, Al-Khatib et al. (Reference Al-Khatib, Wachsmuth, Kiesel, Hagen and Stein2016) aim at mining argumentation strategies. According to the authors, editorials lack a clear argumentative structure and frequently resort to enthymemes. After employing an automatic segmentation of editorials into argument discourse units (ADU), annotators were asked to annotate each ADU according to one of the following roles: common ground, indicating common knowledge or a generally accepted truth; assumption, when the unit states an assumption or opinion of the author, or a general observation or (possibly false) fact; testimony, stating a proposition made by some expert, authority, witness, and so on; statistics, when expressing quantitative evidence; anecdote, transmitting a personal experience of the author or a specific event; and other, when the unit does not add to the argumentative discourse or does not match any of the previous roles.

While there seems to be some connection between such roles and some of Walton’s argumentation scheme elements, the authors’ purpose is to analyze argumentation strategy at a macro-document level, as opposed to a finer granularity of analyzing individual arguments. The corpus obtainedFootnote r is composed of 300 editorials from Al Jazeera, Fox News, and The Guardian. While analyzing the corpus, the authors observed the highest proportion of assumptions in The Guardian, with Fox News strongly relying on common ground and having twice as many testimony evidence when compared to The Guardian; Al Jazeera emphasizes anecdotal discourse units. All three sources resort to statistics in a similar way.

The Internet Argument Corpus (Walker et al. Reference Walker, Tree, Anand, Abbott and King2012) includes annotations of debate on internet forums obtained in a crowdsourcing setting. Covering 10 different topics, the chosen annotation scheme was based on several dialogic and argumentative markers (namely related to degrees of agreement, emotionality, cordiality, sarcasm, target, and question nature), assigned to quote-response pairs and to chains of three posts. The second version of the corpus (Abbott et al. Reference Abbott, Ecker, Anand and Walker2016)Footnote s contains entries from three distinct online fora, with a total of 482k posts.

Habernal and Gurevych have also addressed argumentation in debate portals with a rather shallow approach. They have cast the problem to a relation annotation task, in which crowdsourced annotators have been asked to select the most convincing argument from a pair (Habernal and Gurevych 2016b)Footnote t In the sequel, they have extended the corpus with reason annotations (Habernal and Gurevych Reference Habernal and Gurevych2016a),Footnote u for which they have used a hierarchical annotation process guided by questions leading to one of 19 distinct labels.

To understand what makes a message persuasive, Hidey et al. (2017) have explored annotating comments in the popular Reddit ChangeMyView online persuasion forum. They follow a two-tiered crowdsourced annotation scheme, where premises are labeled according to their persuasive mode (ethos, logos, or pathos), while claims are labeled as interpretation, evaluation, agreement, or disagreement—which also capture the dialogical nature of the corpus. Egawa, Morio, and Fujita (2019) have worked on the same online forum and use five types of elementary units— fact, testimony, value, policy, and rhetorical statement —and two types of relations— support or attack. The resulting corpus contains 4612 elementary units and 2713 relations in 345 posts.

A shallower approach to dealing with debate datasets is followed by Durmus and Cardie (Reference Durmus and Cardie2018), who collected debates from different topic categories together with votes from the readers of the debates. In parallel, they collected user information (political and religious beliefs) intending to study the role of prior beliefs when assessing debate winners. They have found prior beliefs to be more important than language use and argument quality.

Also focusing on persuasion strategies, Wang et al. (Reference Wang, Shi, Kim, Oh, Yang, Zhang and Yu2019) collect a dataset with 1,017 dialogues, of which 300 have been annotatedFootnote v regarding 10 possible strategies, divided into persuasive appeal and persuasive inquiry. They then analyze both persuaders’ and persuadees’ dialogue acts and check how strategies evolve during dialogue turns. A significant number of dialogue acts (40%) have been found to be non-strategic.

Ghosh et al. (Reference Ghosh, Muresan, Wacholder, Aakhus and Mitsui2014) have carried out an annotation project of blog postings, in which they employed a simplistic callouts and targets model, based on a so-called Pragmatic Argumentation Theory. This theory states that argument arises from calling out some aspect of a prior contribution. As such, a callout occurs when a subsequent post addresses (part of) a prior post (the target), responding to it. The task of a set of expert annotators was to find each instance of a callout, determine its boundaries and link it to the most recent target, whose boundaries must also be determined. In a follow-up annotation task, crowdsourced annotators have been asked to label the (dis)agreement relation between a callout and its corresponding target and to identify the stance and rationale in callouts identified by expert annotators.