Policy Significance Statement

This study seeks ways to identify stakeholder motives, such as interests, intentions, agendas, and strategies, in relation to normative AI policy processes. By combining social constructivism and science and technology studies perspectives with desk research, the study proposes a theoretical framework and methodologies to deepen the understanding of policymaking in the evolving AI landscape. The findings not only offer insights into research disciplines, theoretical frameworks, and analytical methods but also emphasize the need for critical evaluation and further exploration. This contribution aims to stimulate ongoing research and critical debate, providing a starting point for a more profound exploration of policy processes. The study’s overview of disciplines, frameworks, and methods invites researchers and policymakers to engage with this underexplored field, which can lead to a more comprehensive understanding of stakeholder motivations in AI governance.

1. Introduction

Artificial intelligence (AI)[Footnote 1] is experiencing a resurgence, thanks to recent breakthroughs in machine learning and generative AI. This is leading not only to new applications and services across various fields but also to new policy initiatives that seek to manage these advancements. Normative AI governance measures, both soft law (such as ethical guidelines) and hard law (legislation), are generally regarded as essential tools for guiding the development and implementation of AI innovations (Kim et al., 2023; Veale et al., 2023). These policy documents aim to address potential negative consequences and promote societal benefits.[Footnote 2] Despite their good intentions, their operationalization faces numerous challenges.

The effectiveness of AI governance frameworks is challenged by multifaceted issues that reveal complex stakeholder dynamics. Deficiencies impede the frameworks’ effectiveness; because this benefits some industry stakeholders, it prompts them to strategize around delaying the development of clear and practical frameworks (Floridi et al., 2018). Furthermore, the lack of clarity about which values are included, coupled with phenomena such as “ethics washing” driven by hidden agendas, raises concerns about the legitimacy of the ethical considerations put forward by businesses and governments. Conti and Seele (2023), Munn (2022), and Hagendorff (2020) have raised claims about potential political motives in policy documents and guidelines. However, these claims lack a robust research method for substantiating the presence of political motives beyond the guidelines themselves. This raises questions about transparency and authenticity in policymaking among various stakeholders. The policymaking process, particularly in the context of innovation and technology, also remains insufficiently explored, which further compounds these challenges.

A theoretical framework and methodological outline are therefore needed to uncover stakeholders’ motivations[Footnote 3] to influence policy. This article undertakes that task by assembling an eclectic overview of research disciplines, theoretical frameworks and concepts, and analytical methods that can help analyze stakeholder motivations. Two research questions guide this study: (1) “How can we identify social constructivist factors in the shaping of AI governance documents?” and (2) “What research has been conducted to explore and scrutinize stakeholder motivations encompassing interests, strategies, intentions, and agendas?”

Through this inquiry, the study seeks to contribute to the ongoing discourse on AI governance by shedding light on stakeholder motivations and enhancing our understanding of the policymaking process in this rapidly evolving field.

This article is structured as follows: Section 2 summarizes current AI governance efforts and their limitations, demonstrates that the field of AI governance is dominated by, and moving further toward, self-regulation, and highlights the role that stakeholder dynamics play in this context. Section 3 describes the bridge between policymaking and social constructivism theory. That account is complemented by a contextualization of the link between the theory of the social construction of technology (SCOT) and stakeholder motivations. With this theoretical background, we outline how the formulation of AI governance policies is shaped by social constructs. Section 4 explains the research methodology. Section 5 presents the findings, divided into disciplines, theoretical frameworks, and analytical methods. Section 6 discusses the findings, the research gaps, and future research directions. The final section presents the concluding remarks.

2. AI governance, limitations, and stakeholder dynamics

The rapid evolution and inherent complexity of AI pose challenges for traditional regulatory approaches (Hadfield and Clark, 2023; Smuha, 2021). As a result, the field of AI governance is currently dominated by self-regulation (Schiff et al., 2020; De Almeida et al., 2021). Gutiérrez and Marchant (2021) see it as the leading form of AI governance in the near future. Self-regulatory frameworks offer advantages such as the absence of jurisdictional constraints, low barriers to entry, and a propensity for experimentation. Soft law can be easily modified and updated to keep pace with the continuously evolving nature of AI. This flexibility and adaptability allow stakeholders such as governments, companies, and civil society organizations to participate in its development (Hadfield and Clark, 2023).

However, limitations often hinder their effectiveness. The documents tend to be overly abstract, theoretical, or inconsistent, which makes them difficult to operationalize. In addition, they frequently fail to balance conflicting values, such as accuracy and privacy or transparency and nondiscrimination (Petersen, 2021), and are disconnected from real-world contexts. For instance, many AI ethics guidelines and policies predominantly address the fairness, accountability, sustainability, and transparency of algorithmic decision-making, while the corporate decision-making spheres in which AI systems are embedded receive far less attention. These spheres are frequently compromised by competitive and speculative norms, ethics washing, corporate secrecy, and other detrimental business practices (Attard-Frost et al., 2022). The nonbinding nature of the documents also means that they are disregarded in some cases, further reducing their effect on the AI innovations that enter society (Munn, 2022; Ulnicane et al., 2020).

In addition, stakeholder motivations can significantly influence policy outcomes (Valle-Cruz et al., 2020). In traditional policy processes, policymakers commonly face pressure to limit or dilute rules in order to avoid overly strict legislative frameworks. AI governance differs from traditional approaches in its stakeholder dynamics. Here, actors leverage the distinctive characteristics of AI, such as its rapid evolution and inherent complexity, to advocate for self-regulation as the preferred approach and to delay legislative intervention as long as possible (Butcher and Beridze, 2019; Hagendorff, 2020). In the meantime, companies can benefit from the absence of enforceable compliance mechanisms in self-regulation frameworks (Papyshev and Yarime, 2022).

Within the context of stakeholder motivations, a distinction can be made between declared or stated interests and strategies, on the one hand, and hidden intentions or agendas, on the other. Both aspects have been understudied so far. Concerning declared interests and strategies, Ayling and Chapman (2021) argue that meta-analyses of AI ethics papers narrowly focus on comparing ethical principles, neglecting stakeholder representation and ownership of the process. In terms of hidden interests and agendas, McConnell (2017) indicates that there is a dearth of analysis of the phenomenon of hidden agendas. These limitations highlight a broader gap in research on stakeholder motivations. While a wealth of analysis exists on specific AI governance documents (Christoforaki and Beyan, 2022), the role of stakeholder motivations within the complex socioeconomic landscape of policymaking remains largely unexplored.

Another reason for the field’s importance is that policy design is often viewed from an overly optimistic perspective (Arestis and Kitromilides, 2010), whereas policymakers, in practice, are not solely driven by motives to serve the public interest. They can also be driven by malicious or venal motivations, such as corruption or clientelism, rather than socially beneficial ones (Howlett, 2021). Oehlenschlager and Tranberg (2023) illustrate this with a case study on the role Big Tech has played in influencing policy in Denmark. Valle-Cruz et al. (2023) point out that AI can have negative impacts on government, such as a lack of understanding of AI outcomes, biases, and errors. This underscores the need for research into the political motivations behind AI deployment in government and into the ethical guidelines intended to guide its implementation.

When principles are translated into practice, stakeholder motivations can undermine good intentions, leading to issues such as ethics washing, where organizations pay lip service to ethical principles without actively implementing them in their AI systems and practices (Bietti, 2020; Floridi, 2019). Potential risks also include ethics bashing, where stakeholders intentionally undermine or criticize ethical principles to advance their interests (Bietti, 2020), and ethics shopping (Floridi, 2019), where stakeholders selectively adopt and promote the ethical principles that align with their interests.

3. Theoretical background

3.1. Social constructivism

In this section, we define a framework for considering stakeholders in the social construction of AI governance. We first define social constructivism and then examine its application in policy design. In analyzing stakeholder motivations, it is imperative to examine the intricate interplay between social interactions, prevailing norms and values, and the nuances of language, as these elements shape and influence society (Elder-Vass, 2012). Social constructivism therefore provides a fundamental framework for understanding the mechanisms of policy processes and the role of stakeholders.

Flockhart (2016) identifies four key constructivist propositions:

1. A belief in the social construction of reality and the importance of social facts.

2. A focus on ideational as well as material structures and the importance of norms and rules.

3. A focus on how people’s identities influence their political actions and the significance of understanding the reasons behind people’s actions.

4. A belief that people and their surroundings shape each other, and a focus on practice (real-life situations) and action.

The propositions of social constructivism hold significant explanatory power for the topic of this article. First, the emergence of societal norms, values, and shared beliefs through interactions leads to the formation of a collective reality, with social facts being produced by social practices (Yalçin, 2019). This social construction of reality and social facts influences policy processes, determining priorities, viable solutions, and resource allocation. Second, constructivist thought emphasizes how the meanings attached to objects and individuals influence people’s actions and perceptions, highlighting the intertwining of material and ideational structures in shaping societal organization (Adler, 1997). Third, social constructivists emphasize the role of identity in influencing interests, choices of action, and engagement in political action, recognizing identity as essential for interpreting the reasoning behind certain actions (Zehfuss, 2001; Wendt, 1992). Finally, constructivism underscores the symbiotic relationship between individuals and societal structures, emphasizing the reciprocal influence of individuals and their practices on these structures and thus highlighting the fluid nature of policymaking within specific social and cultural contexts (Zhu et al., 2018).

3.1.1 Social constructivism in policy design

Pierce et al. (2014) refer to Theories of the Policy Process (Sabatier, 2019) as the canonical volume defining policy studies at the time. The edited volume excluded the work of constructivists, who were then a minority among policy process scholars. Constructivists emphasized the socially constructed nature of policy and reality, highlighting the importance of perceptions and intersubjective meaning-making processes in understanding the policy process. Their theoretical framework found a foothold only in the next edition, published in 2007 (Pierce et al., 2014, p. 2). The approach consists of eight assumptions that influence policy design (Figure 1).

Figure 1. Assumptions of the theory of social construction and policy design.

Source: Pierce et al. (2014, p. 5).

Schneider and Ingram’s (1993) approach focuses on analyzing who is allowed to be present as a target group in the design phase of policy documents. This choice is made by the statutory designers, who are influenced by the factors outlined in Figure 1.

3.1.2 Statutory regulations and statutory designers

Schneider and Ingram (1993) define policy design as the content of public policy as found in the text of policies, the practices through which policies are conveyed, and the subsequent consequences associated with those practices (Pierce et al., 2014). Statutory regulations, in turn, are laws, rules, procedures, or voluntary guidelines initiated, recommended, mandated, implemented, and enforced by national governments to promote a certain goal (Patiño et al., 2020). Statutory designers can therefore be understood as the individuals or entities responsible for creating and implementing laws, rules, and procedures to shape public policy and promote specific societal outcomes. These designers play a crucial role in shaping the content and practices of policies, ultimately influencing the impact of these policies on target populations (Pierce et al., 2014; Patiño et al., 2020).

Schneider and Ingram’s proposition introduces a classification of target populations based on social construction and power. They place groups on a gradient from deserving to undeserving on the social construction dimension and from powerful to lacking power on the power dimension. The result is a 2 × 2 matrix with four categories of target populations: advantaged, contenders, dependents, and deviants.

The advantaged are positively constructed and have high power; they are expected to receive a disproportionate share of benefits and few burdens. The contenders also have high power but are negatively constructed; they are expected to receive subtle benefits and few burdens. The dependents have low power but a positive construction; they are expected to receive rhetorical and underfunded benefits and hidden burdens. The deviants, with low power and a negative construction, are expected to receive limited to no benefits and a disproportionate share of burdens.
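The matrix can be read as a simple lookup over its two dimensions. The sketch below is only an illustration under stated assumptions: the boolean encoding of the dimensions and the names `TargetPopulation`, `MATRIX`, and `classify` are hypothetical, and the expected allocations merely restate the description above rather than add to Schneider and Ingram’s framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetPopulation:
    category: str
    expected_benefits: str
    expected_burdens: str


# Hypothetical encoding of Schneider and Ingram's 2 x 2 matrix.
# Keys are (positively_constructed, high_power); the values restate
# the expected benefits and burdens described in the text.
MATRIX = {
    (True, True): TargetPopulation("advantaged", "disproportionate share", "few"),
    (False, True): TargetPopulation("contenders", "subtle", "few"),
    (True, False): TargetPopulation("dependents", "rhetorical and underfunded", "hidden"),
    (False, False): TargetPopulation("deviants", "limited to none", "disproportionate share"),
}


def classify(positively_constructed: bool, high_power: bool) -> TargetPopulation:
    """Return the quadrant for a group given its position on both dimensions."""
    return MATRIX[(positively_constructed, high_power)]


if __name__ == "__main__":
    # Illustrative use: SMEs, discussed in Section 3.1.3, are treated as
    # positively constructed but lacking power.
    print(classify(positively_constructed=True, high_power=False).category)
```

Run as a script, the example prints `dependents`. Reducing each dimension to a boolean is a deliberate simplification: the text describes gradients, so a fuller model would place groups on continuous scales before assigning a quadrant.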

3.1.3 Social constructivism and AI governance

Building on the concept of social constructivism influencing policy design, we can identify similar dynamics in shaping AI governance documents. Schneider and Ingram’s classification of target populations, introduced in Section 3.1.2, can help shed light on how power and social perception can influence who benefits from and who bears the burdens of AI governance.

Power dynamics and representation

Large tech companies and established research institutions, with their positive image as innovation drivers, hold significant sway in policy formation (Ulnicane et al., 2020). They can be seen as advantaged groups, which have “the resources and capacity to shape their constructions and to combat attempts that would portray them negatively” (Schneider and Ingram, 1993). Conversely, emerging AI start-ups (contenders) occupy a complex space in AI governance. While their potential for innovation and economic growth grants them power, they also face public concerns, such as fear of job displacement due to automation. This creates a challenge for policymakers. The societal benefits of AI advancements developed by start-ups might not be readily apparent to the public. This lack of public recognition, coupled with public concern, makes it difficult to design clear and effective policies that govern AI development by emerging start-ups (Sloane and Zakrzewski, 2022; Winecoff and Watkins, 2022).

Small and medium-sized enterprises (SMEs) seeking to integrate AI into their operations can be considered dependents. While SMEs are positively constructed as potential drivers of innovation and economic growth, they often have limited power compared with larger corporations because they lack resources and expertise. As a result, they may receive rhetorical support but insufficient resources from AI governance efforts (Watney and Auer, 2021; Kergroach, 2021). For instance, policymakers may encourage SME participation in AI initiatives, while these businesses struggle to access the funding and infrastructure needed to use AI technologies effectively. In addition, they may face hidden burdens such as compliance costs and regulatory complexity. Meanwhile, marginalized communities and citizens, despite being potentially harmed by AI, have minimal influence on policy design (Donahoe and Metzger, 2019). Policymakers often overlook them, and their perspectives may be underrepresented in the final documents. Schneider and Ingram’s classification thus also shows that the unequal distribution of power, one of the core assumptions of social constructivism, is evident within AI governance.

Bounded relativity and interpretation

In AI governance, statutory designers (see Section 3.1.2) confront a complex and evolving technology. This is exemplified by the core challenge of defining AI itself in policy documents. Policymakers struggle to define AI in a way that is both technically accurate and broad enough to encompass its different applications, from simple algorithms to complex deep learning systems (O’Shaughnessy, 2022). Reaching agreement on a definition is a major challenge, but so are finding cross-border consensus (Schmitt, 2021) and aligning different views on liability and responsibility (Zech, 2021); these are further examples of how statutory designers may rely on their own ideologies in policy design. This reflects the concept of bounded relativity: stakeholders perceive and interpret the impacts of AI technologies based on their own perspectives and experiences. For instance, tech companies may view AI algorithms in hiring processes as efficient tools, while jobseekers and civil rights advocates may see them as potential sources of discrimination and bias. These differing perspectives show that social constructions of AI are shaped not only by objective conditions (the capabilities and limitations of the technology) but also by the vantage points of those interpreting them, which leads to divergent interpretations of the technology’s implications for society.

Dynamic policy environment

Like any policy, AI policy shapes social reality and how people understand and interact with the technology (Eynon and Young, 2020). AI governance documents can influence elements of policy such as resource allocation and public discourse (Cheng et al., 2021). For example, AI legislation can strictly regulate the use of the technology, prompting policymakers to take additional measures to stimulate innovation. If policymakers focus only on restriction, they could stifle innovation and drive entrepreneurs to other jurisdictions (De Cooman and Petit, 2020; Scherer, 2015). Policymakers can also signal priorities, such as trust or security, to organizations and citizens through AI-related initiatives, and these audiences may then adjust their orientation and participation accordingly.

Navigating uncertainty

AI governance operates in a dynamic and rapidly evolving environment, characterized by technological advancements, emerging risks, and evolving societal norms. Policymakers must navigate this uncertainty when crafting AI governance documents, balancing the need for regulatory flexibility with the imperative to address potential risks and societal concerns associated with AI technologies (Thierer et al., 2017).

3.2. Stakeholder motivations and the social construction of technology

Building upon the foundations of social constructivism theory, the SCOT theory takes this dynamic interplay a step further by examining how technology itself is a product of social factors, cultural contexts, and the perspectives and actions of different stakeholders (Bijker et al., 1987). SCOT asserts that technology is not merely a neutral tool but a complex entity whose form, function, and impact are linked to the perspectives and interests of various stakeholders. Within this theoretical field, Brück (2006) conceptualizes technology as the embodiment of individuals’ perceptions and conceptions of the world. This theory highlights the dynamic and reciprocal relationship between society and technology.

This theoretical perspective underscores the significance of stakeholders in shaping technologies and in formulating the policies that integrate these technologies into society. Stakeholders, whether industry leaders, policymakers, advocacy groups, or the broader public, bring their interests, values, beliefs, and power dynamics into the innovation and policy processes.

These factors can shape the direction and outcomes of technological innovations. For example, in the development of a new medical technology, stakeholders such as medical professionals, pharmaceutical companies, regulatory bodies, patients, and advocacy groups all have a vested interest in the technology’s success or failure. Their input and influence can determine factors such as the prioritization of research and development, the allocation of resources, the ethical considerations and guidelines, and the degree of accessibility and affordability of the technology.

AI governance documents are developed to guide the responsible implementation of AI in society. This task involves defining which problems need to be avoided (values) and which methods (means) to use to reach this goal. The process often occurs behind closed doors,[Footnote 4] which creates a need for more transparency about how problems were prioritized and why certain methods were chosen over others (Aaronson and Zable, 2023; Edgerton et al., 2023; Perry and Uuk, 2019). SCOT suggests that different social groups can interpret and use the same technology in different ways, based on their interests. In the case of AI governance, various stakeholders, such as policymakers, AI developers, end users, and the public, may have different views on what constitutes a “problem” and how it should be addressed. For example, policymakers might prioritize issues related to privacy and security, while AI developers might be more concerned with technical challenges such as improving algorithmic fairness. End users, on the other hand, might focus on usability and the impact of AI on their daily lives. The main question thus becomes how ideational factors (worldviews, ideas, collective understandings, norms, values, etc.) affect political action (Saurugger, 2013), and whose perspectives are taken into account or, worse, excluded.

The theory serves as a valuable tool for understanding the sociology of technology and its derivatives (exploring who communicates what, when, and why) and the underlying dynamics that contribute to the pluralism of technology (Ehsan and Riedl, 2022). Metcalfe (1995), for example, indicates that dynamics similar to those discussed in the context of social constructivism, such as lobbying and hidden agendas, as well as imperfect information, bureaucratic capture, and shortsighted politics, may lead to mistaken government interventions. To formulate effective technology policies, policymakers must have access to detailed microeconomic and social information. In addition, insights from the SCOT theory can be applied to examine the diverse perceptions and practices surrounding AI. Eynon and Young (2020), for example, explored this aspect in the context of lifelong learning policy and AI, examining the perspectives of stakeholders in government, industry, and academia.

3.3. Stakeholder motivations

The significance of stakeholders’ motivations to fulfil their policy desires in the context of AI and ethics has been acknowledged in the scholarly literature (Cihon et al., 2021; Jobin et al., 2021; Krzywdzinski et al., 2022; Ulnicane et al., 2020). However, existing discussions of the role of motivations, and of the power dynamics associated with safeguarding them, often oversimplify the complexity of this topic. They tend to reduce the situation to a division between the vested interests of established actors and the emerging concerns of newcomers (Bakker et al., 2014). Such distinctions do not capture the intricacies of interests and agency as AI and ethics continue to evolve.

One such aspect, critical for democratic governance, is the presence or absence of stakeholders in policymaking. It is commonly agreed that including diverse stakeholder groups in policy formulation can lead to more informed and effective policies, and their participation can enhance the legitimacy of the policymaking process (Garber et al., 2017). However, full consultation of all stakeholders with an interest in a given policy issue is rarely achieved. This may be due to practical reasons such as resource constraints, but exclusion can also occur for other reasons, such as power dynamics or differing policy agendas (Headey and Muller, 1996; Balane et al., 2020).

Understanding why certain stakeholder groups are included or excluded is important for several reasons. It can reveal power imbalances and potential biases in the policymaking process (Balane et al., 2020; Jaques, 2006). It can also inform efforts to improve stakeholder engagement strategies, which can enhance the quality and legitimacy of policy outcomes (Pauwelyn, 2023).

Actors may also set dynamics in motion to achieve a particular policy desire. Stakeholders may secretly lobby or use political influence to shape regulation in their favor (Stefaniak, 2022). The wielding of hidden agendas (Duke, 2022) is another such dynamic. Stakeholders with varying agendas also often compete over priorities on ethical grounds during policymaking. These competing interests can dilute essential considerations such as fairness, allowing bias and prejudice to enter decision-making processes related to AI system design (Mittelstadt, 2019; Stahl, 2021). The strategic maneuvering of different stakeholders may impede progress toward effective governance frameworks for ensuring trustworthiness across the various dimensions of AI technology.

Understanding these stakeholder motivations is essential for analyzing the dynamics around AI policies and ethics. By delving into the complexities of stakeholder interactions and engagement, researchers can uncover hidden agendas, potential conflicts, or collaborative opportunities. This nuanced understanding forms the foundation for the subsequent exploration of theoretical frameworks, research disciplines, and analytical methods.

4. Methodology

This article addresses the following research questions: (1) “How can we identify social constructivist factors in the shaping of AI governance documents?” and (2) “What research has been conducted to explore and scrutinize stakeholder motivations encompassing interests, strategies, intentions, and agendas?” A systematic literature review is best suited to answering these questions, and the scoping study methodology was selected for this review. This methodology offers a structured and comprehensive approach to mapping and synthesizing existing literature on a specific research topic. Scoping studies encompass a wide array of studies in the review process in order to chart the fundamental concepts underpinning a research domain, as well as its primary sources of evidence (Arksey and O’Malley, 2005). The methodology suits this study because it enables a holistic exploration of the breadth and depth of the topic: it can indicate the boundaries of a field, the extent of research already completed, and any research gaps (Yu and Watson, 2017).

The scoping study methodology involves a systematic process of gathering, analyzing, and synthesizing a wide range of literature sources. This study follows the five-stage framework of Arksey and O’Malley (2005), which is considered the basis for a scoping study:

Stage 1: identifying the research question.

Stage 2: identifying relevant studies.

Stage 3: study selection.

Stage 4: charting the data.

Stage 5: collating, summarizing, and reporting the results.

In Stage 2 (identifying relevant studies), a search strategy was first developed, which involved formulating keywords and identifying research tools. The following keywords were selected: “identifying,” “stakeholders,” “stakeholder interests,” “stakeholder strategies,” “stakeholder motivations,” “hidden agendas,” and “hidden motives.” To enable systematic retrieval of relevant scholarly literature, specialized academic databases and digital repositories were searched, and AI-powered tools were used to extend the search. The initial search was performed in Scopus, the ACM Digital Library, JSTOR, and Google Scholar. The AI-powered literature review tools used were Elicit, Connected Papers, and SciSpace.

In Stage 3, the inclusion criteria were defined as academic journal articles and relevant organizational reports that were published in English, available online in full text, and pertinent to the research aim, that is, publications that contribute to addressing the research aim and questions. Publications not meeting these criteria were excluded.

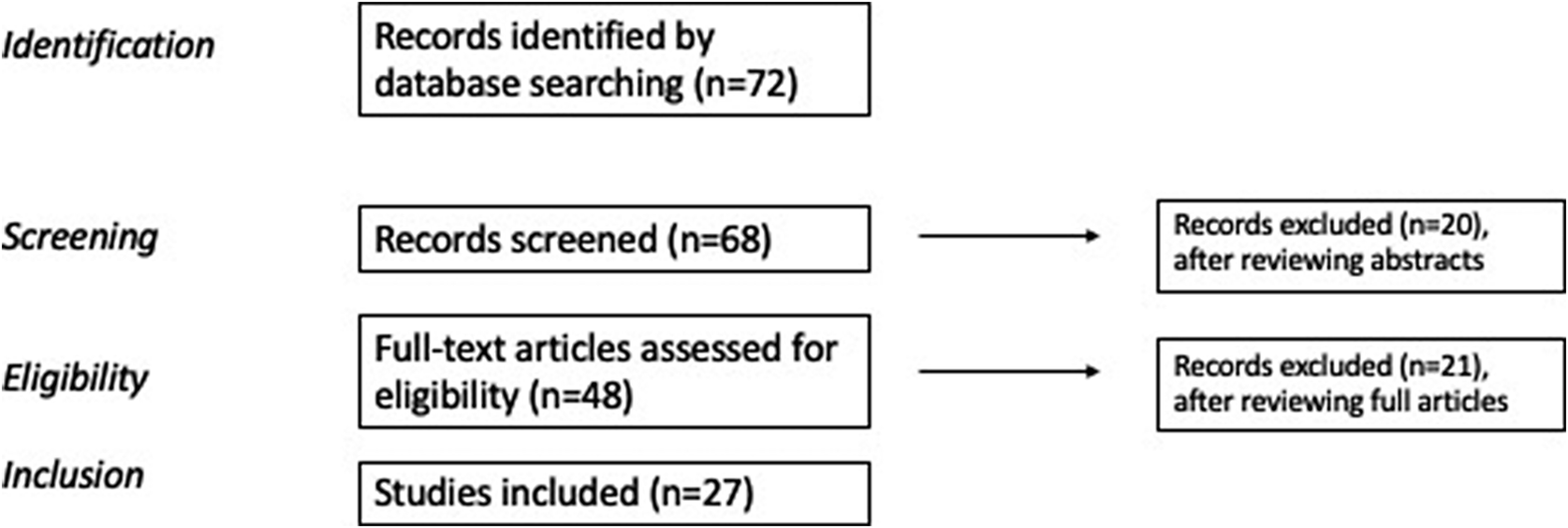

During Stage 4, the initial step involved evaluating the abstracts of the chosen studies. If there was alignment with the research aim, a more in-depth examination of the complete studies ensued. This procedural sequence is depicted in Figure 2. The narrative of the findings assumes descriptive exploration rather than a static analysis of the results. The findings were divided into three subgroups: disciplines, theoretical frameworks, and analytical methods, to get an overview of both theoretical and practical approaches. Organizing the findings in this structure enhances the clarity, focus, and comprehensiveness and provides a nuanced exploration of stakeholder motivations.

Figure 2. Literature search and evaluation for inclusion.

Stage 5 entailed documenting and presenting our findings in the format of a literature review paper. In total, 27 studies were reviewed.

5. Findings

5.1. Disciplines

The first research discipline relevant to the main topic of this article is moral philosophy, which provides a robust foundation for examining stakeholder motivations. According to Bietti (Reference Bietti2020), moral philosophy offers a meta-level perspective for considering disagreements in technology governance, situating problems within broader contexts, and understanding them in relation to other debates. It broadens perspectives, helps overcome confusion, and draws clarifying distinctions.

In political science and social movement studies, the dynamic representation approach helps analyze how policy responds to shifting signals over time. It can be used to study how stakeholders’ interests evolve or to measure the impact of a stakeholder group on a policy agenda. Bernardi et al. (2021), for example, studied how legislative agendas respond to public opinion signals such as protests.

Critical policy studies offer a multifaceted framework for evaluating stakeholders in various contexts. One such application involves analyzing stakeholders’ argumentative turns, focusing on language, context, argumentation, and communicative practices within policy processes. In the context of public policy, communication serves as a pivotal conduit connecting the state with society or bridging the public and private sectors. This interaction can take the form of unilateral information dissemination or reciprocal dialogues. At its core, the policymaking process is entwined with ongoing discursive conflicts and communicative practices (Durnová et al., Reference Durnová, Fischer and Zittoun2016).

Working in this field, Legrand (Reference Legrand2022) identifies political strategies that policymakers use to exclude individuals or groups from political participation. The author uses political exclusion as a methodological lens to examine the malevolent aspects of the policymaking process, applied specifically to Australia’s asylum seeker policy.

Park and Lee (2020) employed a stakeholder-oriented approach to assess policy legitimacy. Their research delved into the communicative dynamics between state elites and societal stakeholders in South Korea, concentrating on anti-smoking policies under two distinct government administrations. In addition, frame analysis emerged as a valuable tool for comprehending discrepancies between policy intent and implementation.

Anthropologists may study stakeholders within specific cultural contexts, exploring how cultural norms and values influence stakeholders’ motivations. Hoholm and Araújo (Reference Hoholm and Araújo2011) see ethnography as a promising method for tracking an innovation process in real time. Real-time ethnography enhances our understanding of innovation processes by revealing the uncertainties, choices, and contextual interpretations that actors face. It provides insights into agential moments, the construction of contexts of action, and the complexity of choosing between options, highlighting the messy and multifaceted nature of innovation processes shaped by various conflicting factors. Ethnography is explored further in the analytical methods section of the findings.

5.2. Theoretical frameworks

A self-evident theoretical framework for examining stakeholders is stakeholder theory, which originates in the field of business ethics. Mahajan et al. (Reference Mahajan, Lim, Sareen, Kumar and Panwar2023) define the theory as promoting “… understanding and managing stakeholder needs, wants, and demands.” Ayling and Chapman (Reference Ayling and Chapman2021) recognize that stakeholder theory provides a sound framework for, among other things, identifying and describing all interested and affected parties in the application of technology and confirming that these stakeholders have a legitimate interest in it. Stakeholder theory was introduced in the 1980s as a management theory to counter the dominance of shareholder theory, but it can also be applied in a government or policy context (Flak and Rose, Reference Flak and Rose2005; Harrison et al., Reference Harrison, Freeman and De Abreu2015).

A concrete application can be seen in the work of Neville and Menguc (Reference Neville and Menguc2006), which introduces an advanced framework within stakeholder theory. It emphasizes complex interactions among stakeholders by incorporating multiple forms of fit (matching, moderation, and gestalts), integrating stakeholder identification and salience theory, and proposing a hierarchy of influence among stakeholder groups (governments, customers, and employees) to explain stakeholder multiplicity and interactions in organizational contexts. Miller (Reference Miller2022) provides another good example, using the stakeholder salience model to manage stakeholders in AI projects on the basis of power, legitimacy, and urgency, and adding a harm attribute to identify passive stakeholders: individuals affected by AI systems who lack the power to influence the project. Miller’s main theoretical contribution is to use the stages of the AI life cycle (planning and design, data collection, etc.) to show at which stage particular stakeholders can have an impact on an AI project or system.
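Miller’s extension of the salience model can be made concrete as a small classification routine. The sketch below is illustrative only: it uses the widely cited salience typology (dormant, discretionary, demanding, dominant, dangerous, dependent, definitive) for the three original attributes, while the `harmed` flag and the rule that harm without power marks a stakeholder as passive are simplifying assumptions of ours, not Miller’s published procedure. The `Stakeholder` class, the `classify` function, and the example actors are hypothetical, and the AI life-cycle stages are not modeled.

```python
from dataclasses import dataclass

# Salience typology: the combination of attributes held determines the class.
SALIENCE_CLASSES = {
    frozenset(): "non-stakeholder",
    frozenset({"power"}): "dormant",
    frozenset({"legitimacy"}): "discretionary",
    frozenset({"urgency"}): "demanding",
    frozenset({"power", "legitimacy"}): "dominant",
    frozenset({"power", "urgency"}): "dangerous",
    frozenset({"legitimacy", "urgency"}): "dependent",
    frozenset({"power", "legitimacy", "urgency"}): "definitive",
}


@dataclass
class Stakeholder:
    name: str
    power: bool
    legitimacy: bool
    urgency: bool
    harmed: bool  # hypothetical flag standing in for Miller's harm attribute


def classify(s: Stakeholder) -> str:
    """Return the salience class; harmed actors without power are marked passive."""
    attrs = frozenset(
        attr
        for attr, held in (("power", s.power), ("legitimacy", s.legitimacy), ("urgency", s.urgency))
        if held
    )
    label = SALIENCE_CLASSES[attrs]
    # Assumed rule: being harmed while lacking power signals a passive stakeholder.
    if s.harmed and not s.power:
        return "passive" if not attrs else f"{label} (passive)"
    return label


if __name__ == "__main__":
    print(classify(Stakeholder("AI vendor", True, True, True, False)))        # definitive
    print(classify(Stakeholder("job applicants", False, True, False, True)))  # discretionary (passive)
```

Keeping the typology as a lookup table makes it easy to swap in a different attribute set, for example if harm were treated as a fourth salience attribute rather than a separate flag.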

McConnell (Reference McConnell2017) introduces a framework that serves as a heuristic for assessing hidden policy agendas. This novel approach includes criteria such as who hides, what is hidden, from whom it is hidden, and the tools and techniques used for concealment. It also explores the consequences of hidden agendas, both while they remain undisclosed and once they are exposed. Because scientifically proving the existence of a hidden agenda is challenging, McConnell proposes a four-step framework that offers a common analytical filter and a firmer basis for claims about hidden agendas.

In subsequent work, McConnell (Reference McConnell2020) introduces the concept of “placebo policies,” policies implemented to address the symptoms of a problem without addressing the deeper causal factors. This implies that stakeholders advocating for placebo policies may strategically seek to appear proactive without taking substantive action. Such stakeholders, including politicians and government officials, aim to avoid political risks or criticism. The article provides a road map for researchers to study this phenomenon, guiding them to examine policy intentions, focus, and effectiveness to identify whether a policy is a placebo.

The “Success for Whom” framework can be applied to a particular policy to understand its success (or lack thereof) for stakeholders such as government officials or lobbyists. Stakeholder intentions can then be inferred from that success or failure. McConnell et al. (Reference McConnell, Grealy and Lea2020) developed a three-step road map and applied it in a case study of housing policy in Australia.

The theoretical construct of coalition magnets refers to attractive policy ideas, such as sustainability or social inclusion, that policy entrepreneurs employ strategically to build coalitions. The concept can therefore be a useful tool for assessing stakeholder intentions or strategies. Béland and Cox (Reference Béland and Cox2015) offer illustrative examples of ideas that have functioned as pivotal coalition magnets across diverse policy domains and periods. Policy entrepreneurs also manipulate coalition magnets to redefine prevailing policy problems. Such magnets can unite actors with previously conflicting interests or awaken new policy preferences in actors who were not previously engaged with the issue. The authors also provide a set of defining characteristics that help identify coalition magnets: ideas that are ambiguous or carry strong emotional resonance are potent foundations for a coalition magnet.

5.3. Analytical methods

Content analysis is a valuable analytical instrument for identifying stakeholder motivations because it provides both quantitative and qualitative insights, is systematic and replicable, can reveal patterns and trends, and adapts to different types and scales of data (Wilson, Reference Wilson2016; Prasad, Reference Prasad2008). In policy analysis, Hopman et al. (Reference Hopman, De Winter and Koops2012) conducted a qualitative content analysis of policy reports and interview transcripts to identify and analyze the values and beliefs underlying Dutch youth policy. The study is innovative because it draws on a theory of the content and structure of values. Text fragments expressing values were labeled with the corresponding values from the theory, and the percentage of text fragments per value domain was calculated to rank the domains in order of importance. This yielded insights into the latent value perspective in Dutch youth policy and into how these values shape policy strategies.
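The quantitative step in this kind of value coding, computing each value domain’s share of coded fragments and ranking the domains, can be sketched as follows. This is a minimal illustration assuming that each coded fragment carries exactly one value-domain label; the labels and the `rank_value_domains` name are hypothetical and are not taken from Hopman et al.’s coding scheme.

```python
from collections import Counter


def rank_value_domains(labels):
    """Rank value domains by their share of coded text fragments.

    labels: one value-domain label per coded fragment (hypothetical coding).
    Returns (domain, percentage) pairs, most frequent first.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return []
    # most_common() sorts by count; ties keep the order first encountered.
    return [(domain, round(100 * n / total, 1)) for domain, n in counts.most_common()]


if __name__ == "__main__":
    # Hypothetical labels, one per fragment, as a human coder might assign them.
    coded = ["security", "achievement", "security", "benevolence", "security", "achievement"]
    print(rank_value_domains(coded))
    # -> [('security', 50.0), ('achievement', 33.3), ('benevolence', 16.7)]
```

The interpretive work lies in the labeling itself; the ranking is only as meaningful as the theoretical framework used to assign the labels.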

Discourse analysis is a powerful tool for uncovering implicit aspects of communication, which makes it well suited to identifying stakeholder motivations. Its focus on language, framing, power dynamics, and context can offer a nuanced perspective on the forces underlying stakeholder discourse. Lynggaard and Triantafillou (Reference Lynggaard and Triantafillou2023) use discourse analysis to distinguish three types of discursive agency through which policy actors shape and influence policies: maneuvering within established communication frameworks, navigating between conflicting discourses, and transforming existing discourses.

Within the same methodological domain, Germundsson (Reference Germundsson2022) employs Bacchi’s (Reference Bacchi2009) “What’s the problem represented to be?” framework to deconstruct policy discourse and systematically examine the presuppositions underlying how issues are delineated. Specifically, Germundsson applies this framework to the use of automated decision-support systems by public institutions in Sweden. Leifeld (Reference Leifeld, Victor, Lubell and Montgomery2017) uses discourse network analysis to uncover, among other things, endogenous processes such as popularity, reciprocity, and social balance in the exchanges between policy actors.
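Discourse network analysis typically starts from coded statements that link an actor to a concept, together with whether the actor agrees or disagrees with it. A minimal sketch, assuming that coding scheme, projects these statements onto an actor congruence network in which two actors are linked by the number of concepts on which they take the same stance. It uses only the Python standard library; the actor and concept names are hypothetical, and it does not model the endogenous processes (popularity, reciprocity, social balance) that Leifeld examines, which require statistical network models over time.

```python
from collections import defaultdict
from itertools import combinations


def congruence_network(statements):
    """Project actor-concept statements onto a weighted actor network.

    statements: iterable of (actor, concept, agrees) tuples.
    Returns {(actor_a, actor_b): weight}, where weight counts the concepts on
    which both actors take the same stance (both agree or both disagree).
    """
    stances = defaultdict(set)  # (concept, agrees) -> actors holding that stance
    for actor, concept, agrees in statements:
        stances[(concept, agrees)].add(actor)
    edges = defaultdict(int)
    for actors in stances.values():
        # Sorting gives each undirected actor pair a single canonical key.
        for a, b in combinations(sorted(actors), 2):
            edges[(a, b)] += 1
    return dict(edges)


if __name__ == "__main__":
    # Hypothetical coded statements from a consultation on AI rules.
    coded = [
        ("TechCo", "self-regulation", True),
        ("IndustryAssoc", "self-regulation", True),
        ("NGO", "self-regulation", False),
        ("NGO", "binding rules", True),
        ("TechCo", "binding rules", False),
        ("IndustryAssoc", "binding rules", False),
    ]
    print(congruence_network(coded))
    # -> {('IndustryAssoc', 'TechCo'): 2}
```

A conflict network can be built the same way by pairing actors who hold opposite stances on the same concept.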

Social semiotics also offers useful tools. Inwood and Zappavigna (Reference Inwood and Zappavigna2021) combine the Corpus Linguistics Sampling Method with a specific theoretical framework and coupling analysis to uncover values, such as political ideology, that are discursively embedded in the white papers of four blockchain start-ups. The Corpus Linguistics Sampling Method involves analyzing concordance lines (listings of every instance of specifically selected words) in the white papers using the AntConc software, focusing on the most frequent “3-grams” (sequences of three consecutive words) to establish prominent concerns and themes in the data set.
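The two corpus-linguistic steps described above, counting frequent 3-grams and reading concordance lines, can be approximated in a few lines. The sketch below is a simplified stand-in, not AntConc’s implementation: it tokenizes with a simple regular expression, ignores punctuation and sentence boundaries, and prints keyword-in-context lines. The sample text and the function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def top_trigrams(text, n=3):
    """Return the n most frequent 3-grams (three consecutive tokens)."""
    tokens = tokenize(text)
    trigrams = zip(tokens, tokens[1:], tokens[2:])
    return Counter(" ".join(t) for t in trigrams).most_common(n)


def concordance(text, keyword, width=3):
    """Return keyword-in-context lines with `width` tokens on each side."""
    tokens = tokenize(text)
    lines = []
    for i, tok in enumerate(tokens):
        if tok == keyword:
            left = " ".join(tokens[max(0, i - width):i])
            right = " ".join(tokens[i + 1:i + 1 + width])
            lines.append(f"{left} [{tok}] {right}")
    return lines


if __name__ == "__main__":
    sample = "The future of finance is decentralized. The future of finance belongs to users. Trust the code."
    print(top_trigrams(sample, 2))
    # -> [('the future of', 2), ('future of finance', 2)]
    for line in concordance(sample, "finance", width=2):
        print(line)
    # -> future of [finance] is decentralized
    # -> future of [finance] belongs to
```

Frequency counts only point to candidate themes; as in the study described above, the values behind them still have to be interpreted against a theoretical framework.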

Klüver (Reference Klüver2009) demonstrates the efficacy of quantitative text analysis, specifically with the Wordfish scaling method, in measuring policy positions of interest groups. This computerized content analysis tool proves valuable for analyzing the influence of interest groups, as exemplified in the study’s application to a European Commission policy proposal regarding CO2 emission reductions in the automotive sector.
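Wordfish rests on a compact statistical model, and writing it out shows where a “policy position” comes from. The specification below is the standard Wordfish model as commonly stated in the text-scaling literature; the notation is ours, not taken from Klüver’s study. For word $j$ in the document of actor $i$:

$$
y_{ij} \sim \operatorname{Poisson}(\lambda_{ij}), \qquad \log \lambda_{ij} = \alpha_i + \psi_j + \beta_j \, \omega_i
$$

Here $y_{ij}$ is the count of word $j$ in document $i$, $\alpha_i$ is a document fixed effect that absorbs document length, $\psi_j$ is a word fixed effect that absorbs overall word frequency, $\beta_j$ is a word weight indicating how strongly the word discriminates between positions, and $\omega_i$ is the estimated position of actor $i$. Because positions are identified only up to location and scale, estimates are typically normalized so that the $\omega_i$ have mean zero and unit variance.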

Engaging with stakeholders through interviews allows researchers to gather firsthand insights, perspectives, and other information directly from those who are affected by or involved in a given action. Hansen et al. (Reference Hansen, Robertson, Wilson, Thinyane and Gumbo2011) demonstrate this in the field of ICT4D (information and communication technologies for development), where interviews revealed a diverse range of agendas and interests in projects involving multiple organizations.

Studies employing a mix of methodologies show significant potential. Tanner and Bryden (Reference Tanner and Bryden2023) carried out a two-phase research project on public discourse about AI, using AI-assisted quantitative analysis of videos and media articles followed by qualitative interviews with policy and communication experts. The study reveals a deficiency in public discourse on AI: discussion of its broader societal ramifications receives limited attention and is predominantly shaped by technology corporations. The research also suggests that civil society organizations are not sufficiently effective in contributing to and influencing this discourse. Ciepielewska-Kowalik (Reference Ciepielewska-Kowalik2020) combined surveys with in-depth interviews to uncover (un)intended consequences and hidden agendas related to education reform in Poland. Wen (Reference Wen2018) conducted a laboratory experiment using human-assisted simulation to investigate the decision-making logic of Chinese public officials and citizens, aiming to explore hidden motivations within structured social systems in China. The results were supplemented by post-simulation and longitudinal interviews.

Ethnography is a compelling method for examining stakeholders’ motivations in a policy process because of its immersive and holistic nature. By embedding researchers in the social settings where policies are formulated, debated, and implemented, ethnography facilitates an in-depth understanding of stakeholders’ lived experiences and perspectives. Through participant observation, interviews, and the analysis of everyday interactions, it reveals tacit knowledge, implicit norms, and nuanced social dynamics that conventional research methods do not readily capture. By emphasizing context and offering a rich narrative of the social milieu, ethnography contributes to a comprehensive understanding of stakeholders’ behaviors and motivations (Cappellaro, Reference Cappellaro2016).

This methodological domain contains various subcategories. Hoholm and Araújo (Reference Hoholm and Araújo2011), studying innovation processes in real time, contend that real-time ethnography strengthens the theorizing of processes by providing insights into the uncertainties, contingencies, and choices that actors face. They emphasize its capacity to contextualize actors’ interpretations of past and future projects. Real-time ethnography also shows how actors interpret and construct contexts of action, offering a better analytical understanding of the controversies, tensions, and fissures that arise from alternative choice paths. The authors reject a simplistic view of ethnography, stressing the need to trace elements that are difficult or impossible to observe through other methods.

Critical ethnography is an approach that goes beyond traditional ethnography by incorporating a critical perspective on social issues and power structures. Thomas (Reference Thomas1993) describes this as follows: “Critical ethnographers describe, analyze, and open to scrutiny otherwise hidden agendas, power centres, and assumptions that inhibit, repress, and constrain.” We find an application of this in Myers and Young (Reference Myers and Young1997), who use critical ethnography to uncover hidden agendas, power, and issues such as managerial assumptions in the context of an information system development project in mental health.

Within the scope of this paper, policy ethnography and political ethnography are particularly pertinent. While policy ethnography focuses on the study of policies and their implementation, political ethnography encompasses a broader spectrum, including diverse political activities and structures such as political institutions and movements. Dubois (Reference Dubois2009) uses critical policy ethnography to examine welfare state policies in France. The study mainly provides a realistic understanding of the implementation of the policies and the impact on recipients of welfare, but the author indicates that the method also provided insight into the control practices of officials and their intentions. Namian (2021) shows through policy ethnography the values associated with essential structures in a policy field, specifically examining homeless shelters in the context of the Housing First policy.

Although no explicit instances of political ethnography being used to investigate stakeholder motivations were identified, the method remains an intriguing instrument for such research. Baiocchi and Connor (Reference Baiocchi and Connor2008), for example, offer a broad, though not exhaustive, survey of the method’s application to politics, to interactions between individuals and political actors, and to the intersection of politics and anthropology.

6. Discussion

This study examines existing research on identifying stakeholder motivations and finds ample opportunities across academic disciplines, theoretical frameworks, and analytical methods. The results show that existing studies already approach stakeholders in different ways, but pay limited attention to their intentions, agendas, and strategies.

Critical policy studies hold much potential for deepening the understanding of stakeholder motivations, particularly in the dynamic contexts of innovation and AI, where the policymaking process requires more in-depth research in light of current developments. One recent example is the EU AI Act, where there have been widespread claims that the final text was diluted due to lobbying by major technology companies (Vranken, Reference Vranken2023; Floridi and Baracchi Bonvicini, Reference Floridi and Baracchi Bonvicini2023). Critical policy researchers can, for example, explore power dynamics, language, and communicative practices within policy processes. The discipline’s emphasis on discourse allows researchers to uncover hidden agendas and power struggles and to trace how stakeholders shape policy narratives to advance their interests (Fischer et al., Reference Fischer, Torgerson, Durnová and Orsini2015).

Among the selected theoretical frameworks, McConnell’s work on assessing hidden policy agendas provides an important foundation for studying stakeholder motives in policymaking. Researchers could link analytical methods to this framework to explore the topic in depth. McConnell’s conceptualization of placebo policies also holds considerable promise in the domain of AI policy. It facilitates the identification of policy measures that ostensibly address an issue but do so in a cursory or temporary manner, neglecting deeper underlying factors. This lens is particularly insightful when examining themes such as fairness or transparency in AI governance. Stakeholder theory, while offering an interesting lens on power dynamics and relationships, has limited applicability for in-depth understanding and needs to be combined with additional research tools.

Disciplines and theoretical frameworks can serve as building blocks from which research on stakeholder motivations can start and by which it can be supported and strengthened. Analytical methods, which predominate in our results, offer more practical insights that can be put to work. Content analysis, despite its reductive nature (Kolbe and Burnett, Reference Kolbe and Burnett1991), can yield valuable results when paired with an appropriate theoretical framework (Hopman et al., Reference Hopman, De Winter and Koops2012). Discourse analysis, although widely used, needs to be complemented by other methodologies to provide a comprehensive understanding.

The integration of diverse research methodologies, as evidenced by studies such as Tanner and Bryden’s AI discourse analysis, Ciepielewska-Kowalik’s combined surveys and interviews on education reform, and Wen’s multifaceted approach to uncovering hidden motivations in Chinese social systems, highlights the substantial potential of employing a mixed-methods approach. This versatility allows researchers to gain comprehensive insights.

Ethnography, together with its subdomains, is the research method with the most potential in the context of this study. The studies reviewed indicate that the method can yield unique insights and challenge existing views. Although we observed a concrete application to stakeholders’ hidden agendas in only one case (Myers and Young, Reference Myers and Young1997), the other studies provide ample evidence that the methodology is well suited to observation in an innovation policy context. For the purposes of this study, the approach enables researchers to grasp the intricacies of stakeholders’ decision-making processes, the rationales behind their actions, and the complex interplay of factors influencing policy outcomes.

Listing the various subcategories within ethnography reveals the potential for cross-fertilization between them. For instance, the synergy between subcategories like real-time ethnography and policy ethnography holds promise. This entails examining policy stakeholders in real time at various stages of a policy process, expanding beyond the predominant focus on the implementation stage in traditional policy ethnography.

Overall, this review provides a valuable resource for scholars and practitioners exploring stakeholder dynamics. It also contributes indirectly to the epistemological question of whether hidden stakeholder motivations can be known, and in what ways they exist.

In a first phase, this study provides tools for researchers; subsequently, it may yield insights for policymakers. As argued in this article, AI ethics guidelines and policy documents fall short for the following reasons: they are too abstract and theoretical, are disconnected from real-world contexts, do not address the balancing of conflicting values, overemphasize self-regulation, include a limited range of stakeholders, and are vulnerable to hidden agendas. Further research on stakeholder motivations in a policy context can inform the refinement of these documents and improve the process by which they are produced. The chosen approach provides only a limited description of disciplines, frameworks, and methods, and a critical evaluation of whether these methods are suitable for the complex (innovation) policy context requires further research.

7. Conclusion

The present study sought to address the research questions (1) “How can we identify social constructivist factors in the shaping of AI governance policy documents?” and (2) “What research has been conducted to explore and scrutinize stakeholder motivations encompassing interests, strategies, intentions, and agendas?” through a comprehensive review of literature spanning research disciplines, theoretical frameworks, and analytical methods. The results reveal a noticeable dearth of research on stakeholder motivations in policy processes. While several studies offer unilateral examinations of stakeholders’ expressions, such as text or statement analyses, our results highlight a scarcity of studies delving into the “hidden” domain to uncover concealed agendas or intentions.

Consequently, this study serves as a foundational contribution, intended to stimulate continued research and foster further critical debates on this topic, aiming for a more profound exploration of policy processes. This extends beyond merely understanding how guidelines and policy documents are created but can also be employed from a social-critical point of view to unmask the true intentions of stakeholders. Future research will build upon the insights gained in this study by critically evaluating the existing knowledge and exploring applicable theoretical frameworks and methods within the context of innovation policy.

In sum, this study exposes a research area that has so far been explored only to a very limited extent. It provides an overview of disciplines, theoretical frameworks, and methods with which researchers can engage in this field, prompts additional research into their utility, and highlights the potential of combining different tools. Over time, such pathways may offer researchers more insight into policy processes and may also prove valuable for stakeholders engaged in those processes.

Author contributions

Conceptualization—F.H. and R.H.; Data curation—F.H.; Formal analysis—F.H.; Investigation—F.H.; Methodology—F.H.; Writing – original draft—F.H. and R.H.

Provenance

This article is part of the Data for Policy 2024 Proceedings and was accepted in Data & Policy on the strength of the Conference’s review process.

Competing interest

The authors declare no competing interests exist.
