1. Introduction

Active flow control is of wide interest due to its extensive industrial applications where the fluid motion is manipulated with energy-consuming controllers towards a desired target, such as the reduction of drag, the enhancement of heat transfer, and delay of the transition from a laminar flow to turbulence (Brunton & Noack Reference Brunton and Noack2015). Depending on whether we have a model for describing the dynamics of the fluid motion, the control strategies can be categorised into model-based and model-free methods. The former assumes the controller's complete awareness of a model that can describe the flow behaviour accurately (e.g. Navier–Stokes equations). The linear system theory acts as the foundation for the model-based controllers, such as linear quadratic regulator and model predictive control. The model-based approach has been applied widely in the active flow control of academic flows (Kim & Bewley Reference Kim and Bewley2007; Sipp et al. Reference Sipp, Marquet, Meliga and Barbagallo2010; Sipp & Schmid Reference Sipp and Schmid2016).

In real-world flow conditions, however, an accurate flow model is often unavailable. Even in the case where a model can be assumed, once the flow condition changes drastically, beyond the predictability of the model, the control performance will also deteriorate. Model-free techniques based on a system identification method can tackle this issue and have enjoyed success to some extent in controlling flows. Nevertheless, limitations also exist for this method, such as a large number of free parameters (Sturzebecher & Nitsche Reference Sturzebecher and Nitsche2003; Hervé et al. Reference Hervé, Sipp, Schmid and Samuelides2012). Thus more advanced model-free control methods based on machine learning (ML) have been put forward and studied to cope with the complex flow conditions (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Gautier et al. Reference Gautier, Aider, Duriez, Noack, Segond and Abel2015; Duriez, Brunton & Noack Reference Duriez, Brunton and Noack2017; Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019; Park & Choi Reference Park and Choi2020). In this work, we examine the performance of a model-free deep reinforcement learning (DRL) algorithm in controlling convectively-unstable flows, subjected to random upstream noise. Our investigation will begin with the one-dimensional (1-D) Kuramoto–Sivashinsky (KS) equation, which can be regarded as a reduced model of laminar boundary layer flows past a flat plate, and then extend to the controlling of the two-dimensional (2-D) boundary layer flows. One of our focuses is to optimise the placement of sensors in the flow to maximise the efficiency of the DRL control. In the following, we will first review the recent works on DRL-based flow control and the optimisation of sensor placement in other control methods.

1.1. DRL-based flow control

With the advancement of ML technologies, especially deep neural networks and the ever-increasing amount of data, DRL burgeons and has proven its power in solving complex decision-making problems in various applications, including robotics (Kober, Bagnell & Peters Reference Kober, Bagnell and Peters2013), game playing (Mnih et al. Reference Mnih, Kavukcuoglu, Silver, Graves, Antonoglou, Wierstra and Riedmiller2013) and flow control (Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019). In particular, DRL refers to an automated algorithm that aims to maximise a reward function by evaluating the state of an environment with which the DRL agent can interact via sensors. It is particularly suitable for flow control due to their similar settings, and is being researched actively in the field of active flow control (Rabault & Kuhnle Reference Rabault and Kuhnle2019; Brunton, Noack & Koumoutsakos Reference Brunton, Noack and Koumoutsakos2020; Brunton Reference Brunton2021).

Based on a Markov process model and the classical reinforcement learning algorithm, Guéniat, Mathelin & Hussaini (Reference Guéniat, Mathelin and Hussaini2016) first proposed an experiment-oriented control approach and demonstrated its effectiveness in reducing the drag in a cylindrical wake flow. Pivot et al. (Reference Pivot, Mathelin, Cordier, Guéniat and Noack2017) presented a proof-of-concept application of DRL control strategy to 2-D cylinder wake flow, and achieved a 17 % reduction of drag by rotating the cylinder. Koizumi, Tsutsumi & Shima (Reference Koizumi, Tsutsumi and Shima2010) adopted the DRL-based feedback control to reduce the fluctuation of the lift force acting on the cylinder due to the Kármán vortex shedding via two synthetic jets. They compared the DRL-based control with traditional model-based control, and found that DRL achieved a better performance. A subsequent well-cited work that has attracted much attention to DRL in the fluid community is due to Rabault et al. (Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019). They applied the DRL-based control to stabilise the Kármán vortex alley via two synthetic jets on a cylinder that was confined between two flat walls, and analysed the control strategy by comparing the macroscopic flow features before and after the control. These pioneering works laid the foundation of the DRL-based active flow control and have sparked great interest of the fluid community in DRL.

Other technical improvements and parameter investigations have been achieved. Rabault & Kuhnle (Reference Rabault and Kuhnle2019) proposed a multi-environment strategy to further accelerate the training process of the DRL agent. Xu et al. (Reference Xu, Zhang, Deng and Rabault2020) applied the DRL-based control to stabilise the vortex shedding of a primary cylinder via the counter-rotating small cylinder pair downstream of the primary one. Tang et al. (Reference Tang, Rabault, Kuhnle, Wang and Wang2020) presented a robust DRL control strategy to reduce the drag of a cylinder using four synthetic jets. The DRL agent was trained with four different Reynolds numbers (![]() $Re$), but was proved to be effective for any

$Re$), but was proved to be effective for any ![]() $Re$ between 60 and 400. Paris, Beneddine & Dandois (Reference Paris, Beneddine and Dandois2021) also studied a robust DRL control scheme to reduce the drag of a cylinder via two synthetic jets. They focused on identifying the (sub-)optimal placement of the sensors in the cylinder wake flow from a predefined matrix of the sensors. Ren, Rabault & Tang (Reference Ren, Rabault and Tang2021) further extended the DRL-based control of the cylinder wake from the laminar regime to the weakly turbulent regime with

$Re$ between 60 and 400. Paris, Beneddine & Dandois (Reference Paris, Beneddine and Dandois2021) also studied a robust DRL control scheme to reduce the drag of a cylinder via two synthetic jets. They focused on identifying the (sub-)optimal placement of the sensors in the cylinder wake flow from a predefined matrix of the sensors. Ren, Rabault & Tang (Reference Ren, Rabault and Tang2021) further extended the DRL-based control of the cylinder wake from the laminar regime to the weakly turbulent regime with ![]() $Re=1000$. Li & Zhang (Reference Li and Zhang2022) incorporated the physical knowledge from stability analyses into the DRL-based control of confined cylinder wakes, which facilitated the sensor placement and the reward function design in the DRL framework. Moreover, Castellanos et al. (Reference Castellanos, Cornejo Maceda, de la Fuente, Noack, Ianiro and Discetti2022) assessed both DRL-based control and linear genetic programming control (LGPC) for reducing the drag in a cylindrical wake flow at

$Re=1000$. Li & Zhang (Reference Li and Zhang2022) incorporated the physical knowledge from stability analyses into the DRL-based control of confined cylinder wakes, which facilitated the sensor placement and the reward function design in the DRL framework. Moreover, Castellanos et al. (Reference Castellanos, Cornejo Maceda, de la Fuente, Noack, Ianiro and Discetti2022) assessed both DRL-based control and linear genetic programming control (LGPC) for reducing the drag in a cylindrical wake flow at ![]() $Re = 100$. It was found that DRL was more robust to different initial conditions and noise contamination, while LGPC was able to realise control with fewer sensors. Pino et al. (Reference Pino, Schena, Rabault, Kuhnle and Mendez2022) provided a detailed comparison between some global optimisation techniques and ML methods (LGPC and DRL) in different flow control problems, to better understand how the DRL performs.

$Re = 100$. It was found that DRL was more robust to different initial conditions and noise contamination, while LGPC was able to realise control with fewer sensors. Pino et al. (Reference Pino, Schena, Rabault, Kuhnle and Mendez2022) provided a detailed comparison between some global optimisation techniques and ML methods (LGPC and DRL) in different flow control problems, to better understand how the DRL performs.

All the aforementioned works are implemented through numerical simulations. Fan et al. (Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020) first demonstrated experimentally the effectiveness of DRL-based control for reducing the drag in turbulent cylinder wakes through the counter-rotation of a pair of small cylinders downstream of the main one. Shimomura et al. (Reference Shimomura, Sekimoto, Oyama, Fujii and Nishida2020) proved the viability of DRL-based control for reducing the flow separation around a NACA0015 aerofoil in experiments. The control was realised through adjusting the burst frequency of a plasma actuator, and the flow reattachment was achieved under angles of attack ![]() $12^\circ$ and

$12^\circ$ and ![]() $15^\circ$. For a more detailed description of the recent studies of DRL-based flow control in fluid mechanics, readers are referred to the latest review papers on this topic (Rabault et al. Reference Rabault, Ren, Zhang, Tang and Xu2020; Garnier et al. Reference Garnier, Viquerat, Rabault, Larcher, Kuhnle and Hachem2021; Viquerat, Meliga & Hachem Reference Viquerat, Meliga and Hachem2021).

$15^\circ$. For a more detailed description of the recent studies of DRL-based flow control in fluid mechanics, readers are referred to the latest review papers on this topic (Rabault et al. Reference Rabault, Ren, Zhang, Tang and Xu2020; Garnier et al. Reference Garnier, Viquerat, Rabault, Larcher, Kuhnle and Hachem2021; Viquerat, Meliga & Hachem Reference Viquerat, Meliga and Hachem2021).

After reviewing the works on DRL applied to flow control, we would like to point out one important research direction that deserves to be explored further, which is to embed domain knowledge or flow physics including symmetry or equivariance in the DRL-based control strategy. It has been demonstrated in other scientific fields that ML methods embedded with domain knowledge or symmetry properties inherent in the physical system can outperform the vanilla ML methods (Ling, Kurzawski & Templeton Reference Ling, Kurzawski and Templeton2016; Zhang, Shen & Zhai Reference Zhang, Shen and Zhai2018; Karniadakis et al. Reference Karniadakis, Kevrekidis, Lu, Perdikaris, Wang and Yang2021; Smidt, Geiger & Miller Reference Smidt, Geiger and Miller2021; Bogatskiy et al. Reference Bogatskiy2022). This will not only enhance the sample efficiency, but also guarantee the physical property of the controlled result. In the DRL community, only a few scattered attempts have been made (Belus et al. Reference Belus, Rabault, Viquerat, Che, Hachem and Reglade2019; Zeng & Graham Reference Zeng and Graham2021; Li & Zhang Reference Li and Zhang2022), and more works need to be followed in this direction to fully unleash the power of DRL in controlling flows.

1.2. Sensor placement optimisation

Sensors probe the states of the dynamical system. The extracted information can be used further for a variety of purposes, such as classification, reconstruction or reduced-order modelling of a high-dimensional system through a sparse set of sensor signals (Brunton et al. Reference Brunton, Brunton, Proctor and Kutz2016; Loiseau, Noack & Brunton Reference Loiseau, Noack and Brunton2018; Manohar et al. Reference Manohar, Brunton, Kutz and Brunton2018), and state estimation of large-scale partial differential equations (Khan, Morris & Stastna Reference Khan, Morris and Stastna2015; Hu, Morris & Zhang Reference Hu, Morris and Zhang2016). Here, we focus mainly on the field of active flow control, where sensors are used to collect information from the flow environment as feedback to the actuator. The effect of sensor placement on flow control performance has been investigated in boundary layer flows (Belson et al. Reference Belson, Semeraro, Rowley and Henningson2013) and cylinder wake flows (Akhtar et al. Reference Akhtar, Borggaard, Burns, Imtiaz and Zietsman2015). Identifying the optimal sensor placement is of great significance to the efficiency of the corresponding control scheme.

Initially, some heuristic efforts were made to guide the sensor placement through modal analyses. For instance, Strykowski & Sreenivasan (Reference Strykowski and Sreenivasan1990) placed a second, much smaller cylinder in the wake region of the primary cylinder, and recorded the specific placements of the second cylinder, which led to an effective suppression of the vortex shedding. Later, Giannetti & Luchini (Reference Giannetti and Luchini2007) found that the specific placements determined by Strykowski & Sreenivasan (Reference Strykowski and Sreenivasan1990) in fact corresponded to the wavemaker region where the direct and adjoint eigenmodes overlapped. Akervik et al. (Reference Akervik, Hoepffner, Ehrenstein and Henningson2007) and Bagheri et al. (Reference Bagheri, Henningson, Hoepffner and Schmid2009) proposed that in the framework of flow control, sensors should be placed in the region where the leading direct eigenmode has a large magnitude, and actuators should be placed in the region where the leading adjoint eigenmode has a large magnitude when the adjoint modes and the global modes have a small overlap. Likewise, Natarajan, Freund & Bodony (Reference Natarajan, Freund and Bodony2016) developed an extended method of structural sensitivity analysis to help place collocated sensor–actuator pairs for controlling flow instabilities in a high-subsonic diffuser. Such placements based on physical characteristics of the flow system may be appropriate for the globally unstable flow where the whole flow system beats at a particular frequency and the major disturbances never leave a specific region (such flows are called absolutely unstable; cf. Huerre & Monkewitz Reference Huerre and Monkewitz1990).

However, for a convectively-unstable flow system with a large transient growth, such a method failed to predict the optimal placement, as demonstrated by Chen & Rowley (Reference Chen and Rowley2011). They proposed to address the optimal placement issue using a mathematically rigorous method. They identified the ![]() $\mathscr {H}_2$ optimal controller in conjunction with the optimal sensor and actuator placement via the conjugate gradient method, in order to control perturbations evolving in spatially developing flows modelled by the linearised Ginzburg–Landau equation (LGLE). Colburn (Reference Colburn2011) improved the method by working directly with the covariance of the estimation error instead of the Fisher information matrix. Chen & Rowley (Reference Chen and Rowley2014) further demonstrated the effectiveness of this method in the Orr–Sommerfeld/Squire equations for the optimal sensor and actuator placement. Oehler & Illingworth (Reference Oehler and Illingworth2018) analysed the trade-offs when placing sensors and actuators in the feedback flow control based on the LGLE. Manohar, Kutz & Brunton (Reference Manohar, Kutz and Brunton2021) studied the optimal sensor and actuator placement issue using balanced model reduction with a greedy optimisation method. The effectiveness of this method was demonstrated in the

$\mathscr {H}_2$ optimal controller in conjunction with the optimal sensor and actuator placement via the conjugate gradient method, in order to control perturbations evolving in spatially developing flows modelled by the linearised Ginzburg–Landau equation (LGLE). Colburn (Reference Colburn2011) improved the method by working directly with the covariance of the estimation error instead of the Fisher information matrix. Chen & Rowley (Reference Chen and Rowley2014) further demonstrated the effectiveness of this method in the Orr–Sommerfeld/Squire equations for the optimal sensor and actuator placement. Oehler & Illingworth (Reference Oehler and Illingworth2018) analysed the trade-offs when placing sensors and actuators in the feedback flow control based on the LGLE. Manohar, Kutz & Brunton (Reference Manohar, Kutz and Brunton2021) studied the optimal sensor and actuator placement issue using balanced model reduction with a greedy optimisation method. The effectiveness of this method was demonstrated in the ![]() $\mathscr {H}_2$ optimal control of the LGLE, where a placement was achieved similar to that in Chen & Rowley (Reference Chen and Rowley2011) but with less runtime. More recently, Sashittal & Bodony (Reference Sashittal and Bodony2021) proposed a data-driven method to generate a linear reduced-order model of the flow dynamics and then optimised the sensor placement using an adjoint-based method. Jin, Illingworth & Sandberg (Reference Jin, Illingworth and Sandberg2022) explored the optimal sensor and actuator placement in the context of feedback control of vortex shedding using a gradient minimisation method, and analysed the trade-offs when placing sensors.

$\mathscr {H}_2$ optimal control of the LGLE, where a placement was achieved similar to that in Chen & Rowley (Reference Chen and Rowley2011) but with less runtime. More recently, Sashittal & Bodony (Reference Sashittal and Bodony2021) proposed a data-driven method to generate a linear reduced-order model of the flow dynamics and then optimised the sensor placement using an adjoint-based method. Jin, Illingworth & Sandberg (Reference Jin, Illingworth and Sandberg2022) explored the optimal sensor and actuator placement in the context of feedback control of vortex shedding using a gradient minimisation method, and analysed the trade-offs when placing sensors.

In most of the aforementioned studies, traditional model-based controllers were considered and gradient-based methods were adopted to solve the optimisation problem of the sensor placement, as the gradients of the control objective with respect to sensor positions can be calculated using explicit formulas involved in the model-based controllers. However, in the context of data-driven DRL-based flow control, such accurate gradient information is unavailable because of its model-free probing and controlling of the flow system in a trial-and-error manner. The sensor placement affects the DRL-based control performance in a non-trivial way. Thus studies on the optimised sensor placement in DRL-based flow control are important but currently rare. In the DRL context, Rabault et al. (Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019) attempted to use much fewer sensors – 5 and 11 – to perform the same DRL training, and found that the resulting control performance was far less satisfactory than that of the original placement of 151 sensors (see their Appendix). Paris et al. (Reference Paris, Beneddine and Dandois2021) proposed a novel algorithm named S-PPO-CMA to obtain the (sub-)optimal sensor placement. Nevertheless, they selected the best sensor layout from some predefined sensors, and the sensor position cannot change in the optimisation process. Ren et al. (Reference Ren, Rabault and Tang2021) reported that an a posteriori sensitivity analysis was helpful to improve the sensor layout, but this method was implemented as a post-processing data analysis, unable to provide guidelines for the sensor placement a priori (see also Pino et al. Reference Pino, Schena, Rabault, Kuhnle and Mendez2022). Li & Zhang (Reference Li and Zhang2022) proposed a heuristic way to place the sensors in the wavemaker region in the confined cylindrical wake flow, and the optimal layout was not determined theoretically in an optimisation problem. For optimisation problems where the gradient information is unavailable, it is natural to consider non-gradient-based methods such as genetic algorithm (GA) and particle swarm optimisation (PSO) (Koziel & Yang Reference Koziel and Yang2011). For instance, Mehrabian & Yousefi-Koma (Reference Mehrabian and Yousefi-Koma2007) adopted a bio-inspired invasive weed optimisation algorithm to place optimally piezoelectric actuators on the smart fin for vibration control. Yi, Li & Gu (Reference Yi, Li and Gu2011) utilised a generalised GA to find the optimal sensor placement in the structural health monitoring (SHM) of high-rise structures. Blanloeuil, Nurhazli & Veidt (Reference Blanloeuil, Nurhazli and Veidt2016) applied a PSO algorithm to improve the sensor placement in an ultrasonic SHM system. The improved sensor placement enabled better detection of multiple defects in the target area. Wagiman et al. (Reference Wagiman, Abdullah, Hassan and Radzi2020) adopted a PSO algorithm to find an optimal light sensor placement of an indoor lighting control system. A 24.5 % energy saving was achieved with the optimal number and position of sensors. These works enlighten us on how to distribute optimally the sensors in the model-free DRL control.

1.3. The current work

In the current work, we aim to study the performance of the model-free DRL-based strategy in controlling convectively unstable flows. The difference between absolute instability and convective instability is shown in figure 1 (Huerre & Monkewitz Reference Huerre and Monkewitz1990). An absolutely unstable flow is featured by an intrinsic instability mechanism and is thus less sensitive to the external disturbance. A good example of this type of flow is the global onset of vortex shedding in cylindrical wake flows, which have been studied extensively in DRL-based flow control (Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019; Fan et al. Reference Fan, Yang, Wang, Triantafyllou and Karniadakis2020; Paris et al. Reference Paris, Beneddine and Dandois2021; Li & Zhang Reference Li and Zhang2022). On the contrary, the convectively unstable flow acts as a noise amplifier and is able to selectively amplify the external disturbance, such as boundary layer flows and jets. Compared to absolutely unstable flows, controlling a convectively unstable flow subjected to unknown upstream disturbance is more challenging, representing a more stringent test of the control ability of DRL. This type of flow is less studied in the context of DRL-based flow control.

Figure 1. Schematic of absolute and convective instabilities. A localised infinitesimal perturbation can grow at a fixed location, leading to (a) an absolute instability, or decay at a fixed location but grow as convected downstream, leading to (b) a convective instability.

As a proof-of-concept study, in this work we will control the 1-D linearised Kuramoto–Sivashinsky (KS) equation and the 2-D boundary layer flows. The KS equation is supplemented with an outflow boundary condition, without translational and reflection symmetries, and has been used widely as a reduced model of the disturbance developing in the laminar boundary layer flows. We will investigate the sensor placement issue in the DRL-based control by formulating an optimisation problem in the KS equation based on the PSO method. Then the optimised sensor placement determined in the KS equation will be applied directly to the 2-D Blasius boundary layer flow solved by the complete Navier–Stokes (NS) equations, to evaluate the performance of DRL in suppressing the convective instability in a more realistic flow. We were not able to optimise directly the sensor placement in the 2-D boundary layer flow using the PSO method because the computation in the latter case is exceedingly demanding. We thus circumvented this issue by resorting to the reduced-order 1-D KS equation.

The paper is structured as follows. In § 2, we introduce the flow control problem. In § 3, we present the numerical method adopted in this work. The results on DRL-based flow control and the optimal sensor placement are reported in § 4. Finally, in § 5, we conclude the paper with some discussions. In the appendices, we investigate the dynamics of the nonlinear KS equation and its control when the nonlinear effect cannot be neglected, and we propose a stability-enhanced design for the reward function to further improve the control performance. In addition, we also provide an explanation of the time delay issue in DRL-based control, and a brief introduction to the classical-model-based linear quadratic regulator (LQR).

2. Problem formulation

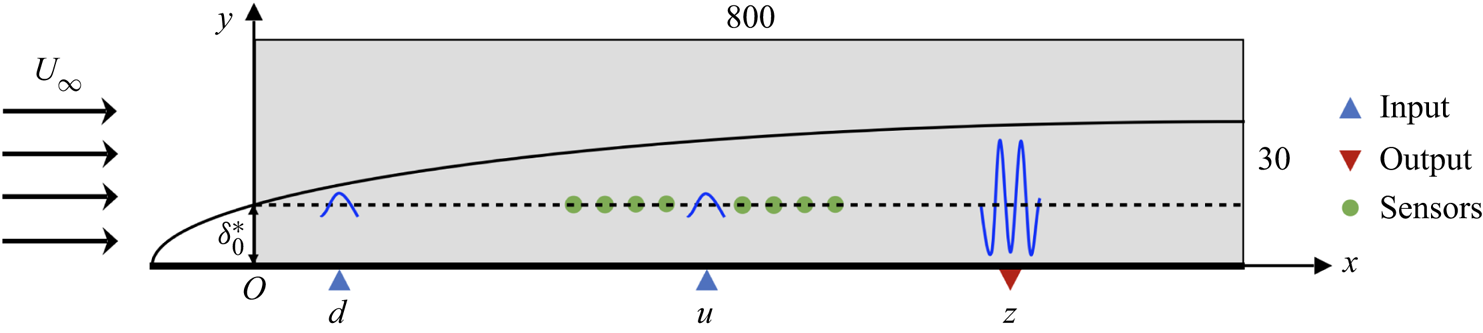

We investigate the control of perturbation evolving in a flat-plate boundary layer flow modelled by the linearised KS equation and the NS equations, as shown in figure 2. A localised Gaussian disturbance is introduced at point ![]() $d$. Due to the convective instability of the flow, the amplitude of the perturbation will grow exponentially with time while travelling downstream, if uncontrolled. The closed-loop control system is formed by introducing a spatially localised forcing at point

$d$. Due to the convective instability of the flow, the amplitude of the perturbation will grow exponentially with time while travelling downstream, if uncontrolled. The closed-loop control system is formed by introducing a spatially localised forcing at point ![]() $u$ as the control action, which is determined by the DRL agent based on the feedback signals collected by sensors (denoted by green points in figure 2). The control objective is to minimise the downstream perturbation measured by an output sensor at point

$u$ as the control action, which is determined by the DRL agent based on the feedback signals collected by sensors (denoted by green points in figure 2). The control objective is to minimise the downstream perturbation measured by an output sensor at point ![]() $z$, trying to abate the exponential flow instability.

$z$, trying to abate the exponential flow instability.

Figure 2. Control set-up for a 2-D flat-plate boundary layer flow. Random noise is introduced at point ![]() $d$, and a feedback control based on states collected by sensors is implemented at point

$d$, and a feedback control based on states collected by sensors is implemented at point ![]() $u$ to minimise the downstream perturbation measured at point

$u$ to minimise the downstream perturbation measured at point ![]() $z$. Here,

$z$. Here, ![]() $U_\infty$ is the uniform free-stream velocity, and

$U_\infty$ is the uniform free-stream velocity, and ![]() $\delta _0^*$ is the displacement thickness of the boundary layer at the inlet of the domain shown by the grey box

$\delta _0^*$ is the displacement thickness of the boundary layer at the inlet of the domain shown by the grey box ![]() $\varOmega = (0, 800) \times (0, 30)$, which is non-dimensionalised by

$\varOmega = (0, 800) \times (0, 30)$, which is non-dimensionalised by ![]() $\delta _0^*$.

$\delta _0^*$.

2.1. Governing equation of the 1-D Kuramoto–Sivashinsky equation

The original KS equation was first used to describe flame fronts in laminar flames (Kuramoto & Tsuzuki Reference Kuramoto and Tsuzuki1976; Sivashinsky Reference Sivashinsky1977) and is one of the simplest nonlinear PDEs that exhibit spatiotemporal chaos (Cvitanović, Davidchack & Siminos Reference Cvitanović, Davidchack and Siminos2010). It has become a common toy problem in the studies of ML, such as the data-driven reduction of chaotic dynamics on an inertial manifold (Linot & Graham Reference Linot and Graham2020) and the DRL-based control of chaotic systems (Bucci et al. Reference Bucci, Semeraro, Allauzen, Wisniewski, Cordier and Mathelin2019; Zeng & Graham Reference Zeng and Graham2021). Here, we investigate the 1-D linearised KS equation as a reduced-order model representation of the disturbance developing in 2-D boundary layer flows; cf. Fabbiane et al. (Reference Fabbiane, Semeraro, Bagheri and Henningson2014) for a detailed illustration of various model-based and adaptive control methods applied to the KS equation. Specifically, the linearised KS equation can describe the flow dynamics at a wall-normal position ![]() $y=\delta _0^{*}$, where

$y=\delta _0^{*}$, where ![]() $\delta _0^*$ is the displacement thickness of the boundary layer at the inlet of the computational domain, as shown in figure 2. The linearised step is outlined briefly below. First, the original non-dimensionalised KS equation reads

$\delta _0^*$ is the displacement thickness of the boundary layer at the inlet of the computational domain, as shown in figure 2. The linearised step is outlined briefly below. First, the original non-dimensionalised KS equation reads

where ![]() $v(x,t)$ represents the velocity field,

$v(x,t)$ represents the velocity field, ![]() $\mathcal {R}$ is the Reynolds number (or

$\mathcal {R}$ is the Reynolds number (or ![]() $Re$ in boundary layer flows),

$Re$ in boundary layer flows), ![]() $\mathcal {P}$ is a coefficient balancing energy production and dissipation, and finally

$\mathcal {P}$ is a coefficient balancing energy production and dissipation, and finally ![]() $L$ is the length of the 1-D domain. Since we study a fluid system that is close to a steady solution

$L$ is the length of the 1-D domain. Since we study a fluid system that is close to a steady solution ![]() $V$ (a constant), we can decompose the velocity into a combination of two terms as

$V$ (a constant), we can decompose the velocity into a combination of two terms as

where ![]() $v^{\prime }(x, t)$ is the perturbation velocity with

$v^{\prime }(x, t)$ is the perturbation velocity with ![]() $\varepsilon \ll 1$. Inserting (2.2) into (2.1) yields

$\varepsilon \ll 1$. Inserting (2.2) into (2.1) yields

where the external forcing term ![]() $f(x,t)$ now appears on the right-hand side for the introduction of disturbance and control terms.

$f(x,t)$ now appears on the right-hand side for the introduction of disturbance and control terms.

When the perturbation is small enough, we can neglect the nonlinear term ![]() $- \varepsilon v^{\prime }\,\partial v^{\prime } / \partial x$ in (2.3) and obtain the following linearised KS equation to model the dynamics of streamwise perturbation velocity evolving in flat-plate boundary layer flows:

$- \varepsilon v^{\prime }\,\partial v^{\prime } / \partial x$ in (2.3) and obtain the following linearised KS equation to model the dynamics of streamwise perturbation velocity evolving in flat-plate boundary layer flows:

with the unperturbed boundary conditions

We adopt the same parameter settings as those in Fabbiane et al. (Reference Fabbiane, Semeraro, Bagheri and Henningson2014), i.e. ![]() $\mathcal {R} = 0.25$,

$\mathcal {R} = 0.25$, ![]() $\mathcal {P} = 0.05$,

$\mathcal {P} = 0.05$, ![]() $V = 0.4$ and

$V = 0.4$ and ![]() $L = 800$, to model the 2-D boundary layer at

$L = 800$, to model the 2-D boundary layer at ![]() $Re = 1000$.

$Re = 1000$.

The external forcing term ![]() $f(x, t)$ in (2.4) includes both noise input and control input:

$f(x, t)$ in (2.4) includes both noise input and control input:

where ![]() $b_{d}(x)$ and

$b_{d}(x)$ and ![]() $b_{u}(x)$ represent the spatial distribution of noise and control inputs, respectively;

$b_{u}(x)$ represent the spatial distribution of noise and control inputs, respectively; ![]() $d(t)$ and

$d(t)$ and ![]() $u(t)$ correspond to the temporal signals. The downstream output measured at point

$u(t)$ correspond to the temporal signals. The downstream output measured at point ![]() $z$ is expressed by

$z$ is expressed by

where ![]() $c_{z}(x)$ is the spatial support of the output sensor. In this work, all three spatial supports

$c_{z}(x)$ is the spatial support of the output sensor. In this work, all three spatial supports ![]() $b_{d}(x)$,

$b_{d}(x)$, ![]() $b_{u}(x)$ and

$b_{u}(x)$ and ![]() $c_{z}(x)$ take the form of a Gaussian function

$c_{z}(x)$ take the form of a Gaussian function

\begin{gather} g(x ; {x}_{n}, \sigma)=\frac{1}{\sigma} \exp \left(-\left(\frac{x-{x}_{n}}{\sigma}\right)^{2}\right), \end{gather}

\begin{gather} g(x ; {x}_{n}, \sigma)=\frac{1}{\sigma} \exp \left(-\left(\frac{x-{x}_{n}}{\sigma}\right)^{2}\right), \end{gather}

We adopt the same spatial parameters used by Fabbiane et al. (Reference Fabbiane, Semeraro, Bagheri and Henningson2014), i.e. ![]() ${x}_{d} = 35$,

${x}_{d} = 35$, ![]() ${x}_{u} = 400$,

${x}_{u} = 400$, ![]() ${x}_{z} = 700$ and

${x}_{z} = 700$ and ![]() $\sigma _{d} = \sigma _{u} = \sigma _{z} = 4$. In addition, the disturbance input

$\sigma _{d} = \sigma _{u} = \sigma _{z} = 4$. In addition, the disturbance input ![]() $d(t)$ in (2.6) is modelled as Gaussian white noise with unit variance. In this work, we will use DRL to learn an effective control law, i.e. how the control action

$d(t)$ in (2.6) is modelled as Gaussian white noise with unit variance. In this work, we will use DRL to learn an effective control law, i.e. how the control action ![]() $u(t)$ in (2.6) varies with time, to suppress the perturbation downstream.

$u(t)$ in (2.6) varies with time, to suppress the perturbation downstream.

2.2. Governing equations for the 2-D boundary layer

We will also test the DRL-based control in 2-D Blasius boundary layer flows, governed by the incompressible NS equations

where ![]() $\boldsymbol {u}=(u, v)^{{\rm T}}$ is the velocity,

$\boldsymbol {u}=(u, v)^{{\rm T}}$ is the velocity, ![]() $t$ is time,

$t$ is time, ![]() $p$ is the pressure, and

$p$ is the pressure, and ![]() $\boldsymbol {f}$ is the external forcing term. Here,

$\boldsymbol {f}$ is the external forcing term. Here, ![]() $Re$ is the Reynolds number defined as

$Re$ is the Reynolds number defined as ![]() $Re = U_\infty \delta _0^*/ \nu$, where

$Re = U_\infty \delta _0^*/ \nu$, where ![]() $U_\infty$ is the free-stream velocity,

$U_\infty$ is the free-stream velocity, ![]() $\delta _0^*$ is the displacement thickness of the boundary layer at the inlet of the computational domain, and

$\delta _0^*$ is the displacement thickness of the boundary layer at the inlet of the computational domain, and ![]() $\nu$ is the kinematic viscosity. We non-dimensionalise the length and the velocity by

$\nu$ is the kinematic viscosity. We non-dimensionalise the length and the velocity by ![]() $\delta _0^*$ and

$\delta _0^*$ and ![]() $U_\infty$, respectively, and use

$U_\infty$, respectively, and use ![]() $Re = 1000$ in all cases, which is consistent with the value in the KS system. The computational domain is

$Re = 1000$ in all cases, which is consistent with the value in the KS system. The computational domain is ![]() $\varOmega = (0, L_x) \times (0, L_y) = (0, 800) \times (0, 30)$, denoted by the grey box in figure 2. The inlet velocity profile is obtained from the laminar base flow profile (Blasius solution). At the outlet of the domain, we impose the standard free-outflow condition with

$\varOmega = (0, L_x) \times (0, L_y) = (0, 800) \times (0, 30)$, denoted by the grey box in figure 2. The inlet velocity profile is obtained from the laminar base flow profile (Blasius solution). At the outlet of the domain, we impose the standard free-outflow condition with ![]() $(p \boldsymbol{\mathsf{I}}-({1}/{Re})\,\boldsymbol {\nabla } \boldsymbol {u}) \boldsymbol {\cdot } \boldsymbol {n}=0$, where

$(p \boldsymbol{\mathsf{I}}-({1}/{Re})\,\boldsymbol {\nabla } \boldsymbol {u}) \boldsymbol {\cdot } \boldsymbol {n}=0$, where ![]() $\boldsymbol{\mathsf{I}}$ is the identity tensor, and

$\boldsymbol{\mathsf{I}}$ is the identity tensor, and ![]() $\boldsymbol {n}$ is the outward normal vector. At the bottom wall, we apply the no-slip boundary condition, and at the top of the domain, we apply the free-stream boundary condition with

$\boldsymbol {n}$ is the outward normal vector. At the bottom wall, we apply the no-slip boundary condition, and at the top of the domain, we apply the free-stream boundary condition with ![]() $u = U_\infty$ and

$u = U_\infty$ and ![]() ${\rm d}v/{{\rm d} y} = 0$.

${\rm d}v/{{\rm d} y} = 0$.

The external forcing term ![]() $\boldsymbol {f}$ in the momentum equation includes both noise input

$\boldsymbol {f}$ in the momentum equation includes both noise input ![]() $d(t)$ and control output

$d(t)$ and control output ![]() $u(t)$:

$u(t)$:

where ![]() $\boldsymbol {b}_{d}$ and

$\boldsymbol {b}_{d}$ and ![]() $\boldsymbol {b}_{u}$ are the corresponding spatial distribution functions, similar to those in (2.6) except that the current supports assume 2-D Gaussian functions:

$\boldsymbol {b}_{u}$ are the corresponding spatial distribution functions, similar to those in (2.6) except that the current supports assume 2-D Gaussian functions:

\begin{equation} \left.\begin{gathered} \boldsymbol{b}_{d}(x, y)=\left[\begin{array}{c} \left(y-y_{d}\right) \sigma_{x} / \sigma_{y} \\ -\left(x-x_{d}\right) \sigma_{y} / \sigma_{x} \end{array}\right] \exp \left(-\frac{\left(x-{x}_{d}\right)^{2}}{\sigma_{x}^{2}}-\frac{\left(y-{y}_{d}\right)^{2}}{\sigma_{y}^{2}}\right),\\ \boldsymbol{b}_{u}(x, y)=\left[\begin{array}{c} \left(y-y_{u}\right) \sigma_{x} / \sigma_{y} \\ -\left(x-x_{u}\right) \sigma_{y} / \sigma_{x} \end{array}\right] \exp \left(-\frac{\left(x-{x}_{u}\right)^{2}}{\sigma_{x}^{2}}-\frac{\left(y-{y}_{u}\right)^{2}}{\sigma_{y}^{2}}\right), \end{gathered}\right\}\end{equation}

\begin{equation} \left.\begin{gathered} \boldsymbol{b}_{d}(x, y)=\left[\begin{array}{c} \left(y-y_{d}\right) \sigma_{x} / \sigma_{y} \\ -\left(x-x_{d}\right) \sigma_{y} / \sigma_{x} \end{array}\right] \exp \left(-\frac{\left(x-{x}_{d}\right)^{2}}{\sigma_{x}^{2}}-\frac{\left(y-{y}_{d}\right)^{2}}{\sigma_{y}^{2}}\right),\\ \boldsymbol{b}_{u}(x, y)=\left[\begin{array}{c} \left(y-y_{u}\right) \sigma_{x} / \sigma_{y} \\ -\left(x-x_{u}\right) \sigma_{y} / \sigma_{x} \end{array}\right] \exp \left(-\frac{\left(x-{x}_{u}\right)^{2}}{\sigma_{x}^{2}}-\frac{\left(y-{y}_{u}\right)^{2}}{\sigma_{y}^{2}}\right), \end{gathered}\right\}\end{equation}

where ![]() $\sigma _x = 4$ and

$\sigma _x = 4$ and ![]() $\sigma _y = 1/4$ determine the size of the two spatial supports centred at

$\sigma _y = 1/4$ determine the size of the two spatial supports centred at ![]() $(x_d, y_d) = (35, 1)$ for noise input and

$(x_d, y_d) = (35, 1)$ for noise input and ![]() $(x_u, y_u) = (400, 1)$ for control input, respectively. As an illustration, the streamwise and wall-normal components of the spatial support

$(x_u, y_u) = (400, 1)$ for control input, respectively. As an illustration, the streamwise and wall-normal components of the spatial support ![]() $\boldsymbol {b}_{d}$ are presented in figure 3, consistent with those in Belson et al. (Reference Belson, Semeraro, Rowley and Henningson2013).

$\boldsymbol {b}_{d}$ are presented in figure 3, consistent with those in Belson et al. (Reference Belson, Semeraro, Rowley and Henningson2013).

Figure 3. Contour plots of the 2-D Gaussian spatial distribution ![]() $\boldsymbol {b}_{d}(x, y)$: (a) streamwise component; (b) wall-normal component.

$\boldsymbol {b}_{d}(x, y)$: (a) streamwise component; (b) wall-normal component.

The downstream output at point ![]() $z$ is a measurement of the local perturbation energy expressed by

$z$ is a measurement of the local perturbation energy expressed by

\begin{equation} z(t)=\sum_{i = 1}^{N}\left[\left(u^{i}(t)-U^{i}\right)^{2}+\left(v^{i}(t)-V^{i}\right)^{2}\right], \end{equation}

\begin{equation} z(t)=\sum_{i = 1}^{N}\left[\left(u^{i}(t)-U^{i}\right)^{2}+\left(v^{i}(t)-V^{i}\right)^{2}\right], \end{equation}

where ![]() $N$ is the number of uniformly distributed neighbouring points around point

$N$ is the number of uniformly distributed neighbouring points around point ![]() $z$ at

$z$ at ![]() $(550, 1)$, and here we use a large

$(550, 1)$, and here we use a large ![]() $N = 78$;

$N = 78$; ![]() $u^i(t)$ and

$u^i(t)$ and ![]() $v^i(t)$ are the instantaneous

$v^i(t)$ are the instantaneous ![]() $x$- and

$x$- and ![]() $y$-direction velocity components at point

$y$-direction velocity components at point ![]() $i$;

$i$; ![]() $U^i$ and

$U^i$ and ![]() $V^i$ are the corresponding base flow velocities, i.e. a steady solution to the nonlinear NS equations.

$V^i$ are the corresponding base flow velocities, i.e. a steady solution to the nonlinear NS equations.

The upstream noise input ![]() $d(t)$ in (2.10) is modelled as Gaussian white noise with zero mean and standard deviation

$d(t)$ in (2.10) is modelled as Gaussian white noise with zero mean and standard deviation ![]() $2 \times 10^{-4}$. Induced by this random disturbance input, the boundary layer flow considered here exhibits the behaviour of convective instability in the form of an exponentially growing Tollmien–Schlichting (TS) wave. The objective of the DRL control is to suppress the growth of the unstable TS wave by modulating

$2 \times 10^{-4}$. Induced by this random disturbance input, the boundary layer flow considered here exhibits the behaviour of convective instability in the form of an exponentially growing Tollmien–Schlichting (TS) wave. The objective of the DRL control is to suppress the growth of the unstable TS wave by modulating ![]() $u(t)$ in (2.10). In figure 2, the input and output positions are specified and will remain unchanged in our investigation, but the sensor position will be improved in an optimisation problem that will be discussed in § 4.5.

$u(t)$ in (2.10). In figure 2, the input and output positions are specified and will remain unchanged in our investigation, but the sensor position will be improved in an optimisation problem that will be discussed in § 4.5.

3. Numerical methods

3.1. Numerical simulation of the 1-D KS equation

We adopt the same numerical method used by Fabbiane et al. (Reference Fabbiane, Semeraro, Bagheri and Henningson2014) to solve the 1-D linearised KS equation. The equation is discretised using a finite difference method on ![]() $n=400$ discretised grid points. The second- and fourth-order derivatives are discretised based on a centred five-node stencil, while the convective term is based on a one-node upwind scheme due to the convective feature. Boundary conditions in (2.5a–d) are implemented using four ghost nodes outside the left and right boundaries. The spatial discretisation yields the following set of finite-dimensional state-space equations (also referred to as plant hereafter):

$n=400$ discretised grid points. The second- and fourth-order derivatives are discretised based on a centred five-node stencil, while the convective term is based on a one-node upwind scheme due to the convective feature. Boundary conditions in (2.5a–d) are implemented using four ghost nodes outside the left and right boundaries. The spatial discretisation yields the following set of finite-dimensional state-space equations (also referred to as plant hereafter):

\begin{equation} \left.\begin{gathered}

\dot{\boldsymbol{v}}(t)

=\boldsymbol{\mathsf{A}}\,\boldsymbol{v}(t)+\boldsymbol{\mathsf{B}}_{d}\,d(t)+\boldsymbol{\mathsf{B}}_{u}\,u(t),

\\ z(t) =\boldsymbol{\mathsf{C}}_{z}\,\boldsymbol{v}(t),

\end{gathered}\right\}\end{equation}

\begin{equation} \left.\begin{gathered}

\dot{\boldsymbol{v}}(t)

=\boldsymbol{\mathsf{A}}\,\boldsymbol{v}(t)+\boldsymbol{\mathsf{B}}_{d}\,d(t)+\boldsymbol{\mathsf{B}}_{u}\,u(t),

\\ z(t) =\boldsymbol{\mathsf{C}}_{z}\,\boldsymbol{v}(t),

\end{gathered}\right\}\end{equation}

where ![]() $\boldsymbol {v}(t)\in R^n$ represents the discretised values at

$\boldsymbol {v}(t)\in R^n$ represents the discretised values at ![]() $n = 400$ equispaced nodes,

$n = 400$ equispaced nodes, ![]() $\dot {\boldsymbol {v}}(t)$ is its time derivative, and

$\dot {\boldsymbol {v}}(t)$ is its time derivative, and ![]() $\boldsymbol{\mathsf{A}}$ is the linear operator of the system. The input matrices

$\boldsymbol{\mathsf{A}}$ is the linear operator of the system. The input matrices ![]() $\boldsymbol{\mathsf{B}}_{d}$ and

$\boldsymbol{\mathsf{B}}_{d}$ and ![]() $\boldsymbol{\mathsf{B}}_{u}$, and output matrix

$\boldsymbol{\mathsf{B}}_{u}$, and output matrix ![]() $\boldsymbol{\mathsf{C}}_{z}$, are obtained by evaluating (2.8b) at the nodes. The implicit Crank–Nicolson method is adopted for time marching

$\boldsymbol{\mathsf{C}}_{z}$, are obtained by evaluating (2.8b) at the nodes. The implicit Crank–Nicolson method is adopted for time marching

where ![]() $\boldsymbol{\mathsf{N}}_{I}=\boldsymbol{\mathsf{I}}-({\Delta t}/{2})\boldsymbol{\mathsf{A}}$,

$\boldsymbol{\mathsf{N}}_{I}=\boldsymbol{\mathsf{I}}-({\Delta t}/{2})\boldsymbol{\mathsf{A}}$, ![]() $\boldsymbol{\mathsf{N}}_{E}=\boldsymbol{\mathsf{I}}+({\Delta t}/{2}) \boldsymbol{\mathsf{A}}$, and

$\boldsymbol{\mathsf{N}}_{E}=\boldsymbol{\mathsf{I}}+({\Delta t}/{2}) \boldsymbol{\mathsf{A}}$, and ![]() $\Delta t$ is the time step, chosen as

$\Delta t$ is the time step, chosen as ![]() $\Delta t = 1$ in the current work.

$\Delta t = 1$ in the current work.

We also investigate the dynamics of the weakly nonlinear KS equation when the perturbation amplitude reaches a certain level, i.e. (2.3) together with boundary conditions (2.5a–d), in Appendix A. Compared with the linear plant described by (3.1), the weakly nonlinear plant has an additional nonlinear term on the right-hand side:

\begin{equation} \left.\begin{gathered} \dot{\boldsymbol{v}}(t) =\boldsymbol{\mathsf{A}}\,\boldsymbol{v}(t)+\boldsymbol{\mathsf{B}}_{d}\,d(t)+\boldsymbol{\mathsf{B}}_{u}\,u(t)+ \boldsymbol{\mathsf{N}} (\boldsymbol{v}(t)),\\ z(t) =\boldsymbol{\mathsf{C}}_{z}\,\boldsymbol{v}(t), \end{gathered}\right\}\end{equation}

\begin{equation} \left.\begin{gathered} \dot{\boldsymbol{v}}(t) =\boldsymbol{\mathsf{A}}\,\boldsymbol{v}(t)+\boldsymbol{\mathsf{B}}_{d}\,d(t)+\boldsymbol{\mathsf{B}}_{u}\,u(t)+ \boldsymbol{\mathsf{N}} (\boldsymbol{v}(t)),\\ z(t) =\boldsymbol{\mathsf{C}}_{z}\,\boldsymbol{v}(t), \end{gathered}\right\}\end{equation}

where ![]() $\boldsymbol{\mathsf{N}}$ represents the nonlinear operator. In terms of the time marching of (3.3), we adopt a third-order semi-implicit Runge–Kutta scheme proposed by Kar (Reference Kar2006) and also used by Bucci et al. (Reference Bucci, Semeraro, Allauzen, Wisniewski, Cordier and Mathelin2019) and Zeng & Graham (Reference Zeng and Graham2021), in which the nonlinear and external forcing terms are time-marched explicitly, while the linear term is marched implicitly using a trapezoidal rule.

$\boldsymbol{\mathsf{N}}$ represents the nonlinear operator. In terms of the time marching of (3.3), we adopt a third-order semi-implicit Runge–Kutta scheme proposed by Kar (Reference Kar2006) and also used by Bucci et al. (Reference Bucci, Semeraro, Allauzen, Wisniewski, Cordier and Mathelin2019) and Zeng & Graham (Reference Zeng and Graham2021), in which the nonlinear and external forcing terms are time-marched explicitly, while the linear term is marched implicitly using a trapezoidal rule.

It should be noted that the models given by ![]() $\boldsymbol{\mathsf{A}}$,

$\boldsymbol{\mathsf{A}}$, ![]() $\boldsymbol{\mathsf{B}}_{u}$ and

$\boldsymbol{\mathsf{B}}_{u}$ and ![]() $\boldsymbol{\mathsf{C}}_{z}$ are only for the purpose of numerical simulations, but not for the controller design. A DRL-based controller has no awareness of the existence of such models; it learns the control law from scratch through interacting with the environment and is thus model-free and data-driven, unlike the model-based controllers whose design depends specifically on such models.

$\boldsymbol{\mathsf{C}}_{z}$ are only for the purpose of numerical simulations, but not for the controller design. A DRL-based controller has no awareness of the existence of such models; it learns the control law from scratch through interacting with the environment and is thus model-free and data-driven, unlike the model-based controllers whose design depends specifically on such models.

3.2. Direct numerical simulation of the 2-D Blasius boundary layer

Flow simulations are performed to solve (2.9a,b) in the computational domain ![]() $\varOmega$ using the open source Nek5000 solver (Fischer, Lottes & Kerkemeier Reference Fischer, Lottes and Kerkemeier2017). The spatial discretisation is implemented using the spectral element method, where the velocity space in each element is spanned by the

$\varOmega$ using the open source Nek5000 solver (Fischer, Lottes & Kerkemeier Reference Fischer, Lottes and Kerkemeier2017). The spatial discretisation is implemented using the spectral element method, where the velocity space in each element is spanned by the ![]() $K$th-order Legendre polynomial interpolants based on the Gauss–Lobatto–Legendre quadrature points. According to the mesh convergence study (not shown), we finally choose a specific mesh composed of 870 elements of the order

$K$th-order Legendre polynomial interpolants based on the Gauss–Lobatto–Legendre quadrature points. According to the mesh convergence study (not shown), we finally choose a specific mesh composed of 870 elements of the order ![]() $K = 7$, with the local refinement implemented close to the wall. In terms of the time integration, the two-step backward differentiation scheme is adopted in the unsteady simulations, with time step

$K = 7$, with the local refinement implemented close to the wall. In terms of the time integration, the two-step backward differentiation scheme is adopted in the unsteady simulations, with time step ![]() $5 \times 10^{-2}$ unit times, and the initial flow field is constructed from similarity solutions of the boundary layer equations.

$5 \times 10^{-2}$ unit times, and the initial flow field is constructed from similarity solutions of the boundary layer equations.

3.3. Deep reinforcement learning

In the context of a DRL-based method, the control agent learns a specific control policy via interaction with the environment. The DRL framework in this work is shown in figure 4, where the agent is represented by an artificial neural network and the environment corresponds to the numerical simulation of the 1-D KS equation or the 2-D boundary layer, as explained above. In general, the DRL works as follows: first, the agent receives states ![]() $s_t$ from the environment; then an action

$s_t$ from the environment; then an action ![]() $a_t$ is determined based on the states and is exerted on the environment; finally, the environment returns a reward signal

$a_t$ is determined based on the states and is exerted on the environment; finally, the environment returns a reward signal ![]() $r_t$ to evaluate the quality of the previous actions. This loop continues until the training process converges, i.e. the expected cumulative reward is maximised for each training episode.

$r_t$ to evaluate the quality of the previous actions. This loop continues until the training process converges, i.e. the expected cumulative reward is maximised for each training episode.

Figure 4. The reinforcement learning framework in flow control. The agent is an artificial neural network; the environment is a numerical simulation of the 1-D KS equation or 2-D boundary layer flow. Action is adjustment of the external forcing; reward is reduction of the downstream perturbation; and state is streamwise velocities collected by sensors.

In the current setting of both KS equation and boundary layer flows, states refer to the streamwise velocities collected by sensors. Since the amplitude of velocity differs at different locations, states are normalised by their respective mean values and standard deviations before they are input to the agent. The number and position of sensors are determined from an optimisation process to be detailed in § 4.5. Action corresponds to ![]() $u(t)$ in both (2.6) and (2.10), with predefined ranges

$u(t)$ in both (2.6) and (2.10), with predefined ranges ![]() $[-5, 5]$ and

$[-5, 5]$ and ![]() $[-0.01, 0.01]$, respectively. Each control action is exerted on the environment for a duration of 30 numerical time steps before the next update. This operation is the so-called sticky action, which is a common choice in DRL-based flow control (Rabault & Kuhnle Reference Rabault and Kuhnle2019; Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019; Beintema et al. Reference Beintema, Corbetta, Biferale and Toschi2020). Allowing quicker actions may be detrimental to the overall performance since the action needs some time to make an impact on the environment (Burda et al. Reference Burda, Edwards, Pathak, Storkey, Darrell and Efros2018). The last crucial component in the DRL framework is reward, which is related directly to the control objective. For the 1-D KS system, the reward is defined as the negative perturbation amplitude measured by the output sensor, i.e. the negative root-mean-square (r.m.s.) value of

$[-0.01, 0.01]$, respectively. Each control action is exerted on the environment for a duration of 30 numerical time steps before the next update. This operation is the so-called sticky action, which is a common choice in DRL-based flow control (Rabault & Kuhnle Reference Rabault and Kuhnle2019; Rabault et al. Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019; Beintema et al. Reference Beintema, Corbetta, Biferale and Toschi2020). Allowing quicker actions may be detrimental to the overall performance since the action needs some time to make an impact on the environment (Burda et al. Reference Burda, Edwards, Pathak, Storkey, Darrell and Efros2018). The last crucial component in the DRL framework is reward, which is related directly to the control objective. For the 1-D KS system, the reward is defined as the negative perturbation amplitude measured by the output sensor, i.e. the negative root-mean-square (r.m.s.) value of ![]() $z(t)$ in (2.7), while for the 2-D boundary layer flow, the reward is defined as the negative local perturbation energy measured around point

$z(t)$ in (2.7), while for the 2-D boundary layer flow, the reward is defined as the negative local perturbation energy measured around point ![]() $z$, i.e.

$z$, i.e. ![]() $-z(t)$ in (2.12). However, due to the time delay effect in convective flows, the instantaneous reward is not a real response to the current action. To remedy the time mismatch, we store the simulation data first and retrieve them later after the action has reached the output sensor. This operation helps the agent to perceive the dynamics of the environment in a time-matched way, and thus leads to a good training result. For details on the time delay and how to remove it, the reader can refer to Appendix B. In addition, we also consider a stability-enhanced design for the reward function, which will penalise the flow instability and further improve the control performance (Appendix C).

$-z(t)$ in (2.12). However, due to the time delay effect in convective flows, the instantaneous reward is not a real response to the current action. To remedy the time mismatch, we store the simulation data first and retrieve them later after the action has reached the output sensor. This operation helps the agent to perceive the dynamics of the environment in a time-matched way, and thus leads to a good training result. For details on the time delay and how to remove it, the reader can refer to Appendix B. In addition, we also consider a stability-enhanced design for the reward function, which will penalise the flow instability and further improve the control performance (Appendix C).

In terms of the training algorithm for the agent, since the action space is continuous, we adopt a policy-based algorithm named the deep deterministic policy gradient (DDPG), which is essentially a typical actor–critic network structure (Sutton & Barto Reference Sutton and Barto2018). This method has also been used in other DRL works (Koizumi et al. Reference Koizumi, Tsutsumi and Shima2010; Bucci et al. Reference Bucci, Semeraro, Allauzen, Wisniewski, Cordier and Mathelin2019; Zeng & Graham Reference Zeng and Graham2021; Kim et al. Reference Kim, Kim, Kim and Lee2022; Pino et al. Reference Pino, Schena, Rabault, Kuhnle and Mendez2022). The actor network ![]() $\mu _{\phi }(s)$, also known as the policy network, is parametrised by

$\mu _{\phi }(s)$, also known as the policy network, is parametrised by ![]() $\phi$ and outputs an action

$\phi$ and outputs an action ![]() $a$ to be applied to the environment for a given state

$a$ to be applied to the environment for a given state ![]() $s$. The aim of the actor is to find an optimal policy that maximises the expected cumulative reward, which is predicted by the critic network

$s$. The aim of the actor is to find an optimal policy that maximises the expected cumulative reward, which is predicted by the critic network ![]() $Q_{\theta }(s, a)$ parametrised by

$Q_{\theta }(s, a)$ parametrised by ![]() $\theta$. The loss function

$\theta$. The loss function ![]() $L(\theta )$ for updating the parameters of the critic reads

$L(\theta )$ for updating the parameters of the critic reads

where ![]() $\mathbb {E}$ represents the expectation of all transition data

$\mathbb {E}$ represents the expectation of all transition data ![]() $(s, a, r, s^{\prime })$ in sequence

$(s, a, r, s^{\prime })$ in sequence ![]() $\mathcal {D}$;

$\mathcal {D}$; ![]() $s$,

$s$, ![]() $a$ and

$a$ and ![]() $r$ are the state, action and reward for the current step, respectively, and

$r$ are the state, action and reward for the current step, respectively, and ![]() $s^{\prime }$ is the state for the next step. The right-hand side of the equation calculates the typical error in reinforcement learning, i.e. temporal-difference error, and

$s^{\prime }$ is the state for the next step. The right-hand side of the equation calculates the typical error in reinforcement learning, i.e. temporal-difference error, and ![]() $\gamma$ is the discount factor selected as 0.95 here. The objective function

$\gamma$ is the discount factor selected as 0.95 here. The objective function ![]() $L(\phi )$ for updating the parameters of the actor is obtained straightforwardly as

$L(\phi )$ for updating the parameters of the actor is obtained straightforwardly as

It should be noted that although the actor aims to maximise the ![]() $Q$ value predicted by the critic, only gradient descent rather than gradient ascent is embedded in the adopted optimiser. Thus a negative sign is introduced on the right-hand side of (3.5).

$Q$ value predicted by the critic, only gradient descent rather than gradient ascent is embedded in the adopted optimiser. Thus a negative sign is introduced on the right-hand side of (3.5).

In addition, some technical tricks such as experience memory replay and a combination of evaluation net and target net are embedded in the DDPG algorithm, in order to cut off the correlation among data and improve the stability of training. More technical details on DDPG can be found in Silver et al. (Reference Silver, Lever, Heess, Degris, Wierstra and Riedmiller2014) and Lillicrap et al. (Reference Lillicrap, Hunt, Pritzel, Heess, Erez, Tassa, Silver and Wierstra2015). For other hyperparameters used in the current study, the reader can refer to Appendix B.

3.4. Particle swarm optimisation

As mentioned earlier, sensors are used to collect flow information from the environment as feedback to the DRL-based controller. Therefore, the sensor placement plays an important role in determining the final control performance. Some studies investigated the optimal sensor placement issue in the context of flow control using an adjoint-based gradient descent method. In these studies, gradients of the objective function with respect to the sensor positions can be obtained exactly using explicit equations involved in the model-based controllers (Chen & Rowley Reference Chen and Rowley2011; Belson et al. Reference Belson, Semeraro, Rowley and Henningson2013; Oehler & Illingworth Reference Oehler and Illingworth2018; Sashittal & Bodony Reference Sashittal and Bodony2021). However, in our work, DRL-based control is model-free and such gradient information is unavailable. Therefore, we adopt a gradient-free method called particle swarm optimisation (PSO) to determine the optimal sensor placement in DRL.

PSO is a type of evolutionary optimisation method that mimics the bird flock preying behaviour and was originally proposed by Kennedy & Eberhart (Reference Kennedy and Eberhart1995). For an optimisation problem expressed by

where ![]() $y$ is the objective function and

$y$ is the objective function and ![]() $x_1, x_2,\ldots,x_D$ are

$x_1, x_2,\ldots,x_D$ are ![]() $D$ design variables, PSO trains a swarm of particles that move around in the searching space bounded by the lower and upper boundaries, and update their positions iteratively according to the rule

$D$ design variables, PSO trains a swarm of particles that move around in the searching space bounded by the lower and upper boundaries, and update their positions iteratively according to the rule

where ![]() $v_{i d}^{k}$ is the

$v_{i d}^{k}$ is the ![]() $d$th-dimensional velocity of particle

$d$th-dimensional velocity of particle ![]() $i$ at the

$i$ at the ![]() $k$th iteration, and

$k$th iteration, and ![]() $x_{i d}^{k}$ is the corresponding position. The first term on the right-hand side of (3.7a) is the inertial term, representing its memory on the previous state, with

$x_{i d}^{k}$ is the corresponding position. The first term on the right-hand side of (3.7a) is the inertial term, representing its memory on the previous state, with ![]() $w$ being the weight. The second term is called self-cognition, representing learning from its own experience, where

$w$ being the weight. The second term is called self-cognition, representing learning from its own experience, where ![]() $p_{i d}^{best}$ is the best

$p_{i d}^{best}$ is the best ![]() $d$th-dimensional position that the particle

$d$th-dimensional position that the particle ![]() $i$ has ever found for the minimum

$i$ has ever found for the minimum ![]() $y$. The third term is called social cognition, representing learning from other particles, where

$y$. The third term is called social cognition, representing learning from other particles, where ![]() $s_{d}^{best}$ is the best

$s_{d}^{best}$ is the best ![]() $d$th-dimensional position among all the particles in the swarm. In (3.7b),

$d$th-dimensional position among all the particles in the swarm. In (3.7b), ![]() $c_1$ and

$c_1$ and ![]() $c_2$ are scaling factors, and

$c_2$ are scaling factors, and ![]() $r_1$ and

$r_1$ and ![]() $r_2$ are cognitive coefficients. After the iteration process converges, these particles will gather near a specific position in the search space, and this is the optimised solution.

$r_2$ are cognitive coefficients. After the iteration process converges, these particles will gather near a specific position in the search space, and this is the optimised solution.

For the optimal sensor placement in our case, design variables in (3.6) are sensor positions ![]() $x_1, x_2,\ldots, x_n$ (where

$x_1, x_2,\ldots, x_n$ (where ![]() $n$ is the number of sensors), and the objective function is related to the DRL-based control performance given the current sensor placement. As shown in figure 5, a specific sensor placement is input to the DRL-based control framework. The DRL training is then executed for 350 episodes – this number will be explained in § 4.2. For each episode, we keep a record of the absolute reward values for the action sequence, and take the average of them as the objective function for this episode, denoted by

$n$ is the number of sensors), and the objective function is related to the DRL-based control performance given the current sensor placement. As shown in figure 5, a specific sensor placement is input to the DRL-based control framework. The DRL training is then executed for 350 episodes – this number will be explained in § 4.2. For each episode, we keep a record of the absolute reward values for the action sequence, and take the average of them as the objective function for this episode, denoted by ![]() $r_a$. In this sense, the quantity

$r_a$. In this sense, the quantity ![]() $r_a$ is representative of the average perturbation amplitude monitored at the downstream location

$r_a$ is representative of the average perturbation amplitude monitored at the downstream location ![]() $x = 700$ for one training episode. In addition, the upstream noise is random and varies for different training episodes, so we take the average of

$x = 700$ for one training episode. In addition, the upstream noise is random and varies for different training episodes, so we take the average of ![]() $r_a$ from the 10 best training episodes, denoted by

$r_a$ from the 10 best training episodes, denoted by ![]() $r_b$, as a good approximation to the test control performance. Then

$r_b$, as a good approximation to the test control performance. Then ![]() $r_b$ is used as the objective function (

$r_b$ is used as the objective function (![]() $y$) in (3.6) in the PSO algorithm based on the Pyswarm package developed by Miranda (Reference Miranda2018). The swarm size is selected as 50, and all the scaling factors are set by default as 0.5. After a number of iterations, the algorithm converges and the optimal sensor placement is found. The results in §§ 4.2–4.4 are based on the optimal sensor placement, and the optimisation process is presented in § 4.5.

$y$) in (3.6) in the PSO algorithm based on the Pyswarm package developed by Miranda (Reference Miranda2018). The swarm size is selected as 50, and all the scaling factors are set by default as 0.5. After a number of iterations, the algorithm converges and the optimal sensor placement is found. The results in §§ 4.2–4.4 are based on the optimal sensor placement, and the optimisation process is presented in § 4.5.

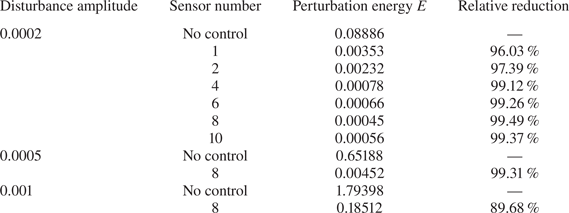

Figure 5. Schematic diagram for sensor placement optimisation. Design variables are ![]() $n$ sensor positions

$n$ sensor positions ![]() $x_1, x_2,\ldots, x_n$. The objective function is the DRL-based control performance quantified by the average absolute value of reward

$x_1, x_2,\ldots, x_n$. The objective function is the DRL-based control performance quantified by the average absolute value of reward ![]() $r_b$ from the 10 best episodes among all the 350 episodes.

$r_b$ from the 10 best episodes among all the 350 episodes.

4. Results and discussion

4.1. Dynamics of the 1-D linearised KS equation

As introduced in § 2, the 1-D linearised KS equation is an idealised equation for modelling the perturbation evolving in a Blasius boundary layer flow, with features such as non-normality, convective instability and a large time delay. Without control, the spatiotemporal dynamics of the KS equation subjected to upstream Gaussian white noise is presented in figure 6(a), where the perturbation is amplified significantly while travelling downstream, and the pattern of parallel slashes demonstrates the presence of a time delay. Figure 6(b) presents the temporal signal of noise with unit variance, and figure 6(c) displays the output signal at ![]() $x=700$.

$x=700$.

Figure 6. Dynamics of the 1-D linearised KS equation when subject to Gaussian white noise with unit variance: (a) spatiotemporal response of the system; (b) temporal signal of noise input ![]() $d(t)$ at

$d(t)$ at ![]() $x = 35$; (c) output signal

$x = 35$; (c) output signal ![]() $z(t)$ measured by sensor at

$z(t)$ measured by sensor at ![]() $x = 700$. The blue arrow represents input, and the red arrow represents output.

$x = 700$. The blue arrow represents input, and the red arrow represents output.

Due to the existence of linear instability in the flow, the amplitude of perturbation grows exponentially along the ![]() $x$-direction, which is verified by the black curve in figure 7(b), where the r.m.s. value of perturbation calculated by

$x$-direction, which is verified by the black curve in figure 7(b), where the r.m.s. value of perturbation calculated by

is plotted along the 1-D domain, and here ![]() $\overline {v^{\prime }(x)}$ represents the time average of the perturbation velocity at a particular position

$\overline {v^{\prime }(x)}$ represents the time average of the perturbation velocity at a particular position ![]() $x$. In addition, by comparing figures 6(b) and 6(c), we find that the perturbation amplitude increases but some frequencies are filtered by the system. This is because when a white noise is introduced, a broadband of frequencies are excited. However, only frequencies corresponding to a certain range of wavelengths are unstable and amplified in the flow. This is the main trait of a convectively unstable flow, plus the nonlinearity of the flow system, rendering it difficult for flow control. Such a flow is usually coined as a noise amplifier in the hydrodynamic stability context (Huerre & Monkewitz Reference Huerre and Monkewitz1990).

$x$. In addition, by comparing figures 6(b) and 6(c), we find that the perturbation amplitude increases but some frequencies are filtered by the system. This is because when a white noise is introduced, a broadband of frequencies are excited. However, only frequencies corresponding to a certain range of wavelengths are unstable and amplified in the flow. This is the main trait of a convectively unstable flow, plus the nonlinearity of the flow system, rendering it difficult for flow control. Such a flow is usually coined as a noise amplifier in the hydrodynamic stability context (Huerre & Monkewitz Reference Huerre and Monkewitz1990).

Figure 7. DRL-based control for reducing the downstream perturbation evolving in the 1-D linearised KS equation. (a) Average perturbation amplitude at point ![]() $z$ recorded during the training process, where the red dashed line denotes the time when the experience memory is full. (b) The r.m.s. value of perturbation along the 1-D domain plotted for both cases, with and without control.

$z$ recorded during the training process, where the red dashed line denotes the time when the experience memory is full. (b) The r.m.s. value of perturbation along the 1-D domain plotted for both cases, with and without control.

4.2. DRL-based control of the KS system

In this subsection, we present the performance of DRL-based control on reducing the downstream perturbation evolving in the KS system. The training process is shown in figure 7(a), where the average perturbation amplitude at point ![]() $z$ (

$z$ (![]() $x = 700$; see figure 2) is plotted for all the training episodes. In the initial stage of training, the perturbation amplitude is large and shows no sign of decreasing, which corresponds to the filling process of experience memory in the DDPG algorithm. Once the memory is full (denoted by the red dashed line in figure 7a), the learning process begins and the amplitude decreases significantly to a value near zero, and remains almost unchanged after 350 episodes, indicating that the policy network has converged and the optimal control policy has been learnt. Note that this explains the periodical training of 350 episodes when we implement the PSO algorithm, as mentioned in § 3.4. Then we test the learnt policy by applying it to the KS environment for 10 000 time steps, with both the external disturbance input

$x = 700$; see figure 2) is plotted for all the training episodes. In the initial stage of training, the perturbation amplitude is large and shows no sign of decreasing, which corresponds to the filling process of experience memory in the DDPG algorithm. Once the memory is full (denoted by the red dashed line in figure 7a), the learning process begins and the amplitude decreases significantly to a value near zero, and remains almost unchanged after 350 episodes, indicating that the policy network has converged and the optimal control policy has been learnt. Note that this explains the periodical training of 350 episodes when we implement the PSO algorithm, as mentioned in § 3.4. Then we test the learnt policy by applying it to the KS environment for 10 000 time steps, with both the external disturbance input ![]() $d(t)$ and control input

$d(t)$ and control input ![]() $u(t)$ turned on.

$u(t)$ turned on.

The spatiotemporal response of the controlled KS system is shown in figure 8(a), subjected to the noise input as shown in figure 8(b). Compared with that in figure 6(a), the downstream perturbation is significantly suppressed after the implementation of control action at ![]() $x = 400$ starting from

$x = 400$ starting from ![]() $t = 1450$ (denoted by the blue dashed line). By observing figures 8(c) and 8(d), we find that there is a short time delay between the control starting time and the time when the downstream perturbation

$t = 1450$ (denoted by the blue dashed line). By observing figures 8(c) and 8(d), we find that there is a short time delay between the control starting time and the time when the downstream perturbation ![]() $z(t)$ starts to decline. This is due to the convective nature that the action needs some time to make an impact downstream. In addition, we also plot the r.m.s. value of perturbation along the 1-D domain, as shown by the blue curve in figure 7(b). In contrast to the originally exponential growth, with the DRL-based control, the perturbation amplitude is largely reduced downstream of the control position (

$z(t)$ starts to decline. This is due to the convective nature that the action needs some time to make an impact downstream. In addition, we also plot the r.m.s. value of perturbation along the 1-D domain, as shown by the blue curve in figure 7(b). In contrast to the originally exponential growth, with the DRL-based control, the perturbation amplitude is largely reduced downstream of the control position (![]() $x = 400$). For instance, the amplitude at

$x = 400$). For instance, the amplitude at ![]() $x = 700$ is reduced from about 20 to 0.03, which demonstrates the effectiveness of the DRL-based method in controlling the perturbation evolving in the 1-D KS system.

$x = 700$ is reduced from about 20 to 0.03, which demonstrates the effectiveness of the DRL-based method in controlling the perturbation evolving in the 1-D KS system.

Figure 8. Dynamics of the 1-D linearised KS equation with both the external disturbance and control inputs: (a) spatiotemporal response of the system; (b) temporal signal of noise input ![]() $d(t)$ at

$d(t)$ at ![]() $x = 35$; (c) temporal signal of control input

$x = 35$; (c) temporal signal of control input ![]() $u(t)$ at

$u(t)$ at ![]() $x = 400$ provided by the DRL agent; (d) output signal

$x = 400$ provided by the DRL agent; (d) output signal ![]() $z(t)$ measured by sensor at

$z(t)$ measured by sensor at ![]() $x = 700$. The blue arrows represent inputs, and the red arrow represents output; the blue dashed line denotes the control starting time.

$x = 700$. The blue arrows represent inputs, and the red arrow represents output; the blue dashed line denotes the control starting time.

4.3. Comparison between DRL and model-based LQR

In order to better evaluate the performance of DRL applied to the 1-D KS equation, we compare the DRL-controlled results with those of the classical linear quadratic regulator (LQR) method. The LQR method for controlling the KS equation has been explained in detail in Fabbiane et al. (Reference Fabbiane, Semeraro, Bagheri and Henningson2014). For the basics of LQR, the reader may consult Appendix D.

Ideally, if there are no action bounds imposed on LQR, then it will generate the optimal control performance as shown in the red curves in figure 9(a), and we call it the ideal LQR control. It is shown that the perturbation amplitude downstream of the actuator position is reduced dramatically, and the corresponding action happens to be in the range ![]() $[-5, 5]$. Thus for a consistent comparison of LQR and DRL, we apply the same action bound

$[-5, 5]$. Thus for a consistent comparison of LQR and DRL, we apply the same action bound ![]() $[-5, 5]$ to DRL-based control, and present the resulting control performance as blue curves. It is found that the DRL-based control is slightly better than the LQR control in reducing the downstream perturbation, as shown in the right-hand plot of figure 9(a). Despite the fact that in the near downstream region from

$[-5, 5]$ to DRL-based control, and present the resulting control performance as blue curves. It is found that the DRL-based control is slightly better than the LQR control in reducing the downstream perturbation, as shown in the right-hand plot of figure 9(a). Despite the fact that in the near downstream region from ![]() $x = 400$ to

$x = 400$ to ![]() $x = 470$, the amplitude of DRL-based control is higher than that of LQR control, the reduction of perturbation is more significant in the DRL control in a further downstream region.

$x = 470$, the amplitude of DRL-based control is higher than that of LQR control, the reduction of perturbation is more significant in the DRL control in a further downstream region.

Figure 9. Comparison between DRL-based control and LQR control when applied to the 1-D linearised KS system with different action bounds. In each panel, the top left plot presents the time-variation curve of the control action ![]() $u(t)$, and the bottom left plot presents the corresponding sensor output

$u(t)$, and the bottom left plot presents the corresponding sensor output ![]() $z(t)$; the right-hand plot shows the r.m.s. value of perturbation velocity along the 1-D domain. (a) Control performance of ideal LQR and of DRL with action bound