Introduction

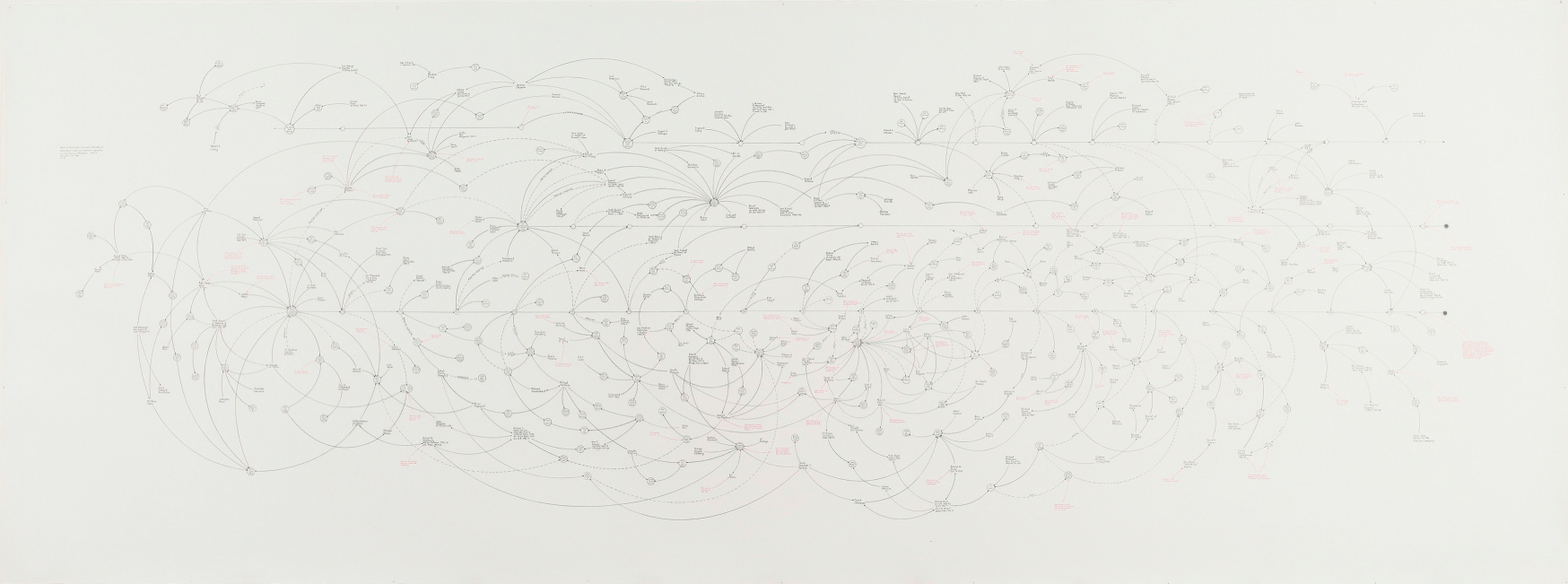

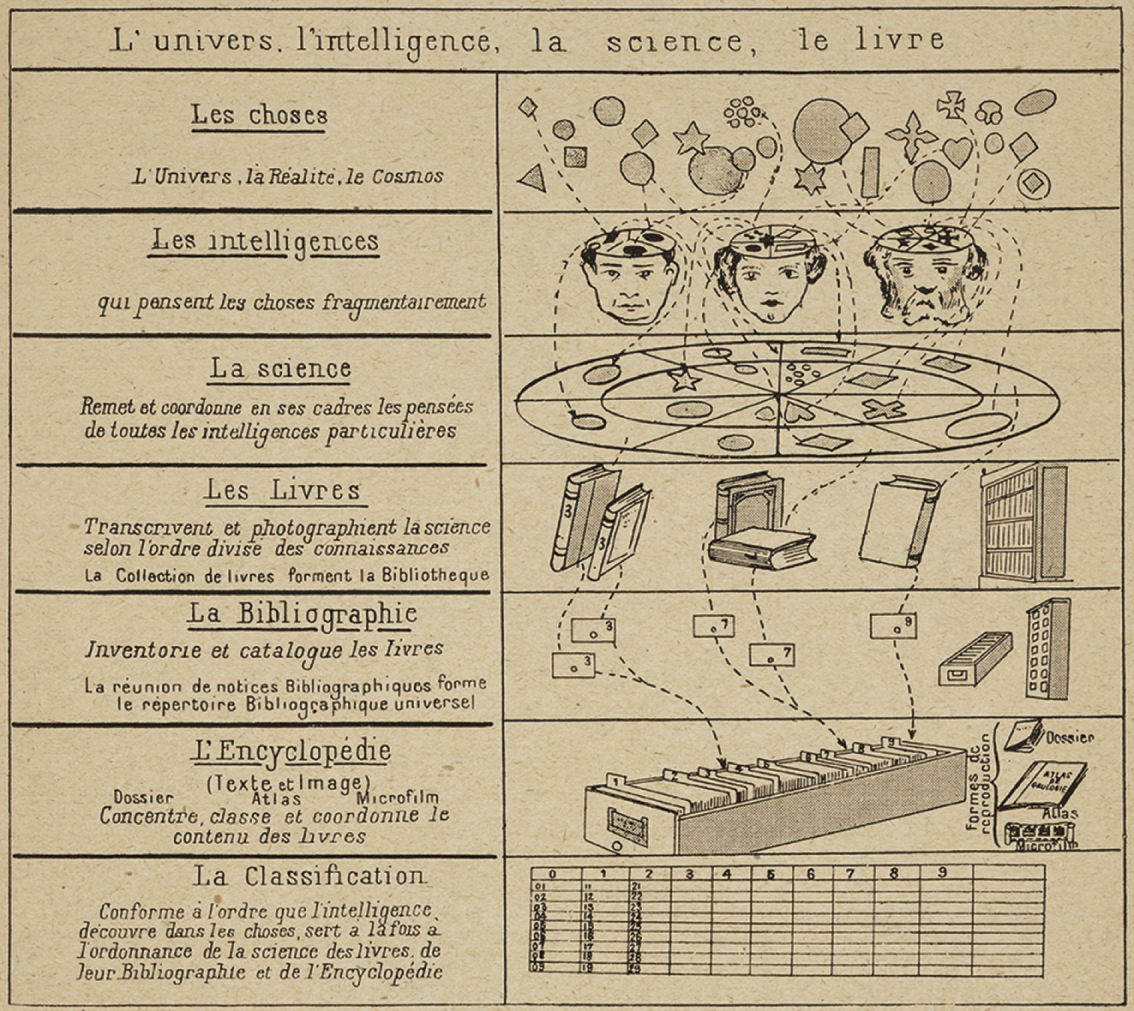

In the days following the 9/11 terror attack, an FBI agent visited the Whitney Museum of American Art to see Mark Lombardi’s 1996 drawing ‘BCCI-ICIC & FAB, 1972–91 (4th version)’ (Figure 1) (Reference HobbsHobbs, 2003: 11–12, 95–8). The web-like image comprises a meticulously researched diagram of individuals and groups with ties to a money-laundering organisation that operated under the name of the Bank of Commerce and Credit International (BCCI), which included Osama bin Laden and others associated with al-Qaeda. As Lombardi himself described, BCCI ‘was used not only by drug dealers and con men but also by the governments of the US, UK, Saudi Arabia and the Gulf Arab states to funnel support to Afghan guerrillas fighting Soviet occupation, to pay off friends and adversaries alike and conduct secret arms sales to Iran’ (Reference LombardiLombardi, 2001). In other words, in black and red ink, Lombardi traced a terrorist network that reached the centres of government. He had grasped the power of the network perspective to reveal conspiracy, adapting graphical traditions associated with the study of social networks developed in the first half of the twentieth century. Tragically, the significance of his research would be uncovered only after his death: in early 2002, a year after the artist’s suicide, the FBI’s Operation Green Quest raided the offices of several Virginia-based Islamic charities whose Saudi funders, including Mahfouz and prominent Bush backers, featured in Lombardi’s work (Reference GoldstoneGoldstone, 2015).

Figure 1 Lombardi, Mark (1951–2000), ‘BCCI-ICIC & FAB, 1972–91 (4th version)’ from the series BCCI, ICIC & FAB, 1996–2000. Graphite and coloured pencil on paper. New York, Whitney Museum of American Art.

Three years after Lombardi produced his artwork, Albert-László Barabási and Réka Albert published a scientific article entitled ‘Emergence of Scaling in Random Networks’ (Reference Barabási and AlbertBarabási & Albert, 1999). It argued that a wide variety of seemingly heterogeneous networks, such as power grids, social networks, and the World Wide Web, exhibit nearly identical distributions of connectivity, and it offered an elegant model that explained how these distributions might arise.Footnote 1 This particular distribution of connectivity was different from those most scientists expected at the time.Footnote 2 The significance of Barabási and Albert’s findings was that they provided a compelling case for analysing seemingly disparate systems and kinds of data using the same mathematical models and tools. For this reason, their article is regarded as one of the founding publications of the interdisciplinary field of modern network science. The argument for the application of analytical tools across domains was extended in Barabási’s best-selling book Linked, in which he argued that many challenges in our world, such as managing the spread of epidemics, fighting terrorism, and handling economic crises, can be cracked by understanding these systems as networks (Reference BarabásiBarabási, 2002). As such, networks appear in Barabási’s study as a kind of Rosetta Stone. This message reached 70,000 readers and thus played a small part in the rise of the ‘network’ perspective in the modern consciousness following the new millennium.

Lombardi and Barabási’s work is part of what we call the ‘network turn’. This turn cannot be attributed to either the artist or the scientist; they are but two examples of a whole host of converging thoughts and practices around the turn of the new millennium – the zeitgeist of the networked age. The World Wide Web had become available to the public only in 1991, but by 2004, the web-based view of relations manifested itself in an entirely new kind of communication platform when ‘TheFacebook’ was launched. The subsequent proliferation of social networking platforms has profoundly shaped the way we understand connectivity in the world today.

Another key driver of the network turn, highlighted by the FBI’s interest in Lombardi’s work, is terrorist activity – both in terms of the perceived threat of terrorist networks, and in the new technologies available to security agencies to mitigate against them. Following 9/11, using data-gathering approaches very similar to Lombardi’s combined with computational analysis, Valdis Krebs used public information and newspaper clippings to produce a partial map of the social network behind the attack. His network analysis showed that all nineteen of the hijackers were within two email or phone call connections of two al-Qaeda members already known to the CIA before the attack. According to three common network analysis metrics, the network’s most central figure was Muhammed Atta, who turned out to be the ringleader (Reference KrebsKrebs, 2001). Krebs’ findings raised the important question of whether the attack could have been predicted. Shortly after posting his analysis online, Krebs was invited to Washington, DC to brief intelligence contractors. The extent to which Krebs’ insight about the power of network analysis fed into the existing methods that intelligence agencies employed is hard to gauge, but by 2013, as the leak by Edward Snowden brought to light, the National Security Agency was engaged in massive-scale network analysis using data from nine internet providers.

The study and critique of networks has predominantly taken place within the domains of computer science and related scientific fields, the military, and the tech sector due to the scale of digital data being analysed and the nature of the investigations prompting their study. This book not only argues that arts and humanities scholars can use the same kind of visual and quantitative analysis of networks to shed light on the study of culture; it also contends that the critical skills native to humanistic inquiry are vital to the theorisation and critique of our networked world. Network analysis, as we define it in this book, is a set of practices and discourses that sit at the interface of the natural sciences, humanities, social sciences, computer science, and design. We contend that networks are a category of study that cuts across traditional academic boundaries and that has the potential to unite diverse disciplines through a shared understanding of complexity in our world – whether that complexity pertains to the nature of the interactions of proteins in gene-regulatory networks or to the network of textual variants that can reveal the lineage of a poem. Moreover, this shared framework provides a compelling case for collaboration across those boundaries, for bringing together computational tools for quantitative network analysis, together with theories, discourses, and applied techniques from the social sciences, the humanities, visual design, and art practice.

The cases of Lombardi and Barabási provide an instructive way of grasping that shared framework because, superficially, their work has very little in common. Barabási and Albert explicitly cite the computerisation of data acquisition as essential to their research. By contrast, Lombardi’s research process was analogue. He gathered his data on three-by-five notecards. There is no evidence that Lombardi read Barabási and Albert’s groundbreaking work in statistics and physics; rather, his inspiration was panorama and history painting. He used the term ‘narrative structures’ to describe his hand-drawn webs of connection. Produced through an iterative process of refinement, the work is human in scale, legible visually in its entirety. Perhaps more importantly, it is his interpretation of a carefully researched but inevitably incomplete record. It does not pretend to objectivity. In stark contrast, Barabási and Albert’s method is scientific: it proposes a model to predict the behaviour of systems and to understand complex topologies ‘independent of the system and the identity of its constituents’ (Reference Barabási and AlbertBarabási & Albert, 1999). Thus, where Lombardi is analysing past events, Barabási and Albert offer a predictive model; where Lombardi is visual, Barabási and Albert use algorithms designed to detect patterns in data sets too large or complex for the human eye to detect. These approaches seem to occupy two very separate worlds.

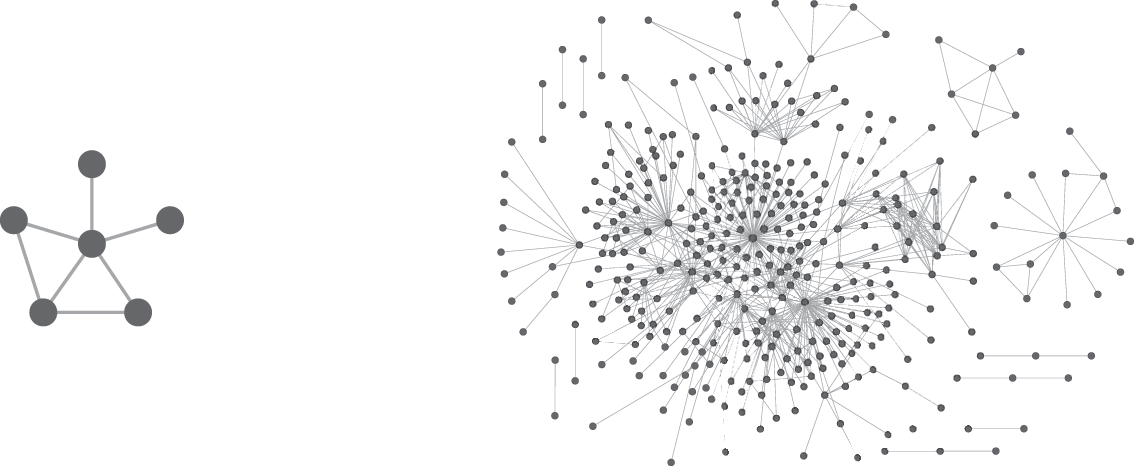

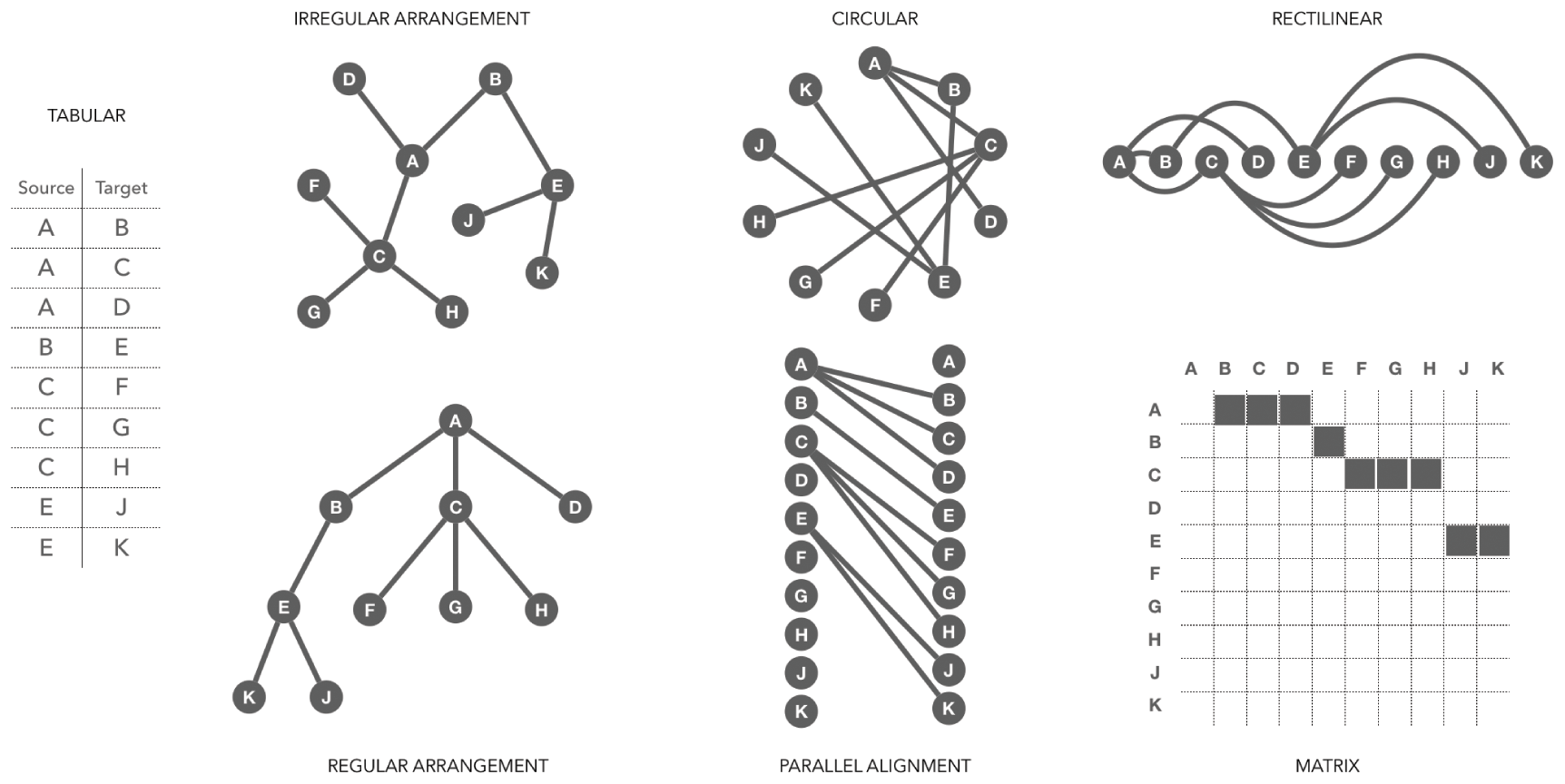

Nevertheless, Lombardi’s art and the scientific approaches of Barabási and Albert have much in common. Lombardi distils the composition of relationships in history painting and the comprehensive ‘at one view’ of the panorama into a formal abstraction rooted in the conceptual art movement of the mid-twentieth century, and reflects the overlapping concerns, discourses, and methods of art and science. The artist and scientists use connectivity to make sense out of data: a representation of knowledge that relies on abstraction. Both produce results that are seductive in their elegance and simplicity. Networks are by definition an abstraction into a system of nodes and edges. Nodes are entities; edges are the relationships between them. Two examples can be seen in Figure 2. Such an abstract system is inherently intuitive. These two elements, nodes and edges, are the simple building blocks of an obviously abbreviated rendering, a malleable geometry that can range in complexity from a direct and declarative schematic to a dense, indecipherable web of connections.

Figure 2 Networks consist of nodes and edges. On the left, a simple network of six nodes and seven edges. On the right, a more complex network (with several disjointed components) that depicts social relationships in a Protestant underground community during the reign of Queen Mary I of England (see Reference Ahnert and AhnertAhnert & Ahnert, 2015).

The worlds from which the artist and the scientists emerge have their own long genealogies. The standard history we tell for network science traces its lineage back through graph theory to Leonard Euler’s solution of the Königsberg Bridge problem in 1736. Similarly, we might argue that artists and humanities scholars have been engaging with network-analytic approaches for at least sixty years. However, these threads have visibly come together only in the past twenty years. In the first decade following the millennium, some pioneers began to apply the methods of network science to the study of cultural artefacts, but most scholars were still learning how to query web-based digitised archives without attention to the computer networks invisibly underpinning this virtual archival experience. However, since 2010 there has been a slow but steady increase in scholars in the arts and humanities employing network visualisation, social network analysis theory, and quantitative measures from network science to address their research questions. In addition to a rise in the number of publications invoking these methodologies, the clear demand for workshops and training in network visualisation and analysis tailored to arts and humanities scholars is evidence of these approaches gaining traction. Such work still tends to be a fringe activity, though, and suspicion among more traditional elements within the disciplines who have interpreted the computational tools and methods associated with network analysis as part of the incursion of scientific method into their domain, which has sometimes been conflated with the neoliberal takeover of the university.

This book does not call for arts and humanities scholars to accept unquestioningly frameworks and methods developed in the field of network science. Rather, it argues that the discourse and analysis of networks can move forward only through collaboration and exchange at the interface of computational method, humanistic inquiry, and design practice. The case for scholars from the arts and humanities engaging with networks is compelling on a number of levels. The use of computational network analysis can lead to the creation of new knowledge, and to the corroboration of theories. It makes it possible, with relative ease and speed, to measure the relationships between many entities in multiple ways, allowing a rich, multidimensional reading of complex systems never possible before. It has proven to be an effective tool for understanding metric data on a very large scale. A seemingly infinite number of calculations can be run on the resulting network to filter and parse that large-scale data, giving a more nuanced understanding of both the local and the global. The ability to analyse data across scales has been rendered increasingly necessary in light of the ever-growing quantity of information made available through the digitisation of our cultural artefacts. Networks further offer the ability to contextualise the large scale with the small and vice versa, breaking the explanatory chasm between part and whole.

Moreover, scholars from the arts and humanities already have the conceptual framework to make this leap: they have been writing about networks for centuries, albeit from the metaphorical perspective, examining communities of practitioners, the dissemination of ideas, or the relationships between certain texts, images, or artefacts. Although researchers with standard humanities training will likely need to acquire some new skills to engage with the computational challenges of network visualisation and quantitative analysis, we contend that they already have a set of skills that are key to the development of the interdisciplinary practice of network analysis. This is not just about receiving wholesale methods and theories developed in the computational and social sciences; rather, the critical skills developed in the arts and humanities are needed to complicate and nuance the current ways in which data are collected, modelled, and queried in the field of network science. Finally, we are at a moment in time when it is crucial that arts and humanities scholars engage critically with both the potential and the pitfalls of technological advancements. By offering an understanding of how networks work, we provide a much-needed framework to articulate how companies and governments can exploit the harnessing of large-scale data and advanced network analysis for the purposes of power, surveillance, and commercial gain.

This book is not a how-to manual: it does not provide instruction in the basics of network analysis or the use of ‘out-of-the-box’ tools, or an introduction to programming, as a plethora of other resources already do this.Footnote 3 Our aim here is more ideological. We seek to open up a space for exchange between the humanities, arts, and sciences – a space that is genuinely collaborative, that is mutually beneficial, and that recognises that networks present a mode of inquiry that draws on knowledge and practices from all these domains. Its combined brevity and breadth mean that it is not the final word, but rather a provocation. We hope this book will be a starting point for debate: not just in the digital humanities community (who are already used to situating themselves at the intersections of disciplines), and not just in the arts and humanities, but also in the natural, social, and computer sciences. It is the desire to engage and debate that motivated us to offer this book open access. Our most basic aim is to persuade colleagues in the arts and humanities of the value of networks as a conceptual and methodological framework that supplements (but does not replace) traditional methods of inquiry. But our intentions are broader than that: we hope for a sharing across domains to deepen our understanding of networks. That deepening is gained by combining world views we might attribute on one hand to Lombardi and on the other to Barabási, the combination of careful research and a choice of parameters at the human scale, that is not only coupled with but iteratively developed in tandem with the power of computational analysis.

Part of the argument for multiple perspectives is manifested in the way this book has been written. It is the product of a collaboration between a scholar of English literature, book history, and digital methods, a physicist specialising in network science, a historian of science concentrating in digital humanities, and a digital research architect with a background in design and tool development. We have not split the chapters among different authors; rather the arguments are the product of ongoing debate among the four of us over a period of three and a half years. Such a process of creation, like much of the work going on within network analysis more broadly, necessitates co-authorship. This is something that remains relatively rare in the arts and humanities. We seek to demonstrate the benefits of co-authorship, the insights and perspectives it brings, which can rarely be replicated by a single-authored work. It is not a shortcut or a faster route to publication. The process of gaining understanding, compromising, and iterating our arguments necessarily takes longer than writing a piece from a single viewpoint. However, we believe that process makes the work stronger. Genuine, deep sharing of ideas across disciplinary boundaries takes patience, goodwill, and a desire to learn and be challenged. We are therefore not simply arguing for a set of methodologies and discourses associated with the network framework. The network turn brings with it a set of research and publication practices that are inherently collaborative and dialogic.

The six chapters that follow are organised into three parts. Part I offers ‘Frameworks’ for understanding the methods developed in the natural, computational, and social sciences. To fully harness the analytical power of networks, we must first attend to the way a specific set of Western linguistic, disciplinary, and visual histories of networks frame the systems and phenomena we observe in the world, shaping, limiting, opening, and reorienting the questions we ask. Part II introduces ‘Cultural Networks’, giving an overview of the ways in which networks have already been used to examine cultural phenomena and artefacts, and the important role of design principles in both querying our data and communicating our research. Finally, Part III examines how network analysis provides a set of ‘Manoeuvres’: intellectual manoeuvres that refigure cultural objects in our minds as abstract systems of nodes and edges, mechanical manoeuvres that structure data and navigate input versus output, and manoeuvres between a landscape of abstraction and research questions that are steeped in contextual information. Taken together, these processes seek to dismantle the binaries between the ‘humanistic’ and the ‘scientific’ and, in so doing, create new norms of practice and inquiry. These new norms, however, are yet to be established. They must necessarily be shaped in ongoing collaboration and exchange. In the closing pages, we therefore suggest how different groups of scholars, practitioners, and professionals can direct the network turn as it becomes a standard part of our critical cultural apparatus.

Part I Frameworks

Networks represent more than a scientific method; they are a mindset shaped by a rich conceptual and visual history. To fully harness the analytical power of networks, we must first attend to the way these histories frame observable systems and phenomena in the world, shaping, limiting, opening, and reorienting the questions we ask.

1 Networks Are Always Metaphorical

In network science, researchers utilise networks as a formalised abstraction that permits computational analysis. In the humanities, by comparison, scholars largely employ networks as a metaphor. Despite these methodological differences, there are important continuities between the act of abstraction and use of metaphor. George Lakoff and Mark Johnson argue that metaphors are not just linguistic embellishment, but rather provide a conceptual framework that structures our most basic understandings of the world (Reference Lakoff and JohnsonLakoff & Johnson, 1980). Lakoff and Raphael Núñez later applied the framework of conceptual metaphor to the domain of mathematics:

Conceptual metaphor is a cognitive mechanism for allowing us to reason about one kind of thing as if it were another … It is a grounded, inference-preserving cross-domain mapping – a neural mechanism that allows us to use the inferential structure of one conceptual domain (say, geometry) to reason about another (say, arithmetic).

This is precisely how networks are used in the sciences. To the scientist a network is an abstract object, a collection of pairwise relationships (termed ‘edges’, ‘links’, or ‘arcs’) between defined entities (termed ‘nodes’ or ‘vertices’). What receives surprisingly little attention in scientific network literature is the definition of those entities and relationships, or, in other words, the process of abstraction from the real world to the network representation. Network science as a field takes the abstract network as a starting point; the process of abstraction often belongs to another domain, namely that in which the network data originates. A historical correspondence network originates in the domain of history, a network of neurons in the domain of neuroscience. Because the process of abstraction leads us across disciplinary boundaries, both the original domain and network science often neglect it. To see the network as a metaphor, by contrast, we have to be fully aware of the process of abstraction: what information it prioritises and what the abstraction elides.

This chapter argues that researchers who employ networks as a metaphor (traditionally those in the arts and humanities) ought to be familiar with the mathematical formalisations. Conversely, scholars wedded to the computational power of quantitative network analysis should be aware that its power derives from its reliance on the metaphorical dimension and an act of interpretation. For this reason we sketch the Western cultural history of the network as a concept, tracing its etymology and its acceptance as an inferential structure that enables interrogation and discovery. Undertaking such a task, which spans centuries as well as disciplines, in just a handful of pages means that the resulting account is also necessarily an abstraction. Moreover, it relies on readily available sources such as the Oxford English Dictionary (OED) and the Google Books corpus, which bring with them their own set of biases – not least an anglophone focus. However, even with the partiality and brevity of our rendering, the shifting applications of the word ‘network’ chart a series of changing views on the organisation of our world and how we can begin to understand it.

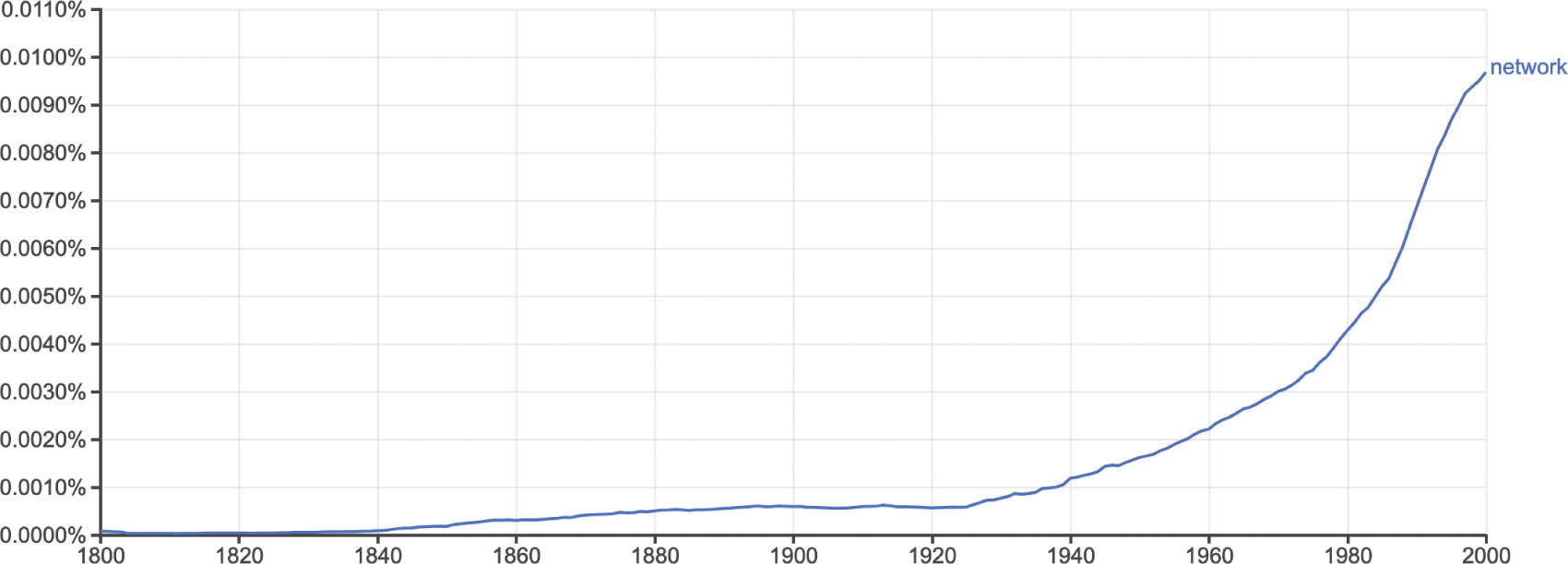

Importantly, taking such an approach removes the narrative of novelty from networks. It is easy to think of the network as a modern concept, and it is certainly the impression we take away if we rely solely on the snapshot of the word’s usage provided by Google Ngrams (see Figure 3). The Ngram shows limited usage in the nineteenth century, an upward curve beginning around 1920, and a sharp uptick after 1980; only isolated references occur before. However, the problems of the Google Books corpus for nuanced linguistic analysis are by now well documented, including the impact of optical character recognition (OCR) errors, the over-representation of scientific literature, messy metadata, the equal weight assigned to each book regardless of its literary impact, and the compounded bias of aggregated source libraries (Reference Pechenick, Danforth and DoddsPechenick, Danforth, & Dodds, 2015). The latter means that a single, prolific author can noticeably insert new phrases into the Google Books lexicon, whether the author is widely read or not.

Figure 3 Frequency of the word ‘network’ in the English Google Books corpus between 1800 and 2000, generated using the Google Ngrams tool, with smoothing parameter set to 3.

A complementary view is provided by the OED which lets us see some isolated data points from before 1800 in context. The earliest cited usage is in William Tyndale’s 1530 translation of the Pentateuch: ‘And he made a brasen gredyren of networke’ (OED, ‘network’, n. 1.a). In this context the words describe a physical work, a gridiron (‘gredyren’), which is constructed from parallel bars, crossed or interlaced in the fashion of a net. Mail armour was formed as a network, and the term was also used to describe fabric during the early modern period. The component words, ‘net’ and ‘work’, are from common Germanic stock; the composite, however, seems to be an English coinage, although it has made its way into numerous languages including Danish (netværk), Dutch (netwerk), German (Netzwerk), Maltese (netwerk), Norwegian (nettverk), and Swedish (nätverk). The word for network in certain other languages carries the same lineage from the word for the material act of weaving nets. For example, the Italian for network is rete, which comes from the Latin rete, meaning ‘net’ (the same root as red in Spanish, rede in Portuguese and Galician, and rețea in Romanian). In English, the root ‘ret’ forms the basis of ‘reticulation’ (a pattern of interlacing lines) and ‘retina’ (the regular net-like arrangement of blood vessels in the eye). The etymology of ‘network’ in both Germanic and Romance languages, therefore, contains a set of assumptions about structure, pattern, order, and distribution. In many cases, a maker or designer is implied.

It is important to recognise these assumptions when we communicate across disciplinary boundaries because the kinds of complex systems the word ‘network’ is now more often used to describe do not necessarily share the ordered woven structural features of mail armour or gridirons. In the modern language of networks, these particular material forms might be referred to instead as lattices, a specific subcategory of networks. The development of the word ‘network’ as a metaphor for systems that have very different patterns of distribution from fabric or mail armour can be seen from at least the seventeenth century, when it was used to describe the system of arteries, veins, and capillaries in humans and animals – what we might describe as rhizomatic structures. Later, as people recognised these patterns in both natural and artificial systems, the word ‘network’ came to represent systems of interconnection in general.

The evolution of the concept to denote the physical infrastructures for the distribution of people, merchandise, and electricity to consumers follows the construction of those systems relatively swiftly. For example, the first purpose-built passenger railway, the Liverpool and Manchester Railway, was authorised by an Act of Parliament in 1826, and by 1836 nearly 400 miles of track had opened in England. During this period, competition emerged regarding different scales of gauge, in response to which Thornton Hunt published a tract in 1846 entitled Unity of the Iron Network; Showing How the Last Argument for the Break of Gauge, Competition, Is at Variance with the True Interests of the Public. ‘Iron network’ might be described as an allusive rather than descriptive metaphor (e.g. railway network). The decision to use this as the main title therefore suggests that the metaphor was already an established way of describing the rail system. Similarly, the idea of investing in a central plant and network to deliver electricity to customers was first acted upon in the late 1870s, and by 1883 references appear in technical journal articles to networks of conductors in the construction of street mains electricity (OED, ‘network’, n. 4.b.).

In the twentieth century, we see the emergence of domain-specific appropriations of the word, which happened in tandem with a long philosophical and cultural crisis surrounding the rise of secular and democratic societies. The 1934 book Who Shall Survive? that emerged from the research undertaken by Jacob Moreno and Helen Hall Jennings contains some of the earliest graphical depictions of social networks, known as sociograms (see Figure 4).Footnote 4 Moreno and Jennings were founders of the journal Sociometry, which was the venue for some of the earliest scholarly articles in this new field. Moreno and Jennings claimed that ‘before the advent of sociometry no one knew what the interpersonal structure of a group “precisely” looked like’ (Reference Moreno and JenningsMoreno [& Jennings], 1953: lvi). The sociogram can be seen as the precursor both to the graphical notation employed by the artist Mark Lombardi, and the network visualisation layouts now familiar to us thanks to the ubiquity of out-of-the-box tools like Gephi (discussed further in Chapter 4). For most of the remainder of the twentieth century, social network analysis followed in the footsteps of Moreno and Jennings, collecting small-scale social networks through intensive surveys of well-defined social groups, numbering typically fewer than 100 individuals, which can be rendered intuitively using the sociogram.

Figure 4 Redesigned network produced by Martin Grandjean based on Hall and Moreno’s work in Who Shall Survive? showing relationships between children in a classroom (Reference GrandjeanGrandjean, 2015, chosen due to original diagrams being in copyright). CC BY SA 4.0.

Social network analysis also produced a number of concepts and measurements that have crucially changed the metaphorical hinterland of the word ‘network’. Central to this changing conceptual framework was the discovery that in social networks, two people are, on average, only separated by a small number of steps. In 1929, the popular Hungarian author Frigyes Karinthy wrote ‘Láncszemek’ (‘Chain-Links’), a short story musing on the shrinking social world during a period of rich international trade in Hungary. In the novel, Karinthy’s characters create a game:

One of us suggested performing the following experiment to prove that the population of the Earth is closer together now than they have ever been before. We should select any person from the 1.5 billion inhabitants of the Earth – anyone, anywhere at all. He bet us that, using no more than five individuals, one of whom is a personal acquaintance, he could contact the selected individual using nothing except the network of personal acquaintances.

Karinthy’s game became reality in Stanley Milgram’s research into the ‘small world’ phenomenon just over three decades later. Building on his earlier work with the mathematician Manfred Kochen and the political scientist Ithiel de Sola Pool, Milgram undertook a series of experiments that sought to determine the degrees of separation between people in real-world networks, which he reported in a 1967 issue of the popular magazine Psychology Today. Milgram invited members of the public to forward a parcel to close acquaintances in their immediate social (but not necessarily geographical) neighbourhood with the goal of eventually reaching a particular individual on the other side of the country. Although the methods and findings have since been disputed, Milgram claimed his study showed that ‘only five intermediaries will, on average, suffice to link any two randomly chosen individuals, no matter where they happen to live in the United States’ (Reference MilgramMilgram, 1967: 66). Milgram’s article generated enormous publicity, thereby connecting in a public consciousness the concept of the network and that of the small world.Footnote 5 More recently the findings have been popularised as the theory of ‘six degrees of separation’. Conceptually this phrase makes sense only if you view the world in terms of the network. The network, then, is a pre-existing framework upon which the concept of six degrees of separation is drawn.

Interestingly, while the phrase is usually attributed to Milgram, it is more likely that John Guare popularised it through his 1990 play Six Degrees of Separation, which spawned a film of the same name in 1993. What is helpful about approaching the sociological findings of Milgram’s experiments via this play is that it captures the surprise Milgram’s discoveries generated and what they might mean for lived experience. The character Ouisa Kittredge says:

I read somewhere that everybody on this planet is separated by only six other people. Six degrees of separation. Between us and everybody else on this planet. The president of the United States. A gondolier in Venice. Fill in the names. I find that A) tremendously comforting that we’re so close and B) like Chinese water torture that we’re so close. Because you have to find the right six people to make the connection. It’s not just big names. It’s anyone. A native in a rain forest. A Tierra del Fuegan. An Eskimo. I am bound to everyone on this planet by a trail of six people.

The accessibility of the concept of six degrees of separation is shown by the way it has been seized on in popular culture. The parlour game ‘Six degrees of Kevin Bacon’ challenges players to find the shortest path between a given actor or actress and prolific actor Kevin Bacon (which is in turn referenced by the digital project Six Degrees of Francis Bacon). And the phrase has appeared as a title of two TV series (a drama about six New Yorkers, Six Degrees, and a comedy reality show, Six Degrees of Everything), songs by the bands Scouting for Girls and The Script and by country artist Miranda Lambert, and an episode in Battlestar Galactica. What is notable about Guare’s monologue, and what seems to make the concept so appealing, is the invisibility of the connections, the difficulty of discovering them, and the sense of wonderment when they emerge.

That sense of wonder, however, is arguably a residue of the analogue era. By contrast, from the late 1990s onwards the rapid growth of both the Internet and computational processing power has made it possible to gather and analyse network data on an unprecedented scale. Now in a few lines of code we can construct and measure networks of various kinds and extract information about their global structure: how big they were, how densely clustered, and in the case of the small world phenomenon, how many degrees on average any randomly selected node was from any other node in a network. In the foundational 1998 publication ‘Collective Dynamics of Small World Networks’, Duncan J. Watts and Steven Strogatz showed how small world properties are not limited to social networks: the neural network of the worm Caenorhabditis elegans, the power grid of the western United States, and the collaboration graph of film actors are all small world networks (Reference Watts and StrogatzWatts & Strogatz, 1998). Together with the 1999 publication by Albert-László Barabási and Réka Albert discussed in the opening pages of this book, Watts and Strogatz’s publication ushered in the field of network science. Whilst some of their observations had precedents in scientific scholarship, they shone light on the elegance of networks as an abstract framework, which opened the floodgates to natural scientists, computer scientists, and applied mathematicians working on network data.

The movement into the digital realm, however, does something to the way we think about networks. It seems to make scientists custodians of the knowledge we have about networks, even though the systems they are analysing are historically the intellectual domains of very different disciplines. By thinking of them as something that can be measured mathematically, they no longer seem metaphorical but real and knowable. However, science is not the saviour of these other disciplines; rather, these discoveries depend on the convergence of numerous disciplines that have zeroed in on one way of understanding the world. In the potted history outlined earlier, we see that the understanding of and public access to the concept of small world networks was shaped by novelists (Karinthy), mathematicians (Kochen, Strogatz), political scientists (de Sola Pool), social psychologists (Milgram), playwrights (Guare), sociologists (Watts), artists and archivists (Lombardi), and physicists (Barabási, Albert), amongst others. The network turn is a product of all of this work, and all of this work was fertilised in a world increasingly straining against its hierarchies of power amidst renewed pushes for decentralised infrastructures of transportation and communication.

However, among these many diverse disciplinary threads that converge in the network turn, the mathematical view is particularly amplified in the digital context, meaning that the metaphorical and human-scale issues of point of view, uncertainty, and exclusion have been sidelined in both the popular and the academic consciousness. We need to redirect attention to the metaphorical dimension because of the way it helps us pay attention to the role of human interpretation: the insights the metaphor provides also necessarily impose constraints on thinking. We must recognise how the etymological root of the word continues to shape research into the network phenomenon. When we employ the word ‘network’ now, we no longer think of physical woven nets or the regular lattice pattern it signified in the earliest known uses; yet the underlying assumption of systematic pattern still pertains to the extent that academic research into networks is still bound up with the task of accounting for those perceived patterns. One might argue that the way networks are imagined is irrelevant to their quantitative measurements, but ultimately such measures are always interpreted in terms of their meaning for the underlying network. And this interpretation is inextricably connected to our imagination of the network.

One of the main reasons Reference Barabási and AlbertBarabási’s and Albert’s 1999 paper became so foundational is because it confounded widespread assumptions regarding the connectivity of large real-world networks. Before information and communications technology made it possible to gather and analyse large-scale network data in the 1990s, such networks were often assumed to be simple random networks in which links exist with uniform probability. Barabási and Albert discovered that many real-world networks have a very different connectivity – one in which a small number of nodes are very highly connected, a larger number of nodes are reasonably well connected, and the vast majority are poorly connected. Moreover this distribution holds across different scales, making it scale-free. This means that in any specific region of the network, regardless of its size, we will also see a small number of relatively well-connected nodes and a large number of poorly connected nodes. Although the word ‘network’ no longer so strongly connotes the regularity it once implied, post-Barabási network science nonetheless operates on the foundation that physical, social, and biological networks are united by regularities in structure and form which allow for a combined science of networks that transcends traditional disciplines. Regularity is a thread still woven deeply into the fabric of networks.

Network measurements are also often conceived with a particular imagined network topology or visual paradigm in mind, and their quantitative rigour cannot entirely free them from this. The popularity of graph drawings to represent social relationships preceded measurements that focused on features like path and centrality. A ‘community’ in such drawings is most often defined as a network region that is more densely connected internally than it is to other network regions. Articles on these methods often feature artificial networks as test cases which have clusters of nodes with high internal connection density and few connections between them. As the field has discovered in the years since such methods were first devised, real-world network communities often do not match this simple imagined community structure. It has been shown that the dozens of different algorithms that grapple with the problem of defining and identifying varying notions of communities in fact demonstrate the shortcomings of the idea of defining network communities in the first place.

The metaphorical dimension of the network allows network scientists to imagine possible ways of navigating mathematical spaces that are both conceptually and topologically vast. The lens of metaphor, therefore, could be described as limiting, but this limitation is productive, giving researchers somewhere to begin their explorations. However, the moment we have identified as the network turn, around the new millennium, has yet to be self-reflexively theorised in this way, and as such the ways this metaphorical framework can be harnessed and challenged remain unexplored. In this gap lies an open invitation for arts and humanities scholars to add their expertise.

Moreover, by recognising that the quantitative approaches leveraged in network science rely on thinking we normally associate with humanistic inquiry, we can begin to break down the barriers between the two cultures of sciences and humanities identified in C. P. Snow’s 1959 Rede lecture. This recognition undercuts the perception of a one-way movement of methods and theories that have been developed and tested in the sciences to solve problems in the arts and humanities. Rather, the interface between these two traditionally divided kingdoms allows greater self-reflexivity about the extent to which networks are what Lakoff and Núñez describe as ‘a cognitive mechanism’ that crosses conceptual domains. However, whereas Lakoff and Núñez see a one-to-one mapping in the most basic description of conceptual metaphors, networks cross multiple domains: from the material domain (of literal net-making), to the linguistic and literary domain (of words used to conjure a sense of complex systems of entanglement), to the graphical or visual sphere (of technical or medical diagrams and of graphics designed for navigating transport systems), to the abstract realm (of nodes and edges measured in mathematical space). Apart from the literal act of net-making, all other renderings of the network leverage at least one of these other domains to make sense of their own. This suggests that networks are inherently domain-crossing. However, although a small but consistent stream of historians have been engaging in interdisciplinary network analysis since at least the 1960s, relatively few arts and humanities scholars are currently involved in this cross-disciplinary exchange and collaboration. We must address this weakness if the field is to become self-critical.

2 Historical Threads

But why would scholars from the arts and humanities bother themselves with the task of theorising and operating within this network turn? Just because we can challenge the divisions between intellectual and conceptual domains with network thinking, it does not follow that we should.

Perhaps our motivation to think through networks should be that it allows us to see features in history, language, literature, or art that would otherwise remain inscrutable. Perhaps we yearn for the ability to recognise patterns across disparate domains as simply kaleidoscoped manifestations of the startlingly predictable human condition. Perhaps the seamless connection between part and whole, the ability to connect the microhistory with the longue durée, explains the allure of this siren’s song. These features certainly drew the natural and social sciences to the network turn, and the move has proven successful many times over. But humanistic incentives often differ from those guiding the sciences. Humanities scholars may seek network thinking for its ability to cut through hierarchies, allowing us to draw threads between and through the geopolitical hegemonies that are often reflected in the construction of physical archives. Networks might give us the language to speak truth to power in configurations learned from and reminiscent of the US civil rights movement, the Iranian Green Movement, or the Extinction Rebellion. The network turn has this capacity as well, and we discuss many of these beneficial network affordances in subsequent chapters.

There are many principled reasons for scholars to engage with networks, but we suspect these are not the reasons the network turn is gripping the humanities, the sciences, and the larger world. Instead, a thousand years of self-reinforcing cultural, technological, and cognitive trends conspired to make the network turn inescapable. We are entangled in a web of fibre optic threads across which political revolutionaries, authoritarian surveillance states, ad-driven technocracies, populist politicians, and chaotic hacker collectives vie for control. The network turn has become complicit in various hegemonic power structures. As centres of power take networks and network theory increasingly seriously, they build ever more network assumptions into their systems. This creates a positive feedback loop forcing much of the world into a network framework even when those structures make little sense (see Reference HealyHealy, 2015). We engage with networks not because it would be irresponsible not to, true though that might be, but because it becomes increasingly impossible not to.

And to understand how networks have actively structured a perception of the world, we must understand them within a millennium-long history of visual practices for depicting and grasping knowledge systems throughout Western Europe. The following chapter explores the epistemological repercussions of the shift from tree to network as the dominant visual system for charting systems of knowledge and information. By visualising knowledge itself as a tree, medieval thinkers ensured their intellectual descendants would think of concepts as part of hierarchies for hundreds of years. As colonial Europeans drifted away from strictly tree-based representations of knowledge, partially in response to engagement with classification systems outside of their own traditions, they also moved away from the sense that knowledge forms an absolute hierarchy. Many of the descendants of that philosophical school now see knowledge as a diffuse system of sparsely interconnected parts.

The genealogy we outline here is not a narrative of progress, revealing how in moving away from arboreal systems we gained a more ‘true’ sense of the world. Rather, we show how shifting metaphors for knowledge have shaped and reflected the way we think, and how this process has radically upended ideas of natural hierarchy and unity. This chapter therefore extends the discourse on conceptual metaphor from the previous chapter, exposing the deep link between visual culture and the network turn, and what it means for the way we think.

In this effort, we do not present a history in the traditional sense. Neither do we escape a necessary presentism. As many threads have woven together to spur the network turn, pulling those threads often leads us to similar but unrelated moments in our cultural past. What we present next, then, might be described using the rhizome metaphor of Felix Guattari and Gilles Deleuze’s 1980 book A Thousand Plateaus, which the authors proffer as an alternative to the arborescent conception of knowledge. The rhizome is a subterranean plant stem that grows horizontally, sending out roots and shoots from its nodes, and was appropriated by Guattari and Deleuze to describe a model of knowledge that allows for multiple, non-hierarchical entry and exit points in data representation and interpretation. The following pages might be described as a select gathering of such shoots used to gesture towards the complex network of roots that contextualise the network turn, revealing a deep relationship between diagrams, ontologies, and epistemologies. How precisely these threads interweave is in urgent need of a longer and more sophisticated approach, and we leave this chapter here as a provocation to whomever finally writes that fuller history.

The first important relationship to understand is that between what we see and what we know. Our understanding and our illustrations of the world have always been entwined, and both have set the stage for the network turn. Aristotle wrote in De Memoria et Reminiscentia that ‘it is impossible to think without an image, for the same phenomenon occurs in thinking as is found in the construction of geometrical figures’ (quoted in Reference Squire, Elsner and SquireSquire & Elsner, 2016). To many ancient Greek philosophers, words and language derive from inner images and knowledge always involves a visual object. The image–thought connection percolated through Christian teachings via St Augustine and his contemporaries and remained prominent until surprisingly recently.

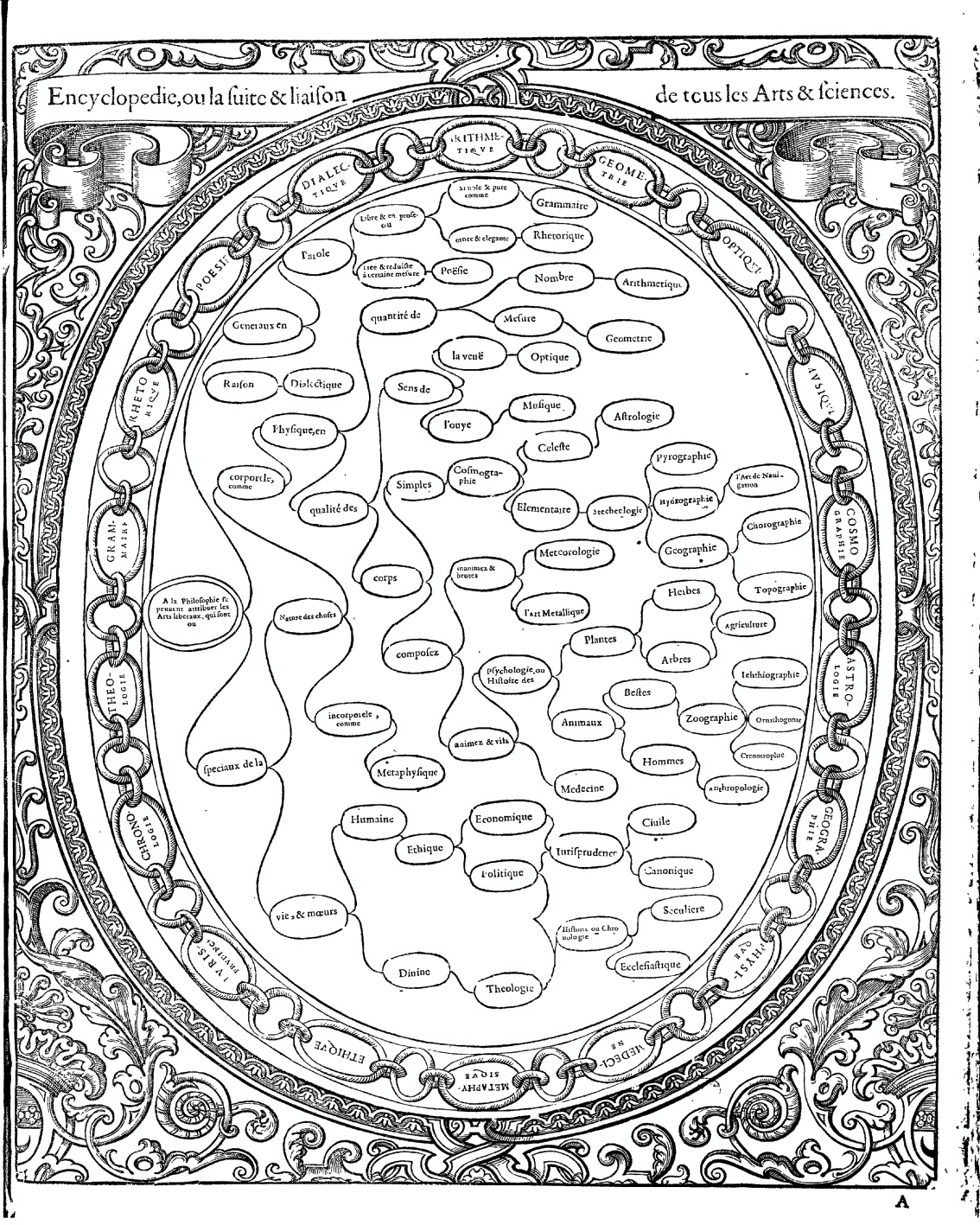

Many prevailing thinkers of the early Middle Ages did not differentiate the order of knowledge from the order of being. An imaginative representation of a concept could therefore not be untangled from the concept itself. The trees of knowledge that scribes illustrated in their manuscripts and that later appeared in printed books, as in Figure 5, were not merely helpful organisational schemes, but indicative of real underlying connections between the branching concepts they depicted.

Figure 5 Ramon Llull showing the Arbor elementalis to a monk in Arbor scientiae ([F. Fradin?],[1515]).

Visualisations help us to construct the objects of scientific study. When discussing microscopic observations of bacteria, the early twentieth-century scientist Ludwik Fleck noted that novices see only blobs when looking under the microscope, whereas with training, the expert finally discerns bacteria. With that training, however, the expert loses the ability to see anything that contradicts the form she knows to be visible. On this topic Lorraine Daston writes: ‘Perception furnishes the universe. It doesn’t create the universe, but it does shape and sort, outlining sharp edges and arranging parts into wholes’ (Reference DastonDaston, 2008: 98). Thus cloud atlases and diagrams of rock strata become as prescriptive as they are descriptive, giving shape to the world around them. Scientific perception of objects is a deeply psychological affair. But physical objects are not the only entities susceptible to construction and shaping through their visual representation. More abstract visualisations, such as trees of knowledge, are equally capable of shaping our thoughts. Medieval scholars associated with ars memoriae (‘the art of memory’) believed their diagrams structurally reflected their world. They were partially right: our diagrams construct our world as much as they reflect it. The implication is that when we collectively settle on particular visual metaphors to order our world – like trees of knowledge – that representation radiates outward, shaping our thoughts in unexpected ways. Conversely and recursively, our understanding of our world shapes our visual metaphors. Many of the examples that follow reflect this observation.

Take, for example, the relationship between knowledge and trees, connected at least since the writing of Genesis. Although historical linguists do not trace a common lineage to either concept, the fact that ‘truth’ and ‘tree’ were both once trēow, and ‘wit’ (as in wisdom) and ‘wood’ (as in forest) were both once quite close to vid has inspired at least a thousand years’ worth of puns (Reference LarringtonLarrington, 2008). We link the two concepts without a second thought, as when we look for the root of the problem or discuss branches of knowledge. This coincidental etymological overlap is perhaps indicative of the strangely linked stories of networks and disciplines. Throughout medieval Europe, and pushing well into the nineteenth century, knowledge and trees coincide frequently.

The earliest such trees to be widely replicated were representations of Aristotle’s classification of categories, based on a third-century treatise by Porphyry. He split the Aristotelian system of categories into a series of branching dichotomies, using the metaphor of a tree. By the tenth century, a Latin translation by Boethius illustrated Porphyry’s metaphorical tree as a visual one. By the twelfth century, this style of diagram had achieved widespread legibility as a visual metaphor to connect hierarchies, lineages, and orders (see Reference Kruja, Marks, Blair, Waters, Mutzel, Jünger and LeipertKruja et al., 2002; Reference DruckerDrucker, 2014). Although other styles of diagrams of knowledge did exist, few if any were entirely free of implicit hierarchy. Ramon Llull’s thirteenth-century Arbor scientiae is likely the first work that attempted to systematically represent all branches of knowledge on trees (see Figure 5). The treatise features sixteen illustrations, the first of which is a single tree of knowledge used as a sort of table of contents, with each of the following trees representing a single branch from the first.

Many of the visual aspects of these trees were reflected in and reinforced the philosophies of their times. Like contemporary genealogical trees, many of the earliest diagrams of knowledge placed the root at the top of the page, indicating at once closeness to divinity, temporal precedence, and hierarchical pre-eminence. As diagrams of both knowledge and genealogy began flipping 180 degrees between the twelfth and sixteenth centuries, that tight coupling between divinity, time, and pre-eminence also began to separate. Similarly, many hierarchical diagrams of knowledge used visual cues, like an encircling chain in Christophe de Savigny’s sixteenth-century Tableaux accomplis de tous les arts libéraux, to bound knowledge and imply its completeness (see Figure 6). As the concepts of boundedness and completeness were challenged, so too were their visual representations.

Figure 6 Christophe de Savigny’s diagram partitioning the arts and sciences, in Tableaux accomplis (Gourmont, 1587).

One exemplar of these and other changes is visible in Francis Bacon’s 1605 The Advancement of Learning: On the Partitions of the Sciences. In it, Bacon wrote: ‘The Partition of Sciences are not like several lines that meet in one angle; but rather like branches of trees that meet in one stemme, which stemme for some dimension and space is entire and continued, before it break, and part it selfe into armes and boughes’ (Reference BaconBacon, 1640: 132). The highly influential book broke from predecessors in its challenge to the ‘one root’ model of knowledge, and in its shift of learning from a closed system to an open system capable of growth and change. Even as theologists and natural philosophers were starting to explicitly question the suitability of the tree as an ordering metaphor, however, the same thinkers often fell back on arboreal diagrams, linguistic metaphors, and the philosophical implications they entailed because that was simply the ontological architecture in which they had learned to think (Reference AriewAriew, 1992).

While trees of knowledge continued to flourish in the seventeenth and eighteenth centuries, being used frequently as organisation schemes for libraries and booksellers’ catalogues all across Europe, educators and natural philosophers increasingly questioned the ability of the tree to truly represent the world of knowledge. By 1759, the encyclopaedists Diderot and d’Alembert rejected even the idea of a proper order of knowledge. They introduced their Encyclopedie saying that unified orders of knowledge are essentially arbitrary and that there are as many different possible systems as there are projections of the world map (Reference AriewAriew, 1992). Not only was there no such thing as a natural order to knowledge, there could not even be a proper genealogical order to knowledge. The editors were careful to separate those two concepts. In rejecting the definitive order of knowledge, however, the encyclopaedists faced a dilemma: their great project still needed to be organised in some fashion. And so, despite their misgivings, they introduced their work with a diagram of a tree.

The tree of knowledge was killed and revived frequently throughout the nineteenth century. In 1854, Herbert Spencer wrote that it was time to dispense once and for all the idea that a tree could be used to represent knowledge (Reference TrompfTrompf, 2011). He writes specifically against the notion of the sciences as the ‘branches of one trunk’, suggesting the notion that ‘the sciences had a common origin’ is fundamentally flawed.

In short, according to many nineteenth-century Western thinkers, knowledge was not a rooted thing but an uprooted network: a non-hierarchical and non-genealogical interconnected web. For Herbert Spencer, the relationships between the sciences needed to be represented in a more multidimensional way (Reference Van den HeuvelVan den Heuvel, 2012). What replaced the unity of knowledge was not disconnected chaos, however, but an organisation of knowledge into separate, distinct, and loosely connected disciplines (Reference YeoYeo, 1991), as evidenced by the various classification systems and institutional department structures that cropped up by the late nineteenth and early twentieth centuries. Most classification systems retained some internal hierarchies, but they lacked a common trunk and existed only for organisational convenience rather than to express something deep and true about how concepts related.

In the late nineteenth and early twentieth centuries, ontological discussions flourished, perhaps growing in the light once obscured by the dense trees of generations prior. Some hoped to regrow those trees to different purposes, while others sought for new metaphors that eschewed hierarchy, unity, and singular order.

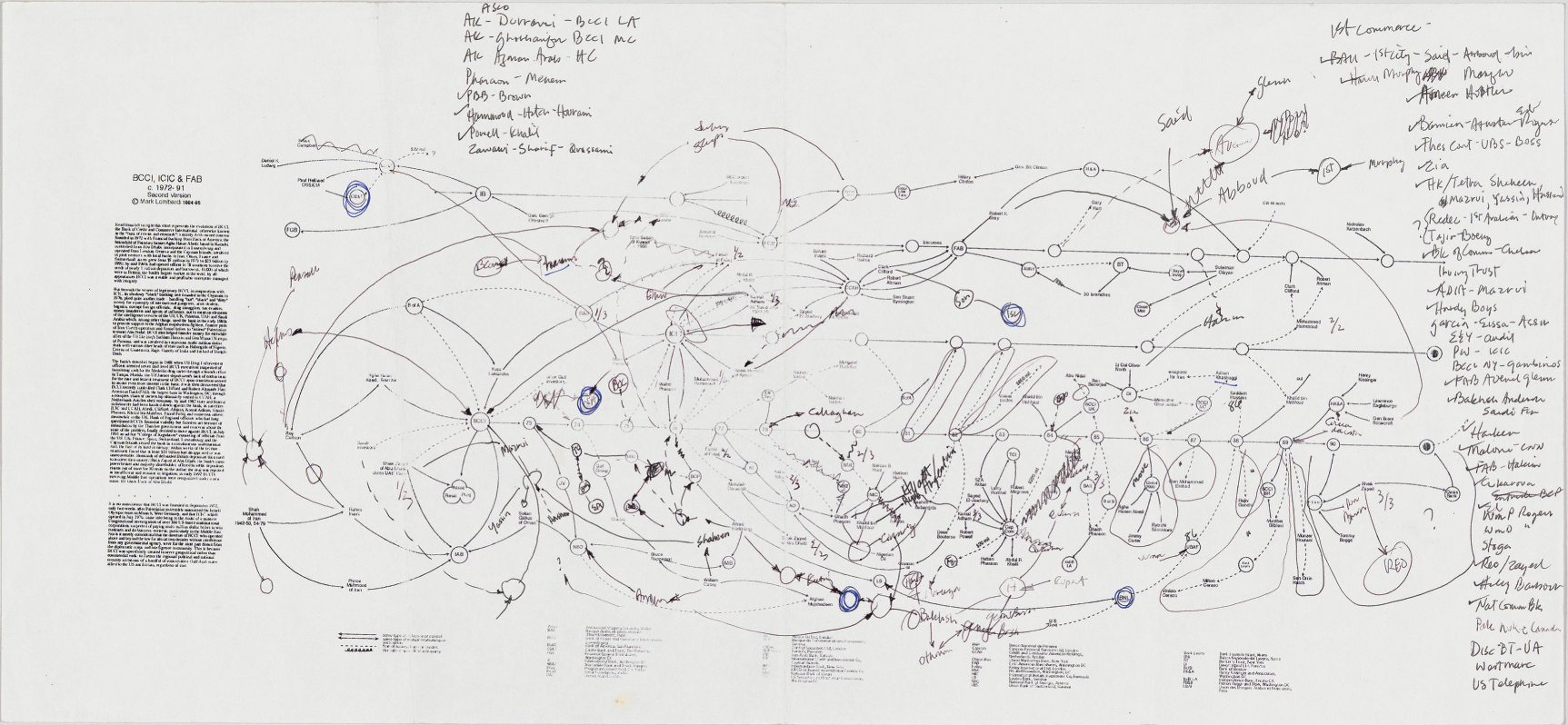

One well-known attempt to eschew hierarchy in classification systems was co-created by the Belgian information activist Paul Otlet near the end of the nineteenth century. Using combinable facets, this Universal Decimal Classification scheme took the multidimensionality of knowledge relationships into account far better than the earlier strict tree hierarchies. Otlet sought to represent this multidimensionality visually, intentionally breaking with the arboreal visual metaphors of the past in lieu of more mechanical diagrams, as shown in Figure 7. While he employed a large variety of visual techniques, many of Otlet’s illustrations featured non-hierarchical network-like representations of classification with circuitous paths and no discernible trunk or preferred hierarchy (Reference Smiraglia and Van den HeuvelSmiraglia & Van den Heuvel, 2011). For Otlet, this classification scheme would ideally lead to ‘an immense map of the domains of knowledge’ (Reference OtletOtlet, 1918). Otlet’s work inspired many others, including S. R. Ranganathan, who in 1933 created a classification scheme which allows knowledge to be classified flexibly and in many dimensions. These new information schemes and metaphors became part of a movement to usher in a future without hierarchies, championed by H. G. Wells and others, coordinated via a vast network of information and communication.

Figure 7 One of Paul Otlet’s classification systems, ‘L’univers, l’intelligence, la science, le livre’, from Traité de documentation: le livre sur le livre, théorie et pratique (Editiones Mundaneum, 1934).

Such utopic and decentralising movements were not without reactions. Faced with a turmoil of disciplines, a growing scientific community sought to reunify the sciences. These scientists were not seeking order in the transcendental sense, but as part of a plan to exert human control over an increasingly chaotic world. This concern was ever-present in the context of a world at war, and it was memorialised in Yeats’ 1920 ‘The Second Coming’:

Among their other goals, eugenicists were at the front of a push to hold the scientific centre. Eugenics was, in a sense, an interdisciplinary kind of teaching, bringing together anthropology, genetics, and social policy, among other communities. The logo for the Second International Eugenics Congress (Figure 8), held at the American Museum of Natural History in New York City, depicts a tree of knowledge. It is notable for several reasons. First and foremost, the science of eugenics sits proudly alone in the tree’s crown at the top of the page. The branching roots represent the foundations of disciplines: physiology and anatomy combine to form biology, biology merges with psychology to become genetics, and so on. All of the sciences meet at the trunk to form eugenics, labelled ‘the self direction of human evolution’, which ‘organizes knowledge into a harmonious entity’. The diagram appeared again at the Third International Eugenics Congress in 1932, this time captioned with ‘Like a tree eugenics draws its materials from many sources and gives them organic unity and purpose’ (emphasis added). The eugenics movement thus actively sought to reinstate concepts of unity, ontology, and hierarchy of knowledge as they were being unravelled. But, of course, eugenics was much more than a theory of knowledge. From the history of the implementation of eugenic policies, which resulted in segregation, sterilisation, and genocide, we know that the unification of knowledge can be an unwitting bearer of ideological content. This offers further evidence of how difficult it is to separate how we order the world from how we live in it.

Figure 8 Logo from the Second International Eugenics Conference, 1921, depicting eugenics as a tree which unites a variety of different fields. Harry H. Laughlin, The Second International Exhibition of Eugenics Held September 22 to October 22, 1921, in Connection with the Second International Congress of Eugenics in the American Museum of Natural History, New York (Baltimore, MD: William & Wilkins, 1923).

It is worth pausing at the turn of the twentieth century to take stock of the state of Western Europe outside of classification theory and of the political context in which particular governments seized upon eugenics. A period of rapid growth in fast, inexpensive transportation and communication networks would soon be punctuated by two world wars. The violent forces of colonialism were about to reach a fever peak, and traces of that colonialism were everywhere. The world wars set the backdrop for a renewed fascination with networks of all sorts. Destruction caused by the First World War, alongside a rapidly growing automobile industry and other improving technologies of travel, contributed to prominent discussions of transportation and communication networks. Hungary particularly enjoyed a period of rich international trade, at least until the Great Depression.

It was in this context in 1929 that the Hungarian Frigyes Karinthy wrote ‘Láncszemek’ (‘Chain-Links’), discussed in the previous chapter, which introduced the concept of six degrees of separation. Uncoincidentally, Karinthy’s other pursuits included translating H. G. Wells and presiding over the Hungarian Esperanto Society, an organisation in support of a global language for a networked world. The networked ideals of Karinthy, Wells, and Otlet were juxtaposed against a massive push towards hierarchical state-run consolidation exemplified in Nazi sensibilities. When the Nazi librarian Hugo Krüss visited Paul Otlet’s Mundaneum in 1940, with its non-hierarchical, sprawling, networked classification system, he considered the whole thing a useless mess. Otlet’s ideals and those of his spiritual contemporaries like Karinthy and Wells were an odd mirror to Hitler’s. While the juxtaposition of Nazi hierarchies against utopianist networks are far too neat to represent the full story, their interplay is tellingly illustrative. Where Nazis sought to unify people under a powerful hierarchy where individual agency was subordinate to the state, Otlet and others sought unification through a distributed ‘World City’, connected in peace via networks of information and communication (perhaps sharing a common language, like Esperanto).

Neither goal succeeded. While the latter half of the twentieth century might have steered closer to Otlet’s vision than to Hitler’s, the twenty-first century might be seen as dealing with the fallout of society’s movement towards networks (see Reference FergusonFerguson, 2017). States now continuously fight frontless wars against distributed communities, a synecdoche for the struggle between hierarchies and networks. Recent global political shifts empowering right-wing governments might be seen as a response to this struggle. In this context, the emergence of the network as a framework to challenge traditional hierarchical world views is the product of a long philosophical and cultural crisis stretching from either end of the twentieth century.

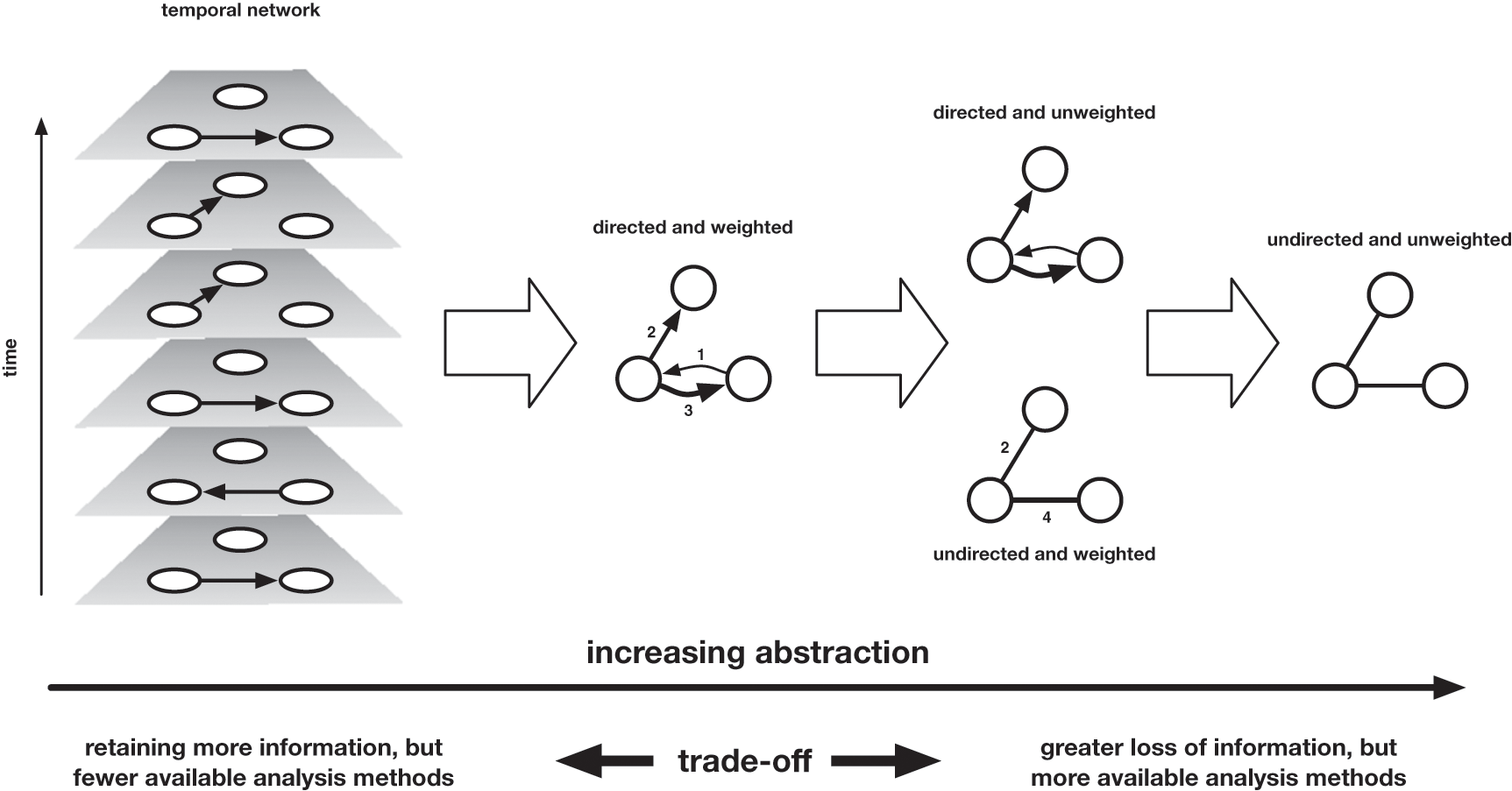

As different as they were, the diagrams of eugenicists and those of H. G. Wells shared a common interpretation: that the universe has no innate order and it is left for us to create one. It was in this context that the visual lexicon of the what we now call networks took hold, perhaps because they are the closest ontological metaphor to trees which require neither hierarchy nor root. The way we conceptualise and chart systems of knowledge in the twenty-first century was radically shaped by systems of notation Jacob Moreno and Helen Hall Jennings developed in the 1930s. In their work on social networks (introduced in Chapter 1), Moreno and Jennings developed a psychological technique involving the construction and analysis of sociograms, diagrams, like the one shown in in Figure 4, that revealed complex social relations between small communities. At the time, the geometry of these diagrams did not feel obvious. Although they borrowed a visual language from family trees and more recent studies into interpersonal connectivity, it was not necessarily obvious that people should be encircled, that lines ought to connect them, and that their layout on the page should conform neither to physical proximity nor to Cartesian coordinates. Indeed, Moreno and Jennings experimented with many layouts throughout their careers, but sociograms proved the most compelling.

Sociograms enjoyed an intense but brief popularity, showing up in national newspapers in the early 1930s. In these diagrams, Moreno and Jennings suggested that important actors should appear central, and by the late 1940s, the concept of centrality was given mathematical specificity. Alex Bavelas and his colleagues at MIT started connecting centrality with influence and power, focusing especially on the importance of network paths. A central figure, Bavelas argued, sits centrally along the paths to the periphery of a network. Paths are easy to see with sociograms, and Bavelas’ early discussions of centrality were unsurprisingly replete with such illustrations. He himself emphasised the role of these visualisations, mentioning that a geometric approach to psychology was only natural in a world where people understand their social world as occupying a physical space around them. Were it not for the precedent of these visual representations, we suspect many early network metrics involving path and centrality would have manifested quite differently.

This visual language of sociograms is now popularised in force-directed layouts. A force-directed layout is a network visualisation that models network elements as though they are physical entities. Edges are modelled as springs, and nodes as junctions connecting various springs together. The computer simulates this system, letting the springs bounce around until each one is as relaxed as possible. At the same time, the nodes repel each other, like magnets of the same polarity, so the nodes do not appear too close together. Just as the tree brought with it implicit notions of hierarchy and unity, this visual language has far-reaching interpretative implications.

Firstly, such visualised networks reject hierarchies. Even when node-and-link diagrams represent hierarchical networks, those hierarchies are difficult to notice. As discussed further in Chapter 4, the lack of any meaningful spatial orientation means even the root node does not occupy a privileged position. Secondly, networks embrace connectedness: in a consilient world, where knowledge collectively acts as a foundation for the whole, or in actor-network theory, where agency can be widely dispersed, this representation fits like a glove. Thirdly, networks separate ontology from essence. Because force-directed networks are stochastic and can therefore look different every time they are constructed, they leave little room to misunderstand a diagram as the one true layout. Knowledge’s order is always left uncertain, which feeds into the fourth point: that networks have the capacity to relate situated perspectives. Especially in the past few years, with popular social networks and in-browser interactive force-directed diagrams, it has become possible to view a social network from one’s own perspective, which may look very different from the same network represented from someone else’s viewpoint. Perspectival visualisations fit remarkably well with feminist and postmodernist understandings of knowledge, and they align poorly with essentialist viewpoints.

The democratising effect of the network view of the world is perhaps most radically realised in actor-network theory (ANT). Despite carrying the label ‘theory’, ANT is better understood as a range of methodological manoeuvres or processes for guiding research that aims to describe the connections that link humans and non-humans (e.g. objects, technologies, policies, and ideas). It is less concerned with visualising those connections; the ethos is clearly influenced by the non-hierarchical and situated perspective of networks. Actor-network theory is based on the principle that all the factors involved in a social situation should be placed on the same level (Reference McLean and HassardMcLean & Hassard, 2004). Michael Callon’s analysis of an investigation into the declining scallop population in St Brieuc Bay outlined a new approach to the study of power, which he described as the sociology of translation (Reference CallonCallon, 1984). Starting from three principles – those of agnosticism (impartiality between actors engaged in controversy), generalised symmetry (the commitment to explain conflicting viewpoints in the same terms), and free association (the abandonment of all a priori distinctions between the natural and the social) – his study examined a scientific and economic controversy about the causes for the decline in the population of scallops in St Brieuc Bay and the attempts by three marine biologists to develop a conservation strategy for that population. One of the notable things about this paper was the way it conceived of the actors in this controversy: in Callon’s narrative, the scallops participate with the fishermen and the scientists in a network of associations that undermines any sense of hierarchy of influence.

There is a revolutionary nature to such a conceptual move, as it undermines the celebration of the lone hero, showing that historical change is almost always the result of networks of forces. It is the contention of Bruno Latour’s The Pasteurisation of France, which asks what one man can accomplish alone. Although every town in France has a street named after Louis Pasteur, he alone was unable to stop people from spitting, persuade them to dig drains, or influence them to undergo vaccination. Rather, Pasteur’s success depended upon a network of forces, including the public hygiene movement, the medical profession (both military physicians and private practitioners), and colonial interests. As Annemarie Mol has observed: ‘All kind of people, journalists, farmers, technicians, vets, were involved in the discovery/invention of anthrax and the inoculations against it. All kinds of things were active as well, Petri-dishes, blood, transport systems … Against the implied fantasy of a masterful, separate actor, what is highlighted is the activity of all the associated actors involved. A strategist may be inventive, but nobody acts alone’ (Reference MolMol, 2010: 256). We can extrapolate a more general argument from this. The process of levelling allows us to challenge other narratives, not only social, but historical, literary, aesthetic, and linguistic, overturning assumptions about causality, hierarchy, the distribution of power, and the direction and quantity of influence.

In these contexts the network perspective is inherently political. There is something decidedly democratic about the initial process that disarms agency and power. It is why early online social networks were praised for their ability to put junior scholars and tenured faculty on equal footing, and why so many of the social movements at the turn of the twenty-first century considered social media a revolutionising force. It may have contributed to the fact that so many early network analysis scholars did not come from traditional places of power.Footnote 6 The way network analysis allows us to challenge expected or received wisdom about power dynamics, we contend, also makes it a helpful tool for interrogating the relationship between the humanities and the sociotechnical world in which they operate.

However, the network turn’s ability to break existing hierarchical power structures does not imply that it is an acid in which all power melts. Networks reify power along different lines, such as centrality, as evidenced by the enormous power of popular social media presences to gatekeep or to spread certain ideas. The widespread use of network images and methods may be so compellingly effective, in part, because they helped construct the world they purport to measure (see Reference HealyHealy, 2015). Traditional arbiters of power have now learned how to harness network effects to their own advantage. As governments, tech companies, and other centres of power began to take seriously the theories and affordances of networks, they started building the assumptions of those affordances into their systems as a means to re-exert control. As systems start operating on these principles, the world contorts around them to oblige. For example, Google’s PageRank algorithm (developed to rank web pages in their search engine results, and the foundation of their success) took web connectivity as a given in a way that fundamentally shaped how web developers thought and acted on hyperlinks. Further, the network processes that were initially hailed as a democratising force have already begun to be strategically employed as an obfuscating layer that hides the reconsolidation of power.

The mantram of the networked modern world is to an extent self-fulfilling: the more we repeat it, the more compellingly appropriate a network lens feels. Without a deeper understanding of the philosophical and rhetorical affordances of the visual forms and conceptual frameworks of networks, we will continue to be unknowingly nudged by their influence.

Part II Cultural Networks

By thinking about culture as data we open up opportunities both for new analytical processes and for new areas of discourse and engagement. Arts and humanities scholars need to theorise the construction of data sets and the use of visualisation, which has a challenging and provocative role to play in the development of network approaches to culture.

3 Culture Is Data

The network framework shapes how we interpret the world around us. Nothing is naturally a network; rather, networks are an abstraction into which we squeeze the world. Nevertheless, almost anything can be turned into a network, whether it be the interactions between characters in Shakespeare’s plays, the dissemination of memes on Facebook, or the trade network implicated in the ancient Roman brick industry. To understand how such diverse topics can both be conceived of and analysed as networks, it is perhaps easiest to think about one of the most crucial types of network for the creation and dissemination of culture: communication networks. The simple act of communication functions as an entry point for the study of more complex processes such as the dissemination of information or the spread of cultural practices.

We are accustomed to thinking about communication as a network because of the infrastructures that mediate our interactions in the modern world: telephone networks, the World Wide Web, and online social networking platforms. We understand now more than ever that when communication happens through these mediums, it can be traced and measured. Investigations following Edward Snowden’s leak in 2013 revealed that the US National Security Agency and its UK counterpart, GCHQ, had ‘broadly compromised the guarantees that internet companies have given consumers to reassure them that their communications, online banking and medical records would be indecipherable to criminals or governments’ (Reference Ball, Borger and GreenwaldBall, Borger, & Greenwald, 2013). Through such data these agencies were able to discover an individual’s network of associations and communication patterns. But citizens are not subject only to network analysis in the service of national security: information provided by Christopher Wylie in 2018 confirmed that at least 87 million Facebook users were impacted by illegal data harvesting by Cambridge Analytica, who used this data about users’ social networks to profile individual voters during the 2016 Brexit referendum in the UK and the presidential election in the USA in order to target them with personalised political advertisements (Reference LaterzaLaterza, 2018).

However, a digital trail is not necessary to reconstruct communication networks, and the data sets need not be big. Sociologists were reconstructing networks long before the data deluge. A classic example is Wayne Zachery’s observations of a single karate club over three years, from 1970 to 1972, recording interactions between the thirty-four members that took place outside the club (Reference ZacharyZachary, 1977). In this context, the members of the club constitute the nodes and the edges are the interactions that occurred between them outside the club: seventy-eight edges were recorded. Zachary used these observations to model the conflict (using the Ford–Fulkerson algorithm) that led ultimately to the club splitting. The emphasis on in-person interaction to model social structure has been appropriated more recently in literary studies, for example in the now much-cited (and much-critiqued) Literary Lab pamphlet ‘Network Theory, Plot Analysis’, in which Franco Moretti attempts to reconstruct the plot of Hamlet as a network graph wherein two characters share an edge when they exchange words. He uses the resulting network diagram to argue for the plot centrality of Horatio (Reference MorettiMoretti, 2011).