Introduction

Research has demonstrated that bilinguals of two spoken languages co-activate both languages when speaking, reading or listening in one language (for reviews, see e.g., Dijkstra & van Heuven, Reference Dijkstra and van Heuven2002; Kroll, Bogulski & McClain, Reference Kroll, Bogulski and McClain2012; Shook & Marian, Reference Shook and Marian2013; van Hell & Tanner, Reference van Hell and Tanner2012). In addition, several studies show robust effects of cross-language activation in deaf and hearing bilinguals of languages that do not share phonological form, i.e., signed and spoken languages, which is also the focus of the current study (for review, see Emmorey, Giezen & Gollan, Reference Emmorey, Giezen and Gollan2016; Ormel & Giezen, Reference Ormel, Giezen, Marschark, Tang and Knoors2014).

For example, Morford, Wilkinson, Villwock, Piñar and Kroll (Reference Morford, Wilkinson, Villwock, Piñar and Kroll2011) found that deaf American Sign Language (ASL)–English bilinguals were faster to decide that two English words were semantically related (e.g., bird and duck) when the ASL sign translation equivalents of these words overlapped in sign phonology (the signs BIRD and DUCK have the same location and movement and only differ in handshape). Conversely, they were slower to decide that two printed English words were not semantically related when their ASL translation equivalents overlapped in sign phonology. Ormel, Hermans, Knoors and Verhoeven (Reference Ormel, Hermans, Knoors and Verhoeven2012) obtained comparable findings in a word-picture verification study with bilingual deaf children learning Dutch and Sign Language of the Netherlands (NGT). Children responded slower and were less accurate when words and pictures were phonologically related in NGT than when they were unrelated. These findings have since been replicated in various other studies with deaf signers (e.g., Kubuş, Villwock, Morford & Rathmann, Reference Kubuş, Villwock, Morford and Rathmann2015; Meade, Midgley, Sevcikova Sehyr, Holcomb & Emmorey, Reference Meade, Midgley, Sevcikova Sehyr, Holcomb and Emmorey2017; Morford, Kroll, Piñar & Wilkinson, Reference Morford, Kroll, Piñar and Wilkinson2014; Morford, Occhino-Kehoe, Piñar, Wilkinson & Kroll, Reference Morford, Occhino-Kehoe, Piñar, Wilkinson and Kroll2017), and in studies with native and non-native hearing signers (e.g., Giezen, Blumenfeld, Shook, Marian & Emmorey, Reference Giezen, Blumenfeld, Shook, Marian and Emmorey2015; Giezen & Emmorey, Reference Giezen and Emmorey2016; Shook & Marian, Reference Shook and Marian2012; Villameriel, Dias, Costello & Carreiras, Reference Villameriel, Dias, Costello and Carreiras2016; Williams & Newman, Reference Williams and Newman2015). 
Thus, cross-language activation is not only a robust characteristic of bilingual processing in bilinguals of spoken languages, but also in deaf and hearing signers, which we will refer to as ‘bimodal bilinguals’.

For bilinguals of two spoken languages, one likely source of co-activation is through phonological overlap between words in different languages, as seen in, for example, cross-language phonological priming effects (e.g., Dijkstra & van Heuven, Reference Dijkstra and van Heuven2002; van Hell & Tanner, Reference van Hell and Tanner2012). However, since spoken and sign languages have no shared phonological system, evidence for co-activation in bimodal bilinguals has been taken to suggest an important role for connections between lexical phonological and orthographical representations in the two languages and/or connections through shared semantic representations (Morford et al., Reference Morford, Occhino-Kehoe, Piñar, Wilkinson and Kroll2017; Ormel, Reference Ormel2008; Shook & Marian, Reference Shook and Marian2012).

Alternatively, modality-specific connections between spoken and signed languages might account for language co-activation in bimodal bilinguals: for example, connections through signs that contain fingerspelled letters linked to their spoken translations (i.e., signs that contain “a direct representation of English orthographic features in (American) Sign Language sublexical form”; Morford, Occhino, Zirnstein, Kroll, Wilkinson & Piñar, Reference Morford, Occhino, Zirnstein, Kroll, Wilkinson and Piñar2019, p. 356). Fingerspelling is the manual encoding of written language, in which each letter of an alphabetic script is represented by a distinct hand configuration: that is, manual orthography. A growing number of studies have linked fingerspelling to reading acquisition and ability in deaf signers (Morere & Allen, Reference Morere and Allen2012; Stone et al., Reference Stone, Kartheiser, Hauser, Petitto and Allen2015). Fingerspelling can be used to sign unfamiliar words, and in many sign languages some conventionalized forms of fingerspelling have been integrated into the signed lexicon as loan words (lexicalized fingerspelling) or ‘initialized signs’. Initialization is an effect of contact between signed and spoken languages that has resulted in a direct representation of orthographic features from the spoken language in manual signs: for example, by including a handshape that is associated with the first letter(s) of the orthographic translation equivalent (e.g., the sign for BLUE in NGT, which contains the letters ‘b’ and ‘l’ from the Dutch translation equivalent ‘blauw’). Morford et al. (Reference Morford, Occhino, Zirnstein, Kroll, Wilkinson and Piñar2019) tested the impact of such direct representations of orthographic features in some ASL signs on language co-activation, and found that signs containing fingerspelled letters overlapping with the orthographic onset of their English translation equivalents did not affect cross-language activation in deaf signers.

Another feature that may drive language co-activation in bimodal bilinguals is mouthings that co-occur with signs and that also share phonological properties with spoken language. Mouthings refer to mouth actions during sign production that map onto phonological representations of the spoken language (i.e., they usually reflect one or more syllables of the spoken translation equivalent of the sign). According to Bank, Crasborn and van Hout's corpus study (Reference Bank, Crasborn and van Hout2011, Reference Bank, Crasborn and van Hout2018), mouthings accompany 61% of the signs in Sign Language of the Netherlands (NGT). In contrast, mouth gestures are linguistically relevant mouth actions that do not map onto phonological representations of the spoken language. While mouth gestures are often regarded as an integral part of the sign language lexicon (Boyes Braem, Reference Boyes Braem, Boyes Braem and Sutton-Spence2001), the status of mouthings in sign language processing is a topic of ongoing debate. According to some researchers, mouthings are also stored as part of the lexical representation of signs (e.g., Boyes Braem, Reference Boyes Braem, Boyes Braem and Sutton-Spence2001; Sutton-Spence & Day, Reference Sutton-Spence, Day, Boyes Braem and Sutton-Spence2001). Others have argued, however, that mouthings do not form part of the sign language lexicon (e.g., Ebbinghaus & Heßmann, Reference Ebbinghaus, Heßmann, Boyes Braem and Sutton-Spence2001), and should be considered as a form of language mixing similar to the blending of spoken and signed utterances by hearing signing children and adults (e.g., Bank et al., Reference Bank, Crasborn and van Hout2016; Giustolisi, Mereghetti & Cecchetto, Reference Giustolisi, Mereghetti and Cecchetto2017). This is supported by an experimental study showing that mouthings and signs are separately accessed in the mental lexicon (Vinson, Thompson, Skinner, Fox & Vigliocco, Reference Vinson, Thompson, Skinner, Fox and Vigliocco2010).

Especially for hearing bimodal bilinguals who have full access to the (visual) phonological system underlying mouthings, mouthings may boost language co-activation, but this possibility has not been tested yet. In order to directly assess the contribution of mouthings to co-activation patterns in bimodal bilinguals, the current study investigates the co-activation of spoken words during the processing of signs. To our knowledge, only two studies have examined co-activation of spoken phonological forms while bimodal bilinguals were processing signs (Hosemann, Mani, Herrmann, Steinbach & Altvater-Mackensen, Reference Hosemann, Mani, Herrmann, Steinbach and Altvater-Mackensen2020; Lee, Meade, Midgley, Holcomb & Emmorey, Reference Lee, Meade, Midgley, Holcomb and Emmorey2019). Lee et al. (Reference Lee, Meade, Midgley, Holcomb and Emmorey2019) recorded event-related potentials (ERPs) in deaf and hearing ASL–English bilinguals who viewed sign pairs in ASL and were asked to judge their semantic relatedness. Some of the sign pairs rhymed in English and were also orthographically similar. The study showed no effects of language co-activation in the behavioral responses. However, the hearing signers showed a smaller N400 for sign pairs that were phonologically related in English. Interestingly, a reversed effect was found for deaf bilinguals, who showed a larger N400 for phonologically related pairs (although this effect was only observed in deaf participants who were unaware of the experimental manipulation). The deaf signers also showed a later and weaker N400 effect than the hearing signers. Lee et al. suggested that this might be due to the fact that English was the non-dominant language for the deaf signers. Hosemann et al. (Reference Hosemann, Mani, Herrmann, Steinbach and Altvater-Mackensen2020) recorded ERPs from deaf native German Sign Language (DGS)–German bilinguals while they viewed signed sentences containing prime and target signs that rhymed in German and were also orthographically similar. Their results demonstrated a smaller N400 for target signs for which the translation equivalents in spoken German were phonologically related to the translation equivalents of the primes, again suggesting that cross-language activation in deaf bimodal bilinguals also occurs in this direction. However, neither study considered the potential contribution of mouthings to the observed co-activation patterns.

To examine the impact of mouthing on cross-language activation patterns, the present study contrasted co-activation of spoken words during sign processing in hearing bimodal bilinguals when signs were presented with mouthing (Experiment 1) or without mouthing (Experiment 2). To this end, we conducted two sign-picture verification experiments with hearing Dutch adults who were late learners of NGT, in which we manipulated the phonological relation (unrelated vs. cohort overlap or final rhyme overlap) between the Dutch translation equivalents of the NGT signs and pictures.

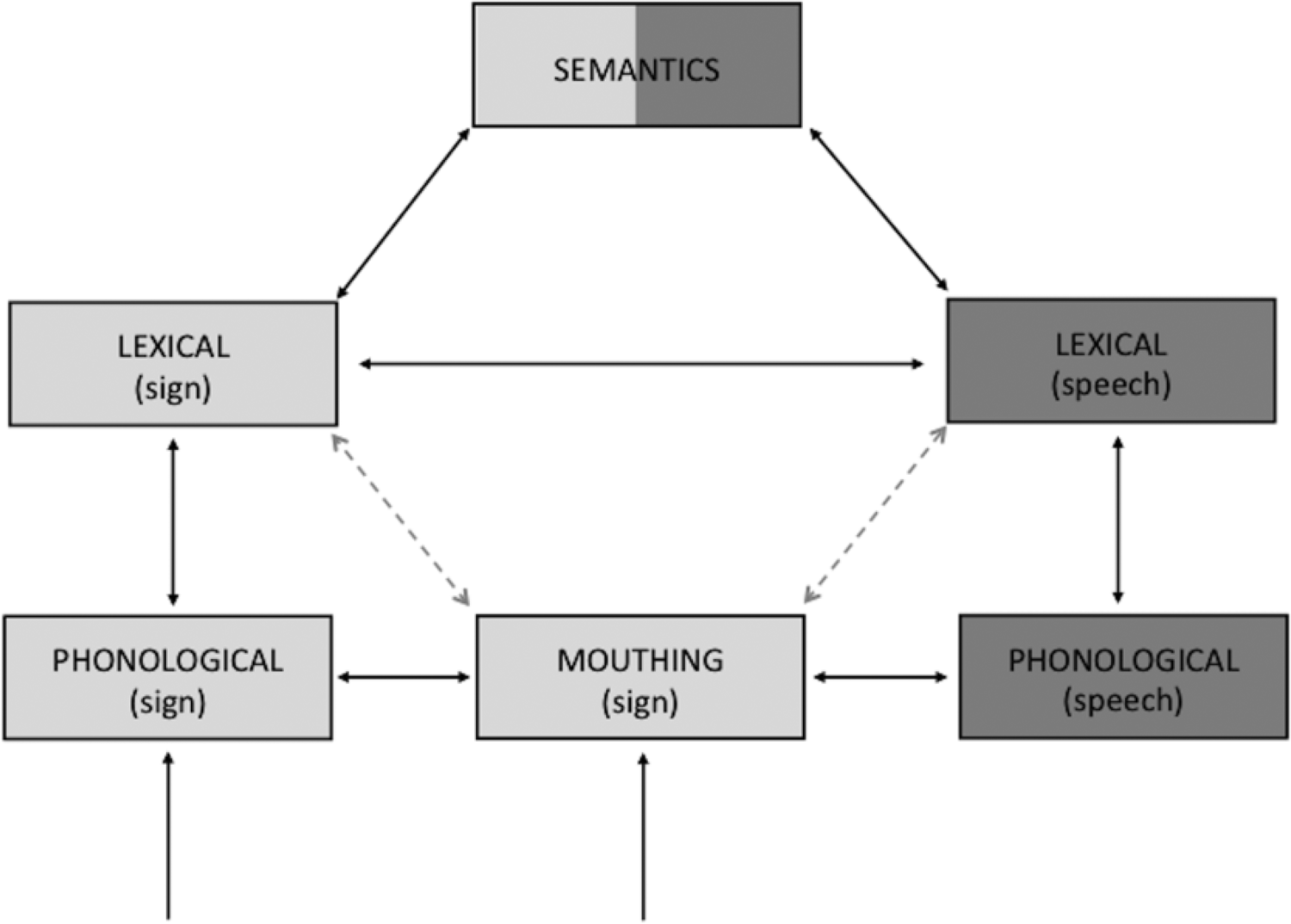

The aim of the present study is twofold: 1) to provide insight into cross-language activation in hearing bimodal bilinguals by examining the co-activation of spoken words during processing of signs by hearing bimodal bilingual users of NGT (late learners) and Dutch (their L1), adding to recent findings in the literature of co-activation of signs during processing of written and spoken words, and 2) to elucidate the contribution of mouthings to the co-activation of spoken language during processing of sign language. Given that spoken and signed languages have no clear phonological connections (shared phonemes or graphemes) through which co-activation can occur, cross-language activation in bimodal bilinguals may occur through lexical connections between the two languages and/or through shared semantic representations (Morford et al., Reference Morford, Occhino-Kehoe, Piñar, Wilkinson and Kroll2017; Ormel, Reference Ormel2008; Shook & Marian, Reference Shook and Marian2013). Alternatively, mouthings that share linguistic features with the spoken language might provide modality-specific connections between the spoken and signed languages that mediate language co-activation between these languages. Framed in the context of an adaptation of the Bilingual Interactive Activation+ (BIA+) model (Dijkstra & van Heuven, Reference Dijkstra and van Heuven2002) for bimodal bilinguals (Morford et al., Reference Morford, Occhino-Kehoe, Piñar, Wilkinson and Kroll2017; Ormel, Reference Ormel2008; Ormel et al., Reference Ormel, Hermans, Knoors and Verhoeven2012), the current study will therefore test the following two hypotheses. 
If mouthings play a critical (bridging) role in cross-language activation between spoken language and sign language, i.e., if bimodal bilinguals link the mouthing patterns to the (visual) phonological representation of the spoken language, then co-activation of spoken language representations during sign recognition should be modulated by the presence or absence of mouthings (as depicted in Figure 1).

Fig. 1. Schematic representation of language co-activation for bimodal bilinguals based on the hypothesis that mouthings play a bridging role in modulating cross-language activation between spoken and signed languages. Dotted lines represent a potential direct connection between mouthings and lexical spoken/signed representations not tested in this study. Orthographic representations and connections are not shown in the Figure.

Alternatively, if spoken language is co-activated primarily through lexical-semantic links between signed and spoken languages, then co-activation should occur regardless of whether signs are accompanied by mouthings (as depicted in Figure 2).

Fig. 2. Schematic representation of language co-activation for bimodal bilinguals based on the hypothesis that mouthings do not modulate cross-language activation between spoken and signed languages.

Experiment 1: Signs presented with mouthings

Method

Participants

Twenty-four sign language interpreters in training (23 females, 1 male; Mean age = 22.6, SD = 3.04) participated in Experiment 1. All were in their final year of a four-year full-time sign language interpreter program at the Hogeschool Utrecht (HU University of Applied Sciences), The Netherlands. The native language of all participants was Dutch. They all started learning NGT during their sign language interpreter program, and thus were all late learners of NGT. Average self-rated language proficiency in NGT was 5.46 (SD = .51) on a Likert scale ranging from 1 (non-fluent language usage) to 7 (comparable to native language usage), between the scores for ‘good’ (5) and ‘very good’ (6). None of the participants were familiar with another sign language, but all were to some extent fluent in English. The average self-rated proficiency for English was 4.67 (SD = 1.58).

Materials

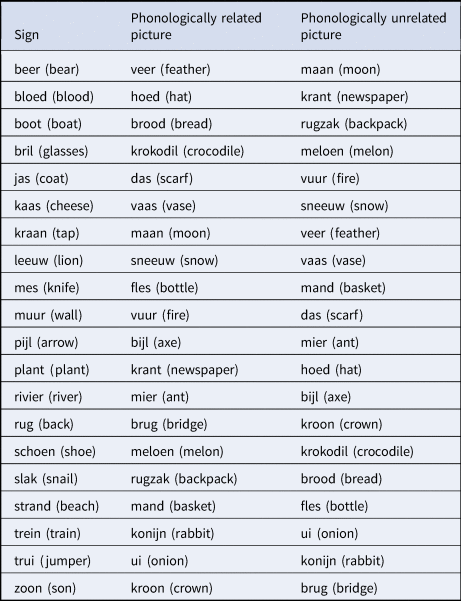

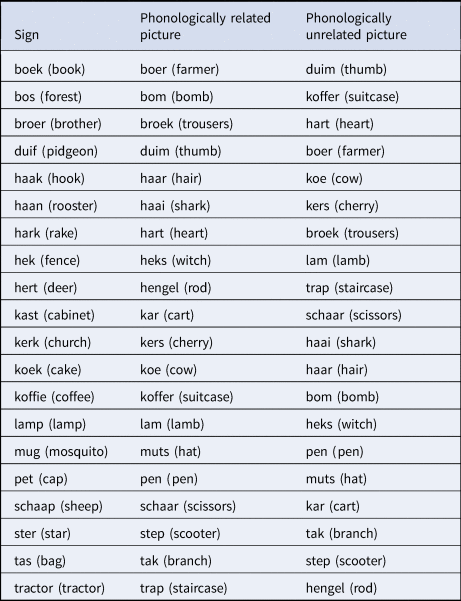

A total of 120 videos of lexical signs and 120 black and white line drawings were used to create 240 sign-picture pairs. The line drawings were selected from the picture database at the Max Planck Institute for Psycholinguistics and the picture database created by Snodgrass and Vanderwart (Reference Snodgrass and Vanderwart1980). The signs (10 x 5 cm) and drawings (5 x 5 cm) were simultaneously presented on the left and right side of a computer screen, respectively. The lexical signs were produced by a deaf native signer of NGT. Each sign was recorded twice within the same recording session, once with mouthing, thus mapping onto the (visual) phonological representations of spoken Dutch (used in Experiment 1), and once without mouthing (used in Experiment 2). The experimental items consisted of sign-picture pairs with either word-initial cohort overlap between the Dutch translation equivalents (e.g., the sign KERK [CHURCH]–picture of kers [cherry]), see Appendix A, or word-final rhyme overlap (e.g., the sign MUUR [WALL]–picture of vuur [fire]), see Appendix B. For pairs with cohort overlap, the initial 2-4 letters and accompanying sounds of the Dutch equivalent were shared, always containing at least one consonant (word onset) and vowel (nucleus). For pairs with rhyme overlap, the final 2-4 letters and accompanying sounds of the Dutch equivalent were shared, always containing at least one vowel (nucleus) and consonant (coda) (see Footnote 1).

Design

Eighty out of the 240 sign-picture pairs were divided across four critical experimental conditions, with 20 pairs in each of these four experimental conditions: cohort overlap-phonologically related (20 pairs), cohort unrelated (20 pairs), final rhyme overlap-phonologically related (20 pairs), final rhyme unrelated (20 pairs), see Table 1. There were 240 sign-picture pairs in total (80 critical pairs and 160 fillers, see below), half in which the sign and the picture referred to the same concept, requiring a ‘yes’ response, and half in which the sign and picture did not match, requiring a ‘no’ response. All 80 critical sign-picture pairs required a ‘no’ response (i.e., sign and picture did not match). The signs and pictures in the two phonologically-related experimental conditions (cohort and rhyme overlap) were recombined to create sign-picture pairs for the phonologically-unrelated experimental conditions. That is, each sign in the critical conditions was paired with two pictures, one that was phonologically related (e.g., KERK–kers [CHURCH–cherry]) and one that was phonologically unrelated (e.g., KERK–haai [CHURCH–shark]).

Table 1. Experimental conditions.

The remaining 160 sign-picture pairs were used as fillers (see Table 1). In 80 of these filler trials, 40 different sign-picture combinations were each presented twice, mirroring the repetition of items in the experimental conditions, but always requiring a ‘yes’ response (i.e., sign and picture matched). The remaining 80 filler trials comprised 40 sign-picture combinations that were presented once as a matching pair (‘yes’ response) and once recombined as a mismatching pair (‘no’ response). This was done to prevent participants from adopting the strategy of assuming that the second occurrence of a sign or picture always required the same response as the first occurrence.

To avoid repetition effects, the same signs or pictures were always separated by a minimum of 50 different items. Across the entire experiment, 120 trials elicited a no-response (80 trials across 4 experimental conditions and 40 filler trials) and 120 trials elicited a yes-response (all filler trials). None of the sign-picture pairs in the experiment overlapped in NGT phonology (i.e., manual features, such as the location or the orientation of the hands and aspects related to handshapes and movements), and their Dutch translation equivalents were matched for word length, frequency, and number of orthographical neighbors across conditions, using the CELEX database (Baayen, Piepenbrock & van Rijn, Reference Baayen, Piepenbrock and van Rijn1993).
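The trial counts and the spacing constraint described above can be checked with a short script. The following Python sketch is illustrative only: the condition labels, the function names, and the reading of "separated by a minimum of 50 different items" as at least 50 intervening trials are assumptions, not part of the original materials.

```python
# Trial counts as described in the Design section; labels are illustrative.
DESIGN = [
    # (trial type, number of trials, required response)
    ("cohort overlap, phonologically related", 20, "no"),
    ("cohort, unrelated", 20, "no"),
    ("final rhyme overlap, phonologically related", 20, "no"),
    ("final rhyme, unrelated", 20, "no"),
    ("filler, matching pair presented twice", 80, "yes"),
    ("filler, matching presentation", 40, "yes"),
    ("filler, recombined mismatching presentation", 40, "no"),
]


def tally_responses(design):
    """Sum the number of trials per required response."""
    totals = {"yes": 0, "no": 0}
    for _label, n_trials, response in design:
        totals[response] += n_trials
    return totals


def min_repeat_gap(labels):
    """Smallest number of intervening trials between two occurrences of the
    same label (e.g., the same sign), or None if no label repeats."""
    last_seen = {}
    smallest = None
    for position, label in enumerate(labels):
        if label in last_seen:
            gap = position - last_seen[label] - 1
            smallest = gap if smallest is None else min(smallest, gap)
        last_seen[label] = position
    return smallest


print(tally_responses(DESIGN))
```

Under this reading, a presentation order would satisfy the constraint if `min_repeat_gap` returns at least 50 when applied separately to the sequence of signs and to the sequence of pictures.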

Procedure

E-Prime version 2.0 (Psychology Software Tools, Inc.) was used to present the stimuli. An E-Prime response box measured participants' response latency. The left response button was marked with ‘different’ (‘no’ response) and the right response button with ‘same’ (‘yes’ response). Response latency was measured from the moment the signs and pictures appeared on the screen until participants pressed one of the buttons.

Participants were individually tested in a quiet room. They were seated 50 cm from the monitor and used their left and right index fingers to press the two response buttons. Participants were told to indicate whether a sign and a picture referred to the same concept or not by pressing the corresponding response button. The experiment consisted of six blocks of 40 trials preceded by three practice trials, with a short 30-second break between blocks. Trial presentation within each block was randomized. The experiment took between 45 and 50 minutes.

Results

Accuracy scores approached ceiling and were therefore not further analyzed (cohort condition: M = 99% (SD = .11) for phonologically-related pairs and M = 99% (SD = .12) for phonologically-unrelated pairs; final rhyme condition: M = 100% (SD = .05) for phonologically-related pairs and M = 99% (SD = .08) for phonologically-unrelated pairs).

Analyses of the reaction time (RT) data were performed on the correct responses. Trials with RTs more than two standard deviations above or below the item mean RT or the participant mean RT were excluded from analysis. In total, 2% of the RT responses were excluded from the analysis. The data were analyzed with a GLM Repeated Measures ANOVA using IBM SPSS software as a within-subject 2 x 2 factorial design with reaction time of the correct responses as dependent variable. The two factors were Overlap (phonologically-related vs. phonologically-unrelated in Dutch) and Position (cohort overlap vs. final rhyme overlap).
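The outlier criterion can be illustrated with a minimal Python sketch. It is not the procedure actually run for the study: the data layout (one dictionary per correct trial with hypothetical keys `participant`, `item` and `rt`), the function name, and the choice to compute each standard deviation within the same participant or item from the untrimmed data are all assumptions.

```python
from collections import defaultdict
from statistics import mean, stdev


def trim_outliers(trials, n_sd=2.0):
    """Keep trials whose RT lies within n_sd standard deviations of both
    the participant mean RT and the item mean RT."""

    def bounds(key):
        # Group RTs by participant or by item and derive the cut-offs.
        groups = defaultdict(list)
        for trial in trials:
            groups[trial[key]].append(trial["rt"])
        limits = {}
        for label, rts in groups.items():
            if len(rts) < 2:
                # No SD can be estimated from a single trial: do not trim.
                limits[label] = (float("-inf"), float("inf"))
            else:
                centre, spread = mean(rts), stdev(rts)
                limits[label] = (centre - n_sd * spread, centre + n_sd * spread)
        return limits

    by_participant = bounds("participant")
    by_item = bounds("item")
    kept = []
    for trial in trials:
        low_p, high_p = by_participant[trial["participant"]]
        low_i, high_i = by_item[trial["item"]]
        if low_p <= trial["rt"] <= high_p and low_i <= trial["rt"] <= high_i:
            kept.append(trial)
    return kept
```

The proportion of excluded trials would then be `1 - len(kept) / len(trials)`.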

Mean RTs are presented in Figure 3. The main effect of Overlap was not significant, F(1,23) = .59, p = .45, ηp2 = .03. The main effect of Position was significant, F(1,23) = 4.95, p < .05, ηp2 = .18, and the interaction between Overlap and Position was also significant, F(1,23) = 9.72, p < .01, ηp2 = .30. To further explore this interaction, we conducted follow-up simple effects analyses for the cohort overlap and final rhyme overlap condition separately. In the cohort condition, the effect of phonological overlap did not reach significance, F(1,23) = 2.42, p = .13, ηp2 = .10, although Figure 3 suggests a trend towards faster responses for phonologically-related sign-picture pairs. In contrast, the effect of phonological overlap was significant in the final rhyme condition, F(1,23) = 8.07, p < .01, ηp2 = .26, indicating slower responses for phonologically-related sign-picture pairs.
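The effect sizes reported above follow from the F statistics and their degrees of freedom through the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The Python sketch below is illustrative only; the function name is hypothetical and it is not the SPSS procedure used for the analyses.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values taken from the Experiment 1 results, each with df = (1, 23).
reported_f = {
    "Overlap": 0.59,
    "Position": 4.95,
    "Overlap x Position": 9.72,
}
for effect, f_value in reported_f.items():
    print(effect, round(partial_eta_squared(f_value, 1, 23), 2))
```

Rounded to two decimals, these values should agree with the reported effect sizes of .03, .18 and .30.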

Fig. 3. Reaction times in Experiment 1 (with mouthings) for cohort and final rhyme (error bars depict the standard error of the mean). The blue bars represent the results for the sign-picture pairs with overlapping (underlying) phonology in the L1 (spoken Dutch). The red bars represent the results for the sign-picture pairs without any phonological overlap in the L1.

Discussion

The results from Experiment 1 show co-activation of spoken words during sign processing in hearing late learners of a sign language when signs were presented with mouthings. Specifically, participants were slower to decide that signs and pictures did not match when the Dutch translation equivalents overlapped in final rhyme. Interestingly, an asymmetric pattern was observed for items with word-initial (cohort) and word-final (rhyme) phonological overlap. While rhyme overlap yielded a significant interference effect, indicating cross-language activation, no significant effect of cohort overlap on response times was observed. We will postpone discussion of this difference between cohort and rhyme overlap until the General Discussion.

Experiment 2: Signs presented without mouthings

In Experiment 2, the same sign-picture pairings as in Experiment 1 were presented to a new group of hearing sign language interpreters, but now signs were presented without their corresponding mouthings.

Method

Participants

A newly recruited group of 24 sign language interpreters in training (all females; Mean age = 26.9, SD = 8.5) participated in Experiment 2. They were recruited from the same population as tested in Experiment 1, and all participants were in their final year of a four-year full-time sign language interpreter program at the Hogeschool Utrecht (HU University of Applied Sciences), The Netherlands. The native language of all participants was Dutch and they were all late learners of NGT. Their average self-rated NGT proficiency was 5.26 (SD = .69) and average self-rated English proficiency was 5.2 (SD = .69). None of the participants were familiar with another sign language.

Materials

The same sign-picture pairs were used as in Experiment 1, but the videos of signs without mouthings were used in this experiment. In all other aspects, the experimental design and procedure were identical to Experiment 1.

Results

As in Experiment 1, accuracy scores were near ceiling and therefore not further analyzed (cohort condition: M = 98% (SD = .13) for phonologically-related pairs and M = 98% (SD = .13) for phonologically-unrelated pairs; final rhyme condition: M = 99% (SD = .10) for phonologically-related pairs and M = 99% (SD = .12) for phonologically-unrelated pairs).

Using the same outlier removal procedures as in Experiment 1, 1% of the RT responses were excluded from the analysis. As in Experiment 1, the data were analyzed as a within-subjects 2 x 2 factorial design with Overlap (phonologically-related vs. phonologically-unrelated in Dutch) and Position (cohort overlap vs. rhyme overlap) as factors.
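In a 2 x 2 within-subjects design, every effect has one degree of freedom, so each F(1, n − 1) equals the squared one-sample t statistic of a per-participant contrast score. The Python sketch below illustrates this equivalence. It is not the SPSS analysis itself, and the function names and dictionary keys are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def f_from_contrast(scores):
    """F(1, n - 1) for a one-df within-subject effect: the squared
    one-sample t of the per-participant contrast scores."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / sqrt(n))
    return t * t, n - 1


def rm_anova_2x2(cell_means):
    """cell_means: one dict per participant holding the four condition mean RTs
    under the keys 'cohort_rel', 'cohort_unrel', 'rhyme_rel', 'rhyme_unrel'."""
    overlap, position, interaction = [], [], []
    for c in cell_means:
        # Related minus unrelated, summed over Position.
        overlap.append((c["cohort_rel"] + c["rhyme_rel"])
                       - (c["cohort_unrel"] + c["rhyme_unrel"]))
        # Cohort minus rhyme, summed over Overlap.
        position.append((c["cohort_rel"] + c["cohort_unrel"])
                        - (c["rhyme_rel"] + c["rhyme_unrel"]))
        # Difference between the two simple overlap effects.
        interaction.append((c["cohort_rel"] - c["cohort_unrel"])
                           - (c["rhyme_rel"] - c["rhyme_unrel"]))
    return {
        "Overlap": f_from_contrast(overlap),
        "Position": f_from_contrast(position),
        "Overlap x Position": f_from_contrast(interaction),
    }
```

With 24 participants the error degrees of freedom are 23, matching the F(1,23) tests reported here. The sketch assumes the contrast scores are not all identical, since a standard deviation of zero would make the ratio undefined.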

Mean RTs are presented in Figure 4. Similar to Experiment 1, the main effect of Overlap was not significant, F(1,23) = 1.69, p = .21, ηp2 = .07, but there was a significant main effect of Position, F(1,23) = 10.37, p < .01, ηp2 = .31, indicating faster responses overall in the rhyme condition than cohort condition. In contrast to Experiment 1, however, the interaction between Overlap and Position was not significant in Experiment 2, F(1,23) = 1.19, p = .29, ηp2 = .05.

Fig. 4. Reaction times in Experiment 2 (without mouthings) for cohort and final rhyme (error bars depict the standard error of the mean). The blue bars represent the results for the sign-picture pairs with overlapping (underlying) phonology in the L1 (spoken Dutch). The red bars represent the results for the sign-picture pairs without any phonological overlap in the L1.

In order to compare the results of Experiment 2 with those of Experiment 1, we conducted simple effects analyses for the cohort overlap and rhyme overlap condition separately. In the cohort condition, there was no significant effect of phonological overlap, F(1,23) < 1. In contrast, in the rhyme condition, the effect of phonological overlap was significant, F(1,23) = 5.58, p = .03, ηp2 = .20, indicating slower responses for phonologically-related sign-picture pairs.

Discussion

Although neither the main effect of Overlap nor the interaction between Overlap and Position was statistically significant in Experiment 2, simple effects analyses showed a pattern similar to that of Experiment 1: no effect of cohort overlap but significant interference for rhyme overlap. This result suggests that co-activation of the Dutch translation equivalents of NGT signs is unlikely to be driven by shared phonological patterns between mouthings and phonological representations of spoken words.

Cross-experiment analysis

To directly test whether the mouthing manipulation yielded different result patterns in Experiments 1 and 2, we conducted an overall analysis on the data from both experiments, treating Experiment as a between-subjects factor. The main effect of Experiment was not significant, F(1,46) < 1, nor were any of the interactions with Experiment (Position x Experiment: F(1,46) < 1, Overlap x Experiment: F(1,46) < 1, Position x Overlap x Experiment: F(1,46) = 2.12, p = .15, ηp2 = .04). The main effect of Overlap was also not significant, F(1,46) = 2.22, p = .14, ηp2 = .05. In contrast, the main effect of Position was significant, F(1,46) = 14.97, p < .01, ηp2 = .25, as was the interaction between Overlap and Position, F(1,46) = 8.93, p < .01, ηp2 = .16, indicating slower responses for sign-picture pairs with final rhyme overlap in Dutch, but no significant effect of cohort overlap. This overall analysis confirms the outcome of the separate analyses for each experiment, and further supports the conclusion of co-activation for sign-picture pairs with final rhyme overlap in Dutch, but not for sign-picture pairs with cohort overlap. Importantly, this pattern holds for signs presented both with and without mouthings.
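With two groups, the interaction between a one-df within-subject contrast and Experiment is equivalent to a pooled-variance independent-samples t-test on the per-participant contrast scores, with F(1, N − 2) = t². The Python sketch below illustrates this equivalence only. The function name is hypothetical and it does not reproduce the original SPSS analysis.

```python
from math import sqrt
from statistics import mean, variance


def f_contrast_by_group(scores_a, scores_b):
    """F(1, N - 2) for the interaction of a within-subject contrast with a
    two-level between-subjects factor (squared pooled-variance t)."""
    n_a, n_b = len(scores_a), len(scores_b)
    df_error = n_a + n_b - 2
    # Pooled variance of the contrast scores across the two groups.
    pooled = ((n_a - 1) * variance(scores_a)
              + (n_b - 1) * variance(scores_b)) / df_error
    t = (mean(scores_a) - mean(scores_b)) / sqrt(pooled * (1 / n_a + 1 / n_b))
    return t * t, df_error
```

Applied to the Overlap x Position contrast scores of the 24 participants in each experiment, this yields error degrees of freedom of 46, as in the F(1,46) tests reported above.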

General discussion

The aim of the present study was twofold: (1) to investigate the co-activation of L1 spoken words during the processing of signs in adult hearing late signers, and (2) to establish whether language co-activation is mediated by mouthings that are often co-produced with signed utterances in NGT and that map onto (visual) phonological representations of the spoken language. Hearing adult learners of NGT were tested in two sign-picture verification experiments in which a subset of the signs had Dutch translation equivalents that overlapped in phonology with the picture names at the beginning (cohort overlap) or end (rhyme overlap) of the word. The signs were either presented with mouthings (Experiment 1) or without mouthings (Experiment 2). In both experiments, we found slower responses for sign-picture pairs with final rhyme overlap in spoken Dutch (e.g., MUUR–vuur [WALL–fire]) relative to phonologically unrelated controls, but no significant effect for sign-picture pairs with cohort overlap in Dutch (e.g., KERK–kers [CHURCH–cherry]).

Our findings extend previous studies with deaf and hearing signers that found co-activation of signs during the processing of written or spoken words (Giezen et al., Reference Giezen, Blumenfeld, Shook, Marian and Emmorey2015; Meade et al., Reference Meade, Midgley, Sevcikova Sehyr, Holcomb and Emmorey2017; Morford et al., Reference Morford, Wilkinson, Villwock, Piñar and Kroll2011, Reference Morford, Kroll, Piñar and Wilkinson2014, Reference Morford, Occhino-Kehoe, Piñar, Wilkinson and Kroll2017; Ormel, Reference Ormel2008; Ormel et al., Reference Ormel, Hermans, Knoors and Verhoeven2012; Shook & Marian, Reference Shook and Marian2012; Villameriel et al., Reference Villameriel, Dias, Costello and Carreiras2016) by showing that cross-language activation also occurs in the reverse direction, i.e., the co-activation of the spoken language during the processing of signs. The present results are consistent with the findings in two recent studies where ERP recordings revealed co-activation of words during sign processing (Hosemann et al., Reference Hosemann, Mani, Herrmann, Steinbach and Altvater-Mackensen2020; Lee et al., Reference Lee, Meade, Midgley, Holcomb and Emmorey2019), although these studies did not investigate the effect of mouthings. The combined findings strongly suggest that cross-language activation of spoken and signed languages by deaf signers and hearing bimodal bilinguals is bidirectional.

It should be noted that Lee et al. (Reference Lee, Meade, Midgley, Holcomb and Emmorey2019) collected electrophysiological as well as behavioral data, but only found evidence for co-activation in the ERP responses. One possible explanation is that the different experimental paradigms used in these studies vary in their sensitivity to detect co-activation effects. For example, experiments with sign-picture stimuli as used in the present study may be more likely to elicit co-activation of words than experiments with only signed stimuli (see also Lee et al., Reference Lee, Meade, Midgley, Holcomb and Emmorey2019, for discussion).

The second aim of our study was to elucidate the contribution of mouthings to the co-activation of spoken language during processing of sign language. Because spoken and signed languages have no (direct) cross-language phonological connections (shared phonemes or graphemes), cross-language activation has been argued to occur through lexical connections between the two languages and/or through shared semantic representations (Morford et al., Reference Morford, Occhino-Kehoe, Piñar, Wilkinson and Kroll2017; Ormel, Reference Ormel2008; Shook & Marian, Reference Shook and Marian2013). Alternatively, modality-specific connections between spoken and signed languages may account for language co-activation across the two language modalities: for example, signs with fingerspelled letters or signs with mouthings that share orthographic (i.e., fingerspelling) or (visual) phonological (i.e., mouthings) features with the spoken language (e.g., Emmorey et al., Reference Emmorey, Giezen and Gollan2016; Kubuş et al., Reference Kubuş, Villwock, Morford and Rathmann2015). A recent study by Morford et al. (Reference Morford, Occhino, Zirnstein, Kroll, Wilkinson and Piñar2019) found that cross-language activation was not affected by the presence of orthographic overlap between words and signs through initialization (signs containing fingerspelled letters overlapping with the onset of spoken translation equivalents).

The present study investigated the possibility that mouthings co-occurring with signs mediate cross-language activation of spoken words during sign processing (the second variant of the alternative explanation outlined above). We addressed this issue by presenting signs in a sign-picture verification task with mouthings in Experiment 1 and without mouthings in Experiment 2. The results indicate that mouthings, similar to initialization, do not affect the co-activation of representations in the non-target spoken language during sign processing for hearing late signers. This finding is in line with the model presented in Figure 2, in which connections between signs and spoken language representations at the lexical and/or semantic level allow for cross-language activation (cf. Morford et al., 2017; Ormel, 2008; Shook & Marian, 2012).

Although the presence of mouthings did not affect the co-activation of words in the advanced late learners with high fluency levels tested in the present study, it remains to be seen whether this finding generalizes to beginning sign language learners or native signers, and to what extent language co-activation is impacted by relative proficiency in the sign language and spoken language. While previous studies have shown co-activation in both native and non-native signers, and deaf as well as hearing signers, detailed investigations of the impact of fluency on language co-activation in either direction in signers have not been carried out yet. As discussed in Chen, Bobb, Hoshino and Marian (2017), language co-activation in bilinguals is sensitive to fine-grained differences in the bilingual language experience, such as degree of language exposure to, and language fluency in, each of the languages. Furthermore, as suggested by Bank, Crasborn, and van Hout (2015), mouthings in isolated signs, such as in the present study, may not behave the same way as mouthings in discourse.

Another finding in both experiments in the present study is that cross-language activation was found for sign-picture pairs with final rhyme overlap only, and not for sign-picture pairs with cohort overlap. A possible explanation is that cohort and rhyme effects reflect differential contributions of pre-lexical and lexical influences in cross-language activation. Specifically, rhyme effects might reflect lexical co-activation of competitors, while cohort effects might primarily reflect bottom-up phonological co-activation of competitors (cf. Desroches, Newman & Joanisse, 2009, on (pre-)lexical competition effects in monolingual word recognition). Most cross-language activation studies with unimodal bilinguals investigated cohort competition effects, and therefore may have primarily measured phonological competition between the two languages. If cross-language activation in bimodal bilinguals relies on connections at the lexical and/or semantic level between the two languages, then this could explain why only rhyme competitors, but not cohort competitors, yielded co-activation effects in the present study. Furthermore, it is consistent with the finding that mouthings, which also reflect a (visual) phonological connection between spoken and signed languages, did not modulate co-activation effects in the present study.

In addition to contributing to our understanding of bimodal bilingual language processing, the current findings add to general theories of bilingual language processing. In particular, the findings provide further empirical evidence on three questions: whether phonological overlap between languages is required for language co-activation (NO); whether co-activation in bimodal bilinguals extends to co-activation of spoken phonology during sign processing (YES); and whether modality-specific language features that convey a potential link to the phonologically non-overlapping other language (tested here with mouthings, i.e., the visual phonological form of a spoken language accompanying a sign) provide a bridging function for cross-language activation (NO).

In conclusion, the present study extends previous studies of co-activation of signs during spoken word processing in deaf and hearing bimodal bilinguals by demonstrating that hearing late signers also co-activate spoken words during sign processing, thereby indicating that the co-activation of signs and spoken words is bidirectional. Furthermore, this form of co-activation is not mediated by mouthings, providing further evidence for activation of lexical representations in the non-target language in the absence of phonological overlap between languages in the input. The impact of the degree of language fluency in the respective languages and the effect of linguistic experiences as a deaf or a hearing signer require further investigation in order to fully grasp the mechanisms driving bimodal bilingual language comprehension.

Competing interests

The authors declare none.

Acknowledgements

The authors thank Merel van Zuilen for her help in developing the stimuli, Daan Hermans for his valuable contribution to many aspects of the study, and Joyce van der Loop, Elselieke Hermes, Maaike Korpershoek, Maud Graste, and Marli van Sark for their valuable contributions to parts of the study.

Appendices

Appendix A. Stimuli list cohort overlap

Appendix B. Stimuli list final rhyme overlap